by Martina Mara (Johannes Kepler University Linz)

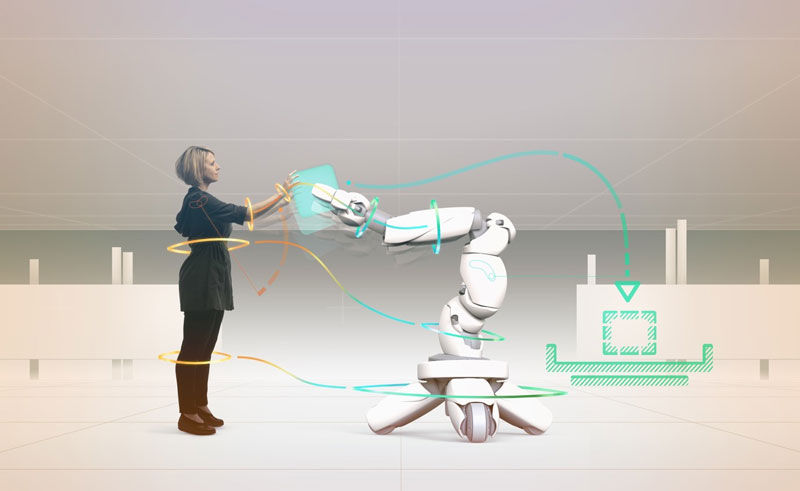

As close collaborations between humans and robots increase, the latter must be programmed to be reliable, predictable and thus trustworthy for people. To make this a reality, interdisciplinary research and development is needed. The Austrian project CoBot Studio is a research initiative in which experts from different fields work together towards the common goal of human-centred collaborative robots.

Trust is a fundamental building block of relationships. This is true not only in personal life but also at work. People who cannot trust their co-workers are likely to feel insecure, work less efficiently and experience less job satisfaction. A look into the emerging field of collaborative robotics reveals that, at least in some work environments, these co-workers will increasingly be robots.

In the interdisciplinary research project CoBot Studio, a virtual simulation environment for trustworthy human-robot collaboration is being developed.

Unlike conventional industrial robots, collaborative robots (CoBots for short) are light, safe and intelligent enough to operate in close physical proximity to people. CoBots will increasingly be used in production halls, warehouses and healthcare, working side by side with employees on tasks such as assembling car seats, inspecting packaging and preparing medication. For safety reasons, industrial robots have typically been placed behind barriers or in cages, minimising their interactions with humans. The CoBot concept changes all this: the formerly isolated industrial robot becomes a social machine that shares its space with humans and therefore needs to understand people’s states and goals. CoBots must consequently be programmed in such a way that their co-workers can trust them.

But what does it actually mean to trust someone (or something)? Psychologists have long been concerned with this question. According to the American Psychological Association, the largest scientific organisation of psychologists worldwide, trust refers to the confidence that one party has in the reliability of another party. More specifically, it is described as the degree to which one party feels that they can depend on another party to do what they say they will do. A key factor in attributing trustworthiness to a person (or a robot) is therefore that person’s (or robot’s) predictability. Being able to anticipate whether an interaction partner will act in accordance with one’s own expectations is considered essential not only in the scientific literature on cognitive trust in interpersonal relations [1], but also in the literature on trust in automation [2].

Applied to human-robot collaboration, this means that just as the robot must be able to identify the states and intentions of its human partner, the human co-worker should be able to easily understand and predict the robot’s states and planned actions [3]. This becomes especially important in situations that psychologists describe as trust-relevant, i.e., situations that involve vulnerability and risk, whether social, financial, personal or organisational in nature. Trust is also thought of as a process that develops over time. As the number of (positive) experiences with an interaction partner grows, for example because an employee has worked successfully with a particular robot on similar tasks for a long time, the perceived predictability, and with it the perceived trustworthiness, should increase accordingly. However, one vision associated with CoBots is that even non-experts or employees without special training should be able to work with them. Trustworthy CoBots must therefore signal their intentions in a way that is understandable even to people with little or no previous experience. For instance, when a CoBot is about to actively intervene in a work process, its actions, such as the direction in which it will move and the object it will grip, should be intuitively apparent to nearby humans.

But which signals and interfaces best indicate where a robot is about to move or what it is going to do next? This question remains largely open. Developing easily understandable intention signals for CoBots and empirically evaluating their assumed association with trust and acceptance from the human co-worker’s perspective require research that draws on many different disciplines and their complementary views on the topic. CoBot Studio is an exemplary collaborative research endeavour that brings together experts from fields including robotics, artificial intelligence, psychology, human-computer interaction, media arts, virtual reality and game design around a shared vision of “Mutual Understanding in Human-Robot Teams”. Funded by the Austrian Research Promotion Agency FFG and running from 2019 to 2022, the project focuses on developing an immersive extended reality environment in which collaborative tasks with mobile industrial robots are simulated, so that the effects of different light-based and motion-based intention signals conveyed by these virtual robots can be studied under controlled conditions.

In CoBot Studio, study participants wearing state-of-the-art VR headsets play interactive mini-games in which they must complete tasks such as organising small objects together with a CoBot or guessing the target location of a robot moving through space. During the games, the robot’s intention signals (e.g., LED signals or nonverbal communication cues) are varied, and their respective effects on comprehensibility, trust, perceived safety and collaborative task success are evaluated. After several iterations of these virtual CoBot games, the findings on the effectiveness of different intention signals will be tested in a game with physically embodied CoBots. Using this approach, the interdisciplinary CoBot Studio team intends to create practice-oriented guidelines for the design of predictable and thus trustworthy collaborative robots.

Links:

http://www.cobotstudio.at/

https://www.jku.at/lit-robopsychology-lab/

References:

[1] J. K. Rempel, J. G. Holmes, M. P. Zanna: “Trust in close relationships”, Journal of Personality and Social Psychology, 49, 95-112, 1985.

[2] J. D. Lee, K. A. See: “Trust in automation: Designing for appropriate reliance”, Human Factors, 46, 50-80, 2004.

[3] A. Sciutti, et al.: “Humanizing human-robot interaction: On the importance of mutual understanding”, IEEE Technology and Society Magazine, 37, 22-29, 2018.

Please contact:

Martina Mara, LIT Robopsychology Lab, Johannes Kepler University Linz, Austria