by Maria Teresa Paratore (ISTI-CNR) and Barbara Leporini (ISTI-CNR)

The aim of this ongoing study is to investigate how the haptic channel can be effectively exploited in a mobile app for visually impaired users to support the preliminary exploration of a complex indoor environment, such as a shopping mall.

Navigation apps have proven to be effective assistive solutions for people with visual impairments, helping them achieve greater autonomy and social inclusion [1]. They can provide real-time information about the user's current position in a physical environment, route planning, and accessibility warnings. A mobile app can also help users build a cognitive map (i.e. a mental representation) of a spatial environment before physically accessing it. An effective cognitive map allows a person to localise and orient themselves in space in relation to landmarks, and to plan a route to a given point in the environment [2]. For a visually impaired person, this is particularly useful before visiting a complex, unfamiliar or rarely visited environment [1].

The goal of our study is to investigate the potential of vibration patterns to speed up the learning of a cognitive map. Our idea is to use the haptic channel to convey not only spatial and directional cues, but also an overview of the functional areas of the environment, i.e. its Points of Interest (POIs). Almost every public building nowadays provides visitors with navigation aids (paper maps, digital signage, websites or mobile apps); however, these aids are generally not accessible to visually impaired users. Below, we describe an Android mobile application that we designed and developed for testing purposes, with the help of two experienced visually impaired users.

The Test Application

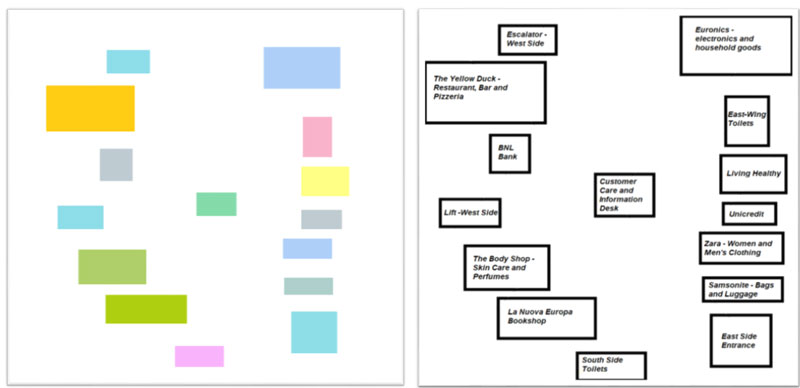

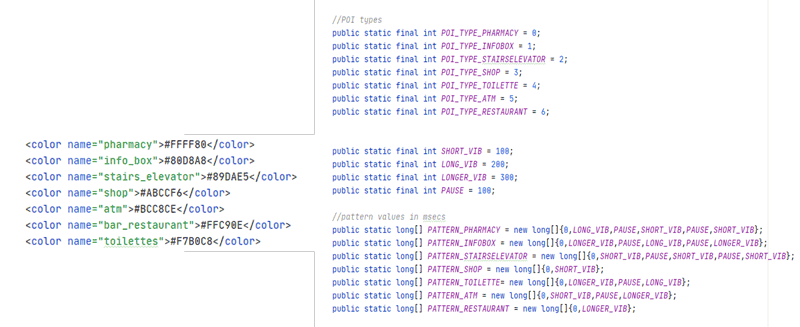

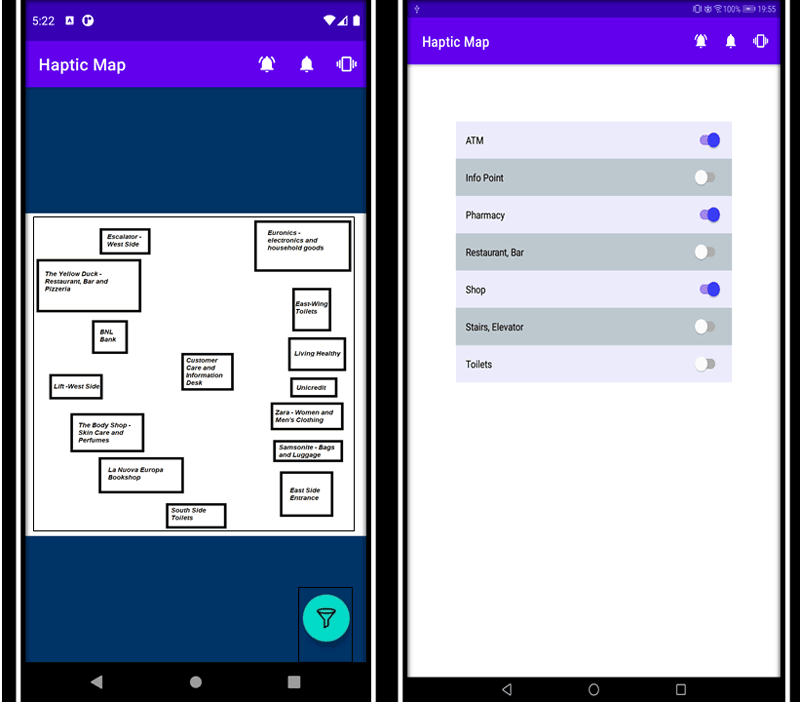

Our test application provides users with a simple audio-vibration map. Seven functional categories typical of a shopping mall were identified, and each category was associated with a different vibration pattern. The map is composed of two layers: a visible one and an invisible one, which is responsible for the haptic and audio rendering. The hidden layer consists of a set of coloured areas, each corresponding to a POI. RGB (red, green, blue) colour encoding was exploited to identify each POI: a predefined pair of red and green levels was associated with each functional category in the building, while the blue component was used to identify each individual POI. While the user explores the touchscreen with their finger, the app checks the colour of the hidden layer at the touched coordinates. Whenever a pair of red and green components corresponding to a POI category is detected, the matching vibration pattern is triggered; if the user then lifts their finger, the blue component is used to retrieve the POI's descriptive label, which is announced through the text-to-speech (TTS) engine.

Vibration patterns were designed to make the POI categories as distinguishable as possible while keeping intrusiveness low. Since there was concern that the cognitive load might become too heavy in certain conditions or for certain categories of users, such as the elderly, a “filter by category” function was introduced.
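To make the colour encoding more concrete, here is a minimal Java sketch of the hidden-layer lookup. It assumes the hidden layer is available as ARGB pixel values (on Android, for example, these could be read with `Bitmap.getPixel(x, y)` on the hidden bitmap after mapping touch coordinates to bitmap coordinates). The class name `HiddenLayerDecoder`, its methods and the way colour components are packed into lookup keys are hypothetical illustrative choices, not the app's actual code.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/**
 * Hypothetical sketch of the hidden-layer lookup: a pixel's (red, green) pair
 * selects a POI category, and its full RGB value (i.e. including blue)
 * selects the individual POI. All names and values are illustrative.
 */
final class HiddenLayerDecoder {

    // Category key packs red and green into one int: (red << 8) | green.
    private final Map<Integer, String> categories = new HashMap<>();
    // POI key packs all three components: (red << 16) | (green << 8) | blue.
    private final Map<Integer, String> poiLabels = new HashMap<>();

    void addCategory(int red, int green, String category) {
        categories.put((red << 8) | green, category);
    }

    void addPoi(int red, int green, int blue, String label) {
        poiLabels.put((red << 16) | (green << 8) | blue, label);
    }

    // Same bit layout as an Android ARGB colour int.
    static int red(int argb)   { return (argb >> 16) & 0xFF; }
    static int green(int argb) { return (argb >> 8) & 0xFF; }
    static int blue(int argb)  { return argb & 0xFF; }

    /** Category under the finger (drives the vibration), or empty if none. */
    Optional<String> categoryAt(int argb) {
        return Optional.ofNullable(categories.get((red(argb) << 8) | green(argb)));
    }

    /** Descriptive label of the POI (spoken on finger lift), or empty if none. */
    Optional<String> labelAt(int argb) {
        // Dropping the alpha byte leaves exactly (red << 16) | (green << 8) | blue.
        return Optional.ofNullable(poiLabels.get(argb & 0xFFFFFF));
    }
}
```

For instance, after registering a category with red 200 and green 40 and a POI with blue 3 in that category, a pixel with those three components resolves both to the category (which would select the vibration pattern) and to the POI label (which would be passed to the TTS engine on finger lift).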

Figure 1: The coloured image used to recognise the different areas on the map, and the visible version of the map.

Figure 2: Colour encoding adopted to identify the different categories of POIs, and the associated vibration patterns as encoded in the Android/Java formalism.
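As an illustration of that formalism, Android represents a simple on/off vibration pattern as a `long[]` of durations in milliseconds: an initial "off" delay, then alternating "on" and "off" segments. The sketch below uses that representation; the category names other than shops, entrances and stairs, and all the durations, are hypothetical placeholders rather than the patterns shown in Figure 2. The two helpers compute the total playback time and the time the motor is actually running, which could serve as rough indicators of a pattern's length and intrusiveness.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical vibration patterns in Android's long[] timing format:
 * index 0 is the initial "off" delay, then "on" and "off" segments alternate
 * (all values in milliseconds). The durations are illustrative only.
 */
final class VibrationPatterns {

    static final Map<String, long[]> BY_CATEGORY = new LinkedHashMap<>();

    static {
        BY_CATEGORY.put("Shops", new long[] {0, 100});                 // one short pulse
        BY_CATEGORY.put("Entrances", new long[] {0, 400});             // one long pulse
        BY_CATEGORY.put("Stairs", new long[] {0, 100, 100, 100});      // two short pulses
        BY_CATEGORY.put("Restaurants", new long[] {0, 400, 150, 100}); // long, then short
    }

    private VibrationPatterns() {}

    /** Total playback time: sum of every off and on segment. */
    static long durationMs(long[] pattern) {
        long total = 0;
        for (long segment : pattern) {
            total += segment;
        }
        return total;
    }

    /** Time the motor is running: the "on" segments at odd indices. */
    static long onTimeMs(long[] pattern) {
        long total = 0;
        for (int i = 1; i < pattern.length; i += 2) {
            total += pattern[i];
        }
        return total;
    }
}
```

On a device running API level 26 or later, such an array could be played with `vibrator.vibrate(VibrationEffect.createWaveform(pattern, -1))`, where `-1` disables repetition.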

Experimental Results

The app offers three alternative feedback modalities: audio only, vibration only, and audio plus vibration. In the trials, users were asked to build a cognitive map of a shopping mall in each of the three modalities. We found that, when only haptic feedback was enabled, users were able to get an idea of the arrangement of the POIs within the space and had no difficulty recalling the location of specific POIs, or the total number of shops, entrances or stairs. They also successfully accomplished the task of finding a given shop on the map. Results were worse when exploration was supported only by auditory feedback. Using both the auditory and the haptic channels to announce the POI category was perceived as overwhelming, although this modality was appreciated during a training phase, when users had to learn the correspondences between vibration patterns and POI categories. Occasional problems with the synchronisation of the TTS announcements were reported. We are confident that these issues can be solved in the next phase of our study, through better integration with Android's accessibility service.
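The three modalities, combined with the touch behaviour and the “filter by category” function described earlier, can be summarised as a small event-driven controller. This is a hypothetical Java sketch, not the app's code: `Modality`, `FeedbackOutput` and `ExplorationController` are illustrative names, and we assume that the category is spoken whenever audio is enabled, that the POI label is spoken on finger lift in every modality, and that feedback is repeated only when the finger enters a different category of area (so, in this simplified version, adjacent POIs of the same category do not re-trigger it).

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

/** The three feedback modalities compared in the trials. */
enum Modality { AUDIO_ONLY, VIBRATION_ONLY, AUDIO_AND_VIBRATION }

/** Hypothetical output hooks; on Android they might wrap Vibrator and TextToSpeech. */
interface FeedbackOutput {
    void vibrate(String category); // play the category's vibration pattern
    void speak(String text);       // pass text to the TTS engine
}

/** Hypothetical touch-exploration logic driven by decoded hidden-layer colours. */
final class ExplorationController {

    private final Modality modality;
    private final FeedbackOutput out;
    private final Set<String> hiddenCategories = new HashSet<>(); // "filter by category"
    private String currentCategory; // category under the finger, or null

    ExplorationController(Modality modality, FeedbackOutput out) {
        this.modality = modality;
        this.out = out;
    }

    /** Exclude a category from feedback, e.g. to reduce cognitive load. */
    void hideCategory(String category) {
        hiddenCategories.add(category);
    }

    /** Called on each finger move with the decoded category (null = no POI). */
    void onMove(String category) {
        if (category != null && hiddenCategories.contains(category)) {
            category = null; // filtered areas behave like empty space
        }
        if (Objects.equals(category, currentCategory)) {
            return; // still inside the same kind of area: do not repeat feedback
        }
        currentCategory = category;
        if (category == null) {
            return;
        }
        if (modality != Modality.AUDIO_ONLY) {
            out.vibrate(category);
        }
        if (modality != Modality.VIBRATION_ONLY) {
            out.speak(category);
        }
    }

    /** Called when the finger is lifted, with the label decoded from the blue component. */
    void onLift(String poiLabel) {
        if (currentCategory != null && poiLabel != null) {
            out.speak(poiLabel);
        }
        currentCategory = null;
    }
}
```

Keeping the outputs behind an interface makes the modality logic easy to exercise without a device: a recording implementation of `FeedbackOutput` can check which vibrations and announcements each modality would produce.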

Future Work

Our aim is to integrate the described approach into traditional maps provided by services such as Google Maps [L1] and OpenStreetMap [L2]. To achieve this goal, further ad hoc trials will be carried out, focusing on specific aspects such as the maximum number of patterns that can be used at the same time and the most effective configuration of pauses and vibrations, including how these depend on users' demographic characteristics.

Links:

[L1] https://developers.google.com/maps?hl=en

[L2] https://www.openstreetmap.org/about

References:

[1] A. Khan, S. Khusro: “An insight into smartphone-based assistive solutions for visually impaired and blind people: issues, challenges and opportunities”, Universal Access in the Information Society, pp. 1-34, 2020. https://doi.org/10.1007/s10209-020-00733-8

[2] R.A. Epstein, et al.: “The cognitive map in humans: spatial navigation and beyond”, Nature Neuroscience, vol. 20, pp. 1504-1513, 2017. https://doi.org/10.1038/nn.4656

Please contact:

Barbara Leporini, ISTI-CNR, Italy

Maria Teresa Paratore, ISTI-CNR, Italy