by Emanuela Tangari and Carmela Occhipinti (CyberEthics Lab.)

The use of Artificial Intelligence can be a vital resource for the development of precision medicine. Nonetheless, it is crucial to conduct a gender analysis of this technology in order to make it more inclusive and democratic.

In the field of assistive technologies, an interesting project financed by the European Union (EU) under the Horizon 2020 (H2020) programme started two years ago. It aims to support the follow-up of cancer survivors and to improve the diagnostic decisions of the clinicians who treat them, with the prospect of extending the approach to other fields of application. The PERSIST project (Patients-centred Survivorship care plan after Cancer treatments based on Big Data and Artificial Intelligence technologies) [L1] combines Artificial Intelligence (AI), Big Data and the detection of circulating tumour cells (liquid biopsy) to implement and refine a real-time, remote patient monitoring system and, at the same time, to improve medical analyses and decisions regarding the prognosis and treatment of patients, thereby supporting so-called “precision medicine”. The 13 partners (hospitals, companies and technology centres from 10 countries) and the 160 patient volunteers involved cooperate in developing this assistive technology, which uses a smartwatch connected to a platform to monitor and record patient-specific biomedical data (heart rate, blood pressure, sleep quality, etc.) and indicators of the patient’s quality of life.

Precision medicine, which makes use of AI, offers a way to improve both prevention and patient follow-up, e.g., by promoting faster recovery and more effective reintegration into the social, work, and relational spheres. In PERSIST, in addition to AI and Big Data, a system is being developed based on an algorithm that allows circulating tumour cells to be measured constantly, in order to detect possible metastases at an early stage. This type of monitoring, like the other processes traditionally performed by technicians, requires long analysis times; the technologies currently under development can therefore also help to significantly reduce the time needed for diagnosis and treatment, making therapies and discoveries more effective.

On the one hand, AI can rely on rules coded by experts, who draw on their own knowledge; on the other hand, machine learning algorithms build models from labelled classification cases. The common goal is an ever-increasing predictive capacity (this also holds for Deep Learning), which depends on continually refining the “labels” on which the data are structured. Machine learning can thus be seen as a continuous process with a great capacity for identifying patterns that were not included in the training phase.

The challenge for such assistive and medical technologies, however, is to ensure that data-driven research models go hand in hand with good clinical practice, and that important ethical standards are considered and maintained in them. Among these, one of the most decisive issues – also because it shapes the very structure of the learning models on which the algorithm relies – is certainly that of gender analysis.

A form of gender discrimination precedes the results of medical research. Many studies on this subject [1] point out that certain factors are not adequately taken into account even at the screening stage: for example, the psychological distress of women linked to their sense of family responsibility (which affects the decisions they make about their own care plan), the under-representation of women in medical research [2], or the difficulty that particular groups of people have in obtaining treatment for geographical or economic reasons. These forms of discrimination do not operate in an immediately identifiable domain; they are often underestimated because of unconscious patterns and a cultural (including clinical) tradition that increases inequality between social groups.

It is crucial that gender analysis is not conceived merely in terms of male–female difference. As noted above, gender also encompasses socio-economic factors; hence the need for an ‘intersectional’ analysis capable of showing how gender interacts with other social parameters, in order to raise awareness of the prejudices (and thus the actions) that drive analyses and studies.
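To make the idea of an intersectional analysis concrete, the following is a minimal sketch, not a description of how PERSIST evaluates its models. It assumes a set of hypothetical, hand-made records (the field names `gender`, `income`, `predicted` and `actual` are illustrative only) and compares a classifier's error rate by gender alone with its error rate by gender combined with a socio-economic attribute.

```python
from collections import defaultdict


def error_rate_by_group(records, attributes):
    """Return {group_key: (n_cases, error_rate)} for each combination
    of the given attributes found in the records."""
    counts = defaultdict(lambda: [0, 0])  # group -> [n_cases, n_errors]
    for rec in records:
        key = tuple(rec[a] for a in attributes)
        counts[key][0] += 1
        if rec["predicted"] != rec["actual"]:
            counts[key][1] += 1
    return {key: (n, errors / n) for key, (n, errors) in counts.items()}


# Hypothetical records for illustration only (not project data).
records = [
    {"gender": "F", "income": "low", "predicted": 0, "actual": 1},
    {"gender": "F", "income": "low", "predicted": 1, "actual": 1},
    {"gender": "F", "income": "high", "predicted": 1, "actual": 1},
    {"gender": "F", "income": "high", "predicted": 0, "actual": 0},
    {"gender": "M", "income": "low", "predicted": 1, "actual": 1},
    {"gender": "M", "income": "low", "predicted": 0, "actual": 0},
    {"gender": "M", "income": "high", "predicted": 0, "actual": 0},
    {"gender": "M", "income": "high", "predicted": 1, "actual": 1},
]

# Single-axis view: error rate per gender.
by_gender = error_rate_by_group(records, ["gender"])

# Intersectional view: error rate per (gender, income) combination.
by_intersection = error_rate_by_group(records, ["gender", "income"])

for group, (n, rate) in sorted(by_intersection.items()):
    print(group, f"cases={n}", f"error_rate={rate:.2f}")
```

In this toy data, the single-axis view shows a 25% error rate for women, while the intersectional view shows that the errors are concentrated among low-income women (50%), a pattern that an analysis by gender alone would dilute.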

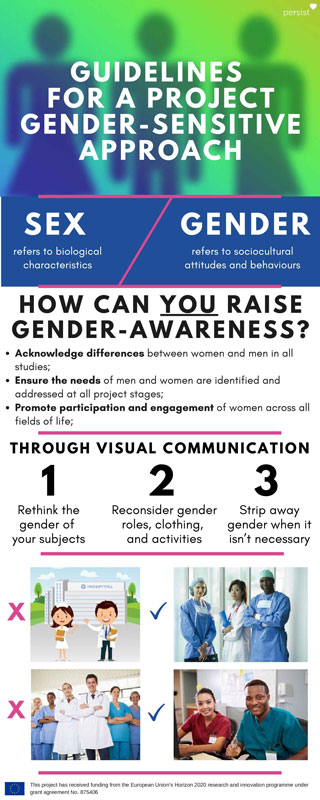

Then there are the large and numerous gender-related problems due both to access to information resources (and thus to information, to the news people can obtain) and to media communication (Figure 1) (according to which, in a large number of cases, we see represented male doctors and female nurses, for example). A “personalised” medicine needs therefore to consider these and numerous other issues, first and foremost by working on individual and collective awareness (e.g., through staff training) [3], decision-making policies and research and diagnostic methodologies.

Figure 1: Infographics of a gender-sensitive communication.

Conclusions

An appropriate analysis of the data, combined with a broad cultural framework that reflects the complexity of reality and of human beings and is capable of recognising and resolving gender bias and adopting differentiated good practices, can help build a democratic and inclusive approach (in both cultural and technological education). At the same time, it can enable increasingly reliable and accurate technologies tailored to individual needs and characteristics. “Precision medicine” must therefore aim not only to be clinically reliable, but to become a medicine “for everyone”, able to leave no one behind and to start conceiving gender in its complex and important meaning of human gender.

“This document is part of a project that has received funding from the European Union’s Horizon 2020 research and innovation programme under Grant Agreement No. 875406”.

Links:

[L1] https://www.projectpersist.com/

[L2] https://www.cyberethicslab.com

References:

[1] A. Hirsh et al.: “The influence of patient sex, provider sex, and sexist attitudes on pain treatment decisions”, J Pain 15(5):551–559, 2014. https://doi.org/10.1016/j.jpain.2014.02.003

[2] Duma et al.: “Representation of minorities and women in oncological clinical trials: review of the past 14 years”, J Oncol Practice 14(1):e1–e10, 2018. https://doi.org/10.1200/JOP.2017.025288

[3] G. Koren et al.: “‘A patient like me’: An algorithm-based program to inform patients on the likely conditions people with symptoms like theirs have”, Medicine 98(42):e17596, 2019. https://doi.org/10.1097/MD.0000000000017596

Please contact:

Emanuela Tangari, CyberEthics Lab., Italy

Carmela Occhipinti, CyberEthics Lab., Italy