by Andreas Papalambrou, Christos Palaiologos and John Gialelis (University of Patras)

A team at the University of Patras has developed software that gives students and educators, with special emphasis on the disabled and the visually impaired, the opportunity to enjoy the beauty of natural sciences such as astronomy and geology.

Studying the natural sciences is more accessible than ever to groups such as the visually impaired or the disabled, thanks to old and new technologies such as braille, text-to-speech and audiobooks. However, members of these groups still cannot experience every aspect of the natural sciences. For instance, they may not be able to visually enjoy the magnificence of astronomical objects and the universe, or to visit a geological site in person. Our work aims to bridge that gap by introducing inexpensive and unobtrusive means for the visually impaired and the disabled to experience astronomy [L1] and geology [L2]. A virtual assistance module has been developed to achieve this goal.

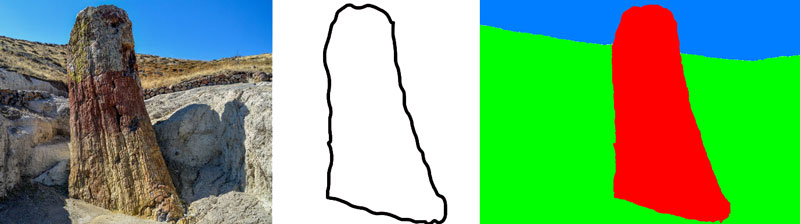

The first application transforms astronomical images (such as planets and galaxies) and geological evidence (such as fossils, rocks or petrified trees) into sound. A visually impaired person can then touch a touchscreen and “feel” the shape of the astronomical object or geological evidence through the changes in sound. An algorithm divides the image of the object into blocks and recognises its different parts (Figure 1). In the first stage, the user feels the shape of the image through vibration. After assessing the requirements of the users, we concluded that shapes are easier to recognise when shape recognition is separated from colour recognition through sound. Each photo in the application is therefore accompanied by its contour, created algorithmically by tracing the edges of objects, and the touch device vibrates when the user touches the image borders. In colour mode, when the user touches the screen, an algorithm detects which block contains the coordinates of the finger. The mean RGB value of that block is then calculated, stored in a matrix and translated through Harbisson’s Sonochromatic Scale [1] into hue, lightness and saturation. These values are used to generate the frequency and volume of a sine wave, which is created and played in combination with the level of interest of each part of the image (foreground, main object, background), taken from an interest heat map. This process is repeated every time the user touches a different area of the image. A “train” mode is also included, in which users familiarise themselves with recognising shapes through the vibration of the device and colours through sounds.

Figure 1: Original picture of a petrified tree, its outline and interest heat map.
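The colour mode described above can be illustrated with a minimal Python sketch. It is not the application's code: the block size, the function names, the linear hue-to-frequency mapping between 220 Hz and 440 Hz and the use of lightness times an interest weight as volume are all assumptions made for illustration, and the mapping is only a stand-in for Harbisson's Sonochromatic Scale, whose actual values are not reproduced here.

```python
import colorsys
import math

BLOCK = 2  # hypothetical block edge length in pixels


def block_of(x, y, block=BLOCK):
    """Return the (column, row) index of the block containing pixel (x, y)."""
    return x // block, y // block


def mean_rgb(image, bx, by, block=BLOCK):
    """Average the RGB values of all pixels inside block (bx, by)."""
    rows = image[by * block:(by + 1) * block]
    pixels = [p for row in rows for p in row[bx * block:(bx + 1) * block]]
    return tuple(sum(p[c] for p in pixels) / len(pixels) for c in range(3))


def tone_for(rgb, interest=1.0, f_low=220.0, f_high=440.0):
    """Map a mean colour to (frequency in Hz, volume in 0..1).

    Assumed mapping: hue selects the frequency linearly, lightness scaled
    by the interest weight sets the volume. Saturation is computed but not
    used in this sketch.
    """
    r, g, b = (c / 255.0 for c in rgb)
    hue, lightness, _saturation = colorsys.rgb_to_hls(r, g, b)
    frequency = f_low + hue * (f_high - f_low)
    volume = min(1.0, lightness * interest)
    return frequency, volume


def sine_wave(frequency, volume, seconds=0.1, rate=8000):
    """Generate raw sine-wave samples for the given frequency and volume."""
    n = int(seconds * rate)
    return [volume * math.sin(2 * math.pi * frequency * i / rate)
            for i in range(n)]


# Hypothetical 4x4 image: a red 2x2 block in the top-left corner, blue elsewhere.
RED, BLUE = (255, 0, 0), (0, 0, 255)
image = [[RED if x < 2 and y < 2 else BLUE for x in range(4)] for y in range(4)]

bx, by = block_of(1, 1)  # finger touches pixel (1, 1)
frequency, volume = tone_for(mean_rgb(image, bx, by), interest=1.0)
samples = sine_wave(frequency, volume)
```

Under this assumed mapping, touching the red block yields the lowest frequency of the range, while the blue blocks yield a higher one. A real implementation would take the interest weight from the heat map and send the samples to the audio output of the device.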

The second application is a mapping and navigation application with special features for the disabled and the visually impaired. It guides a visitor along their path through a geological site. Predetermined routes are calculated for each supported disability type, and sites that are accessible to the user are proposed. When a user approaches a geosite, the sound application described above can be called to translate the current view into sound.
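As a rough illustration of how predetermined routes might be matched to a disability type, the following Python sketch filters a small route catalogue. The `Route` structure, the disability labels and the example routes are hypothetical and are not taken from the application.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    length_m: int
    supports: frozenset  # disability types the route is suitable for


def routes_for(routes, disability):
    """Return the routes suitable for one disability type, shortest first."""
    suitable = (r for r in routes if disability in r.supports)
    return sorted(suitable, key=lambda r: r.length_m)


# Hypothetical catalogue of predetermined routes in a geopark.
catalogue = [
    Route("Route A", 1200, frozenset({"visual", "mobility"})),
    Route("Route B", 800, frozenset({"visual"})),
    Route("Route C", 2500, frozenset({"mobility"})),
]

proposed = [r.name for r in routes_for(catalogue, "visual")]
```

Here `proposed` holds the names of the routes tagged as suitable for visually impaired visitors, ordered by length.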

The navigation functions of the software will soon include features aimed at providing an easy and effective visiting experience for people who are visually impaired or disabled, while remaining usable by people with no disability. The software provides both aural and visual instructions, so all of these groups can use it. Simultaneous use is helpful when a disabled person is accompanied by a guide, and it also supports social inclusion.

The current applications will soon gain extra capabilities, such as letting users upload images, photos, videos and information related to points of interest in the geopark. This will be useful for real and virtual visitors alike, and educational for teachers who wish to give their students a multimedia lesson on a geological monument. In addition, a person with disabilities who cannot access a geopark will be able to make a kind of virtual visit.

As strong supporters of open data, we will create a publicly accessible website that includes the information gathered during application development. Specifically, the website will contain maps of the geoparks, details of the selected points of interest with photos and descriptions, suggested routes for visiting the geoparks, and the audio-visual content gathered in all intellectual outputs, together with connections to the map.

Links:

[L1] https://www.a4bd.eu/

[L2] https://www.g4vid.eu/

References:

[1] N. Harbisson: “Hearing colors: My life experience as a cyborg”, in Creativity, Imagination and Innovation: Perspectives and Inspirational Stories, 2019, 117-125.

[2] C. Palaiologos, A. Papalambrou, J. Gialelis: “A4BD: Astronomy outreach software for the visually impaired and the disabled”, Communicating Astronomy with the Public Conference (CAP) 2021.

Please contact:

John Gialelis

Electrical and Computer Engineering Department, University of Patras, Greece