by Hui-Yin Wu (Centre Inria d’Université Côte d’Azur, France), Aurélie Calabrèse (Aix-Marseille Univ, CNRS, LPC, France), and Pierre Kornprobst (Centre Inria d’Université Côte d’Azur, France)

The Inria Biovision team has been working on designing accessible news-reading experiences in virtual reality (VR) for low-vision patients. We present the advantages of VR as well as design principles based on clinical studies of low-vision reading and newspaper design. This is realised through a novel VR toolbox that can be customised to create a personalised and accessible news-reading environment.

Low-vision conditions generally refer to visual impairments that cannot be corrected or cured, resulting in a loss of visual acuity that most notably impacts reading activities. Newspapers pose a unique challenge due to their unpredictable layout, timely nature, and condensed formatting, which make them visually complex for low-vision audiences and make accessible versions difficult for news publishers to create. News reading is an essential activity affording social connection, entertainment, and learning in modern society. With an estimated 180 million people worldwide suffering from low vision, a number that is rising rapidly, there is a strong need for accessible news-reading platforms.

Our Project

The Inria Biovision team [L1] has been tackling this problem since 2017 through virtual reality (VR) technology. The introduction of budget headsets such as Google Cardboard© or Oculus© has made VR available to the general public. With the freedom to fully customise a 360° visual space, VR solutions have already been studied and developed in the context of low vision for rehabilitation, architecture design, public awareness, and more.

For low-vision reading, VR allows the user to personalise the look and feel of the reading content. However, the development of reading applications in VR is still in its early stages. That is why our team took on this challenge to propose a novel toolbox for creating accessible news-reading experiences for low vision.

Design Principles for Low-Vision Reading in VR

We surveyed eight existing reading applications on the market for VR headsets, and studied news journal design guidelines as well as the recommendations of low-vision scientists on how to make text accessible for patients. From this we identified five important principles for designing reading applications for low vision:

- Global and local navigation. The large visual space in VR can be used to design intuitive navigation both globally, between different articles and pages, and locally, from line to line, by parsing and enlarging text.

- Adjustable print and text layout. Adjustable variables for print should include (1) size: reasonably up to 100 times the size of newsprint, (2) fonts: those such as Tiresias and Eido have been developed with accessibility in mind, and (3) spacing: between words and lines. Ideally, text should conform to medical standards for measuring visual acuity, with print size expressed in degrees of visual angle and line width defined by the number of characters.

- Smart text contrasting. Intelligent colour adjustments can increase the contrast of text, for example by using white text on a black background or yellow text on blue, without affecting non-text media. Previous studies indicate an improvement of 10-40% in reading speed.

- Image enhancement. Integrating image-enhancement techniques to sharpen text and media content for better visibility.

- Accessibility Menu. Everyone has different preferences. These principles should be implemented as options to allow personalisation of the reading space. Enabling voice commands would further facilitate interaction, avoiding crowded interfaces of buttons, and simplifying the user experience.
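To make the print-size principle concrete, the following is a minimal Python sketch of how a print size given in degrees of visual angle could be converted into a physical size in the virtual scene, and how a line width could be derived from a character count. It uses the standard visual-angle relation (size = 2 × distance × tan(angle / 2)). The function names, the viewing distance, and the character-width ratio are illustrative assumptions and are not taken from the toolbox itself.

```python
import math


def size_for_visual_angle(angle_deg: float, distance_m: float) -> float:
    """Physical size (metres) that subtends angle_deg at a viewing distance of distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle_of(size_m: float, distance_m: float) -> float:
    """Visual angle (degrees) subtended by an object of size_m seen from distance_m."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


def line_width_for_characters(n_chars: int, char_width_m: float) -> float:
    """Line width (metres) for a line defined by its number of characters."""
    return n_chars * char_width_m


# Hypothetical example values: a 2-degree x-height on a text panel placed 1.5 m away.
x_height_m = size_for_visual_angle(angle_deg=2.0, distance_m=1.5)

# Assumption for illustration only: average character width taken as 1.1 x the x-height.
char_width_m = 1.1 * x_height_m
panel_width_m = line_width_for_characters(n_chars=30, char_width_m=char_width_m)

print(f"x-height: {x_height_m:.4f} m, line width: {panel_width_m:.3f} m")
```

Expressing size as an angle rather than in points or pixels keeps the perceived print size constant when the text panel is moved closer to or further from the reader.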

An Open VR Toolbox for Accessible Reading Design

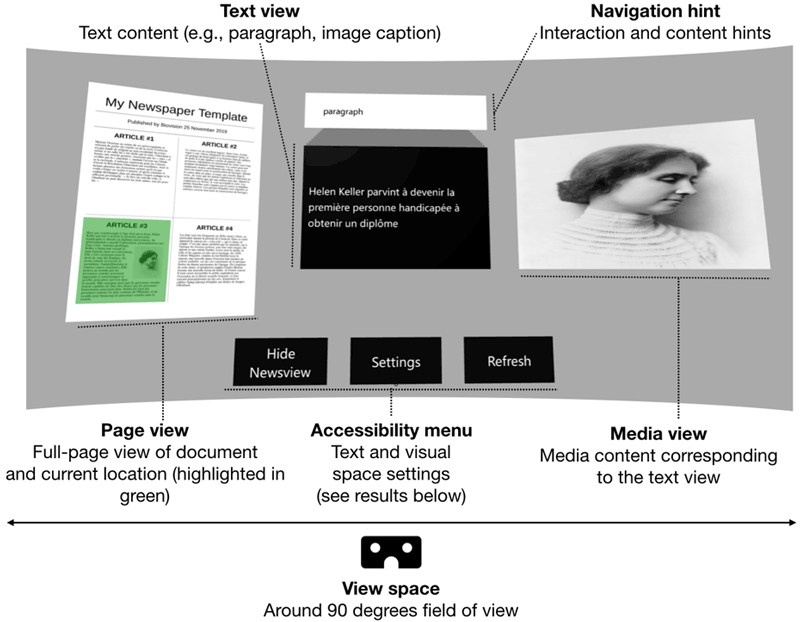

These design principles were implemented as an open toolbox [L2]. We chose to use WebGL technology, which runs on most VR browsers. The interface view space is composed of the page view, text view, media view, and accessibility menu (Figure 1), all of which can be flexibly moved around, hidden, or summoned at the user’s preference.

Figure 1: The interface of our toolbox is composed of four components: (1) a page view to highlight global reading position, (2) a text view presenting augmented text content along with the content type in the navigation hint, (3) a media view for associated visual media content, and (4) the accessibility menu to adjust visual parameters.

The page view displays the current page image with a global navigation indicator that highlights the part that is currently displayed in the text and media views to help the user immediately identify their reading position.

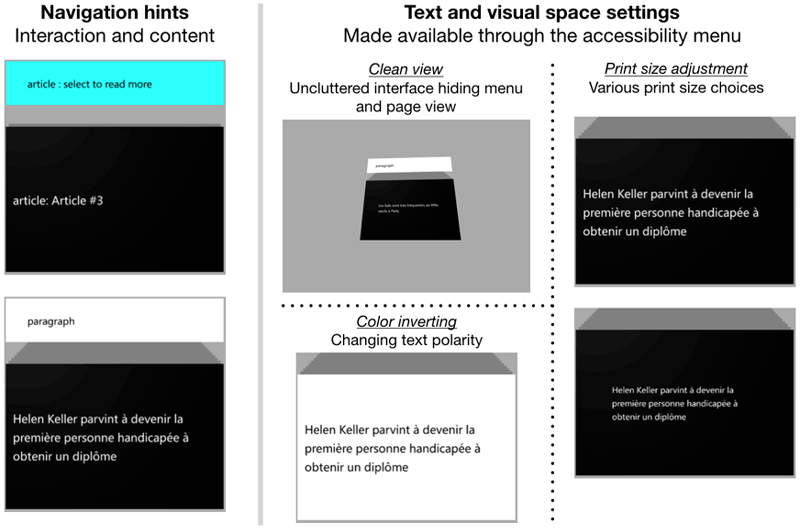

The text view, in the middle of the visual space, shows headings, captions, paragraphs, or other text content. Text is presented using a card-deck navigation metaphor: with a remote, the user can go to the next or previous card and select a card (e.g., an article) for in-depth reading. Print size and colour can be adjusted through the accessibility menu (Figure 2).

Figure 2: The toolbox offers various customisations of accessibility parameters, such as navigation hints to indicate what text content is being shown and whether more content is linked to a card (left), and print size, interface decluttering, and colour options (right).

The media view shows any media content associated with the current text view, with alternative text support. The navigation hint indicates to the reader what type of content is currently being displayed in the text and media views.
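The card-deck metaphor and the navigation hint can be illustrated with a small data structure. The sketch below is written in Python for readability and is only an assumption about how such a deck might be modelled; it is not the toolbox's WebGL implementation. The names `Card`, `CardDeck`, and `navigation_hint` are hypothetical, and the `back` operation is an added assumption that the article does not describe.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Card:
    content_type: str                       # e.g. "heading", "paragraph", "caption"
    text: str
    linked: List["Card"] = field(default_factory=list)  # content opened when the card is selected


class CardDeck:
    """Hypothetical model of card-deck navigation: next, previous, select."""

    def __init__(self, cards: List[Card]) -> None:
        if not cards:
            raise ValueError("a deck needs at least one card")
        self._levels: List[List[Card]] = [cards]   # stack of decks, top = deck being read
        self._positions: List[int] = [0]           # current index within each deck

    @property
    def current(self) -> Card:
        return self._levels[-1][self._positions[-1]]

    def next(self) -> Card:
        # Stay on the last card instead of wrapping around.
        last = len(self._levels[-1]) - 1
        self._positions[-1] = min(self._positions[-1] + 1, last)
        return self.current

    def previous(self) -> Card:
        self._positions[-1] = max(self._positions[-1] - 1, 0)
        return self.current

    def select(self) -> bool:
        # Open the linked content of the current card for in-depth reading.
        if not self.current.linked:
            return False
        self._levels.append(self.current.linked)
        self._positions.append(0)
        return True

    def back(self) -> bool:
        # Assumed operation: return to the deck the reader came from.
        if len(self._levels) == 1:
            return False
        self._levels.pop()
        self._positions.pop()
        return True

    def navigation_hint(self) -> str:
        card = self.current
        hint = f"{card.content_type} {self._positions[-1] + 1}/{len(self._levels[-1])}"
        if card.linked:
            hint += " (more content)"
        return hint


# Hypothetical content for illustration.
article = Card("heading", "Accessible news reading in VR", linked=[
    Card("paragraph", "First paragraph."),
    Card("paragraph", "Second paragraph."),
])
deck = CardDeck([article, Card("caption", "Figure 1: the interface")])
print(deck.navigation_hint())
```

Keeping the decks on a stack means that selecting a card and returning from it restores the reader's previous position, which matches the idea of always knowing where one is in the page.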

To Reading Accessibility and Beyond!

Our project highlights the high potential of immersive technologies to facilitate accessible media experiences that go far beyond the simple magnification or image enhancement offered by classical assistive technologies. This potential is supported by clinical proofs of concept suggesting that smart augmentation principles might be beneficially implemented in VR [2], which offers flexibility and full control over visual variables in a 3D environment that can be personalised to patients' needs. However, to leverage VR/AR in the design of novel e-health systems, we need rigorous scientific, medical, and ergonomic evaluation of these systems.

This calls for translational research and cross-disciplinary approaches that involve the vision science community in studying both fundamental and clinical vision science. An immediate challenge here is the high technical barrier to creating VR experiments. Existing packages like PsychoPy have successfully tackled this difficulty for generating stimuli on 2D monitors through a scripting interface. With the same ambition as PsychoPy, our team is currently developing the Perception Toolbox for Virtual Reality (PTVR) package [L3], which offers a scripting interface for designing stimuli in virtual reality and is developed under an Open Science framework. This work is carried out in collaboration with E. Castet (Aix Marseille Univ, CNRS, LPC) in the context of ANR DEVISE [L4]. With the democratisation of immersive technologies and research-grounded solutions, our vision is that immersive technologies can soon be deployed on a larger scale to meet various patients' needs.

Links:

[L1] https://team.inria.fr/biovision/

[L2] https://team.inria.fr/biovision/cardnews3d/

[L3] https://ptvr.inria.fr/

[L4] https://anr.fr/Projet-ANR-20-CE19-0018

References:

[1] H.-Y. Wu, A. Calabrèse, P. Kornprobst: “Towards Accessible News Reading Design in Virtual Reality for Low Vision”, Multimedia Tools and Applications, Springer, 2021.

[2] A. Calabrèse, et al.: “A Vision Enhancement System to Improve Face Recognition with Central Vision Loss”, Optometry and Vision Science, 95(9), 2018.

[3] M. Raphanel, et al.: “Current Practice in Low Vision Rehabilitation of Age-related Macular Degeneration and Usefulness of Virtual Reality as a Rehabilitation Tool”, J. Aging Sci., 2018.

Please contact:

Hui-Yin Wu

Centre Inria d’Université Côte d’Azur, France