by Sergiy Denysov (OsloMet), Sølve Selstø (OsloMet) and Are Magnus Bruaset (Simula Research Laboratory)

Emulations of quantum algorithms on classical computers remain a key part of benchmarking present-day quantum computers. The steady growth in the number of qubits in these prototypes makes the corresponding simulations very resource-intensive. When preparing to run them on a cluster, we must make good use of classical high-performance computing techniques, such as multithreading and GPU acceleration, and take into account the particular architecture of the cluster. In this situation, the question posed in the title becomes highly relevant.

Quantum Computing (QC) is currently experiencing a period of unprecedentedly fast growth but, as a technology, it is still in its infancy. According to the most optimistic estimates, it will take another five to seven years for QC to fully mature. Further development of QC still depends on the ability to emulate quantum circuits and algorithms successfully on classical computers, and most existing benchmarking protocols are based on comparing the performance of ‘ideal’ emulated circuits with that of circuits run on QC prototypes. The notion of Quantum Volume [1], promoted by IBM and Honeywell as a measure of QC performance, and the setup of Google’s Quantum Supremacy experiment [2] are both based on such comparisons.

The Curse of Dimensionality limits the horizon of QC emulations very strictly. Adding a qubit to a circuit doubles the required memory, and even a reasonably large present-day supercomputer cannot simulate circuits with more than 49 qubits (a further increase is possible only at the price of imposing restrictions on the accessible states) [3]. Meanwhile, QC technology has already progressed beyond this limit: IBM recently announced Eagle, its new 127-qubit processor [L1].

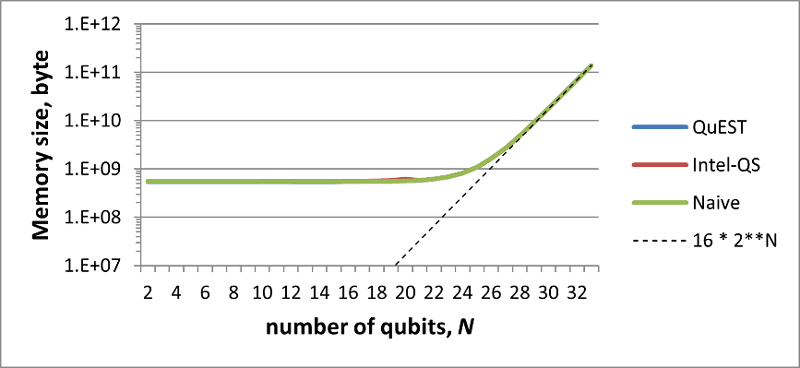

As we demonstrate below, the memory needed to store the wave function of a system of N qubits, i.e. to specify its 2^N complex amplitudes, can be estimated trivially. The additional memory needed to install the software, describe the circuit/algorithm, and finally implement the sequence of gates does not scale exponentially with N (at worst, it scales polynomially) and is therefore negligible.
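The estimate amounts to a one-line formula. The following is a minimal Python sketch, assuming double-precision complex amplitudes (two 8-byte floats, i.e. 16 bytes per amplitude) and ignoring the polynomial overhead mentioned above. The function names are illustrative and are not taken from any of the packages discussed here.

```python
# Minimal sketch: memory needed to hold the full state vector of N qubits,
# assuming double-precision complex amplitudes (8 B real + 8 B imaginary).

BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(n_qubits: int) -> int:
    """Bytes needed to store all 2**n_qubits complex amplitudes."""
    return BYTES_PER_AMPLITUDE * 2 ** n_qubits


def human_readable(n_bytes: int) -> str:
    """Format a byte count with binary prefixes (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB"]
    value = float(n_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024


for n in (2, 28, 32, 49):
    print(n, human_readable(state_vector_bytes(n)))
# 2 64 B
# 28 4 GiB
# 32 64 GiB
# 49 8 PiB
```

Each extra qubit doubles the result, which is the exponential wall described above. Under these assumptions, the largest circuits in the benchmarks below (N = 32) already need 64 GiB for the amplitudes alone.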

In this situation, performance becomes an important characteristic and the choice of software really starts to matter. The question posed in the title can now be made more specific: when planning to emulate complex multi-qubit quantum circuits/algorithms on a specific computer cluster or supercomputer, which is the better option, using one of the existing QC emulation packages or building our own tailored software?

A good cluster-oriented QC emulation package should allow for parallelization (on both local and distributed memory) and for efficient control of resource consumption at every stage of the emulation. Most of the existing open-source QC packages [L2] do not fulfil these conditions. In fact, we found only two that do: QuEST [L3] and Intel-QS [L4]. We analysed their performance on two supercomputers: the ENDEAVOR Intel cluster [L5] and eX3 [L6], the Norwegian national infrastructure for experimental exploration of exascale computing (on the latter we used an NVIDIA DGX-2 system). In both cases, we emulated N-qubit circuits containing N Hadamard (single-qubit) gates and N cNOT (two-qubit) gates, for N ranging from 2 to 32. The arrangement of the gates was purely random, and for every value of N the results were averaged over 50 random circuit realizations.
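The benchmark protocol can be sketched in a few lines of Python. This is our illustration under stated assumptions, not the authors' benchmarking code: we assume that "purely random" means both the qubits each gate acts on and the order of the 2N gates are drawn uniformly at random, and we encode gates as hypothetical tuples `("H", target)` and `("CNOT", control, target)`. The `run` argument stands for whichever emulator is being timed.

```python
import random
import time
from typing import Callable, List

# Hypothetical gate encoding: ("H", target) or ("CNOT", control, target).
Gate = tuple


def random_h_cnot_circuit(n_qubits: int, rng: random.Random) -> List[Gate]:
    """Return n_qubits Hadamard and n_qubits CNOT gates on random qubits, in random order."""
    if n_qubits < 2:
        raise ValueError("a CNOT gate needs at least two qubits")
    gates: List[Gate] = [("H", rng.randrange(n_qubits)) for _ in range(n_qubits)]
    for _ in range(n_qubits):
        control, target = rng.sample(range(n_qubits), 2)  # two distinct qubits
        gates.append(("CNOT", control, target))
    rng.shuffle(gates)  # random arrangement of the 2N gates
    return gates


def mean_runtime(n_qubits: int,
                 run: Callable[[int, List[Gate]], object],
                 realizations: int = 50,
                 seed: int = 0) -> float:
    """Average wall-clock time of `run` over independent random circuits."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(realizations):
        circuit = random_h_cnot_circuit(n_qubits, rng)
        start = time.perf_counter()
        run(n_qubits, circuit)  # emulator under test (hypothetical callable)
        total += time.perf_counter() - start
    return total / realizations
```

Fixing the seed makes every emulator see the same 50 circuits for a given N, which keeps the comparison between packages fair.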

Additionally, we created a very basic piece of software that does not use vectorization, cache optimization, or other tricks commonly used to optimize performance. We named it Naïve; its sole purpose was to serve as a baseline for comparing the performance of QuEST and Intel-QS.
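To show what a baseline without vectorization or cache optimization can look like, here is a minimal pure-Python state-vector sketch. It illustrates the general approach and is not the authors' Naïve code. The gate tuples and the qubit ordering (qubit k is bit k of the amplitude index) are our assumptions.

```python
import math
from typing import List


def apply_hadamard(state: List[complex], target: int) -> None:
    """Apply H to qubit `target` in place, one amplitude pair at a time."""
    s = 1 / math.sqrt(2)
    bit = 1 << target
    for i in range(len(state)):
        if (i & bit) == 0:  # visit each (target=0, target=1) pair once
            j = i | bit
            a, b = state[i], state[j]
            state[i] = s * (a + b)
            state[j] = s * (a - b)


def apply_cnot(state: List[complex], control: int, target: int) -> None:
    """Apply CNOT in place: swap the target=0/1 amplitudes where control=1."""
    c_bit, t_bit = 1 << control, 1 << target
    for i in range(len(state)):
        if (i & c_bit) and not (i & t_bit):
            j = i | t_bit
            state[i], state[j] = state[j], state[i]


def simulate(n_qubits: int, circuit: list) -> List[complex]:
    """Start from |00...0> and apply ("H", t) / ("CNOT", c, t) gates in order."""
    state = [0j] * (1 << n_qubits)  # 2**N amplitudes, the dominant memory cost
    state[0] = 1 + 0j
    for gate in circuit:
        if gate[0] == "H":
            apply_hadamard(state, gate[1])
        elif gate[0] == "CNOT":
            apply_cnot(state, gate[1], gate[2])
        else:
            raise ValueError(f"unsupported gate {gate[0]!r}")
    return state


bell = simulate(2, [("H", 0), ("CNOT", 0, 1)])
print([round(abs(a) ** 2, 3) for a in bell])  # → [0.5, 0.0, 0.0, 0.5]
```

Every gate sweeps the whole amplitude array in a single thread, so both the memory footprint and the run time grow as 2^N; this is exactly the kind of unoptimized reference point the comparison needs.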

Figure 1 shows the total memory needed to emulate a random Hadamard-cNOT circuit on the ENDEAVOR cluster as a function of N. It highlights two points. First, there is no difference between the three software implementations. Second, as N increases, all three curves approach the universal scaling of 16 × 2^N bytes, which follows simply from the fact that every complex amplitude requires two real numbers (8 B each). As noted above, the additional memory needed on top of this is negligible for N > 28.

Figure 1: Total memory size needed to emulate a random Hadamard-cNOT circuit on the ENDEAVOR cluster, as a function of N.

When run on the ENDEAVOR cluster, QuEST demonstrated a high degree of parallelization (near 90%), whereas the other two reached only about 15%. For Naïve, this is obviously due to the lack of optimization. For Intel-QS, it is due to a single-threaded implementation of the operation that updates the amplitude data array.
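The "degree of parallelization" is not defined further here. If it is read as the parallel fraction p in Amdahl's law (an interpretation on our part), a short calculation shows why the gap between 90% and 15% matters so much. The thread counts below are arbitrary examples, not the ENDEAVOR configuration.

```python
def amdahl_speedup(parallel_fraction: float, n_threads: int) -> float:
    """Ideal speedup on n_threads when only parallel_fraction of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must lie in [0, 1]")
    if n_threads < 1:
        raise ValueError("n_threads must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_threads)


for p in (0.90, 0.15):
    for n in (16, 64):
        print(f"p = {p:.2f}, {n:2d} threads: speedup {amdahl_speedup(p, n):.2f}")
    print(f"p = {p:.2f}, upper limit: {1 / (1 - p):.2f}")
```

Under this reading, a code with p = 0.9 can at best run 10 times faster than its serial version, while p = 0.15 caps the speedup at about 1.18, no matter how many cores the cluster provides.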

Only QuEST was tested on the GPU-based DGX-2 system in the eX3 infrastructure, since it is the only one of the two packages that supports graphics accelerators. Unfortunately, it can use only one such accelerator. We designed a GPGPU version of Naïve to compare its performance with that of QuEST. Naïve outperformed QuEST on all indicators, including computation time.

To summarize, two observations can be made. First, the ability to simulate digital quantum circuits and algorithms is limited only by the available memory, which has to exceed 16 × 2^N bytes. In this respect, the dedicated ATOS Quantum Learning Machine [L7], which is able to emulate “up to 42 qubits”, is essentially a stack of memory modules with a total capacity of no less than 36 TB. Second, as Naïve demonstrates, the software component can be purpose-designed and optimized once the cluster has been chosen. So, if you are up to the task, the answer to the question is: Build.

However, this answer also shows that there is a need for general-purpose QC emulators that do a better job of optimizing their performance for the specific problems you want to solve. When emulations are part of a large international project involving several computing centres and clusters, a unified, cloud-accessible platform operating under a joint software environment will be needed.

Links:

[L1] https://research.ibm.com/blog/127-qubit-quantum-processor-eagle

[L2] https://quantiki.org/wiki/list-qc-simulators

[L3] https://github.com/QuEST-Kit/QuEST

[L4] https://intel-qs.readthedocs.io/en/docs/getting-started.html

[L5] https://www.top500.org/system/176908/

[L6] https://www.ex3.simula.no/

[L7] https://atos.net/en/solutions/quantum-learning-machine

References:

[1] A. Cross et al.: “Validating quantum computers using randomized model circuits”, Phys. Rev. A 100, 032328 (2019).

[2] F. Arute et al.: “Quantum supremacy using a programmable superconducting processor”, Nature 574, 505 (2019).

[3] D. Willsch et al.: “Benchmarking supercomputers with the Jülich universal Quantum Computer Simulator”, NIC Symposium 2020, Publication Series of the John von Neumann Institute for Computing (NIC), NIC Series 50, 255-264 (2020).

Please contact:

Sergiy Denysov

Oslo Metropolitan University, Norway