by Ilias K. Savvas and Ilias Galanis (University of Thessaly)

Our world will be shaken if and when quantum computing becomes reliable and accessible to the majority of researchers. Almost all branches of science will be affected by this new technology. From chemistry and pharmacology to geology and the prediction of climate change, quantum computing promises fast solutions to problems that are still considered intractable. But has this promising technology arrived? Are today's quantum computers ready and, above all, reliable?

No matter what we buy, from a simple charger to an expensive laptop, we tend to compare the available options and choose the one we think is best. When a new device becomes available to the public, one of the first indications of its performance comes from benchmarking tests: scores that reflect its ability to perform certain tasks, based on time and accuracy. More or less the same applies to quantum computers. Of course, none of us will be able to buy a quantum computer anytime soon.

Quantum Computing Devices (QCDs) are, as of now, expensive, bulky machines that can operate only in protected, closed environments and, most importantly, are still under development. One indication of how early we are in the era of quantum computing is what programming such a device feels like. Over the years, many high-level programming languages have been developed for classical computers, whereas when programming a QCD we still need to think in terms of gates and registers. A quantum program is, as of now, mostly a quantum circuit. Keeping all that in mind, we cannot tell when a quantum computer will first become the main device for searching a big database or be used by a big pharmaceutical company to develop new drugs. Thus, the next step towards feeling confident about the real progress that has been made is benchmarking these devices, which is also a significant step towards quantum supremacy (the point at which a quantum computer solves a problem that is considered practically unsolvable for a classical computer).

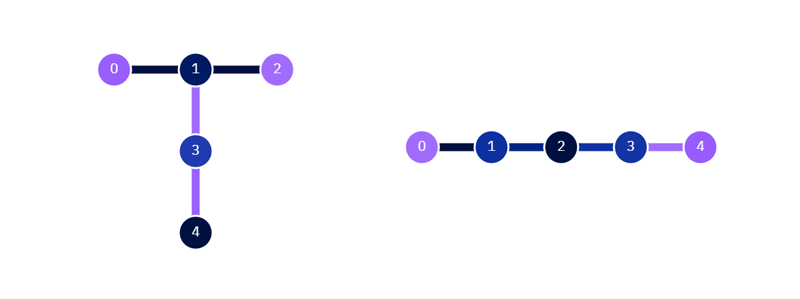

Benchmarking QCDs is a new, challenging and promising task, given the many different physical realizations of QCDs, trapped-ion and superconducting quantum computers to name just two. We cannot yet be sure which approach is best or which will dominate in the coming years. In addition, QCDs of the same realization can have different architectures (the way the qubits are directly connected to one another), as shown in Figure 1. Finally, an extra layer of difficulty is that quantum systems are not deterministic, which makes it quite difficult to tell what is right and what is wrong. So, in the near future, many measurements will be necessary to predict the QCDs' behaviour accurately. And there is more. How can one tell which unit of evaluation is best for comparing QCDs? Is it the number of available qubits? Unfortunately, that is not the case: many qubits do not by themselves make a QCD effective. A phenomenon called quantum decoherence causes qubits to lose their quantum state over time; thus reliability is, as of now, the main concern that keeps QCDs a computational device of the future.

Figure 1: IBM's QCDs ibmq_Quito (left) and ibmq_Bogota (right). The two devices have the same number of qubits but different architectures, resulting in different performance.
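The notion of architecture used here, namely which qubits are directly connected, can be made concrete with a small graph computation. The sketch below is only an illustration, assuming that ibmq_Quito has a T-shaped coupling map and ibmq_Bogota a linear chain; the edge lists `QUITO_EDGES` and `BOGOTA_EDGES` and all function names are our assumptions, not data read from the figure. For each device it computes the average and the largest number of hops between two qubits.

```python
from collections import deque
from itertools import combinations

# Assumed coupling maps (undirected qubit pairs); illustrative, not taken from the article.
QUITO_EDGES = [(0, 1), (1, 2), (1, 3), (3, 4)]   # T-shaped layout (assumption)
BOGOTA_EDGES = [(0, 1), (1, 2), (2, 3), (3, 4)]  # linear chain (assumption)


def build_adjacency(edges):
    """Turn a list of undirected edges into an adjacency dictionary."""
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


def distances_from(adjacency, source):
    """Breadth-first search: number of hops from `source` to every reachable qubit."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


def connectivity_summary(edges):
    """Return (average distance, largest distance) over all pairs of qubits."""
    adjacency = build_adjacency(edges)
    all_dist = {q: distances_from(adjacency, q) for q in adjacency}
    pair_dist = [all_dist[a][b] for a, b in combinations(sorted(adjacency), 2)]
    return sum(pair_dist) / len(pair_dist), max(pair_dist)


if __name__ == "__main__":
    for name, edges in [("ibmq_Quito", QUITO_EDGES), ("ibmq_Bogota", BOGOTA_EDGES)]:
        average, largest = connectivity_summary(edges)
        print(f"{name}: average distance {average:.1f}, largest distance {largest}")
```

Under these assumed layouts the two five-qubit devices differ in how far apart their qubits are on average. Since a two-qubit gate between qubits that are not directly connected generally has to be routed through intermediate qubits, this is one plausible way in which architecture alone can influence performance.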

Manipulating a qubit is a challenging procedure that requires precision and, most importantly, ideal conditions. Benchmarking techniques such as Randomized Benchmarking (a method for assessing the capabilities of quantum computing hardware by estimating the average error rates measured when long sequences of random quantum gate operations are applied) [1] and Quantum State Tomography (in which information about the state of a qubit is gained by performing multiple tomographic measurements on the system) are useful for evaluating a single QCD but cannot compare devices of different architectures and realizations. In 2018, IBM [L1] introduced a single-value metric called Quantum Volume (QV) [2] and modified its definition one year later. QV takes two quantities into consideration: the number of qubits (N) and the depth of the quantum circuit (d) that a QCD can run successfully with meaningful results. It is desirable to exclude two extreme cases. In the first, the smallest error rates, and therefore the largest circuit depth, are obtained by using very few qubits. In the second, a device has many qubits but little coherence, i.e. d ≈ 1, which reveals little of interest about the device. So, to give a more accurate and complete view of the device, QV expresses the maximum size of square quantum circuits (circuits whose depth equals the number of qubits they use) that the computer can implement successfully.
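The idea of the largest square circuit can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: the table `EXAMPLE_DEPTHS` is hypothetical (it is not measured data), and the helper `largest_square_circuit` is our own name. For every circuit width n it takes the smaller of n and the achievable depth d(n), then keeps the best value, so that both extreme cases (few qubits with large depth, many qubits with d ≈ 1) score poorly. As far as we understand the two published definitions, the earlier one reports the square of this side length and the revised one reports two raised to it; both conversions should be read as assumptions here.

```python
# Hypothetical data: circuit width n -> largest depth d(n) that still gives
# meaningful results on some device. These numbers are invented for illustration.
EXAMPLE_DEPTHS = {2: 30, 3: 12, 4: 6, 5: 5, 6: 2, 7: 1}


def largest_square_circuit(depth_by_width):
    """Return the side of the largest square circuit: max over n of min(n, d(n))."""
    return max(min(width, depth) for width, depth in depth_by_width.items())


if __name__ == "__main__":
    side = largest_square_circuit(EXAMPLE_DEPTHS)
    print("largest square circuit side:", side)
    # Two reporting conventions (assumed, see the text above the code):
    print("reported as side squared:   ", side ** 2)
    print("reported as two to the side:", 2 ** side)
```

With the invented numbers above, two qubits alone reach depth 30 but contribute only a side of 2, and seven qubits at depth 1 contribute only a side of 1; the best balance is found at five qubits.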

The form of the circuits is independent of the quantum computer architecture, but the compiler can transform and optimize them to take advantage of the computer's features. Thus, quantum volumes for different architectures can be compared, which makes Quantum Volume a very promising metric for evaluating QCDs, although it is still a work in progress. The early stage of quantum computing holds us back from implementing real applications, while theory is far ahead. Today, no one knows the circuit size their classical computer or smartphone can implement, and when quantum computing becomes a mature technology, different criteria will probably be used to evaluate quantum computers.

At the Department of Digital Systems, University of Thessaly, Greece, one of the main topics on which we focus our research efforts is to explore and evaluate the existing benchmarking approaches and, beyond them, to discover more accurate techniques for assessing the reliability of QCDs. It is very important to assure the enthusiastic researchers in the field of quantum computing that their efforts will not remain in theory, and that the well-proven reliability of QCDs will soon drive the world into this promising new era.

Link:

[L1] https://quantum-computing.ibm.com/

References:

[1] J. Eisert et al.: “Quantum certification and benchmarking,” Nat. Rev. Phys., vol. 2, no. 7, pp. 382–390, 2020, doi: 10.1038/s42254-020-0186-4.

[2] N. Moll et al.: “Quantum optimization using variational algorithms on near-term quantum devices,” Quantum Sci. Technol., vol. 3, no. 3, p. 030503, Jun. 2018, doi: 10.1088/2058-9565/aab822.

Please contact:

Ilias K. Savvas, University of Thessaly, Greece