by Anne-Laure Rousseau (Assistance Publique - Hôpitaux de Paris)

Machine learning has made a remarkable entry into the world of healthcare, but concerns about the technology remain. According to journalists, a revolution is upon us: one day the first artificial intelligence robot receives its medical degree, the next, new algorithms surpass the skill of medical experts. It seems that any day now, medical doctors will be unemployed, superseded by the younger siblings of Siri. Having worked on both medical imaging and machine learning, I think the reality is different. At the risk of disappointing physician colleagues who thought the holidays were close, there will still be work for us doctors for many decades. The new technology in fact offers a great opportunity to enhance health services worldwide, provided doctors and engineers collaborate more closely.

The new techniques of computer vision can be seen as a modern form of automation [1]: after automating the legs (physical work, made possible by motors) and the hands (precision work, made possible by industrial robots), we are now automating the eyes (the detection of patterns in images). Computer vision algorithms are skilled at specific tasks: imagine having at your side not one or two, but a thousand medical students who can quickly perform tasks that are not necessarily complex but are very time-consuming. This will change a lot of things: it will reduce the time needed to complete an imaging exam, and it will also change the very nature of these exams by changing the cost efficiency of certain procedures. It is the combination of the doctor and the machine that will create a revolution, not the machine alone.

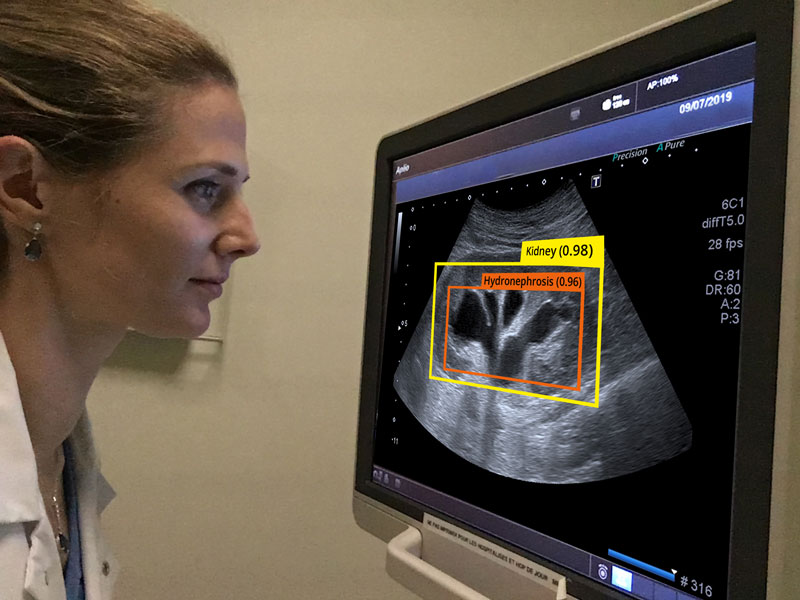

This concept-image depicts how physicians could use deep learning algorithms in their future practice.

I gathered a group of engineers and healthcare workers, the NHance team [L1], to apply deep learning algorithms to ultrasound imaging. Ultrasound imaging has grown impressively in recent years, and over 20 medical and surgical specialties have expressed interest in using it as a hand-carried tool. According to the WHO, two thirds of the world’s population does not have access to medical imaging, and ultrasound combined with X-ray could cover 90 % of these needs. Falling prices for ultrasound hardware are allowing it to spread, and it is no longer access to equipment but lack of training that limits its use [2].

Indeed, learning ultrasound is currently complex and not scalable. Machine learning could be particularly useful to alleviate the constraints of ultrasound, and further democratise medical imaging around the world.

Using algorithms to detect kidney diseases is one example of this. We trained our algorithms on 4,428 kidney images. To measure our model’s performance we used receiver operating characteristic (ROC) curves. The area under the ROC curve (AU-ROC) quantifies how well an algorithm discriminates between disease and no disease, taking the diagnosis made by the NHance medical team as the reference. The higher the AU-ROC, the better the model is at distinguishing between patients with and without disease. A model whose predictions are 100% wrong has an AU-ROC of 0.0, a model that guesses at random scores around 0.5, and an excellent classifier has an AU-ROC close to 1. In our study, the AU-ROC for differentiating normal kidneys from abnormal kidneys is 0.9.
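To make the AU-ROC scale concrete, here is a minimal sketch of one standard way to compute it: as the fraction of (diseased, healthy) pairs in which the diseased case receives the higher score, with ties counted as half. The function name `auroc` and the toy labels and scores are assumptions made for illustration; they are not the NHance team's code or data.

```python
def auroc(labels, scores):
    """AU-ROC as the probability that a randomly chosen positive case
    scores higher than a randomly chosen negative case (ties count 0.5)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # tie: counted as half a correct ranking
    return wins / (len(positives) * len(negatives))


# Hypothetical labels (1 = abnormal, 0 = normal); not data from the study.
labels = [0, 0, 1, 1]

perfect = auroc(labels, [0.1, 0.2, 0.8, 0.9])        # every abnormal case ranked highest
inverted = auroc(labels, [0.9, 0.8, 0.2, 0.1])       # every prediction wrong
uninformative = auroc(labels, [0.5, 0.5, 0.5, 0.5])  # same score for everyone
partial = auroc(labels, [0.1, 0.4, 0.35, 0.8])       # one pair out of four misordered

print(perfect, inverted, uninformative, partial)
```

The pairwise loop is quadratic in the number of cases, which is fine for a small illustration; on a dataset of thousands of images a rank-based or library implementation would be the more practical choice.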

We have trained algorithms to address an emergency problem: in every case of acute renal failure, the physician needs to know whether the urinary tract is blocked (a kidney state called hydronephrosis), and ultrasound is a good way to make that diagnosis. The AU-ROC for identifying hydronephrosis is 0.94 in our study.

We have also trained our algorithms to detect kidney cancer. If kidney cancer is diagnosed once the patient has symptoms, such as blood in the urine or low back pain, it means in most cases that the cancer has already progressed and spread outside the kidney, and it will be extremely difficult to cure. Detecting a kidney cancer while it is still asymptomatic can therefore be decisive for the patient. The AU-ROC for identifying a parenchymal lesion of the kidney is 0.94 in our study.

We have applied our research to other organs, such as the liver. The incidence of and mortality from liver diseases grow every year. Our deep learning algorithms achieve an AU-ROC of 0.90 for characterisation of a benign liver lesion and up to 0.90 for a malignant liver lesion. By comparison, for medical experts the AU-ROC for discriminating malignant masses from benign masses was 0.724 (95 % CI, 0.566-0.883, P = 0.048) according to Hana Park et al.

These results are very encouraging and show that in the near future our algorithms could play an important role in assisting doctors with diagnoses. Our next step is to scale up these results in collaboration with the Epione team led by Nicholas Ayache and Hervé Delingette at INRIA. We will work on all the abdominal organs in partnership with Assistance Publique - Hôpitaux de Paris (APHP), which is putting 1.3 million exams at our disposal for the project.

It’s time to take cooperation between medical teams and engineering teams to the next level, in order to build better tools to enhance healthcare for everyone.

Link:

[L1] http://www.nhance.ngo/

References:

[1] B. Evans: “10 Year Futures (Vs. What’s Happening Now)” [Internet], Andreessen Horowitz, 6 Dec 2017. https://kwz.me/hy9

[2] C.L. Moore, J.A. Copel: “Point-of-Care Ultrasonography”, N. Engl. J. Med. 24 Feb. 2011, 364(8):749‑757. doi:10.1056/NEJMra0909487.

[3] S. Shah, B.A. Bellows, A.A. Adedipe, J.E. Totten, B.H. Backlund, D. Sajed: “Perceived barriers in the use of ultrasound in developing countries”, Crit. Ultrasound J. 19 June 2015; 7. doi:10.1186/s13089-015-0028-2.

Please contact:

Anne-Laure Rousseau, President of the NGO NHance, European Hospital Georges Pompidou and Robert Debré Hospital, Paris, France