by Antonis Louka (University of Cyprus), Andreas Dionysiou (Frederick University), and Elias Athanasopoulos (University of Cyprus)

In today’s programming landscape, ensuring software security is more critical than ever. Rust, a relatively new programming language, incorporates safety features that produce secure and efficient machine code without relying on runtime support. In our work, developed at the University of Cyprus, we explore how an attacker might deliberately introduce vulnerabilities into Rust binaries after compilation, and why the compiled code of such systems needs to be validated.

Current programming systems can be split into safe and unsafe based on how they manage memory. Traditional (unsafe) systems like C/C++ use a loose memory management model that relies on the programmer to manage memory correctly. Safe systems, on the other hand, such as Java and C#, use heavy runtime support to track memory and ensure safety. Rust enforces memory safety without runtime support, providing a middle ground between these two categories. Similar efforts include Go, which depends on a lightweight garbage collector, and Swift, which uses automatic reference counting.

Specifically, Rust uses the concepts of Ownership, Borrowing, and Lifetimes to define rules that automate memory management and prevent temporal-safety bugs such as use-after-free (UaF) and double free (DF). These rules are enforced by a Rust compiler routine called the “Borrow Checker”. Additionally, to prevent spatial-safety bugs such as buffer overflows (BO), the compiler inserts automatic checks, essentially conditional statements, into the program. Overall, Rust’s safety measures are put in place during compilation, so the binary carries no guarantees at execution time beyond trust in the compiler’s output. These measures can also be bypassed with the unsafe keyword, which is mainly used to integrate C code into Rust programs.
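The two kinds of protection described above can be seen in a few lines of safe Rust. This is a minimal sketch for illustration only; the function names `read_checked` and `consume` are hypothetical and do not come from our framework.

```rust
/// Spatial safety: slice accesses are bounds-checked. `get` surfaces the
/// same index-versus-length comparison that `buf[i]` performs, but returns
/// `None` instead of panicking when the index is out of range.
fn read_checked(buf: &[u8], i: usize) -> Option<u8> {
    buf.get(i).copied()
}

/// Temporal safety: `data` is moved into this function, which becomes its
/// owner. The vector is freed exactly once, when the function returns.
fn consume(data: Vec<u8>) -> usize {
    data.len()
}

fn main() {
    let buf = [1u8, 2, 3, 4];
    println!("{:?}", read_checked(&buf, 2)); // prints "Some(3)"
    println!("{:?}", read_checked(&buf, 9)); // prints "None"

    let v = vec![10u8, 20, 30];
    let n = consume(v);
    // The Borrow Checker rejects any later use of `v`, because ownership
    // was moved into `consume`. Uncommenting the next line fails to compile:
    // println!("{}", v.len());
    println!("{n}"); // prints "3"
}
```

Both protections are decided at compile time: the move is checked statically, and the bounds check is emitted as ordinary compare-and-branch instructions in the resulting binary.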

In our work, we argue that the machine code produced by a Rust compiler should be validated for safety before reaching the end user. Such validation is not new: it was applied in the past when web browsers supported running third-party native code using the NaCl framework [1]. More precisely, we argue that when a binary is submitted to an app store, a validation routine should run alongside the code-reviewing mechanisms to assess whether the binary adheres to Rust-specific memory-safety rules.

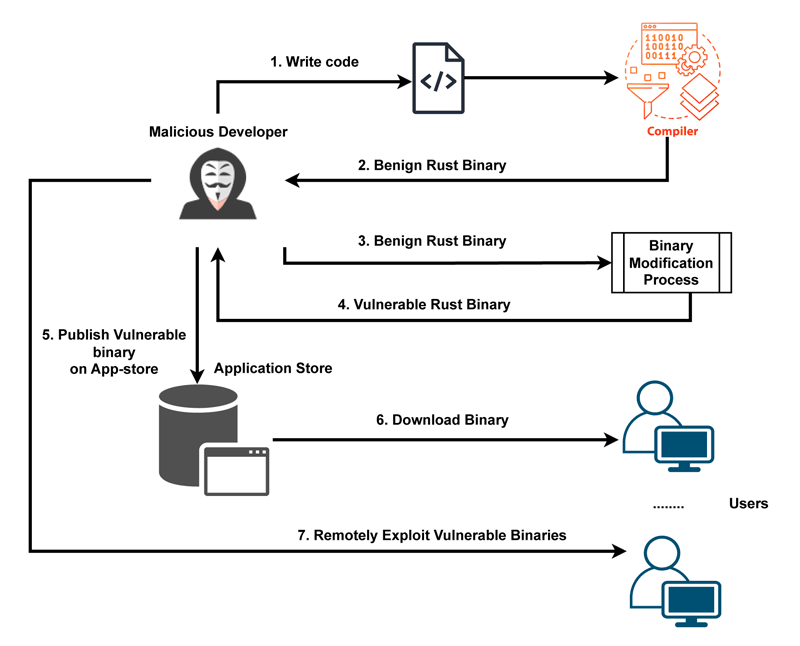

We demonstrate that an attacker can stealthily modify a Rust binary and recreate temporal and spatial safety bugs. The machine code produced by the Rust compiler can later be altered to introduce such bugs into the binary that reaches the end user. This provides an opportunity for malicious developers to compile an application and stealthily add malicious bugs for later exploitation (see Figure 1).

Figure 1: Threat model diagram. A malicious developer creates a benign Rust application that successfully passes the compilation phase. The developer then uses a modification process that alters the binary and introduces a bug (e.g. a BO or UaF), making the binary vulnerable. Finally, the developer publishes the vulnerable binary to an application store that does not utilise language-specific validation, bypassing the different reviewing mechanisms. After multiple users download and install the malicious application, the developer can exploit the vulnerable binaries remotely.

This scenario is similar to the one described by Wang et al. [2], who created Jekyll apps (intentionally malicious applications that bypass the defence mechanisms of the iOS App Store). In this case (i) the compiler cannot be trusted to be the only entity that ensures memory safety for such systems, (ii) digital signatures cannot detect whether the application has been tampered with, since the developer is the actual attacker, and (iii) current review mechanisms cannot capture such alterations, as they do not check Rust’s memory-safety rules. These reasons mandate the creation of validation routines that check the language-specific artifacts in an executable that are fundamental to enforcing memory safety.

The validation methodology proposed in this work focuses on Rust binaries only. In our experiments, we demonstrate that certain Rust safety measures, such as the checks that prevent BOs, can be manipulated more easily, while others, such as those that prevent UaF bugs, need more elaborate modifications. We demonstrate that by stealthily changing the conditional statements added by the compiler, traditional BOs can be created and used to corrupt stack control data (i.e. return addresses). We additionally show that fundamental Rust rules enforced by the Borrow Checker can be bypassed to create dangling pointers and UaF bugs.
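To make the effect of a neutralised bounds check concrete, the following toy model simulates it entirely in safe Rust. It is a sketch under stated assumptions, not the binary modification itself: the `Frame` type, its one-byte `ret_slot`, and the marker value `0xAA` are all hypothetical stand-ins for a real stack frame and its saved return address.

```rust
const BUF_LEN: usize = 4;

/// Toy "stack frame": BUF_LEN buffer bytes followed by one byte that
/// stands in for the saved return address.
struct Frame {
    mem: [u8; BUF_LEN + 1],
}

impl Frame {
    fn new() -> Self {
        Frame { mem: [0, 0, 0, 0, 0xAA] }
    }

    /// Models the compiler-inserted check: the write is refused when the
    /// index falls outside the buffer.
    fn write_checked(&mut self, i: usize, v: u8) -> bool {
        if i < BUF_LEN {
            self.mem[i] = v;
            true
        } else {
            false
        }
    }

    /// Models the binary after the comparison has been patched away: the
    /// buffer's own limit is no longer consulted. (Rust still checks `i`
    /// against the whole frame, so this sketch itself stays memory safe
    /// and panics for `i > BUF_LEN`.)
    fn write_patched(&mut self, i: usize, v: u8) {
        self.mem[i] = v;
    }

    fn ret_slot(&self) -> u8 {
        self.mem[BUF_LEN]
    }
}

fn main() {
    let mut frame = Frame::new();

    // With the check in place, the out-of-range write is rejected.
    assert!(!frame.write_checked(BUF_LEN, 0x41));
    assert_eq!(frame.ret_slot(), 0xAA);

    // Without it, the same write lands on the "return address".
    frame.write_patched(BUF_LEN, 0x41);
    assert_eq!(frame.ret_slot(), 0x41);
}
```

The only difference between the two write paths is a single conditional, which is why this class of check is comparatively easy to tamper with.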

We develop a preliminary validation framework that uses heuristics and other Rust-specific patterns, i.e. exception-handling patterns and buffer-initialisation patterns, and we focus on the validation of buffer-overflow checks and buffer references. Our framework validates buffer-overflow checks by comparing them with a ground-truth conditional check (derived by analysing the binary). For reference validation, we use data-flow tracking from a given buffer (source) to its aliases (references) while simulating Rust’s rules and checking for any violations.
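The two validation steps can be illustrated with a heavily simplified model. The sketch below is not our framework: it assumes that the bounds check and the buffer events have already been recovered from the binary, and the names `RecoveredCheck`, `Event`, `check_matches`, and `first_violation` are hypothetical. It also simulates only one rule (no alias may be created or used after the buffer is freed).

```rust
use std::collections::HashSet;

/// A bounds check as a validator might recover it from machine code:
/// the limit that the index is compared against.
#[derive(Debug, Clone, Copy, PartialEq)]
struct RecoveredCheck {
    limit: usize,
}

/// The check passes only if it exists and its limit equals the
/// ground-truth buffer length.
fn check_matches(ground_truth_len: usize, found: Option<RecoveredCheck>) -> bool {
    matches!(found, Some(c) if c.limit == ground_truth_len)
}

/// Events observed while following one buffer and its aliases.
#[derive(Debug, Clone, Copy)]
enum Event {
    Alias(u32), // a reference with this id is derived from the buffer
    Use(u32),   // the reference with this id is dereferenced
    Free,       // the buffer is deallocated
}

/// Returns the position of the first event that violates the simulated
/// rule, or `None` if the trace is clean.
fn first_violation(trace: &[Event]) -> Option<usize> {
    let mut known: HashSet<u32> = HashSet::new();
    let mut freed = false;
    for (pos, ev) in trace.iter().enumerate() {
        match *ev {
            Event::Alias(id) => {
                if freed {
                    return Some(pos); // alias derived from freed memory
                }
                known.insert(id);
            }
            Event::Use(id) => {
                if freed || !known.contains(&id) {
                    return Some(pos); // dangling or untracked reference
                }
            }
            Event::Free => freed = true,
        }
    }
    None
}

fn main() {
    // A check whose limit was altered no longer matches the ground truth.
    assert!(check_matches(16, Some(RecoveredCheck { limit: 16 })));
    assert!(!check_matches(16, Some(RecoveredCheck { limit: 255 })));

    // A use after the buffer is freed is reported at its position.
    let trace = [Event::Alias(1), Event::Use(1), Event::Free, Event::Use(1)];
    assert_eq!(first_violation(&trace), Some(3));
}
```

A real validator has to recover these facts from machine code first, which is where heuristics and Rust-specific code patterns come in.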

The ability to validate code for such programming systems is crucial beyond the scenario described in this article. Rust and Go are currently very popular and attractive choices for creating system applications, and many developers have started migrating code from C/C++ to Rust to benefit from its memory-safety guarantees. Validating such code is not trivial, as unsafe blocks of code need to be handled differently from regular safe code. This information is not present in a compiled binary, which creates the need to add validation symbols to an executable to assist future validation frameworks. In addition, some work has demonstrated that the concepts used to automate memory allocation and deallocation may themselves produce bugs, especially when interacting with unsafe Rust code [3]. Validation frameworks may be able to capture such bugs before the application reaches the end user.

Our research introduces an initial validation approach specifically targeting Rust buffers in binaries; however, much work remains to develop a comprehensive validation framework for the new era of programming systems such as Rust.

References:

[1] B. Yee et al., “Native Client: a sandbox for portable, untrusted x86 native code,” in 30th IEEE Symposium on Security and Privacy, 2009, pp. 79–93.

[2] T. Wang et al., “Jekyll on iOS: when benign apps become evil,” in 22nd USENIX Security Symposium (USENIX Security 13), 2013, pp. 559–572.

[3] M. Cui and Y. Zhou, “SafeDrop: Detecting memory deallocation bugs of Rust programs via static data-flow analysis,” ACM Transactions on Software Engineering and Methodology, vol. 32, no. 4, pp. 1–21, 2023.

Please contact:

Elias Athanasopoulos, University of Cyprus