by Luca Caviglione (CNR-IMATI), Gianluigi Folino (CNR-ICAR), Massimo Guarascio (CNR-ICAR), and Paolo Zicari (CNR-ICAR)

Modern Man-At-The-End (MATE) attacks can take advantage of Language Models to produce deceptive information or malware variations. Fortunately, the same models can also be used to implement advanced defensive techniques. In this vein, we present the use of Small Language Models to reveal fraudulent communications or to automatically generate test cases for preventing the exfiltration of data through tampered MQTT brokers.

The typical MATE adversary is assumed to have unrestricted access to the target. In modern heterogeneous scenarios, the attacker can interact with software artifacts (e.g. libraries, firmware and digital images) as well as with a multitude of hardware devices, such as IoT nodes or industrial-class assets. Therefore, the available offensive tools and techniques are almost boundless, ranging from debuggers to virtualisation frameworks.

With the advent of generative AI, the capabilities of the adversary have expanded, making MATE attacks difficult to prevent and mitigate. For instance, Large Language Models (LLMs) can be used during the reconnaissance stage to gain insights from traffic dumps, execution logs, or documents that describe business processes or instruct operators. LLMs can also be used to weaponise threats, as they have proven to be effective in synthesising ad-hoc phishing mails or in producing malicious code, such as SQL injections (e.g. [1] and the references therein). Fortunately, LLMs are also emerging as valuable defensive tools, especially for anticipating attackers. For instance, AI fuzzers can test libraries, configuration files and protocol specifications effectively [1], which helps to prevent some of the manipulations that a MATE adversary is likely to perform when accessing the target.

Aiming to mitigate the impact of MATE attacks, we showcase two major results leveraging Small Language Models (SLMs). Unlike LLMs, they are trained on smaller, more specific, and cleaner datasets. This allows them to achieve better performance than LLMs on specific tasks without the need for supercomputers with huge computational resources, which is an unfeasible requirement for SMEs.

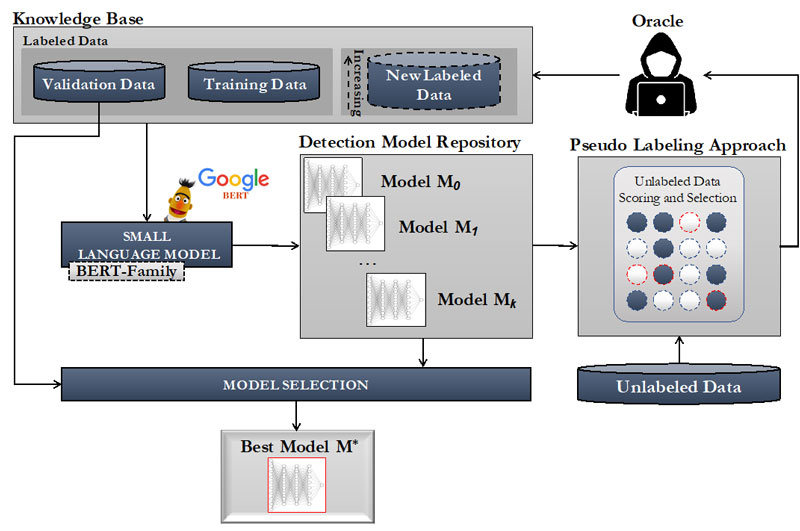

The first result considers the use of SLMs for mitigating phishing attacks or communications crafted to induce human operators to support the attacker, for instance by granting access to an infrastructure. To this end, the threat actor could collect information on the software in use, vendors, CVEs, or supported hardware to produce “fake news”, e.g. misleading security bulletins. As a countermeasure, we devised a human-in-the-loop semi-supervised framework for detecting ad-hoc malicious information in a challenging scenario where only small amounts of labelled data are available for the training stage. It leverages an iterative Active Learning (AL) process for integrating newly labelled data. Specifically, it uses a “small” instance of a Bidirectional Encoder Representations from Transformers (small BERT) model within an AL scheme where novel labelled data is acquired incrementally, with the help of a human expert, and used to fine-tune the model. The learning process is depicted in Figure 1. In more detail, the initial set of training data is used to build a preliminary version of the classifier. This training set is expanded iteratively by automatically selecting a small subset of uncertain unlabelled data instances, which are passed to a human expert who confirms or rejects the model classification. By adding these newly labelled instances to the training set, a new version of the classifier is trained at each iteration of the AL procedure. This framework has been tested for detecting fake news (e.g. gossip and politics) and has demonstrated its effectiveness with improvements in accuracy of up to 10% [2].

Figure 1: Learning process of the Detection Model and interaction with the expert.
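The iterative procedure described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the small BERT is replaced by a toy word-overlap scorer (`train_toy`), uncertainty is assumed to mean "predicted probability closest to 0.5", and all function names, the `expert` callback and the parameters `rounds` and `k` are hypothetical choices made only for illustration.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, int]  # (text, label); 1 = malicious, 0 = benign


def select_uncertain(probs: List[float], k: int) -> List[int]:
    """Return the indices of the k predictions closest to 0.5."""
    ranked = sorted(range(len(probs)), key=lambda i: abs(probs[i] - 0.5))
    return ranked[:k]


def train_toy(data: List[Example]) -> Callable[[str], float]:
    """Toy stand-in for fine-tuning: score a text by word overlap per class."""
    bad, good = set(), set()
    for text, label in data:
        (bad if label else good).update(text.lower().split())

    def predict(text: str) -> float:
        words = text.lower().split()
        b = sum(w in bad for w in words)
        g = sum(w in good for w in words)
        return 0.5 if b + g == 0 else b / (b + g)

    return predict


def active_learning(
    train: Callable[[List[Example]], Callable[[str], float]],
    labelled: List[Example],
    pool: List[str],
    expert: Callable[[str, int], int],
    rounds: int = 3,
    k: int = 2,
):
    """Retrain after each round of expert-reviewed, low-confidence samples."""
    labelled, pool = list(labelled), list(pool)
    model = train(labelled)  # preliminary classifier
    for _ in range(rounds):
        if not pool:
            break
        probs = [model(text) for text in pool]
        picked = select_uncertain(probs, k)
        for i in picked:
            guess = int(probs[i] >= 0.5)
            # The expert confirms or corrects the model's guess.
            labelled.append((pool[i], expert(pool[i], guess)))
        chosen = set(picked)
        pool = [t for j, t in enumerate(pool) if j not in chosen]
        model = train(labelled)  # new classifier version for this iteration
    return model, labelled
```

In a realistic setting, `train` would wrap the fine-tuning of the language model and `expert` would be an interactive labelling step. Selecting only the least confident instances keeps the number of expert queries small, which is the point of the approach when labelled data is scarce.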

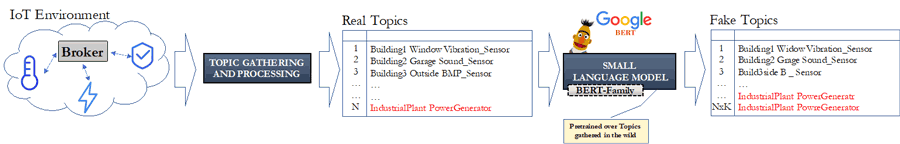

The second result for limiting MATE attacks employs SLMs to help the security expert reveal the presence of a malicious actor trying to exfiltrate data through an MQTT broker, which is a core building block of many smart homes and an important “glue layer” to gather IoT measurements for feeding digital twins of cities and industrial settings. A malicious actor could collect the MQTT topics of the victim (e.g. a smart building) through a MATE attack and then craft “fake” entries to implement a wide array of offensive schemes based on information hiding. Such bogus entries can be used to conceal unauthorised transmissions or encode stolen data, e.g. the creation of a specific topic could signal infected IoT nodes to activate. To prevent such an attack scheme, together with colleagues from the University of Pavia, we used a small BERT to capture the nuances of the topics made available on a specific broker, e.g. all the measurements belonging to a set of IoT sensors grouped under the umbrella name IndustrialPlant/PowerGenerator. The model can then be used to fuzz-test detection metrics, defensive tools, or best practices used within an organisation to define naming conventions. Figure 2 depicts the proposed approach. Starting from real topics gathered “in the wild”, the small BERT can produce “fake” but realistic topics to check the resilience of security policies against information hiding [3]. As an example, a properly trained SLM can automate the exploration of the possible permutations used by an attacker to encode data (e.g. in the uppercase-lowercase sequence of the letters composing a topic) or determine whether fake entries such as “IndustrialPlant/PowerGeneratr” or “IndustrialPlant/PowreGenerator” are correctly handled at runtime.
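To make the two kinds of bogus topics mentioned above more concrete, the following Python sketch generates them with simple rules rather than with a language model. It is only an illustration under stated assumptions: the bit-to-case mapping (1 for uppercase, 0 for lowercase), the single-deletion and single-swap typo rules, and the allowlist check `is_suspicious` are hypothetical and are not taken from the approach in [3].

```python
from typing import Iterator, Set


def encode_bits_in_case(topic: str, bits: str) -> str:
    """Hide a bit string in letter case: '1' -> uppercase, '0' -> lowercase."""
    out, queue = [], list(bits)
    for ch in topic:
        if ch.isalpha() and queue:
            ch = ch.upper() if queue.pop(0) == "1" else ch.lower()
        out.append(ch)  # separators and leftover letters are kept unchanged
    return "".join(out)


def typo_variants(topic: str) -> Iterator[str]:
    """Yield variants of the last topic level with one deletion or one swap."""
    prefix, _, leaf = topic.rpartition("/")
    head = prefix + "/" if prefix else ""
    seen = set()
    for i in range(len(leaf)):
        candidates = [leaf[:i] + leaf[i + 1:]]  # delete character i
        if i + 1 < len(leaf):
            # swap characters i and i + 1
            candidates.append(leaf[:i] + leaf[i + 1] + leaf[i] + leaf[i + 2:])
        for cand in candidates:
            if cand and cand != leaf and cand not in seen:
                seen.add(cand)
                yield head + cand


def is_suspicious(topic: str, allowed: Set[str]) -> bool:
    """Hypothetical policy under test: flag anything not on the allowlist."""
    return topic not in allowed


allowed = {"IndustrialPlant/PowerGenerator"}
fakes = list(typo_variants("IndustrialPlant/PowerGenerator"))
fakes.append(encode_bits_in_case("IndustrialPlant/PowerGenerator", "0110"))
missed = [t for t in fakes if not is_suspicious(t, allowed)]
```

Any entry left in `missed` would indicate a crafted topic that the policy accepts. A trained SLM would play the role of the rule-based generators here, producing candidates that resemble the naming conventions observed on the broker rather than a fixed set of edits.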

The two results are the outcome of research activities carried out by a joint group of scientists from the Institute for High Performance Computing and Networking and the Institute for Applied Mathematics and Information Technologies, within the framework of the National Recovery and Resilience Plan [L1]. Our ongoing research aims at improving the overall security posture of modern software ecosystems when exposed to MATE attacks, e.g. to prevent the abuse of digital images for cloaking malicious payloads or to protect software artifacts via watermarks.

This work was partially supported by project SERICS (PE00000014) under the MUR National Recovery and Resilience Plan funded by the European Union - NextGenerationEU.

Link:

[L1] https://serics.eu

References:

[1] J. Wang, L. Yu, and X. Luo, “LLMIF: augmented Large Language Model for fuzzing IoT devices,” in Proc. of the 2024 IEEE Symposium on Security and Privacy, pp. 196-209, San Francisco, CA, USA, 2024.

[2] F. Folino, et al., “Towards data- and compute-efficient fake-news detection: an approach combining active learning and pre-trained language models,” SN Computer Science, Vol. 5, pp. 470:1–470:18, 2024.

[3] C. Cespi Polisiani, et al., “Mitigation of covert communications in MQTT topics through small language models,” submitted to EuroCyberSec 2024 co-located with the 32nd International Symposium on the Modeling, Analysis, and Simulation of Computer and Telecommunication Systems, Krakow, Poland, 2024.

Please contact:

Gianluigi Folino, CNR-ICAR, Italy