by Patrick Kochberger, Philipp Haindl (St. Pölten University of Applied Sciences), Matteo Battaglin and Patrick Felbauer (University of Vienna)

In this project, we investigate how different tools measure code complexity of software protections, revealing significant variations in their results. While simpler metrics like lines of code (LOC) often produce similar outcomes, more advanced metrics such as cyclomatic complexity (CC) and maintainability index (MI) show major differences across tools. These discrepancies highlight the need for better methodologies when assessing the effectiveness of obfuscation techniques for protecting software.

Techniques for estimating the complexity of source and program code are important tools in both software development and software protection. On the one hand, when writing code, keeping it simple and easy to understand and employing efficient programming paradigms are key requirements for long-term maintainability. On the other hand, in software protection, the goal is to make programs as hard as possible to understand in order to increase their resilience against intellectual property theft, tampering and exploitation. In this and ongoing work at the St. Pölten University of Applied Sciences and the Christian Doppler Laboratory AsTra [L1], we focus on advancing the methodological foundations of software protection, particularly against adversary-at-the-end attacks, by studying and comparing code complexity metrics. Code metrics [1] are functions that measure certain properties of software. They provide objective, reproducible and quantifiable measurements of characteristics such as the complexity of code. The resulting information is useful in a variety of software development applications, including quality assurance and the estimation of performance and costs. Specifically, these metrics are used to identify code that is overly complex and hard to maintain.

In software protection [2], code obfuscation is used to protect benign applications against attacks such as illegal distribution, intellectual property theft, tampering, and exploitation. The idea is to intentionally increase the complexity of the code so that selected functionality becomes more difficult to detect and understand, which makes analysis of the application more time-consuming. One way to assess obfuscation techniques, and to evaluate obfuscation and deobfuscation tools against each other, is to compare the code complexity as measured by code complexity metrics.

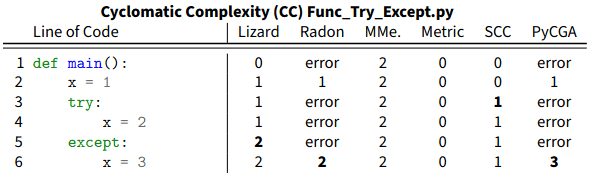

In a first step towards comparable measurements, we performed a study on five groups of code complexity metrics, as implemented in 12 different tools: Lines Of Code (LOC), Cyclomatic Complexity (CC), Halstead (Hal), Maintainability Index (MI), and the COnstructive COst MOdel (COCOMO). The LOC-related metrics quantify the size of a program by counting its lines. Each concrete metric and implementation has its own rules regarding blank lines, comment lines, import lines, and non-executable lines. The CC measures the amount of decision logic based on the software’s control flow graph. The Hal software metrics are a set of measurements based on the number of distinct operators and operands. One of them, the Halstead Difficulty, estimates how hard the program is to write or understand. The MI is a weighted combination of Hal, CC, and LOC metrics. The COCOMO estimates software project effort and costs in person-months. In addition to the source code, the model relies on further information, such as the type of software project and its cost drivers, to calculate its results.

We surveyed the availability of these code metrics and the differences among their individual implementations across the tools. For a detailed investigation, we constructed a dataset containing different test cases and implemented an analysis framework that feeds the program code, either as a whole or piece-by-piece, to the tools calculating the metric. In the piece-by-piece mode (e.g. line-by-line in Figure 1), the framework helps to determine which individual lines of code affect the overall metric score.
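To make the metric families described above more concrete, the following minimal Python sketch computes the commonly cited textbook forms of the Halstead measures, the original Maintainability Index, and the basic COCOMO effort estimate. The function and variable names are illustrative assumptions, and the formulas are the classic definitions, not necessarily the variants implemented by the tools in our study. The basic COCOMO form shown here uses only the project type and omits cost drivers.

```python
import math
from dataclasses import dataclass


@dataclass
class Halstead:
    vocabulary: int    # n = n1 + n2
    length: int        # N = N1 + N2
    volume: float      # V = N * log2(n)
    difficulty: float  # D = (n1 / 2) * (N2 / n2)
    effort: float      # E = D * V


def halstead(n1, n2, N1, N2):
    """Classic Halstead measures.

    n1, n2: number of distinct operators and operands
    N1, N2: total number of operators and operands
    """
    vocabulary = n1 + n2
    length = N1 + N2
    volume = length * math.log2(vocabulary)
    difficulty = (n1 / 2) * (N2 / n2)
    return Halstead(vocabulary, length, volume, difficulty, difficulty * volume)


def maintainability_index(volume, cc, loc):
    """Original (unscaled) MI: weighted combination of Hal, CC and LOC."""
    return 171 - 5.2 * math.log(volume) - 0.23 * cc - 16.2 * math.log(loc)


# Basic COCOMO coefficients (a, b) per project type.
COCOMO_BASIC = {
    "organic": (2.4, 1.05),
    "semi-detached": (3.0, 1.12),
    "embedded": (3.6, 1.20),
}


def cocomo_effort(kloc, project_type="organic"):
    """Basic COCOMO effort in person-months for a size given in KLOC."""
    a, b = COCOMO_BASIC[project_type]
    return a * kloc ** b


if __name__ == "__main__":
    h = halstead(n1=4, n2=4, N1=8, N2=8)
    print(h)
    print(maintainability_index(h.volume, cc=3, loc=20))
    print(cocomo_effort(10.0, "embedded"))
```

Even with these fixed formulas, the inputs leave room for interpretation: what counts as an operator, which LOC variant enters the MI, and whether the index is rescaled (some implementations map it to a range of 0 to 100) are all decisions an implementation has to make.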

Figure 1: A small excerpt from our results on how six of the tools measure the cyclomatic complexity when facing a try-except construct in Python.
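As a rough illustration of why a try-except construct can lead to different CC values, the following sketch counts decision points in a small Python function under two counting rules: one that treats each `except` handler as a decision point and one that does not. The snippet, the function name `cyclomatic_complexity` and the simplified rule set are assumptions made for this example. They do not reproduce the data in Figure 1 or the exact rules of any tool in the study, and they ignore constructs such as Boolean operators and comprehensions.

```python
import ast

# Hypothetical test case with one try-except and one if statement.
SNIPPET = '''
def parse(text):
    try:
        value = int(text)
    except ValueError:
        value = 0
    if value < 0:
        value = -value
    return value
'''

# Simplified set of branching nodes; real tools use richer rule sets.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.IfExp)


def cyclomatic_complexity(source, count_except):
    """Return 1 plus the number of decision points found in the AST."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            complexity += 1
        elif count_except and isinstance(node, ast.ExceptHandler):
            complexity += 1
    return complexity


if __name__ == "__main__":
    print(cyclomatic_complexity(SNIPPET, count_except=True))   # 3
    print(cyclomatic_complexity(SNIPPET, count_except=False))  # 2
```

The same source code thus yields two different scores, depending solely on whether exception handlers are counted as branches.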

Our results indicate that the comparability of the tools’ results varies significantly depending on the specific metric in question. For the relatively simple LOC and source lines of code (SLOC) metrics, the tools calculated the most similar values, although even here some tools diverged significantly. For the other studied metrics, some of the results differed so much that they cannot be compared. It is therefore essential to specify not only the metric but also the tool that performed the measurement.
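Even line counts depend on counting rules. The following minimal sketch, with a hypothetical sample and a hypothetical `count_lines` helper, shows how the treatment of blank lines, comment lines and import lines changes the reported size of the same file. It is a simplified line-based classifier for Python code and is not the rule set of any particular tool.

```python
# Hypothetical six-line sample file.
SAMPLE = """import os

# configuration
def main():
    '''Entry point.'''
    print(os.getcwd())  # trailing comment
"""


def count_lines(source, skip_imports=False):
    """Classify each physical line as blank, comment-only or source.

    With skip_imports=True, import lines are excluded from the source
    count, mimicking a rule that ignores them.
    """
    counts = {"physical": 0, "blank": 0, "comment": 0, "source": 0}
    for line in source.splitlines():
        counts["physical"] += 1
        stripped = line.strip()
        if not stripped:
            counts["blank"] += 1
        elif stripped.startswith("#"):
            counts["comment"] += 1
        elif skip_imports and stripped.startswith(("import ", "from ")):
            continue
        else:
            counts["source"] += 1
    return counts


if __name__ == "__main__":
    print(count_lines(SAMPLE))
    print(count_lines(SAMPLE, skip_imports=True))
```

For this sample, the size is 6 physical lines, 4 source lines when imports are counted, and 3 when they are not. Whether the docstring line counts as code or as a comment is a further choice that this sketch does not model.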

We plan to analyse further code complexity metrics and to assess how well they capture the strength of software protections. The goal is to find and develop measurements that allow code to be compared on different levels (source, intermediate, and binary code), so that the strength of protections can be estimated before and after the compilation step, along with the effect of different compilers and optimisation passes.

Link:

[L1] https://cdl-astra.at

References:

[1] A. S. Nuñez-Varela, et al., “Source code metrics: a systematic mapping study,” J. of Systems and Software, vol. 128, pp. 164–197, 2017, doi: 10.1016/j.jss.2017.03.044.

[2] S. Schrittwieser, et al., “Protecting software through obfuscation: can it keep pace with progress in code analysis?,” ACM Computing Surveys, 2017. https://doi.org/10.1145/2886012

Please contact:

Patrick Kochberger, St. Pölten University of Applied Sciences, Austria,