by Ella Janotte (Italian Institute of Technology), Michele Mastella, Elisabetta Chicca (University of Groningen) and Chiara Bartolozzi (Italian Institute of Technology)

In nature, touch is a fundamental sense. This should also be true for robots and prosthetic devices. In this project we aim to emulate the biological principles of tactile sensing and to apply them to artificial autonomous systems.

Babies are born with a grasping reflex, triggered when something touches their palms. Thanks to this reflex, they are able to hold on to fingers and, later on, manually explore objects and their surroundings. This simple fact shows the importance of biological touch for the understanding of the environment. However, artificial touch is less prominent than vision: even tasks such as manipulation, which require tactile information for slip detection, grip strength modulation and active exploration, are widely dominated by vision-based algorithms. There are many reasons for the underrepresentation of tactile sensing, starting from the challenges posed by the physical integration of robust tactile sensing technologies in robots. Here, we focus on the problem of the large amount of data generated by e-skin systems that strongly limits their application on autonomous agents which require low power and data-efficient sensors. A promising solution is the use of event-driven e-skin and on-chip spiking neural networks for local pre-processing of the tactile signal [1].

Motivation

E-skins must cover large surfaces while achieving high spatial resolution and enabling the detection of wide bandwidth stimuli, resulting in the generation of a large data stream. In the H2020 NeuTouch [L1] project, we draw inspiration from the solutions adopted by human skin.

Coupled with non-uniform spatial sampling (denser at the fingertips and sparser on the body), tactile information can be sampled in an event-driven way, i.e., upon contact, or upon the detection of a change in contact. This reduces the amount of data to be processed and, if merged with on-chip spiking neural networks for processing, supports the development of efficient tactile systems for robotics and prosthetics.

Neuromorphic sensors

Mechanoreceptors of hairless human skin can be roughly divided into two groups: slowly and rapidly adapting. Slowly adapting afferents encode stimulus intensity while rapidly adapting ones respond to changes in intensity. In both cases, tactile afferents generate a series of digital pulses (action potentials, or spikes) upon contact. The same principle can be applied to an artificial e-skin by implementing a neuromorphic, or event-driven, sensor readout.
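The distinction between the two afferent types can be illustrated with a minimal Python sketch. It is not the project's circuit model: the function name `afferent_drives`, the time step `dt` and the use of a simple finite difference are assumptions made for illustration only. The slowly adapting drive follows the stimulus intensity, while the rapidly adapting drive follows the magnitude of its change.

```python
from typing import List, Tuple


def afferent_drives(intensity: List[float], dt: float = 1.0) -> Tuple[List[float], List[float]]:
    """Return (slowly_adapting, rapidly_adapting) drive signals.

    Hypothetical illustration: the slowly adapting drive tracks the
    stimulus intensity itself, the rapidly adapting drive tracks the
    absolute rate of change between consecutive samples.
    """
    # Slowly adapting: proportional to (non-negative) intensity.
    slow = [max(x, 0.0) for x in intensity]
    # Rapidly adapting: non-zero only while the intensity is changing.
    rapid = [0.0] + [abs(b - a) / dt for a, b in zip(intensity, intensity[1:])]
    return slow, rapid


# A press-and-hold stimulus: contact at sample 2, release at sample 5.
slow, rapid = afferent_drives([0.0, 0.0, 1.0, 1.0, 1.0, 0.0])
```

For this press-and-hold stimulus, the slowly adapting drive stays high for the whole duration of contact, whereas the rapidly adapting drive is non-zero only at onset and release.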

As in neuromorphic vision sensors, the signal of each sensing element is sampled individually and asynchronously, upon detection of a change in its analogue value. Initially, the encoding strategy can be based on emitting an event (a digital voltage pulse) when the measured signal changes by a given amount with respect to the value at the previous event. We will then study more sophisticated encoding based on local circuits that emulate the slowly and rapidly adapting afferents. Events are transmitted off-chip asynchronously via the address-event representation (AER) protocol, which identifies the sensing element that observed the change. In this representation, time represents itself and the temporal event pattern contains the stimulus information. Thus, the sensor remains idle in periods of no change, avoiding the production of redundant data, while not being limited by a fixed sampling rate if changes happen fast.
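The change-based encoding described above can be sketched in a few lines of Python. This is a software illustration under stated assumptions, not the sensor's circuit: the function name `delta_encode`, the event tuple layout `(timestamp, address, polarity)` and the polarity field itself are hypothetical, and the input is a pre-sampled list only because a software example needs one (the real sensor has no fixed sampling clock).

```python
from typing import List, Sequence, Tuple

# Hypothetical event layout: (timestamp, sensor address, polarity).
Event = Tuple[float, int, int]


def delta_encode(samples: Sequence[float], times: Sequence[float],
                 address: int, threshold: float) -> List[Event]:
    """Emit an event whenever the signal has moved by at least
    `threshold` relative to its value at the previous event."""
    events: List[Event] = []
    if not samples:
        return events
    reference = samples[0]  # value at the last emitted event
    for t, value in zip(times[1:], samples[1:]):
        delta = value - reference
        if abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1  # assumed ON/OFF labelling
            events.append((t, address, polarity))
            reference = value  # the next change is measured from here
    return events


# One sensing element (address 7): press, hold, release.
events = delta_encode([0.0, 0.05, 0.25, 0.3, 0.3, 0.0],
                      [0, 1, 2, 3, 4, 5], address=7, threshold=0.2)
```

Six samples yield only two events here, one at the press and one at the release; the hold phase produces no output at all, which is the data reduction the event-driven readout is meant to provide.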

We aim to exploit the advantages of event-driven sensing to create a neuromorphic e-skin that produces a sparse spiking output, solving the engineering problem of data bandwidth for robotic e-skin. The signal can then be processed by traditional perception modules. However, the spike-based encoding calls for the implementation of spiking neural networks to extract information.

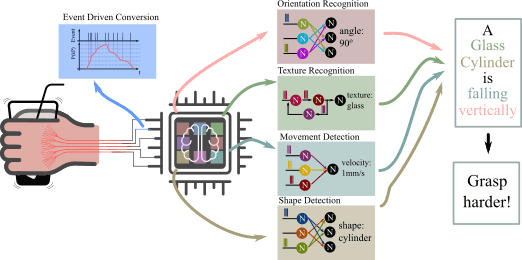

Figure 1: A graphical representation of the desired outcome of our project. The realised architecture takes information from biologically inspired sensors that interface with the environment. The sensor data are translated into spikes using event-driven circuits and provide input to different parts of the electronic chip. These parts are responsible for analysing the incoming spikes and delivering information about the properties of objects in the environment. The responses are then used to generate an approximation of what is happening in the surroundings and to influence the reaction of the autonomous agent.

Spiking neural networks

The spiking, asynchronous nature of neuromorphic tactile encoding paves the way for the use of spiking neural networks (SNNs) to infer information about the tactile stimulus. SNNs use neuron and synapse models that more closely match the behaviour of biology, using spatio-temporal sequences of spikes to encode and decode information. Synaptic strength and the connectivity of the networks are shaped by experience, through learning.

Examples of neuromorphic tactile systems that couple event-driven sensing with SNNs have been developed for orientation detection, where the coincident activations of several event-driven sensors are used to determine the angle at which a bar, pressed on the skin, is tilted [2]. The outputs of different sensors are joined together and connected to a neuron; when the neuron spikes, it signals that those sensors were active together. Another example is the recognition of textures, which can be done with a spiking neural network that senses frequencies [3]. A novel architecture, composed only of neurons and synapses organised in a recurrent fashion, can spot these frequencies and signal the texture of a given material.
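The coincidence-detection idea behind the orientation example can be illustrated with a minimal leaky integrate-and-fire sketch in Python. It is not the network of [2]: the function name `coincidence_neuron` and the parameters `weight`, `tau` and `v_thresh` are assumptions chosen so that a single input spike stays below threshold while two spikes arriving close together in time cross it.

```python
import math
from typing import List, Sequence, Tuple


def coincidence_neuron(events: Sequence[Tuple[float, int]],
                       weight: float = 0.6, tau: float = 5.0,
                       v_thresh: float = 1.0) -> List[float]:
    """Leaky integrate-and-fire neuron fed by several sensors.

    `events` holds (time_ms, sensor_address) pairs. All values here are
    hypothetical. Returns the times at which the neuron spikes.
    """
    v = 0.0          # membrane potential
    last_t = None    # time of the previous input spike
    out: List[float] = []
    for t, _address in sorted(events):
        if last_t is not None:
            # Exponential leak since the previous input spike.
            v *= math.exp(-(t - last_t) / tau)
        v += weight  # each input spike adds a fixed synaptic kick
        last_t = t
        if v >= v_thresh:
            out.append(t)
            v = 0.0  # reset after an output spike
    return out


together = coincidence_neuron([(0.0, 1), (1.0, 2)])   # 1 ms apart
apart = coincidence_neuron([(0.0, 1), (20.0, 2)])     # 20 ms apart
```

With these values the neuron fires for the pair of spikes 1 ms apart and stays silent for the pair 20 ms apart. Note that this simplified neuron does not check which sensor a spike came from, so two closely spaced spikes from the same sensor would also trigger it.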

These networks can be built in neuromorphic mixed-mode subthreshold CMOS technology, which emulates SNNs using the same technological processes as traditional chips while exploiting an extremely low-power region of the transistor’s behaviour. By embedding the networks directly in silicon using this strategy, we aim for low power consumption, enabled by the neurons’ ability to consume energy only when active and to interface directly with event-driven data.

Conclusion

Our goal is to equip autonomous agents, such as robots or prostheses, with the sense of touch, using the neuromorphic approach for both sensing and processing. We will employ event-driven sensors to capture temporal information in stimuli and encode it in spikes, and spiking neural networks to process the data in real time with low power consumption. The network will be implemented in silicon technology, delivering the first neuromorphic chip for touch.

Together, these two paradigms, event-driven sensing and spiking neural processing, will result in a system capable of exploring the environment with bioinspired and efficient architectures. This combined approach can greatly enhance the capabilities of autonomous agents.

Link:

[L1] https://neutouch.eu/

References:

[1] C. Bartolozzi, L. Natale, F. Nori, G. Metta: “Robots with a sense of touch”, Nature Materials 15, 921–925 (2016).

[2] A. Dabbous, et al.: “Artificial Bio-inspired Tactile Receptive Fields for Edge Orientation Classification”, ISCAS (2021) [in press].

[3] M. Mastella, E. Chicca: “A Hardware-friendly Neuromorphic Spiking Neural Network for Frequency Detection and Fine Texture Decoding”, ISCAS (2021) [in press].

Please contact:

Ella Janotte, Event Driven Perception for Robotics, Italian Institute of Technology, iCub facility, Genoa, Italy.

Michele Mastella, BICS Lab, Zernike Inst Adv Mat, University of Groningen, Netherlands.