by Dave Raggett (W3C/ERCIM)

Human-like general-purpose AI will dramatically change how we work, how we communicate, and how we see and understand ourselves. It is key to the prosperity of post-industrial societies as human populations shrink to a sustainable level. It will further enable us to safely exploit the resources of the solar system, given the extremely harsh environment of outer space.

Human-like AI seeks to mimic human memory, reasoning, feelings and learning, inspired by decades of advances across the cognitive sciences, and by over 500 million years of neural evolution since the emergence of multicellular life. Human-like AI can be realised on conventional computer hardware, complementing deep learning with artificial neural networks, and can be exploited both for practical applications and as a source of ideas for further research.

The aim is to create cognitive agents that are knowledgeable, general-purpose, collaborative, empathic, sociable and trustworthy. Metacognition and past experience will be used for reasoning about new situations. Continuous learning will be driven by curiosity about the unexpected. Cognitive agents will be self-aware with respect to their current state, goals and actions, as well as being aware of the beliefs, desires and intents of others (i.e. embodying a theory of mind). Cognitive agents will be multilingual, interacting with people in their own languages.

Human-like AI will catalyse changes in how we live and work, supporting human-machine collaboration to boost productivity, either as disembodied agents or by powering robots to help us in the physical world and beyond. Human-like AI will help people with cognitive or physical disabilities – which in practice means most of us when we are old.

We sometimes hear claims about existential risks from strong AI as it learns to improve itself, resulting in a superintelligence that rapidly evolves and no longer cares about human welfare. To avoid this fate, we need to focus on responsible AI that learns and applies human values. Learning from human behaviour avoids the peril of unforeseen effects from prescribed rules of behaviour.

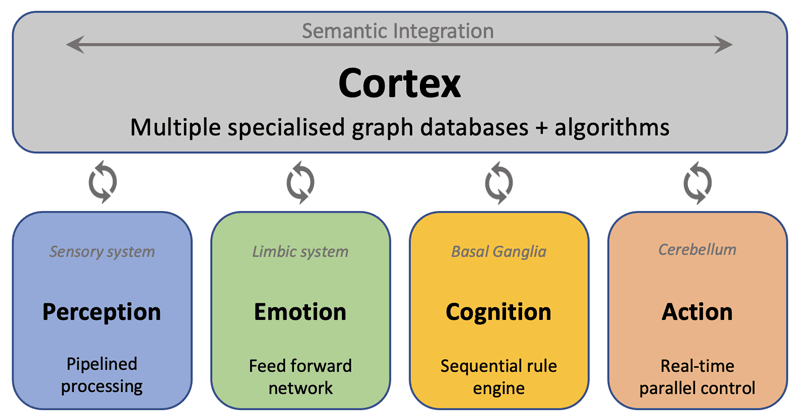

Human-like AI is being incubated in the W3C Cognitive AI Community Group [L1], along with a growing suite of web-based demos, including counting, decision trees, industrial robots, smart homes, natural language, self-driving cars, a browser sandbox and test suite, and an open-source JavaScript chunks library. The approach is based upon the cognitive architecture depicted in Figure 1:

- Perception interprets sensory data and places the resulting models into the cortex. Cognitive rules can set the context for perception and direct attention as needed. Events are signalled by queuing chunks to cognitive buffers, triggering rules that describe the appropriate behaviour. A prioritised first-in, first-out queue is used to avoid missing closely spaced events.

- Emotion is about cognitive control and prioritising what’s important. The limbic system provides rapid assessment of situations without the delays incurred in deliberative thought. This contrast between fast, intuitive assessment and slow, deliberative reasoning is sometimes referred to as System 1 versus System 2.

- Cognition is slower, more deliberate thought, involving the sequential execution of rules to carry out particular tasks, including the means to invoke graph algorithms in the cortex and operations involving other cognitive systems. Thought can be expressed at many different levels of abstraction.

- Action carries out actions initiated under conscious control, leaving the mind free to work on other things while they are performed. An example is playing a musical instrument, where muscle memory is needed to control your finger placements, as thinking explicitly about each finger would be far too slow.

Figure 1: Cognitive architecture with multiple cognitive circuits equivalent to a shared blackboard.
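The article does not say how the prioritised queue is built. As a minimal sketch, assuming each event chunk carries a numeric priority (lower means more urgent, a convention chosen here) and that chunks can be modelled as plain Python dictionaries, a binary heap keyed on (priority, arrival order) keeps urgent events first while preserving first-in, first-out order among events of equal priority. `EventQueue` and its methods are hypothetical names, not part of the Community Group's chunks library.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(order=True)
class _Entry:
    priority: int  # lower number = more urgent (assumed convention)
    seq: int  # arrival order, so equal priorities stay first-in, first-out
    chunk: dict = field(compare=False)  # the event chunk itself is never compared


class EventQueue:
    """Hypothetical prioritised FIFO queue of event chunks awaiting a cognitive buffer."""

    def __init__(self) -> None:
        self._heap: List[_Entry] = []
        self._arrivals = itertools.count()

    def push(self, chunk: dict, priority: int = 0) -> None:
        heapq.heappush(self._heap, _Entry(priority, next(self._arrivals), chunk))

    def pop(self) -> Optional[dict]:
        # Next event to place in the buffer, or None if nothing is waiting.
        return heapq.heappop(self._heap).chunk if self._heap else None

    def __len__(self) -> int:
        return len(self._heap)


events = EventQueue()
events.push({"type": "sound", "source": "door"})
events.push({"type": "touch", "where": "hand"})
events.push({"type": "alarm", "level": "high"}, priority=-1)
order = [events.pop()["type"] for _ in range(3)]
print(order)  # ['alarm', 'sound', 'touch']
```

Queuing means that an event arriving before the previous one has been handled waits its turn instead of being lost.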

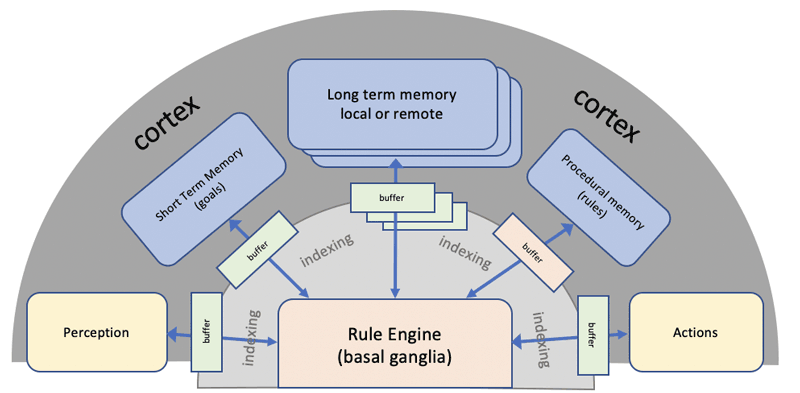

Figure 2: Cortico-basal ganglia circuit for cognition.

Zooming in on cognition, we have the architecture shown in Figure 2, which derives from work by John Anderson on ACT-R [L2, 1]. The buffers each hold a single chunk, where each chunk is equivalent to the concurrent firing pattern of the bundle of nerve fibres connecting to a given cortical region. This works analogously to HTTP, with buffers acting as HTTP clients and the cortical modules as HTTP servers. The rule engine sequentially selects rules matching the buffers and either updates the buffers directly or invokes cortical operations that asynchronously update them.
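The buffer-and-module cycle can be illustrated with a toy simulation. This is a sketch under simplifying assumptions, not ACT-R or the Community Group's rule engine: rules are Python functions, the engine fires the first matching rule rather than resolving conflicts by utility, and a module's reply to a request is delivered one cycle later to stand in for asynchronous operation. `Engine`, `Module`, `Rule` and the `facts` buffer are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Chunk = Dict[str, str]


class Module:
    """Hypothetical cortical module that answers requests, like an HTTP server."""

    def __init__(self, facts: List[Chunk]) -> None:
        self.facts = facts

    def recall(self, pattern: Chunk) -> Optional[Chunk]:
        # Return the first stored chunk matching every property in the pattern.
        for fact in self.facts:
            if all(fact.get(k) == v for k, v in pattern.items()):
                return fact
        return None


@dataclass
class Rule:
    name: str
    condition: Callable[[Dict[str, Optional[Chunk]]], bool]
    action: Callable[["Engine"], None]


class Engine:
    def __init__(self, rules: List[Rule], modules: Dict[str, Module]) -> None:
        self.rules = rules
        self.modules = modules
        # A goal buffer plus one buffer per module; each holds at most one chunk.
        self.buffers: Dict[str, Optional[Chunk]] = {"goal": None, **{m: None for m in modules}}
        self._in_flight: List[Tuple[str, Chunk]] = []

    def request(self, module: str, pattern: Chunk) -> None:
        # Like an HTTP client: send now, receive the reply on a later cycle.
        self._in_flight.append((module, pattern))

    def step(self) -> Optional[str]:
        # Deliver replies to requests made during the previous cycle.
        for module, pattern in self._in_flight:
            self.buffers[module] = self.modules[module].recall(pattern)
        self._in_flight.clear()
        # Fire the first rule whose condition matches the current buffers.
        for rule in self.rules:
            if rule.condition(self.buffers):
                rule.action(self)
                return rule.name
        return None


def ask(engine: Engine) -> None:
    goal = engine.buffers["goal"]
    engine.request("facts", {"country": goal["country"]})
    engine.buffers["goal"] = {**goal, "state": "waiting"}


def answer(engine: Engine) -> None:
    goal = engine.buffers["goal"]
    capital = engine.buffers["facts"]["capital"]
    engine.buffers["goal"] = {**goal, "state": "done", "capital": capital}


rules = [
    Rule("ask", lambda b: b["goal"]["state"] == "start", ask),
    Rule("answer", lambda b: b["goal"]["state"] == "waiting" and b["facts"] is not None, answer),
]
engine = Engine(rules, {"facts": Module([{"country": "France", "capital": "Paris"},
                                         {"country": "Italy", "capital": "Rome"}])})
engine.buffers["goal"] = {"country": "Italy", "state": "start"}
trace = [engine.step() for _ in range(3)]
print(trace, engine.buffers["goal"]["capital"])  # ['ask', 'answer', None] Rome
```

The trace shows the HTTP-like round trip: the `ask` rule sends a request and moves on, and the `answer` rule can only fire once the reply has landed in the `facts` buffer.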

A lightweight syntax has been devised for chunks, which are collections of properties whose values are literals or the names of other chunks; chunks are thus equivalent to n-ary relationships in RDF. Chunks combine symbolic and sub-symbolic approaches, bringing together graphs, statistics, rules and algorithms. Chunk modules support stochastic recall analogous to web search. Chunks enable explainable AI/ML, and learning from smaller datasets by exploiting prior knowledge and past experience.
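The article does not spell out the chunk syntax, so the sketch below represents chunks as Python objects rather than parsing the Community Group's notation. It assumes each chunk has a type, an identifier and a set of properties whose values are literals or the identifiers of other chunks, which lets a single `sale` chunk hold a four-way relationship that RDF would express with several triples about an intermediate node. For stochastic recall it borrows an ACT-R-style idea (an assumption, not confirmed by the article): each matching chunk's activation is perturbed with Gaussian noise and the highest score wins, so frequently recalled chunks usually, but not always, come first, loosely like ranked search results. `ChunkGraph`, `strength` and `noise` are hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, Optional, Union

Value = Union[str, int, float]  # a literal, or the id of another chunk


@dataclass
class Chunk:
    type: str
    id: str
    props: Dict[str, Value] = field(default_factory=dict)
    strength: float = 0.0  # hypothetical activation, raised each time the chunk is recalled


class ChunkGraph:
    def __init__(self, noise: float = 0.25, seed: Optional[int] = None) -> None:
        self.chunks: Dict[str, Chunk] = {}
        self.noise = noise
        self.rng = random.Random(seed)

    def add(self, chunk: Chunk) -> None:
        self.chunks[chunk.id] = chunk

    def recall(self, type_: str, pattern: Dict[str, Value]) -> Optional[Chunk]:
        candidates = [
            c for c in self.chunks.values()
            if c.type == type_ and all(c.props.get(k) == v for k, v in pattern.items())
        ]
        if not candidates:
            return None
        # Stochastic choice: activation plus Gaussian noise, so strong matches usually win.
        best = max(candidates, key=lambda c: c.strength + self.rng.gauss(0.0, self.noise))
        best.strength += 1.0  # recalled chunks become easier to recall next time
        return best


graph = ChunkGraph(noise=0.0)  # zero noise makes recall deterministic for this demo
graph.add(Chunk("person", "p1", {"name": "Mary"}))
graph.add(Chunk("person", "p2", {"name": "John"}))
# One chunk records a four-way relationship: seller, buyer, item and price.
graph.add(Chunk("sale", "s1", {"seller": "p1", "buyer": "p2", "item": "bicycle", "price": 120}))

sale = graph.recall("sale", {"item": "bicycle"})
buyer = graph.chunks[str(sale.props["buyer"])].props["name"]  # follow the link to another chunk
print(buyer)  # John
```

Setting `noise` above zero makes repeated recalls among equally matching chunks vary from call to call, while the `strength` boost favours chunks that have been useful before.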

Symbolic AI suffers from the bottleneck caused by reliance on manual knowledge engineering. To overcome this challenge, human-like AI will mimic how children learn in the classroom and playground. Natural language is key to human-agent collaboration, including teaching agents new skills. Human languages are complex yet easily learned by children [2], and we need to emulate that for scalable AI. Semantics is represented as chunk-based knowledge graphs, in contrast to computational linguistics and deep learning, which use large-scale statistics as a weak surrogate for meaning. Human-like AI doesn’t reason with logic or statistical models, but rather with mental models of examples and the use of metaphors and analogies, taking inspiration from human studies by Philip Johnson-Laird [L3, 3].
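Johnson-Laird's mental model theory holds that a conclusion follows necessarily if it is true in every model of the premises, and is merely possible if it is true in at least one. The toy below applies that principle to simple spatial premises of the kind used in his studies. It is an illustration under stated assumptions, not the article's method: only "left of" and "right of" are handled, the conclusion must mention entities from the premises, and models are found by brute-force enumeration of orderings, whereas people are thought to build one model at a time and look for counterexamples. The function names are hypothetical.

```python
from itertools import permutations
from typing import List, Tuple

# A premise such as ("A", "left of", "B"); only "left of" and "right of" are handled.
Premise = Tuple[str, str, str]
Model = Tuple[str, ...]  # entities in left-to-right order


def holds(model: Model, premise: Premise) -> bool:
    x, relation, y = premise
    i, j = model.index(x), model.index(y)
    return i < j if relation == "left of" else i > j


def models(premises: List[Premise]) -> List[Model]:
    """Every left-to-right arrangement consistent with all the premises."""
    entities = sorted({e for x, _, y in premises for e in (x, y)})
    return [m for m in permutations(entities) if all(holds(m, p) for p in premises)]


def evaluate(premises: List[Premise], conclusion: Premise) -> str:
    found = models(premises)
    if not found:
        return "inconsistent premises"
    verdicts = [holds(m, conclusion) for m in found]
    if all(verdicts):
        return "necessary"  # true in every model
    if any(verdicts):
        return "possible"  # true in some model, false in another
    return "impossible"  # false in every model


print(evaluate([("A", "right of", "B"), ("C", "left of", "B")], ("C", "left of", "A")))
# necessary
print(evaluate([("A", "left of", "C"), ("B", "left of", "C")], ("A", "left of", "B")))
# possible
```

The second query is only possible because the premises leave the order of A and B open: both A, B, C and B, A, C are models.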

Humans are good at mimicking each other’s behaviour. For example, babies learn to smile socially at the age of 6 to 12 weeks. Language involves a similar process of mimicry, with shared rules and statistics for generation and understanding. Work on cognitive natural language processing focuses on the end-to-end communication of meaning, with constrained working memory and incremental processing, avoiding backtracking through the concurrent processing of syntax and semantics.
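The idea of committing to an interpretation word by word, using meaning to resolve ambiguity so that no backtracking is needed, can be shown with a deliberately tiny example. Everything here is hypothetical: the lexicon, the `INSTRUMENTS` table of world knowledge and the `understand` function are illustrative inventions, not the Community Group's NLP work. When a prepositional phrase such as "with the telescope" completes, the parser immediately consults the knowledge table to attach it either to the verb as an instrument or to the most recent noun as a modifier, and a bounded `deque` stands in for constrained working memory.

```python
from collections import deque
from typing import Dict

# Hypothetical toy lexicon: word -> syntactic category.
LEXICON = {"the": "det", "a": "det", "girl": "noun", "man": "noun", "dog": "noun",
           "telescope": "noun", "hat": "noun", "saw": "verb", "with": "prep"}

# Hypothetical world knowledge consulted during parsing: instruments usable with a verb.
INSTRUMENTS = {"saw": {"telescope"}}


def understand(sentence: str, capacity: int = 4) -> Dict[str, object]:
    memory: deque = deque(maxlen=capacity)  # bounded working memory of recent nouns
    meaning: Dict[str, object] = {}
    pending_prep = False
    for word in sentence.lower().split():
        category = LEXICON[word]  # unknown words raise KeyError in this toy
        if category == "det":
            continue  # determiners carry no content here
        if category == "prep":
            pending_prep = True
            continue
        if category == "verb":
            meaning["action"] = word
            continue
        # A noun: if it completes a prepositional phrase, attach it now, once and for all.
        if pending_prep:
            pending_prep = False
            if word in INSTRUMENTS.get(str(meaning.get("action", "")), set()):
                meaning["instrument"] = word  # attach to the verb
            elif memory:
                meaning.setdefault("modifiers", {})[memory[-1]] = word  # attach to last noun
            continue
        meaning["agent" if "action" not in meaning else "patient"] = word
        memory.append(word)
    return meaning


print(understand("the girl saw the man with the telescope"))
print(understand("the girl saw the man with a hat"))
```

The two sentences share the same structure up to the last word, yet each is resolved in a single left-to-right pass because the semantic check happens at the moment of attachment.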

Work on human-like AI is still in its infancy but is already providing fresh insights for building AI systems, combining ideas from multiple disciplines. It is time to give computers a human touch!

An expanded version of this article is available [L4]. The described work has been supported by the Horizon 2020 project Boost 4.0 (big data in smart factories).

Links:

[L1] https://www.w3.org/community/cogai/

[L2] http://act-r.psy.cmu.edu/about/

[L3] https://www.pnas.org/content/108/50/19862

[L4] https://www.w3.org/2021/Human-like-AI-article-raggett-long.pdf

[L5] https://www.w3.org/2021/digital-transformation-2021-03-17.pdf

References:

[1] J. R. Anderson: “How Can the Human Mind Occur in the Physical Universe?”, Oxford University Press, 2007, https://doi.org/10.1093/acprof:oso/9780195324259.001.0001

[2] W. O’Grady: “How Children Learn Language”, Cambridge University Press, 2012, https://doi.org/10.1017/CBO9780511791192

[3] P. Johnson-Laird: “How We Reason”, Oxford University Press, 2012, https://doi.org/10.1093/acprof:oso/9780199551330.001.0001

Please contact:

Dave Raggett, W3C/ERCIM