by Timo Oess and Heiko Neumann (Ulm University)

Audition equips us with a 360-degree, far-reaching sense that enables rough but fast target detection in the environment. However, it lacks the precision of vision when accurate localisation is required. Integrating signals from both modalities into a multisensory audio-visual signal leads to concise and robust perception of the environment. We present a brain-inspired neuromorphic modelling approach that integrates auditory and visual signals coming from neuromorphic sensors to perform multisensory stimulus localisation in real time.

Multimodal signal integration improves perceptual robustness and fault tolerance, increasing the information gain compared to merely superimposing input streams. In addition, if one sensor fails, the other can compensate for the lost information. In biology, the integration of sensory signals from different modalities is a crucial survival factor across species, and it has been demonstrated that such integration is performed in a Bayesian optimal fashion. However, multimodal integration is not trivial: signals from different modalities are typically represented in distinct reference frames, and these frames must be aligned to relate inferred locations to the spatial surround. An example is the integration of audio and visual signals. The auditory space is computed from level or time differences of binaural input, whereas the visual space is derived from positions related to the sensory receptor surfaces (retina, camera). Robust alignment of such distinct spaces is a key step in exploiting the information gain provided by multisensory integration.
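To make the notion of Bayesian optimal integration concrete, the following minimal sketch shows the textbook reliability-weighted combination of two independent Gaussian location estimates under a flat prior. It is an illustration only, not the neural model described in this article; the function name `fuse_gaussian_cues` and all numerical values are assumptions chosen for the example.

```python
def fuse_gaussian_cues(mu_a, var_a, mu_v, var_v):
    """Fuse an auditory and a visual location estimate (in degrees).

    Assumes both cues are independent Gaussian estimates of the same
    source and that the prior over locations is flat. Each cue is
    weighted by its reliability, i.e. its inverse variance.
    """
    rel_a = 1.0 / var_a          # auditory reliability
    rel_v = 1.0 / var_v          # visual reliability
    w_a = rel_a / (rel_a + rel_v)
    w_v = 1.0 - w_a
    mu = w_a * mu_a + w_v * mu_v
    var = 1.0 / (rel_a + rel_v)  # never larger than either input variance
    return mu, var


# Hypothetical example: a coarse auditory estimate and a sharp visual one.
mu, var = fuse_gaussian_cues(mu_a=10.0, var_a=64.0, mu_v=4.0, var_v=4.0)
print(f"fused estimate: {mu:.2f} deg, variance: {var:.2f}")

# If one sensor becomes unreliable (very large variance), the fused
# estimate falls back to the remaining cue.
mu_fail, _ = fuse_gaussian_cues(mu_a=10.0, var_a=1e12, mu_v=4.0, var_v=4.0)
print(f"with failed auditory cue: {mu_fail:.2f} deg")
```

The fused variance is smaller than that of either cue alone, which is the information gain referred to above, and the weighting automatically shifts towards the intact modality when the other degrades.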

In a joint project [L1] between the Institutes of Applied Cognitive Psychology and Neural Information Processing at Ulm University, we investigated the processing of auditory and visual input streams for object localisation. The aim was to develop biologically inspired neural network models for multimodal object localisation based on optimal visual-auditory integration as seen in humans and animals. In particular, we developed models of neuron populations and their mutual interactions in different brain areas that are involved in visual and auditory object localisation. These models were behaviourally validated and, in a subsequent step, implemented on IBM’s brain-inspired neurosynaptic chip TrueNorth [3]. There, they ran in real time and controlled a robotic test platform to solve target-driven orientation tasks.

In contrast to vision with its topographic representation of space, auditory space is represented tonotopically, with incoming sound signals decomposed into their frequency components. Horizontal sound source positions therefore cannot be assigned directly to a location relative to the head direction. Instead, the position is computed from different cues, caused by (i) the temporal and intensity differences between the signals arriving at the left and right ears and (ii) the frequency modulation induced by the head and the shape of the ears. Thus, to spatially localise a sound source, an agent needs to process auditory signals over a cascade of several stages. We modelled these stages utilising biologically plausible neural mechanisms that facilitate subsequent deployment on neuromorphic hardware. In particular, we constructed a model for binaural integration of sound signals that encodes the interaural level difference of signals from the left and right ear [1]. The model incorporates synaptic adaptation to dynamically optimise estimations of sound sources in the horizontal plane. The elevation of the source in the vertical plane is estimated separately by a model that demonstrates performance enhancement by binaural signal integration. These estimates jointly define a 2D map of auditory space. This map needs to be calibrated to spatial positions in the environment of the agent. Such a calibration is accomplished by learning a registered auditory position map, guided by visual representations in retinal coordinates. Connection weights are adapted according to correlated activities, with learning triggered by a third factor. This yields a map alignment that is robust yet sufficiently flexible to adapt to altered sensory inputs.
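The idea of visually guided, third-factor-gated map calibration can be sketched with a toy example. The code below is not the published model: it assumes one-hot activity on small 1D maps, a fixed (hypothetical) offset between the auditory and the visual map, and a gated Hebbian rule with postsynaptically gated decay. All names (`update`, `train`, `decode`) and parameter values are illustrative assumptions.

```python
N = 8  # number of positions in each 1D map (hypothetical size)


def one_hot(index, size=N):
    """Population activity with a single active unit."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def update(weights, aud, vis, gate, lr=0.5):
    """Three-factor update: presynaptic (auditory) activity times
    postsynaptic (visual) activity, enabled only when the gating
    signal (third factor) is non-zero."""
    for v in range(len(weights)):
        for a in range(len(aud)):
            weights[v][a] += lr * gate * vis[v] * (aud[a] - weights[v][a])


def train(weights, shift, epochs=5):
    """Present every position; the auditory map is offset by `shift`."""
    for _ in range(epochs):
        for p in range(N):
            update(weights, one_hot((p + shift) % N), one_hot(p), gate=1.0)


def decode(weights, aud):
    """Read out the registered (visually aligned) position."""
    drive = [sum(w * x for w, x in zip(row, aud)) for row in weights]
    return max(range(len(drive)), key=drive.__getitem__)


weights = [[0.0] * N for _ in range(N)]
train(weights, shift=2)   # initial calibration
print([decode(weights, one_hot((p + 2) % N)) for p in range(N)])

train(weights, shift=5)   # altered input, e.g. displaced microphones
print([decode(weights, one_hot((p + 5) % N)) for p in range(N)])
```

In this sketch the weights change only while the gate is active, so the learned alignment stays stable without the guiding signal, yet re-aligns when the auditory input is systematically altered and training resumes.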

Such calibrated internal world representations establish accurate and stable multimodal object representations. We modelled multisensory integration neurons that receive excitatory and inhibitory inputs from unimodal auditory and visual neurons, respectively, as well as feedback from cortex [2]. Such feedback projections facilitate multisensory integration (MSI) responses and lead to commonly observed properties like inverse effectiveness, within-modality suppression, and the spatial principle of neural activity in multisensory neurons. In our model, these properties emerge from network dynamics without specific receptive field tuning. A sketch of the functionality of the circuit is shown in Figure 1. We have further investigated how multimodal signals are integrated and how cortical modulation signals affect this integration. Our modelling investigations demonstrate that near-optimal Bayesian integration of visual and auditory signals can be accomplished with a significant contribution from active cortical feedback projections. In conclusion, the results shed new light on how recurrent feedback processing supports near-optimal perceptual inference in cue integration by adaptively enhancing the coherence of representations.
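Inverse effectiveness means that weak unimodal inputs yield a proportionally larger multisensory gain than strong ones. The sketch below illustrates this with the commonly used multisensory enhancement index and a single generic saturating unit. It is deliberately much simpler than the circuit of Figure 1 (no inhibition, no cortical feedback); the response function, its parameters and the input values are assumptions made for illustration.

```python
def response(drive, half_sat=1.0, exponent=2.0):
    """Saturating (Naka-Rushton style) response of a generic unit.
    This nonlinearity is an assumption, not the neuron model of [2]."""
    d = max(drive, 0.0) ** exponent
    return d / (d + half_sat ** exponent)


def enhancement_percent(aud_drive, vis_drive):
    """Multisensory enhancement index in percent: gain of the combined
    response over the best unimodal response. Requires at least one
    non-zero drive, otherwise the best unimodal response is zero."""
    best_unimodal = max(response(aud_drive), response(vis_drive))
    combined = response(aud_drive + vis_drive)
    return 100.0 * (combined - best_unimodal) / best_unimodal


# Hypothetical weak and strong stimulus pairs.
weak = enhancement_percent(0.1, 0.1)
strong = enhancement_percent(2.0, 2.0)
print(f"weak inputs:   {weak:.1f} % enhancement")
print(f"strong inputs: {strong:.1f} % enhancement")
```

Because the unit saturates, adding a second strong input changes the response only slightly, whereas two weak inputs together produce a response several times larger than either alone.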

![Figure 1: Model Architectures. Left panel, architecture of the multisensory integration model. Blue lines indicate excitatory connections, green lines indicate modulatory connections, and red lines indicate inhibitory connections. The green box is the modulatory cortical signal, which is elaborated in the grey box. Filled circles represent model neurons, hollow circles indicate inputs to the model. Letters indicate the names of neurons and inputs. Right panel, arrangement of the model architecture on the TrueNorth neurosynaptic chip. Note the similar hierarchy of the architectures with differences only in the fine structure. Adapted from [2], Creative Commons license 4.0 (https://creativecommons.org/licenses/by/4.0/)](/images/stories/EN125/oess1.png)

Figure 1: Model Architectures. Left panel, architecture of the multisensory integration model. Blue lines indicate excitatory connections, green lines indicate modulatory connections, and red lines indicate inhibitory connections. The green box is the modulatory cortical signal, which is elaborated in the grey box. Filled circles represent model neurons, hollow circles indicate inputs to the model. Letters indicate the names of neurons and inputs. Right panel, arrangement of the model architecture on the TrueNorth neurosynaptic chip. Note the similar hierarchy of the architectures with differences only in the fine structure. Adapted from [2], Creative Commons license 4.0 (https://creativecommons.org/licenses/by/4.0/)

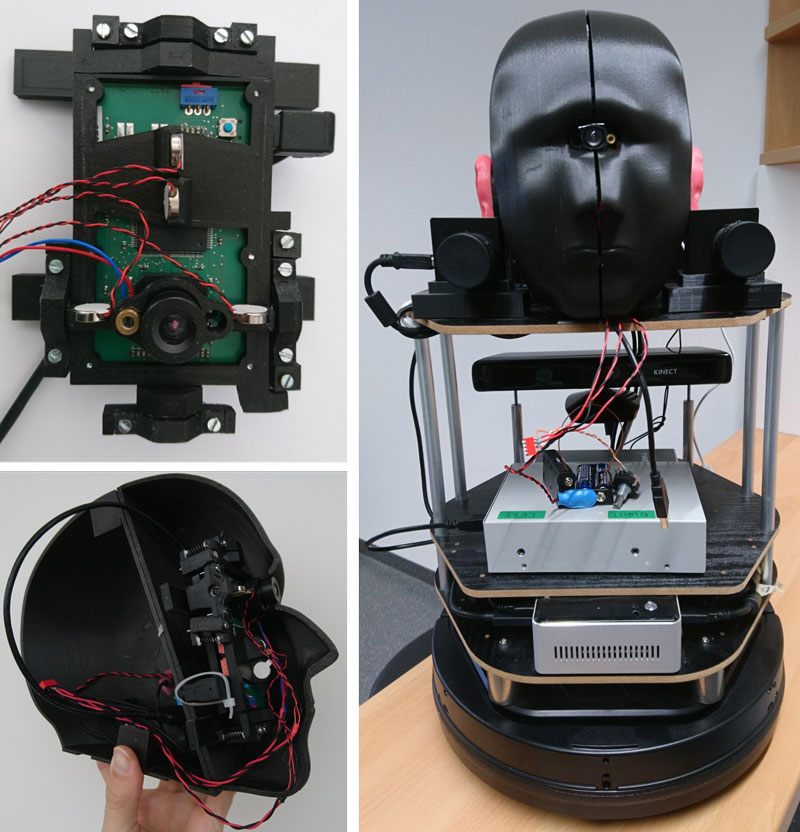

Figure 2: Left top, eDVS mounted in a vibrational frame driven by vibration motors to induce micro saccades for detection of static objects. Left bottom, left side of the 3D-printed head with eDVS frame and microphone in the ear channel. Right, complete head mounted on a robotic platform.

Having analysed the functional properties of our models for audio object localisation and multisensory integration, we deployed the neural models on a neuromorphic robotic platform (Figure 2). The platform is equipped with bio-inspired sensors (DVS and bio-inspired gamma-tone filters) for energy-efficient processing of visual and audio signals, respectively, and a neuromorphic processing unit. We defined an event-based processing pipeline from sensory perception up to stages of subcortical multisensory integration. Performance evaluations on real-world inputs demonstrate that the neural framework runs in real time and is robust against interference, making it suitable for robotic applications with low energy consumption.
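A dynamic vision sensor reports brightness changes rather than frames, which is why the sensor in Figure 2 is vibrated to detect static objects. The following sketch illustrates this with a strongly simplified event model: a pixel emits an ON or OFF event when its log intensity changes by more than a threshold. It is not the eDVS interface or the processing pipeline used on the platform; the function `dvs_events`, the threshold and the 1D scene are hypothetical.

```python
import math


def dvs_events(reference, frame, threshold=0.2):
    """Return (pixel, polarity) events for pixels whose log intensity
    changed by at least `threshold` relative to the reference.
    Simplified: a real sensor works asynchronously per pixel and
    updates each pixel's reference after every event."""
    events = []
    for i, (ref, new) in enumerate(zip(reference, frame)):
        delta = math.log(new) - math.log(ref)
        if abs(delta) >= threshold:
            events.append((i, 1 if delta > 0 else -1))
    return events


# Hypothetical 1D scene: a static bright bar on a dark background.
scene = [1.0, 1.0, 5.0, 5.0, 1.0, 1.0]

# Without motion, a static scene produces no events.
print(dvs_events(scene, scene))

# A one-pixel shift of the image (as induced by a micro saccade)
# produces events at the edges of the bar.
shifted = scene[-1:] + scene[:-1]
print(dvs_events(scene, shifted))
```

Only the edges of the bar generate events after the shift, so the downstream network receives sparse input, which is in line with the low-energy, event-based processing described above.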

Further evaluations of this platform are planned, including an investigation of the model's ability to react flexibly to altered sensory inputs, e.g., after modifying the position of the microphones. Attention mechanisms will additionally enable selective enhancement of multisensory signals during active search. A deeper understanding of such principles, and testing their function and behaviour on robots, provides the basis for developing advanced systems that self-organise stable orientation, localisation, and recognition performance.

Link:

[L1] https://www.uni-ulm.de/in/neuroinformatik/forschung/schwerpunkte/va-morph/

References:

[1] T. Oess, M. O. Ernst, H. Neumann: “Computational principles of neural adaptation for binaural signal integration”, PLoS Comput Biol (2020) 16(7): e1008020. https://doi.org/10.1371/journal.pcbi.1008020

[2] T. Oess, et al.: “From near-optimal Bayesian integration to neuromorphic hardware: a neural network model of multisensory integration”, Front Neurorobot (2020) 14:29. doi: 10.3389/fnbot.2020.00029

[3] P. A. Merolla, et al.: “A million spiking-neuron integrated circuit with a scalable communication network and interface”, Science (2014) 345(6197): 668-673.

Please contact:

Timo Oess, Heiko Neumann

Dept. Applied Cognitive Psychology; Inst. for Neural Information Processing, Ulm University, Germany

{timo.oess | heiko.neumann}@uni-ulm.de