by Johannes Schemmel (Heidelberg University, Germany)

By incorporating recent results from neuroscience into analogue neuromorphic hardware we can replicate biological learning mechanisms in silicon to advance bio-inspired artificial intelligence.

When it comes to computer systems that must cope with natural data, biology is still vastly ahead of our engineering approaches. Machine learning and artificial intelligence increasingly use neural network technologies inspired by nature, but most of these concepts are founded on our understanding of biology from the 1950s. The BrainScaleS neuromorphic architecture builds on contemporary biology, incorporating the knowledge of modern neuroscience into an electronic emulation of neurons and synapses [1].

Biological mechanisms are transferred directly to analogue electronic circuits, which represent the signals in our brains as closely as the constraints of energy and area efficiency allow. Continuous signals, such as the voltages and currents at cell membranes, are represented directly as they evolve over time [2]. Communication between neurons can be modelled either by faithfully reproducing each action potential or by summarising action potentials as firing rates, as is commonly done in contemporary artificial intelligence (AI).
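To illustrate these two levels of description, the sketch below numerically integrates the textbook leaky integrate-and-fire (LIF) membrane equation in software and then summarises the resulting spike train as a single firing rate. It is a plain simulation, not a model of the BrainScaleS circuits: the time constant, leak, threshold, reset and constant drive are illustrative assumptions, and the neuron circuits described in [1] and [2] model richer dynamics (the AdEx model, for instance, adds adaptation).

```python
def simulate_lif(duration_ms=1000.0, dt_ms=0.1, tau_m_ms=10.0,
                 e_leak_mv=-65.0, v_thresh_mv=-50.0, v_reset_mv=-65.0,
                 drive_mv=20.0):
    """Forward-Euler integration of a leaky integrate-and-fire membrane.

    All parameter values are illustrative assumptions. drive_mv is the
    product R*I of membrane resistance and a constant input current.
    Returns the membrane voltage trace (mV) and the spike times (ms).
    """
    v = e_leak_mv
    trace = []
    spike_times_ms = []
    for step in range(int(round(duration_ms / dt_ms))):
        # tau_m * dV/dt = -(V - E_L) + R*I
        v += (dt_ms / tau_m_ms) * (-(v - e_leak_mv) + drive_mv)
        if v >= v_thresh_mv:
            # Spike-level description: record the action potential, then reset.
            spike_times_ms.append(step * dt_ms)
            v = v_reset_mv
        trace.append(v)
    return trace, spike_times_ms


def firing_rate_hz(spike_times_ms, duration_ms):
    """Rate-level description: spike count per second, timing discarded."""
    return 1000.0 * len(spike_times_ms) / duration_ms


trace, spikes = simulate_lif()
rate = firing_rate_hz(spikes, 1000.0)
print(f"{len(spikes)} spikes, mean rate {rate:.1f} Hz")
```

The voltage trace corresponds to the continuously evolving membrane variable that the analogue circuits represent directly; the single rate value is the kind of summary used in rate-based AI models, and it no longer contains the precise spike times.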

Exploiting the advantages of modern microelectronics, the circuits operate much faster than the natural nervous system. Previous implementations have achieved acceleration factors from 1000 to 100,000 relative to biological real time, dramatically shortening execution times.
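The effect of the acceleration factor is simple arithmetic: the emulation needs the biological duration divided by the acceleration factor. The sketch below assumes a constant factor for the whole experiment and ignores configuration and readout overheads, which add to the real execution time.

```python
def hardware_time_s(biological_time_s: float, acceleration: float) -> float:
    """Wall-clock time needed to emulate a given span of biological time."""
    return biological_time_s / acceleration


ONE_DAY_S = 24 * 60 * 60  # 86,400 s of biological time

for factor in (1_000, 10_000, 100_000):
    print(f"factor {factor:>7,}: {hardware_time_s(ONE_DAY_S, factor):.3f} s")
```

With these numbers, one day of biological activity is emulated in about 86 s at a factor of 1000 and in under one second at a factor of 100,000.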

The BrainScaleS architecture consists of three pillars:

- microelectronics as the physical substrate for the emulation of neurons and synapses

- a software stack controlling the operation of a BrainScaleS neuromorphic system from the user interface down to the individual transistors

- training and learning algorithms supported by dedicated software and hardware throughout the system.

Besides speed, the analogue realisation of the network emulation has further advantages: it consumes much less energy and is easier, and therefore cheaper, to fabricate than comparable digital implementations. It does not need the smallest possible transistor size to achieve high energy efficiency. The analogue circuits are accompanied by specialised microprocessors, which configure them for their assigned tasks and are optimised for the efficient implementation of modern bio-inspired learning algorithms.

Suitable tasks range from fundamental neuroscientific research, like the validation of results from experimental observations in living tissue, to AI applications like data classification and pattern recognition.

The custom-developed microprocessors, called plasticity processing units, have access to a multitude of analogue variables characterising the state of the network. For example, the analogue circuitry continuously measures the temporal correlation of action potentials, which can be used as an input variable for learning algorithms [3]. This combination of analogue and digital hardware-based learning is called hybrid plasticity.
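Hybrid plasticity can be pictured as a loop in which analogue sensors supply correlation measurements and a digital program turns them into weight updates. The rule below is a minimal STDP-style sketch under stated assumptions, not the learning rule used on BrainScaleS: `causal` and `acausal` stand for hypothetical per-synapse readouts of pre-before-post and post-before-pre correlation, and the integer weight range 0 to 63 and the learning rate `eta` are illustrative choices.

```python
def hybrid_plasticity_step(weights, causal, acausal, eta=0.5, w_max=63):
    """One digital update step of a hypothetical correlation-based rule.

    weights: current integer synaptic weights
    causal:  assumed analogue readouts of pre-before-post correlation
    acausal: assumed analogue readouts of post-before-pre correlation
    """
    new_weights = []
    for w, c, a in zip(weights, causal, acausal):
        # Potentiate for causal correlation, depress for acausal correlation.
        dw = eta * (c - a)
        # Round to the integer weight grid and clip to the assumed range.
        new_weights.append(min(max(round(w + dw), 0), w_max))
    return new_weights


weights = hybrid_plasticity_step([10, 10, 62, 1],
                                 causal=[4, 0, 10, 0],
                                 acausal=[0, 4, 0, 10])
print(weights)  # → [12, 8, 63, 0]
```

In this sketch the timing-sensitive measurement is left to the analogue side, while the processor only evaluates the update rule, which can be changed in software without touching the circuits.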

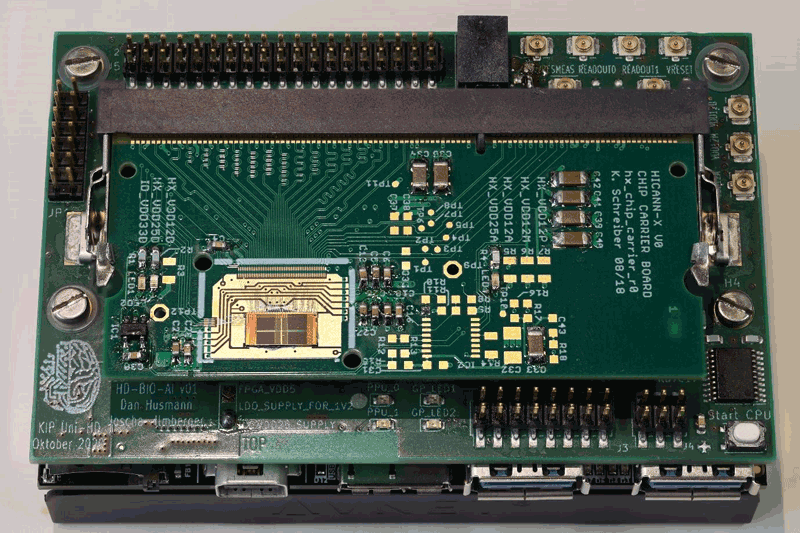

Figure 1: The most recent addition to the BrainScaleS family of analogue accelerated neuromorphic hardware systems is a credit-card-sized mobile platform for EdgeAI applications. The protective lid above the BrainScaleS ASIC has been removed for the photograph.

Figure 1 shows the recently finished BrainScaleS mobile system. A BrainScaleS ASIC is visible on the lower left side of the topmost circuit board. This version is suited for edge-based AI applications. Edge-based AI avoids transferring the full sensor data to a data centre; this enhances data privacy and has the potential to vastly reduce the energy consumed by data centres and the data communication infrastructure. An initial demonstration successfully detects signs of heart disease in EKG traces. The energy used for this task is so low that a wearable medical device based on the BrainScaleS ASIC could run on battery power for years.

The BrainScaleS architecture can be accessed and tested free of charge online at [L1].

This work has received funding from the EC (H2020) under grant agreements 785907 and 945539 (HBP) and from the BMBF (HD-BIO-AI, 16ES1127).

Link:

[L1] https://ebrains.eu/service/neuromorphic-computing/#BrainScaleS

References:

[1] S. A. Aamir et al.: “A Mixed-Signal Structured AdEx Neuron for Accelerated Neuromorphic Cores,” IEEE Trans. Biomed. Circuits Syst., vol. 12, no. 5, pp. 1027–1037, Oct. 2018, doi: 10.1109/TBCAS.2018.2848203.

[2] S. A. Aamir, Y. Stradmann, and P. Müller: “An accelerated LIF neuronal network array for a large-scale mixed-signal neuromorphic architecture,” on Circuits and …, 2018, [Online]. Available: https://ieeexplore.ieee.org/abstract/document/8398542

[3] S. Billaudelle et al.: “Structural plasticity on an accelerated analog neuromorphic hardware system,” Neural Netw., vol. 133, pp. 11–20, Oct. 2020, doi: 10.1016/j.neunet.2020.09.024.

Please contact:

Johannes Schemmel

Heidelberg University, Germany

Björn Kindler

Heidelberg University, Germany