by Alexandru Gheorghiu (University of Edinburgh) and Elham Kashefi (University of Edinburgh, CNRS)

Quantum computers promise to efficiently solve not only problems believed to be intractable for classical computers, but also problems whose solutions are themselves intractable to verify classically. How, then, can one check whether quantum computers are indeed producing correct results? We propose a protocol to answer this question.

Quantum information theory has radically altered our perspective on quantum mechanics. Initially, research into quantum mechanics was devoted to explaining phenomena as they are observed in nature. But the focus then changed to designing and creating quantum systems for computation, information processing, communication, and cryptography, among many other tasks. In particular, it became clear that quantum interference, “the heart of quantum mechanics” as Richard Feynman described it, can be harnessed for quantum computation. Algorithms running on a hypothetical quantum computer would be able to solve problems by creating an interference pattern of different computational branches. This can lead to an exponential saving in the amount of resources used by a quantum algorithm, when compared to the best known classical algorithms. The most famous example is Shor's algorithm for factoring numbers, which runs in polynomial time, whereas the best known classical factoring algorithms require super-polynomial time.

But having a device which can solve problems exponentially faster than classical computers raises an interesting question: can a classical computer efficiently verify the results produced by this device? At first, one might be tempted to dismiss this question and say that as long as each component of a quantum computer has been tested and works correctly, there is no need to worry about the validity of the device's results. However, the point of verification is much more profound. Quantum computers would provide one of the most stringent tests of the laws of quantum mechanics. While numerous experiments involving quantum systems have already been performed to a remarkable precision, they all used relatively few degrees of freedom. When many degrees of freedom are involved, predicting the outcome of the experiment classically requires exponential resources, so it quickly becomes infeasible to calculate the possible results without resorting to crude approximations. Verification of quantum computation would therefore allow for a new test of quantum mechanics, a test in the regime of high complexity.

There is another important reason for verifying quantum computations, having to do with cryptography. The first quantum computers are likely to be servers, to which clients can connect through the Internet. We can already see an instance of this with the recent 5-qubit and 16-qubit devices that IBM has made available to the general public [L1]. When larger devices become available, users will wish to delegate complex computations to them. However, in such a distributed environment, malicious agents might perform man-in-the-middle attacks or compromise the remote server. The clients would then need a means to check the validity of the server's responses. In fact, in this setting, users might also wish to keep their data hidden even from the quantum computer itself, as it might involve sensitive or classified information.

So can one verify quantum computations while also maintaining the secrecy of the client's input? The answer is yes. In fact, the client's ability to keep the input hidden is what makes verification possible. This was shown by Fitzsimons and Kashefi when they proposed a verification protocol based on a cryptographic primitive known as Universal Blind Quantum Computation (UBQC) [1,2]. In UBQC, a client that can prepare single qubits has the ability to delegate quantum computations to a server, in such a way that the server is oblivious to the computation being performed. To do verification, the client can then exploit this property by embedding tests in the computation, referred to as traps, which will fail if the server doesn't perform the correct computation. Of course, the problem with this approach is that the client needs to trust that the qubit preparation device works correctly and produces the specified states. But if, prior to the start of the protocol, a malicious agent corrupts the preparation device, the client could later be tricked into accepting incorrect results.
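The intuition behind traps can be illustrated with a toy counting model. This is only a sketch under simplifying assumptions that are not part of the protocol in [1,2]: each position is either a trap or a computation qubit, the server cannot tell which is which (this is the blindness property), and tampering with any trap is always detected. The function name `escape_probability` and its parameters are hypothetical and chosen for illustration.

```python
from math import comb

def escape_probability(n, traps, tampered):
    """Toy model: probability that a server tampering with `tampered`
    of `n` positions avoids all `traps` hidden trap positions.

    Assumes the tampered positions are chosen without any knowledge of
    where the traps are, and that touching a trap is always detected.
    """
    # Ways to pick only non-trap positions, divided by all ways to pick.
    return comb(n - traps, tampered) / comb(n, tampered)

# One trap among 10 positions: tampering with a single position
# goes unnoticed 90% of the time.
print(escape_probability(10, 1, 1))

# Five traps among 10 positions: tampering with five positions
# escapes detection only if all five happen to be non-traps.
print(escape_probability(10, 5, 5))
```

In this toy model, hiding more traps or repeating the test drives the escape probability down quickly. The actual security analysis of the trap-based protocol is considerably more involved and is given in [2].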

To address this issue, we, together with Dr. Petros Wallden, at the University of Edinburgh, proposed a verification protocol which is device-independent [3]. In other words, the client need not trust any of the quantum devices in the protocol. This is achieved by using a powerful result of Reichardt, Unger and Vazirani, known as rigidity of non-local correlations [4]. Non-local correlations are correlations between responses of non-communicating parties that cannot be reproduced classically, unless the parties are allowed to communicate. Such correlations can be produced, quantum mechanically, through a suitable strategy for measuring certain entangled states. The rigidity result is essentially a converse to this. It states that certain non-local correlations can only be produced by a particular, unique strategy. Observing such correlations between non-communicating devices then implies that the devices are behaving according to this fixed strategy. What is remarkable about this result is that it only requires examining the outputs of the devices, without assuming anything about their inner workings.
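A standard example of such non-local correlations is the violation of the CHSH inequality. The sketch below is illustrative and is not taken from the protocol description above. It compares the best CHSH value reachable by non-communicating classical parties with the value obtained from one particular choice of measurements on a maximally entangled pair of qubits. The function names and the choice of measurement angles are assumptions made for this example.

```python
from itertools import product
from math import cos, sin, pi, sqrt

def observable(theta):
    """Single-qubit observable cos(theta)*Z + sin(theta)*X (outcomes +1/-1)."""
    return [[cos(theta), sin(theta)], [sin(theta), -cos(theta)]]

def correlation(theta_a, theta_b):
    """Expectation value of A (x) B in the Bell state (|00> + |11>)/sqrt(2)."""
    a, b = observable(theta_a), observable(theta_b)
    phi = [1 / sqrt(2), 0.0, 0.0, 1 / sqrt(2)]
    # Entry (i, j) of the Kronecker product A (x) B is a[i//2][j//2] * b[i%2][j%2].
    return sum(phi[i] * a[i // 2][j // 2] * b[i % 2][j % 2] * phi[j]
               for i in range(4) for j in range(4))

# Classical bound: enumerate every deterministic strategy, where each party
# fixes an answer (+1 or -1) for each of its two possible questions.
# Shared randomness only mixes these strategies, so it cannot exceed the maximum.
classical_max = max(
    a0 * b0 + a0 * b1 + a1 * b0 - a1 * b1
    for a0, a1, b0, b1 in product((1, -1), repeat=4)
)

# Quantum strategy: Alice measures Z or X, Bob measures (Z+X)/sqrt(2) or (Z-X)/sqrt(2).
alice = [0.0, pi / 2]
bob = [pi / 4, -pi / 4]
quantum_value = (correlation(alice[0], bob[0]) + correlation(alice[0], bob[1])
                 + correlation(alice[1], bob[0]) - correlation(alice[1], bob[1]))

print(classical_max)   # 2
print(quantum_value)   # about 2.828, i.e. 2*sqrt(2)
```

The gap between 2 and 2√2 is what a verifier can detect from outputs alone. Rigidity goes one step further and says that attaining a value close to the quantum maximum essentially forces the devices to use this strategy.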

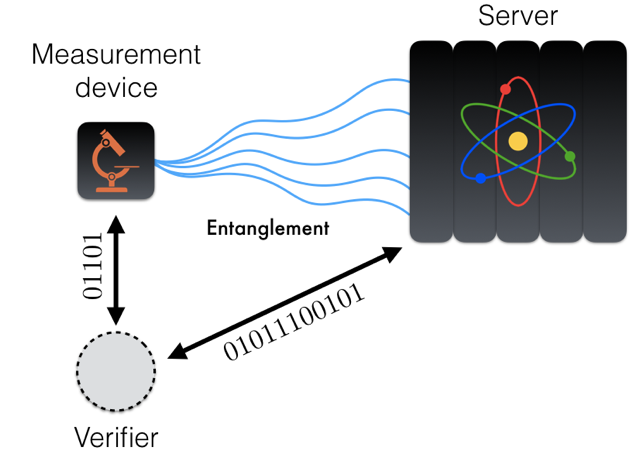

The protocol then works as follows: the client has an untrusted device for measuring single qubits and is also communicating classically with the quantum server. By examining the outputs of the two devices, it follows from the rigidity result that the client can check whether the two devices are sharing entanglement and performing measurements as instructed. If so, the client leverages this and uses the entanglement to remotely prepare single qubit states on the server's side. Finally, the client uses the trap-based scheme of Fitzsimons and Kashefi to delegate and verify an arbitrary quantum computation to the server.

Figure 1: Device-independent verification protocol. The client, or verifier, will instruct both the measurement device and the server to measure entangled qubits. The statistics of these measurements are then checked by the verifier. All communication with the quantum devices is classical.

Verification is an important milestone on the road to scalable quantum computing technology. As we have seen, verification protocols exist even for the most paranoid users. Even so, questions remain regarding their optimality and their ability to tolerate noise and imperfections, among other issues. Addressing these questions is a key challenge for both theorists and experimentalists, and their resolution will shape the landscape of the emerging Quantum Internet.

Link:

[L1] https://kwz.me/hBv

References:

[1] A. Broadbent, J.F. Fitzsimons, E. Kashefi: “Universal blind quantum computation”, in Proc. of FOCS ’09, IEEE Computer Society (2009) 517–526.

[2] J.F. Fitzsimons, E. Kashefi: “Unconditionally verifiable blind quantum computation”, Phys. Rev. A 96 (2017) 012303.

[3] A. Gheorghiu, E. Kashefi, P. Wallden: “Robustness and device independence of verifiable blind quantum computing”, New Journal of Physics 17(8) (2015) 083040.

[4] B.W. Reichardt, F. Unger, U. Vazirani: “Classical command of quantum systems”, Nature 496(7446) (2013) 456.

Please contact:

Elham Kashefi, University of Edinburgh, UK and CNRS, France

Alexandru Gheorghiu, University of Edinburgh, UK