by Oliver Zendel, Markus Murschitz and Martin Humenberger (AIT Austrian Institute of Technology)

Do we really need to drive several million kilometres to test self-driving cars? Can proper test data validation reduce the workload? CV-HAZOP is a first step for finding the answers. It is a guideline for evaluation of existing datasets as well as for designing of new test data. Both with emphasis on maximising test case coverage, diversity, and reduce redundancies.

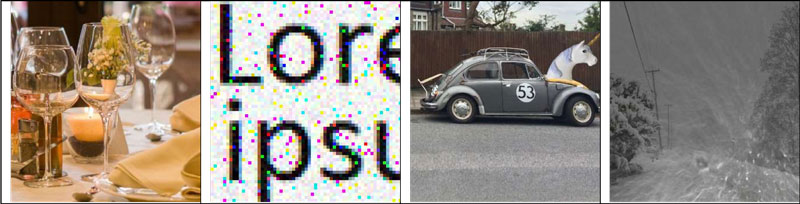

Computational imaging has played a very important role in a series of applications in the past decade. Methods such as pattern recognition, 3D reconstruction, and image understanding are used in industrial production, entertainment, online services, and recently in advanced driver assistance systems and self-driving cars. Many safety-critical systems depend on computer vision (CV) technologies to navigate or manipulate their environment and, owing to the potential risk to human life, require a rigorous safety assessment. Due to the inherent uncertainty of purely visual information, quality assurance is essential in CV and using test data is a common way of achieving this. Figure 1 shows examples for challenging situations for CV algorithms.

Figure 1: Examples for challenging situations for computer vision algorithms (from left to right): transparent objects, noise, unexpected objects, particles in the air causing reflections and blur.

Unfortunately, researchers and engineers often observe that algorithms scoring high in public benchmarks [1] perform rather poorly in real world scenarios. This is because those limited benchmarks are applied to open world problems. While every new proposed algorithm is evaluated based on these benchmark datasets, the datasets themselves rarely have to undergo independent evaluation. This article presents a new way to facilitate this safety assessment process and goes beyond the basic scope of benchmarking: applying a proven method used by the safety community (HAZOP) for the first time to the CV domain. It provides an independent measure that counts the challenges in a dataset that are testing the robustness of CV algorithms. Our main goal is to provide methods and tools to minimise the amount of test data by maximising test coverage. Taking autonomous driving as example, instead of driving one million kilometres to test an algorithm, we systematically select the right 100 km.

Verification and validation for computer vision

A good starting point is software quality assurance, which typically uses two steps to provide objective evidence that a given system fulfils its requirements: verification and validation. Verification checks whether or not the specification was implemented correctly (e.g., no bugs). Validation addresses the question of whether or not the algorithm fulfils the task at hand, e.g., is robust enough under difficult circumstances. Validation is performed using test datasets as inputs and comparing the algorithm’s output against the expected results (ground truth, GT). While general methods for verification can be applied to CV algorithms, the validation part is rather specific. A big problem when validating CV algorithms is the enormous amount of possible input datasets, i.e., test images (e.g., for 640x480 8bit image inputs there are 256640x480 ~ 10739811 different test images). An effective way to overcome this problem is to find equivalence classes, which represent challenges and potential hazards, and to test the system with a representative of each class. Well defined challenging situations, such as all images that contain a glare, are such equivalence classes and certain images are the corresponding representatives (see Figure 1).

CV-HAZOP checklist

Below is a brief explanation of how potential hazards can be found and mapped to entries in the checklist. A more detailed description and an example application is presented in [2].

The identification and collection of CV hazards follows a systematic manner and the results should be applicable to many CV solutions. To create an accepted tool for the validation of CV systems, the process has to be in line with well-established practices from the risk and safety assessment community. The more generic method HAZOP [3] is chosen over FME(C)A and FTA because it is feasible for systems for which little initial knowledge is available. In addition, the concept of guide words adds a strong source of inspiration that the other methods are missing. In the following, we outline the main steps of a HAZOP applied for CV:

1. Model the system: We propose a novel model which is entirely based on the idea of information flow (Figure 2). The common goal of all CV algorithms is the extraction of information from image data. Therefore ‘information’ is chosen to be the central aspect handled by the system.

2. Partition the model into subcomponents, called locations: Our model consists of five locations: light sources, medium, objects, observer, and algorithm.

3. Find appropriate parameters for each location which describe its configuration. Exemplary parameters for the location object are texture, position or reflectance.

4. Define guide words: A guide word is a short expression that triggers the imagination of a deviation from the design / process intent.

5. Assign meanings for each guide word / parameter combination and derive consequences as well as hazards from each meaning to generate an entry in the checklist.

![Figure 2: Information flow within the generic model (image taken from [5]). Light travels from the light source and the objects through the medium to the observer, which generates the image. Finally, the algorithm processes the image and provides the result.](/images/stories/EN108/zendel2.png)

Figure 2: Information flow within the generic model. Light travels from the light source and the objects through the medium to the observer, which generates the image. Finally, the algorithm processes the image and provides the result.

The CV-HAZOP risk analysis was conducted by nine computer vision experts over the course of one year and results in over 900 entries of possible hazards for CV. We provide a catalog of the identified challenges and hazards (e.g., misdetection of obstacles) which is open and can be freely used by anyone. It will be collaboratively extended and improved by the community. We will maintain it and moderate its modifications, but we invite everyone to contribute improvements. The entire CV-HAZOP checklist can be found on www.vitro-testing.com.

References:

[1] D. Scharstein ,R. Szeliski: “A taxonomy and evaluation of dense two-frame stereo correspondence algorithms”, Int J. Computer Vision, 2002.

[2] O. Zendel, et al.: “CV-HAZOP: “Introducing Test Data Validation for Computer Vision”, in Proc. of ICCV, 2015.

[3] T. Kletz: “Hazop & Hazan: Identifying and Assessing Process Industry Hazards”, IChemE, 1999.

Link:

http://www.vitro-testing.com

Please contact:

Oliver Zendel, Markus Murschitz, Martin Humenberger

AIT Austrian Institute of Technology