by Guido Rocchietti, Cristina Ioana Muntean, Franco Maria Nardini (CNR-ISTI)

In the context of the Horizon Europe EFRA research project [L1], we explore the innovative use of Large Language Models (LLMs), both instructed and fine-tuned, in improving the quality of conversational search. The focus is on applying these models in rewriting conversational utterances to enhance the capability of conversational agents to retrieve accurate responses.

Conversational search represents an innovative approach that enables users to engage with information systems naturally and amiably through everyday language dialogues. This entails the system’s ability to effectively preserve contextual information, including the concepts and details conveyed in prior interactions between the user and the conversational agents. Examples of such systems include familiar agents like Alexa, Siri, and others that are seamlessly integrated into our daily routines. Conversational search poses new challenges for information retrieval systems that are asked to extract the correct information from a collection of documents: user utterances submitted during a conversation may be ambiguous, incomplete, or their meaning dependent on the context of previous utterances. Therefore, conversational utterance rewriting is a crucial task aiming to reformulate the user’s requests into more precise and complete queries that enhance retrieval effectiveness.

In our research [1], we explore using Large Language Models (LLMs), both instructed and fine-tuned, to rewrite user queries and increase the quality of the information retrieved by a conversational agent. LLMs have shown their ability to comprehend and generate natural language for diverse tasks. Instructed LLMs, such as ChatGPT, are trained to receive detailed instructions from the user about what they must do. On the other hand, fine-tuned LLMs are based on pre-trained models further trained to excel on a particular task using a transfer learning technique.

Our investigation explores to what extent these models are exploitable and reliable when used in an information retrieval setting for rewriting conversational utterances, trying to stress the limitations and the weak points of models that have already become used in many fields of our daily lives.

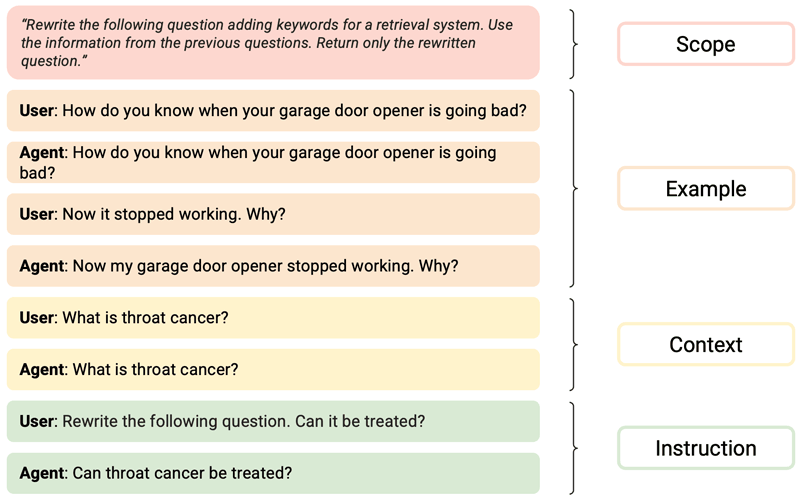

As instructed LLM, we used ChatGPT powered by GPT 3.5-turbo. We designed and tested prompting templates to evaluate ChatGPT rewriting capabilities. A prompt is a textual instruction specifying the task the model is expected to do. Of course, many ways exist to instruct the model to obtain the same expected results. To establish the best way to input the model, we devised 14 different prompts with different settings, with and without context (i.e. the previous utterances), and generated artificial interactions to provide the model as examples.

Figure 1: Example of interaction between the user and ChatGPT. Scope indicates the task the models should perform, Example is the artificial interactions provided to the system, Context is the real previous interactions, and Instruction reports the current prompt together with the system response.

Our experiments evidenced that prompting instructed LLMs with few-shot learning, i.e. providing few behavioural examples, is the best setting to produce valuable rewriting, obtaining results significantly higher than the ones obtained with zero-shot learning (i.e. providing only the prompt) and to state-of-the-art utterance rewriting techniques. Specifically, we selected a few couples of utterances, original and rewritten, which we used as the history of interactions between us and ChatGPT. In this way, the model used these as examples to better generate the rewritten version of the queries.

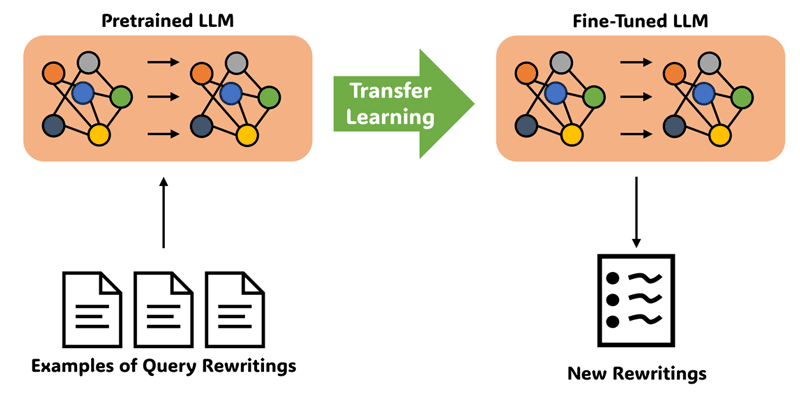

Subsequently, we fine-tuned publicly available LLMs such as Falcon-7B, and Llama-2-13B to generate rewritten and self-explanatory versions of the conversational utterances. The scope of this experiment was to assess whether using much smaller and open-source models than the closed-source ones powering ChatGPT could achieve comparable results.

Figure 2: Illustration of the fine-tuning technique. On the left, examples of rewritten queries are provided to the pre-trained model during a transfer learning process. On the right is the resulting model fine-tuned to rewrite new queries.

To fine-tune the models, we used QReCC, an open-domain dataset with about 81,000 question–answer pairs for conversational question answering. As input for the training phase, we used the previous questions of the conversation as context, followed by the query we wanted to rewrite, while our target was the rewritten version of the same query.

Our results showed that relatively small LLMs, fine-tuned for rewriting user queries, can significantly outperform large instructed LLMs such as ChatGPT. The best performance was obtained with the 13B parameters version of Llama-2.

The reproducible experiments [L2] were conducted on the publicly available TREC CAsT [L3] 2019 and 2020 datasets, which are collections of open-domain conversational dialogues with relevance judgements (i.e. judgements made by humans as to whether a document is relevant to an information need) provided by NIST. We use a two-stage retrieval pipeline based on PyTerrier to assess the rewritten utterances’ retrieval effectiveness. The first stage performs document retrieval using the DPH weighting model, and the second stage performs a neural reranking of the top 1,000 candidates using the MonoT5 model. We compare our methods with state-of-the-art baselines for conversational utterance rewriting, such as QuReTeC and CQR.

Our work opens new directions for research on conversational utterance rewriting with LLMs. The following steps of our research will try to find new ways to obtain even better rewriting results while reducing the size and the computational cost of the inference phase of the model. The idea is to apply existing and novel quantisation techniques to devise an optimal trade-off between rewriting accuracy and computational cost. The availability of such powerful models forces us to thoroughly investigate to what extent we can rely on these kinds of systems, especially given that their impact on society is not negligible, both from a user experience and a sustainability perspective.

Funding for this research has been provided by the EU’s Horizon Europe research and innovation programme EFRA (Grant Agreement Number 101093026). However, views and opinions expressed are those of the authors only and do not necessarily reflect those of the EU or European Commission-EU. Neither the EU nor the granting authority can be held responsible for them.

Links:

[L1] https://efraproject.eu

[L2] https://github.com/hpclab/conv-llm

[L3] https://www.treccast.ai/

Reference:

[1] E. Galimzhanova et al., “Rewriting conversational utterances with instructed large language models,” In Proc. of the 22nd IEEE/WIC Int. Conf. on Web Intelligence and Intelligent Agent Technology (WI-IAT), 2023.

Please contact:

Guido Rocchietti, CNR-ISTI, Pisa, Italy, and University of Pisa, Italy