by Davide Ceolin (CWI) and Ji Qi (Netherlands eScience Center)

The spread of disinformation affects society as a whole, and recent developments in AI are likely to aggravate the problem. This calls for automated solutions that assist humans in assessing information quality, a task that can be perceived as subjective or biased and that thus requires a high level of transparency and customisation. At CWI, together with the Netherlands eScience Center, we investigate how to design AI pipelines that are fully transparent and tunable by an end user. These pipelines will be applied to automated information quality assessment, using reasoning, natural language processing (NLP), and crowdsourcing components, and are available in the form of open source workflows.

The assessment of the information quality of online content is a challenging yet necessary step to help users benefit from the vast amount of information available online. The challenge lies in the complexity of the assessment process, the expertise and resources required to perform it, and the fact that the resulting assessment needs to be understood by laypeople to be trusted and, therefore, used. This means that, on the one hand, we need to scale up the process: it is onerous, and the number of items to analyse is vast, so scalability is essential to guarantee the feasibility of the effort. On the other hand, we need to aim at explainable and transparent approaches. For users to trust the result of the computation, they need to understand the computation process, and the quality assessment itself should be explainable.

The Eye of the Beholder project [L1] aims to enhance the transparency and the explainability of information quality assessments. The project started in 2022, is led by the Human-Centered Data Analytics Group [L2] at CWI, and is funded by the Netherlands eScience Center. We focus on one specific class of information items, namely product reviews. Reviews are meant to provide second-hand assessments of the quality of products; however, their quality is diverse, and since they represent personal opinions, they are difficult to verify.

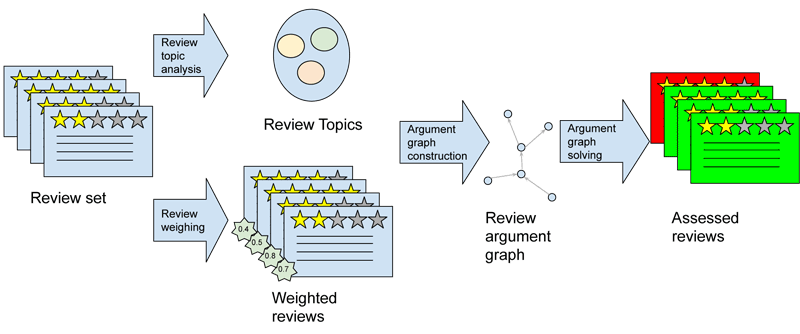

Several product-review collection websites, however, address the problem of assessing the quality of reviews by collecting judgements from readers, for instance in the form of upvotes (e.g., ‘likes’ or ‘thumbs up’ used to rank reviews). This method helps spot the most useful, informative, and possibly high-quality reviews. Our previous work [1] showed that an argument-based approach is a promising way to automate the identification of the most useful product reviews. Such automation is valuable because it helps identify high-quality reviews before users spend time on them. However, mining arguments in text and reasoning about their strength is a difficult task. Several AI components can be employed to identify arguments based on sentence structure, text topic and text similarity, and these arguments can be evaluated against conflicting ones by employing different reasoning and weighing schemes. When conflicting arguments are identified, they can be weighed to determine their quality, but there is no universal method to do so. Some of the models that allow reasoning on these arguments, for instance, explicitly model support among arguments, while others model attacks only. In principle, both types of model could serve our purposes but, in practice, we need to evaluate which of them better fits the use case at hand.
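To make the attack-only option more concrete, the following minimal sketch computes the grounded extension of a Dung-style abstract argumentation framework, one standard way of deciding which arguments survive their conflicts. It is an illustration under stated assumptions and not necessarily the semantics used in [1] or in the project's workflows; the function name `grounded_extension` and the toy arguments are hypothetical.

```python
from typing import Dict, Set, Tuple


def grounded_extension(arguments: Set[str],
                       attacks: Set[Tuple[str, str]]) -> Set[str]:
    """Return the grounded extension of an attack-only framework.

    `attacks` holds (attacker, target) pairs. Support relations are not
    modelled here; this is the 'attacks only' family of models.
    """
    # Index the attackers of each argument for quick lookup.
    attackers: Dict[str, Set[str]] = {a: set() for a in arguments}
    for source, target in attacks:
        attackers[target].add(source)

    accepted: Set[str] = set()
    while True:
        # Arguments attacked by at least one currently accepted argument.
        defeated = {t for (s, t) in attacks if s in accepted}
        # An argument is defended if all of its attackers are defeated.
        defended = {a for a in arguments if attackers[a] <= defeated}
        if defended == accepted:
            return accepted
        accepted = defended


# Toy example (hypothetical arguments extracted from reviews).
arguments = {"battery_lasts", "battery_drains", "drain_fixed_by_update"}
attacks = {
    ("battery_drains", "battery_lasts"),
    ("drain_fixed_by_update", "battery_drains"),
}
print(sorted(grounded_extension(arguments, attacks)))
```

In this toy case the unattacked argument about the update defeats the complaint about battery drain, which in turn reinstates the positive battery claim. Two arguments that only attack each other would both be left out, which illustrates why the choice of reasoning model matters for the final assessment.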

For this reason, within Eye of the Beholder, we developed a series of open source workflows that implement such computational argumentation-based information quality assessment pipelines. These workflows have been implemented in the Orange platform [L3], an open source platform for designing AI and machine learning workflows. By leveraging it, we enhance the transparency of our pipeline for the purpose of actionability: we not only make the pipeline transparent and understandable to users, but we also allow users to tune and tweak the pipeline itself. For instance, users may want to increase or decrease the granularity of the topic-detection component (so that it identifies coarser- or finer-grained topics), or they may want to use a different argument-reasoning engine than the one we propose. By using our workflows, users will be able to make these changes and evaluate their performance implications. They can also propose new theoretical frameworks for this purpose and evaluate them. For instance, in our project, we developed a theoretical framework for reasoning specifically on the arguments of product reviews [2], and we will evaluate it in the future through our workflows.
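The effect of tuning topic granularity can be illustrated with a small, self-contained sketch. It is not the API of the Orange add-on in [L3], and the project's topic-detection component may rely on a different technique; here we simply assume a greedy grouping of sentences by word overlap, where the hypothetical `threshold` parameter plays the role of the granularity setting. The names `jaccard` and `group_topics` are introduced for illustration only.

```python
from typing import List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Word-overlap similarity between two token sets (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def group_topics(sentences: List[str], threshold: float) -> List[List[str]]:
    """Greedily group sentences into topics.

    A sentence joins the first topic whose first sentence (the seed) is
    at least `threshold` similar to it; otherwise it starts a new topic.
    A higher threshold therefore yields finer-grained topics.
    """
    topics: List[List[str]] = []
    for sentence in sentences:
        tokens = set(sentence.lower().split())
        for topic in topics:
            seed = set(topic[0].lower().split())
            if jaccard(tokens, seed) >= threshold:
                topic.append(sentence)
                break
        else:
            # No existing topic was similar enough.
            topics.append([sentence])
    return topics


# Hypothetical review sentences.
reviews = [
    "battery life is great",
    "battery life is poor",
    "screen is great",
]

# Sweep the granularity setting and observe how many topics emerge.
for threshold in (0.3, 0.5, 0.7):
    print(threshold, len(group_topics(reviews, threshold)))
```

With these three sentences the sweep yields one, two and three topics respectively, so the same input can be treated as a single coarse topic or split into separate battery and screen discussions. Exposing such a setting to the user, and showing its effect on the downstream assessment, is the kind of actionability the workflows aim for.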

Figure 1: Example of an argument-based product review assessment pipeline.

In the future, we will explore the development of these automated pipelines further. While implementing them, we are focusing on methods to anticipate how parameter tuning influences pipeline performance. This will help us guide non-technical users in managing the computation.

Lastly, we collaborate with the AI, Media and Democracy lab [L4], an interdisciplinary lab that brings together AI researchers, communication scientists, information law scholars and media organisations. This lab will provide an ideal setting to evaluate applications of our approach in the media and democracy fields.

Links:

[L1] https://www.esciencecenter.nl/projects/the-eye-of-the-beholder-transparent-pipelines-for-assessing-online-information-quality/

[L2] https://www.cwi.nl/en/groups/human-centered-data-analytics/

[L3] https://github.com/EyeofBeholder-NLeSC/orange3-argument

[L4] https://www.aim4dem.nl/

References:

[1] D. Ceolin et al., “Transparent assessment of information quality of online reviews using formal argumentation theory,” Information Systems, vol. 110, 2022, Art. no. 102107.

[2] A. K. Zafarghandi and D. Ceolin, “Fostering explainable online review assessment through computational argumentation,” in Proc. 1st International Workshop on Argumentation for eXplainable AI, ArgXAI@COMMA, 2022.

Please contact:

Davide Ceolin, CWI, The Netherlands