by Andżelika Zalewska-Küpçü (QED Software), Andrzej Janusz (University of Warsaw & QED Software) and Dominik Ślęzak (University of Warsaw & QED Software)

BrightBox is a novel technology for investigating the mistakes made by machine learning models.

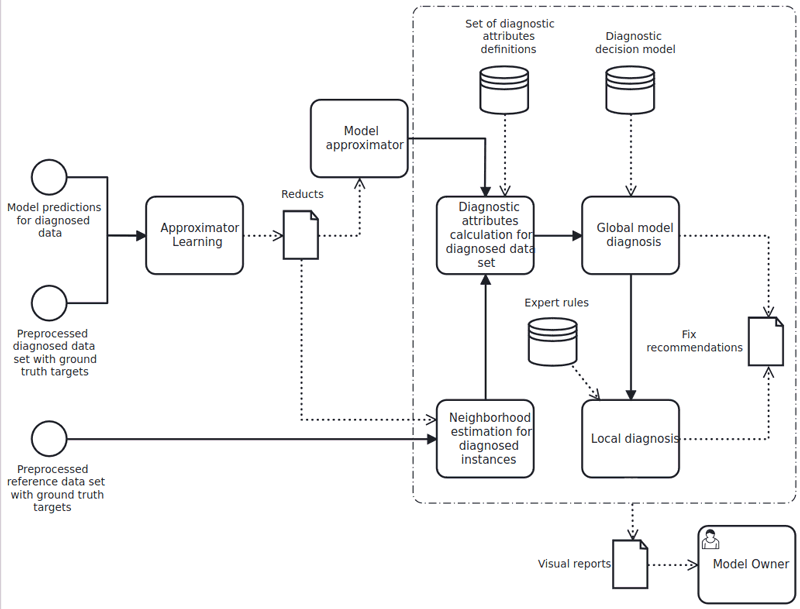

The main feature of BrightBox technology [1] is its ability to build a surrogate model that closely imitates any black-box classification or regression algorithm. The surrogate approximates the diagnosed model's predictions using an ensemble of approximate reducts, i.e. irreducible subsets of attributes that preserve almost the same information about the decisions as the whole set of attributes. Note that the decision values used to build the surrogate are the predictions made by the diagnosed model, not the true target values. A prediction is made separately for each reduct, and the results are then averaged; in this way, the ensemble of approximate reducts can itself be used as a classification model.
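To make the per-reduct prediction and averaging step concrete, here is a minimal Python sketch of a reduct-ensemble surrogate. It assumes the data are already discretised and stored as dictionaries, and that the reducts are given. The class name `ReductEnsembleSurrogate`, the use of per-reduct class frequencies as votes, and the fallback for unseen value combinations are illustrative assumptions, not BrightBox's actual implementation.

```python
from collections import Counter, defaultdict


class ReductEnsembleSurrogate:
    """Sketch of a surrogate that averages per-reduct class distributions.

    Decisions are the diagnosed model's predictions, not the ground truth.
    Reducts are supplied as tuples of attribute names; computing approximate
    reducts (and the epsilon threshold) is outside the scope of this sketch.
    """

    def __init__(self, reducts):
        self.reducts = [tuple(r) for r in reducts]
        self.tables = []
        self.prior = Counter()

    def fit(self, rows, model_predictions):
        # For each reduct: indiscernibility class (tuple of values) -> prediction counts
        self.tables = []
        for reduct in self.reducts:
            table = defaultdict(Counter)
            for row, pred in zip(rows, model_predictions):
                table[tuple(row[a] for a in reduct)][pred] += 1
            self.tables.append(table)
        self.prior = Counter(model_predictions)
        return self

    def predict_proba(self, row):
        totals, used = Counter(), 0
        for reduct, table in zip(self.reducts, self.tables):
            counts = table.get(tuple(row[a] for a in reduct))
            if not counts:
                continue  # class unseen for this reduct: skip it (assumed policy)
            n = sum(counts.values())
            for label, c in counts.items():
                totals[label] += c / n  # this reduct's class distribution
            used += 1
        if used == 0:
            # Assumed fallback: overall distribution of the model's predictions
            totals, used = Counter(self.prior), sum(self.prior.values())
        # Average the per-reduct distributions
        return {label: s / used for label, s in totals.items()}

    def predict(self, row):
        proba = self.predict_proba(row)
        return max(proba, key=proba.get)
```

For example, fitted on four rows with reducts `("a",)` and `("c",)` and model predictions `["no", "no", "yes", "yes"]`, where both reducts place the instance `{"a": "high", "c": 2}` in classes containing only `"yes"`, the surrogate predicts `"yes"`.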

BrightBox technology is based on neighbourhoods. The surrogate models are used to identify neighbourhoods of instances that have been processed by a machine learning model. For a diagnosed instance, its neighbourhood relative to a single reduct is the subset of instances from the reference dataset that belong to the same indiscernibility class, i.e. that share the instance's values on all attributes of that reduct. The final neighbourhood is the union of the neighbourhoods computed for all reducts in the ensemble. Neighbourhoods therefore consist of historical instances that were processed in a similar way by the rough set-based models.
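The union of per-reduct neighbourhoods can be sketched as follows, under the same assumptions as before (discretised rows stored as dictionaries, reducts given as tuples of attribute names). The function name is hypothetical, and the linear scan per reduct favours clarity over speed; a production implementation would more likely index each reduct's indiscernibility classes.

```python
def neighbourhood(instance, reference_rows, reducts):
    """Indices of reference rows that share the instance's indiscernibility
    class for at least one reduct, i.e. the union of per-reduct neighbourhoods."""
    union = set()
    for reduct in reducts:
        # Values of the instance on this reduct's attributes
        key = tuple(instance[a] for a in reduct)
        # Reference rows indiscernible from the instance on this reduct
        union.update(
            i for i, row in enumerate(reference_rows)
            if tuple(row[a] for a in reduct) == key
        )
    return union
```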

Each neighbourhood is analysed in terms of its size, the consistency of labels within it (ground-truth labels, the original model's predictions, and their approximations), and the uncertainty of predictions. The resulting characteristics are called diagnostic attributes. By analysing the mistakes made within these neighbourhoods, we can gain valuable insights into why a machine learning model performs poorly.
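BrightBox's own diagnostic attributes are described in [1]; the sketch below only illustrates the idea with hypothetical proxies: neighbourhood size, agreement between ground truth and model predictions, agreement between the model and its surrogate, and the entropy of the model's predictions as a stand-in for uncertainty. All attribute names and definitions here are assumptions.

```python
import math
from collections import Counter


def diagnostic_attributes(nbh, y_true, y_model, y_surrogate):
    """Illustrative (hypothetical) diagnostic attributes for one neighbourhood.

    nbh: set of indices into the reference data;
    y_true / y_model / y_surrogate: ground truth, model and surrogate labels.
    """
    idx = sorted(nbh)
    n = len(idx)
    if n == 0:
        return {"size": 0, "true_vs_model": None,
                "model_vs_surrogate": None, "prediction_entropy": None}
    # Fraction of neighbours the model labels correctly
    true_vs_model = sum(y_true[i] == y_model[i] for i in idx) / n
    # Fraction of neighbours on which the surrogate agrees with the model
    model_vs_surrogate = sum(y_model[i] == y_surrogate[i] for i in idx) / n
    # Entropy (bits) of the model's predictions inside the neighbourhood
    counts = Counter(y_model[i] for i in idx)
    entropy = sum(-(c / n) * math.log2(c / n) for c in counts.values())
    return {"size": n, "true_vs_model": true_vs_model,
            "model_vs_surrogate": model_vs_surrogate,
            "prediction_entropy": entropy}
```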

BrightBox diagnoses black-box models without requiring any knowledge about the model or direct access to it. The diagnosis is performed using only the diagnosed dataset and the model's outputs (e.g. predicted values or classes), without using predictions on the training set. This makes the approach particularly useful in industrial environments where machine learning models are deployed.

To construct a surrogate model in the current version of BrightBox, all attributes must have discrete values. Therefore, the first step of the approximation procedure is to discretise all numeric attributes using the quantile method.
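Quantile (equal-frequency) discretisation can be sketched with Python's standard library. This is an assumed variant, not necessarily the one used in BrightBox: it computes cut points on reference data with `statistics.quantiles(..., method="inclusive")`, drops duplicate cut points caused by ties, and assigns bin indices with `bisect_right`. The number of bins is a free parameter here, as the text does not specify it.

```python
import statistics
from bisect import bisect_right


def quantile_cuts(values, n_bins=4):
    """Cut points for equal-frequency (quantile) bins.

    Duplicate cut points, which arise when many values are tied, are dropped,
    so the result may define fewer than n_bins bins.
    """
    return sorted(set(statistics.quantiles(values, n=n_bins, method="inclusive")))


def discretise_column(values, cuts):
    """Replace each numeric value with the index of its bin (0..len(cuts))."""
    return [bisect_right(cuts, v) for v in values]
```

A sensible design choice is to compute the cut points once on the reference data and reuse them for the diagnosed data, so that the same value always falls into the same bin.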

To construct the approximator, values of two hyper-parameters must be selected: epsilon, the approximation threshold for reducts, and the number of reducts in the ensemble. Since the goal is the best possible approximation of the model's predictions, these hyper-parameters are tuned with a grid search. A surrogate model is accepted if the agreement between its predictions and those of the diagnosed model, measured by Cohen's kappa, reaches at least 0.9, which ensures a high-quality approximation. If this quality cannot be attained, the ensemble of reducts is trained with the settings that provide the best possible approximation quality. The resulting approximator is then used to determine the neighbourhoods of instances from the diagnosed data and to compute the values of diagnostic attributes.
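The selection rule can be sketched as follows. Cohen's kappa is computed between the diagnosed model's predictions and the surrogate's predictions. `fit_and_predict(epsilon, n_reducts)` is a hypothetical callback that trains an ensemble and returns its predictions on evaluation data, and accepting the first configuration (in grid order) that reaches the 0.9 target is an assumed tie-breaking policy.

```python
from collections import Counter
from itertools import product


def cohens_kappa(a, b):
    """Cohen's kappa between two equal-length label sequences."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    ca, cb = Counter(a), Counter(b)
    expected = sum(ca[k] * cb[k] for k in ca) / (n * n)
    if expected == 1.0:
        return 1.0  # both sequences use one identical label (kappa undefined)
    return (observed - expected) / (1 - expected)


def select_hyperparameters(fit_and_predict, model_preds, epsilons,
                           ensemble_sizes, target=0.9):
    """Grid search: stop at the first setting with kappa >= target
    (assumed policy), otherwise return the best setting found."""
    best = None
    for eps, k in product(epsilons, ensemble_sizes):
        kappa = cohens_kappa(model_preds, fit_and_predict(eps, k))
        if best is None or kappa > best["kappa"]:
            best = {"epsilon": eps, "n_reducts": k, "kappa": kappa}
        if kappa >= target:
            break
    return best
```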

Next, the BrightBox diagnostic process performs a global diagnosis of the model. It computes a summary of the diagnostic attribute values obtained for the diagnosed dataset and then uses a pretrained classifier to assign the investigated model to one of three categories: Near-optimal fit, Under-fitted model, or Over-fitted model. This classifier is pretrained on a manually labelled dataset consisting of diagnostic attribute summaries computed for numerous datasets and commonly used prediction models.
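The shape of the global step can be sketched as follows, assuming per-instance diagnostic attributes are numeric dictionaries summarised by their mean and population standard deviation. The summary features are an assumption, and `classifier` is a placeholder for BrightBox's pretrained model, which is not reproduced here; any callable that returns one of the three category names fits this interface.

```python
from statistics import mean, pstdev

CATEGORIES = ("Near-optimal fit", "Under-fitted model", "Over-fitted model")


def summarise(per_instance_attrs):
    """Fixed-length summary: mean and population std of each attribute
    (assumed summary; attributes must be numeric and non-missing)."""
    names = sorted(per_instance_attrs[0])
    features = []
    for name in names:
        vals = [row[name] for row in per_instance_attrs]
        features += [mean(vals), pstdev(vals)]
    return features


def global_diagnosis(per_instance_attrs, classifier):
    """`classifier` stands in for the pretrained model (hypothetical interface)."""
    label = classifier(summarise(per_instance_attrs))
    if label not in CATEGORIES:
        raise ValueError(f"unexpected category: {label!r}")
    return label
```

In practice, `classifier` would wrap the prediction call of the pretrained model.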

The next step examines individual data instances and the predictions made for them by the diagnosed model. This analysis uses the previously computed diagnostic attribute values, together with the output of the global diagnostic model, to provide a comprehensive assessment of model predictions and related issues. In addition, a set of local diagnostic rules designed by experts is applied to provide users with accessible insights. An example of such a rule and its corresponding fix recommendation is: "If the model is diagnosed as over-fitted, the incorrectly classified instance has low model uncertainty, and its neighbourhood is not small, then the error is likely due to over-fitting. Improve the model fitting procedure."
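The example rule can be written as a simple predicate. Because the rule states its conditions only qualitatively, the thresholds for "low uncertainty" and a "not small" neighbourhood, the `InstanceDiagnosis` record, and the function name below are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional

LOW_UNCERTAINTY = 0.2      # hypothetical threshold for "low model uncertainty"
SMALL_NEIGHBOURHOOD = 5    # hypothetical threshold for a "small" neighbourhood


@dataclass
class InstanceDiagnosis:
    misclassified: bool
    model_uncertainty: float   # assumed to lie in [0, 1]
    neighbourhood_size: int


def overfitting_rule(global_label: str, d: InstanceDiagnosis) -> Optional[str]:
    """Return the fix recommendation if the example rule fires, else None."""
    if (global_label == "Over-fitted model"
            and d.misclassified
            and d.model_uncertainty < LOW_UNCERTAINTY
            and d.neighbourhood_size >= SMALL_NEIGHBOURHOOD):
        return ("The error is likely due to over-fitting. "
                "Improve the model fitting procedure.")
    return None
```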

After the diagnosis is complete, BrightBox generates visual reports highlighting the most important findings. They include relevant statistics on the diagnosed model, the quality of its approximator, and the distributions of diagnostic attributes. Interactive plots help users explore diagnoses for individual instances and analyse their statistics for specific groups. Reports also provide insights into the importance of the original attributes, approximated by the significance of attributes in the surrogate model. Together, these reports give a deeper understanding of how the diagnosed model operates. Figure 1 shows the entire workflow of BrightBox.

In conclusion, BrightBox employs ensembles of rough set-based reducts to approximate black-box machine learning models. Such surrogate-based approximation is a core technique of explainable AI (XAI), which aims to make black-box models more interpretable. In our case, however, these ensembles also allow us to identify collections of historical instances that are processed in a similar way to each new instance. These collections, known as neighbourhoods, help us categorise the errors made by diagnosed models.

Analysing neighbourhoods, rather than just attribute values, is in fact the key advantage of BrightBox. Diagnostic attributes computed over neighbourhoods can reveal valuable characteristics of specific data instances, providing domain experts and data scientists with the information they need to create better prediction models. By focusing on neighbourhoods, the technology offers deeper insights and more meaningful guidance for model improvement.

Figure 1: Diagram of the BrightBox diagnostic workflow.

Our goal is to further develop BrightBox through practical applications (see e.g. [2]). The approach can be highly beneficial, for example, in detecting errors in models submitted to online data science competitions, allowing their organisers and sponsors to gain valuable insights into the performance of each solution. Such insights can then suggest improvements to the models and facilitate their deployment in production environments. It is also possible to construct improved solutions from existing ones. For instance, information about the different degrees of risk (uncertainty) of decisions made by the individual classifiers in an ensemble can be used to modify the procedure for resolving conflicts in voting.
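One possible way to use such uncertainty information when resolving voting conflicts is to weight each ensemble member's vote by its confidence. The sketch below assumes that each member reports a label together with an uncertainty in [0, 1] and weights its vote by `1 - uncertainty`; this weighting scheme is only an illustration, not a procedure taken from [2].

```python
from collections import defaultdict


def weighted_vote(votes):
    """votes: list of (label, uncertainty) pairs, one per ensemble member.

    Each vote counts with weight 1 - uncertainty (assumed weighting scheme),
    so confident members outweigh several uncertain ones.
    """
    scores = defaultdict(float)
    for label, uncertainty in votes:
        scores[label] += 1.0 - uncertainty
    return max(scores, key=scores.get)
```

With votes `[("a", 0.9), ("a", 0.8), ("b", 0.1)]`, plain majority voting would choose `"a"`, whereas this weighted vote chooses `"b"`.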

References:

[1] A. Janusz et al., “BrightBox – a rough set based technology for diagnosing mistakes of machine learning models,” Applied Soft Computing, vol. 141, art. no. 110285, 2023. https://doi.org/10.1016/j.asoc.2023.110285

[2] A. Janusz and D. Ślęzak, “KnowledgePit meets BrightBox: a step toward insightful investigation of the results of data science competitions,” in Proc. FedCSIS 2022, ACSIS vol. 30, pp. 393-398, 2022. https://doi.org/10.15439/2022F309

Please contact:

Andżelika Zalewska-Küpçü,

QED Software, Poland

Dominik Ślęzak,

University of Warsaw & QED Software, Poland