by Nikolaos Rodis (Harokopio University of Athens), Christos Sardianos (Harokopio University of Athens) and Georgios Th. Papadopoulos (Harokopio University of Athens)

Despite the outstanding advances in Artificial Intelligence (AI) and its widespread adoption in several application domains, significant challenges remain in explaining to the end-user how decisions are reached. This need becomes more complex and demanding when multiple types of data are involved in AI-generated decisions, which has led to the emergence of the so-called multimodal explainable AI (MXAI) field. These challenges are even more pressing in critical domains such as medical applications, where human lives are involved.

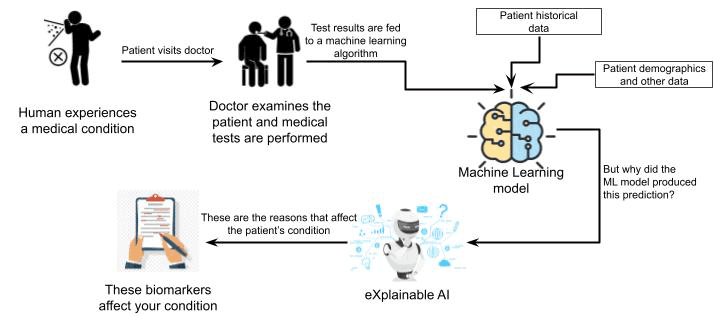

Figure 1: A visual representation of a patient-centric data flow: enabling AI-driven biomarker analysis and explainable insights for doctors

The capabilities of the medical sector have recently improved tremendously, largely due to the introduction of multiple AI-powered applications that support, for example, decision-making, drug development and disease prevention. However, explaining the obtained results is not always easy, and the involvement of multiple data modalities acts as an extra obstacle, as illustrated in Figure 1. Within the context of the EC-funded project ONELAB [L1], diverse and heterogeneous information sources, for example gas chromatography–ion mobility spectrometry (GC-IMS) and gas chromatography–mass spectrometry (GC-MS), will be used to address the problem of biomarker detection in breath data. Towards this goal, MXAI approaches are employed to provide the required explanations of the obtained AI-based predictions. The challenges of this problem are numerous; some of them are briefly described below.

Convergence to Formal and Widely Accepted Definitions/Terminology

Despite the numerous research works recently introduced in the MXAI field, little to no formality has been established concerning the adopted definitions and terminology. In particular, many researchers use ad hoc descriptions to delineate their research activities and often define “explainability” and “interpretability” in different ways. As a result, the field lacks an exact and widely accepted terminology. Defining what an explanation is and how its efficiency can be measured (based on both qualitative and quantitative norms and experimental frameworks) would not only enhance formalisation in the field but also significantly facilitate the comparative evaluation of the numerous proposed MXAI methods. It would also greatly assist in addressing current controversies, such as assigning different terms to similar methods or associating similar names with fundamentally different (algorithmic) concepts.

Usage of Attention Mechanisms in Explanation Schemes

Attention schemes, apart from being used in numerous data-analysis tasks, have also been utilised for generating explanations of the corresponding prediction models, typically in the form of visualisations (indicating the text segments or image areas on which the primary AI prediction model focuses) or estimated feature-importance metrics. However, several concerns and controversies have emerged, raising fundamental doubts about the suitability of attention mechanisms for producing actual explanations. In particular, experimental studies show that learned attention weights are often only weakly correlated with gradient-based feature relevance measures for similar predictions [1]; hence, conventional attention explanations cannot be considered equivalent to other explanations. More recent works, however, report contradictory experimental results, suggesting that whether attention schemes can be used for explanation generation depends on the definition of explanation adopted in the particular application at hand. In this context, more detailed and in-depth studies need to be conducted to shed more light on whether, and under which exact conditions, certain attention schemes can be used for providing meaningful explanations, as well as on how such methods relate to other non-attention-based MXAI approaches.
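The comparison between attention weights and gradient-based relevance can be made concrete with a rank-correlation measure such as Kendall's tau. The following is a minimal sketch, assuming that both sets of per-token scores have already been extracted from a model; the score values and variable names are invented for illustration and do not come from any experiment.

```python
from itertools import combinations


def kendall_tau(a, b):
    """Kendall's tau-a rank correlation between two equally long score lists."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long lists with at least two items")
    concordant = discordant = 0
    for i, j in combinations(range(len(a)), 2):
        # Positive product: both scores order the pair the same way.
        sign = (a[i] - a[j]) * (b[i] - b[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(a) * (len(a) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical per-token scores for a single input (illustrative values only).
attention_weights = [0.50, 0.20, 0.15, 0.10, 0.05]
gradient_relevance = [0.10, 0.40, 0.05, 0.30, 0.15]

tau = kendall_tau(attention_weights, gradient_relevance)
print(f"Kendall tau between attention and gradient relevance: {tau:.2f}")
```

A value close to 1 would indicate that both measures rank the input elements similarly, while values near 0 indicate that the two rankings are largely unrelated.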

Generalisation Ability of MXAI Methods

The vast majority of the available methods have been designed only for specific AI model architectures (regarding the primary prediction task), and in many cases they are constrained to specific analysis tasks [2]. For example, a significant number of methods have been designed specifically for the visual question answering (VQA) task, but such approaches have not been evaluated in other vision-language applications. Introducing model-specific explanation schemes is clearly very restrictive and expensive. Robustly extending existing methods to other tasks and architectures would significantly reduce research and development efforts.

Extension of MXAI Schemes to More than Two Output Modalities

The vast majority of MXAI methods focus on producing unimodal or bimodal explanations. However, extending explanation representations to higher-dimensionality multimodal feature spaces (i.e. feature spaces that are composed of more than two modalities) is expected to further increase the expressiveness and accuracy of the produced interpretations.

Estimation of Causal Explanations

So far, no significant attention has been given to the causality perspective of explanations, even though causal relationships are the main type of relationships that humans inherently perceive. Causal explanations enable an understanding of how one event can lead to another and, hence, support a deeper understanding of the world. Moreover, identifying the factors that cause an event to occur can also help in anticipating how the event might unfold and/or how it should be handled [3]. Therefore, apart from identifying which features are important for a given model, understanding how predictions are affected by modifications of the feature values is important for understanding the model’s reasoning process itself. In the multimodal setting, causality needs to be examined in terms of how each individual modality and its corresponding features affect the prediction outcome, and not simply in terms of which features are important.
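One simple way to probe how each modality affects a prediction is to intervene on one modality at a time and observe the change in the model output. The sketch below assumes a hypothetical two-modality predictor; `toy_predict`, its weights, the modality names and the all-zero baseline are placeholders chosen for illustration, not part of any real system. Such an intervention only characterises the model's behaviour and is not, on its own, a full causal analysis.

```python
import math


def toy_predict(sample):
    """Hypothetical stand-in for a trained multimodal model (not a real one)."""
    z = 2.0 * sum(sample["gc_ims"]) - 1.0 * sum(sample["gc_ms"])
    return 1.0 / (1.0 + math.exp(-z))


def modality_effects(predict, sample, baseline):
    """Change in prediction when a single modality is replaced by a baseline."""
    reference = predict(sample)
    effects = {}
    for name in sample:
        intervened = dict(sample)          # shallow copy, other modalities untouched
        intervened[name] = baseline[name]  # intervene on one modality only
        effects[name] = reference - predict(intervened)
    return effects


# Illustrative feature vectors and an assumed all-zero baseline.
sample = {"gc_ims": [0.6, 0.4], "gc_ms": [0.3, 0.2]}
baseline = {"gc_ims": [0.0, 0.0], "gc_ms": [0.0, 0.0]}

effects = modality_effects(toy_predict, sample, baseline)
for name, delta in effects.items():
    print(f"{name}: prediction changes by {delta:+.3f} when the modality is removed")
```

The sign of each effect indicates whether the modality pushes the prediction up or down, which is information that a plain importance ranking does not convey. The choice of baseline is itself an assumption that influences the result.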

Removing Bias in Textual Explanations

The main paradigm followed for estimating textual explanations consists of collecting natural language rationales from humans (for a given dataset) and subsequently training an explanation module with these descriptions as ground truth. However, human textual annotations (especially long textual justifications) typically contain biases, often contradictory ones, that are related to the particular background and temperament of each involved individual. To this end, developing routines for identifying and removing bias and for resolving conflicting annotation cases would significantly improve the quality of the generated textual explanations.
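As a first step towards resolving conflicting annotation cases, items whose human rationales disagree strongly could be flagged for review. The following is a minimal sketch that uses token overlap (Jaccard similarity) as a crude proxy for agreement; the threshold, the example rationales and the function names are assumptions made for illustration, and a practical routine would likely rely on semantic rather than lexical similarity.

```python
from itertools import combinations


def jaccard(text_a, text_b):
    """Token-level Jaccard similarity between two rationales."""
    tokens_a = set(text_a.lower().split())
    tokens_b = set(text_b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def flag_conflicts(rationales, threshold=0.2):
    """Return ids of items whose mean pairwise rationale overlap is below threshold."""
    flagged = []
    for item_id, texts in rationales.items():
        pairs = list(combinations(texts, 2))
        if not pairs:
            continue  # a single annotation cannot conflict with itself
        mean_overlap = sum(jaccard(a, b) for a, b in pairs) / len(pairs)
        if mean_overlap < threshold:
            flagged.append(item_id)
    return flagged


# Invented rationales from two annotators per item (illustrative only).
rationales = {
    "item_1": ["the dog is catching a frisbee", "the dog is catching a red frisbee"],
    "item_2": ["the man looks angry", "people are smiling at a party"],
}

flagged = flag_conflicts(rationales)
print("Items needing review:", flagged)
```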

To conclude, further research needs to be conducted on the above-mentioned problems in order to make AI models more transparent and trustworthy, and to boost their utilisation in critical domains (e.g. health care and self-driving cars).

The research leading to the results of this paper has received funding from the European Union’s Horizon Europe research and innovation programme under grant agreement No 101073924 (ONELAB). The authors would also like to thank Prof. Iraklis Varlamis for his valuable guidance and comments on formulating the above challenges and open issues.

Link:

[L1] https://onelab-project.eu

References:

[1] S. Jain, B. C. Wallace, “Attention is not explanation,” in North American Chapter of the Association for Computational Linguistics, vol. 1, Jun. 2019, pp. 3543–3556.

[2] G. Joshi, R. Walambe, K. Kotecha, “A review on explainability in multimodal deep neural nets,” IEEE Access, vol. 9, 2021, pp. 59800–59821.

[3] W. Saeed, C. Omlin, “Explainable AI (XAI): a systematic meta-survey of current challenges and future opportunities,” Knowledge-Based Systems, vol. 263, 2023, Art. no. 110273.

Please contact:

Nikolaos Rodis, Harokopio University of Athens, Greece

Christos Sardianos, Harokopio University of Athens, Greece