by Christoforos Prasatzakis, Theodore Patkos and Dimitris Plexousakis (ICS-FORTH)

In this article, we present a new, easy-to-understand and flexible chatbot architecture built around ease of use and modularity. It relies on the Event Calculus to perform high-level reasoning on world events and agents’ knowledge, and can answer questions about other agents’ belief states, in addition to what is happening in the chatbot’s world in general.

Human–computer interaction is a “hot” topic in modern computer science research. One of the many applications of human–computer communication technologies is the chatbot, i.e., a computer program that engages in dialogue with humans, who ask questions in natural language and receive appropriate answers. The answers must not only be well formed and justified, but also be as close to the human’s intuition as possible. As we try to fulfil this goal, further questions arise about the chatbot’s abilities. If the chatbot’s world is inhabited by more than one agent, what actions has each agent performed, and what have the other agents observed? What does each agent believe about the world? How do past interactions and beliefs affect what the agents perceive and communicate now? And finally, is there an easy-to-understand and intuitive way for the chatbot to represent the knowledge it receives?

To address these issues, we are developing a chatbot architecture, part of the SoCoLa project [L1], that handles the above tasks in a manner that is easy to understand and to work with. Our architecture uses the Event Calculus (EC) [1], implemented in Answer Set Programming (ASP) [2], to describe events and fluents (situations that “hold” for a certain time frame) in the chatbot’s world. The architecture can use the state-of-the-art ASP reasoner Clingo [L2] to capture the dynamics of a given domain, infer causal relations, infer what has happened at past time points or what may happen at future time points in the chatbot’s world, and answer user questions on hypothetical scenarios (of the “What would happen if…” type). The chatbot uses Meta’s wit.ai [L3] natural language processing platform to mark entities in user questions and to determine each question’s intent and type.
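To make the notions of events and fluents concrete, the following is a minimal pure-Python sketch of discrete Event Calculus style reasoning. It is not the ASP encoding used in the architecture and does not call Clingo; the `Event` type, the `effects` axioms and the pen domain are hypothetical names chosen for illustration. It assumes the common discrete convention that an event occurring at time s changes fluents from time s+1 onwards, and it applies same-time events sequentially for simplicity.

```python
from typing import NamedTuple


class Event(NamedTuple):
    time: int
    agent: str
    action: str
    obj: str


def effects(ev):
    """Hypothetical effect axioms: return (initiated, terminated) fluents."""
    if ev.action == "pick_up":
        return {("holding", ev.agent, ev.obj)}, {("on_table", ev.obj)}
    if ev.action == "put_down":
        return {("on_table", ev.obj)}, {("holding", ev.agent, ev.obj)}
    return set(), set()


def holds_at(fluent, t, initial, narrative):
    """True if `fluent` holds at time t.

    Fluents persist by inertia: they keep their value until an event
    initiates or terminates them. An event at time s takes effect at s+1.
    """
    state = set(initial)
    for ev in sorted(narrative):  # tuples sort by time first
        if ev.time >= t:
            break
        initiated, terminated = effects(ev)
        state = (state - terminated) | initiated
    return fluent in state


# Illustrative narrative (assumed, not taken from the article's domain files).
INITIAL = {("on_table", "pen")}
NARRATIVE = [
    Event(1, "human", "pick_up", "pen"),
    Event(4, "human", "put_down", "pen"),
]

holding = ("holding", "human", "pen")
print(holds_at(holding, 1, INITIAL, NARRATIVE))  # before the effect applies
print(holds_at(holding, 2, INITIAL, NARRATIVE))  # after picking up
print(holds_at(holding, 5, INITIAL, NARRATIVE))  # after putting down
```

In an ASP setting the same idea would be expressed declaratively as rules over time points, and the solver would derive which fluents hold; the loop above only mimics that behaviour for a single, fully known narrative.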

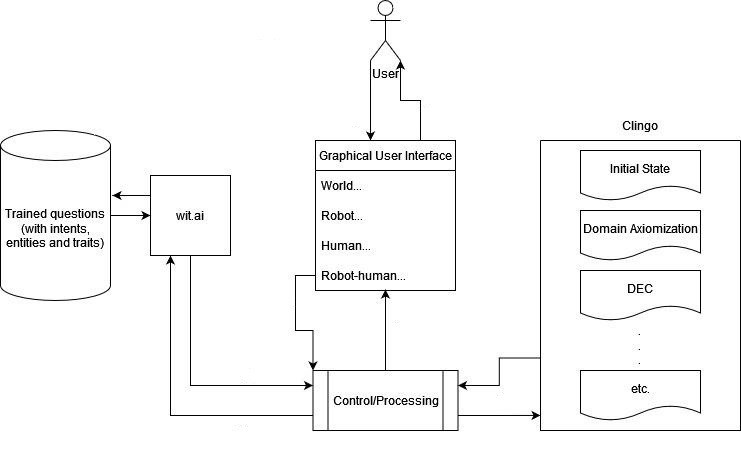

The architecture consists of several distinct components, as seen in Figure 1. Clingo and wit.ai are off-the-shelf tools that we configure for our needs. The control/processing unit is written entirely in Python and serves as a “proxy” for all other components, while also handling the task of answering the user’s question. The graphical user interface (GUI) is built on Node.js and sends both the current state of the world and the user’s question to the controller.

Figure 1: A block diagram showcasing the architecture and its workflow.

Our system is domain independent to the extent possible and supports a set of question types that we are constantly expanding. The current implementation supports: polar questions (e.g., “Did the human pick up the pen?”), which can be answered with either a “Yes” or a “No” response; “where”-type questions, which ask for the position of an agent or an object (e.g., “Where was the robot located at time 3?”); “when”-type questions, which capture temporal aspects of the domain (e.g., “When was the robot located at angle 180?”); and “what-if”-type retrospective questions, which ask what would have happened, in a hypothetical setting, if an event had occurred at a given time point (e.g., “What if the human picks up the pen at time 0?”).
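The four question types listed above can be illustrated with a small pure-Python sketch over a toy event narrative. This is only an approximation of the idea: the actual system delegates reasoning to an ASP solver, and every name here (`Event`, `apply_event`, `polar`, `where`, `when`, `what_if`, the `turn_to` action and the precondition on `pick_up`) is a hypothetical choice made for this example. A what-if question is modelled by adding a hypothetical event to the narrative and recomputing the state.

```python
from typing import NamedTuple


class Event(NamedTuple):
    time: int
    agent: str
    action: str
    arg: str


def apply_event(state, ev):
    """Hypothetical effect axioms for a toy robot/pen domain."""
    state = dict(state)
    if ev.action == "turn_to":
        state[("angle", ev.agent)] = ev.arg
    elif ev.action == "pick_up" and state.get(("holder", ev.arg)) == "table":
        # Assumed precondition: only an object lying on the table can be taken.
        state[("holder", ev.arg)] = ev.agent
    return state


def state_at(t, initial, narrative):
    """World state at time t; an event at time s takes effect at s+1."""
    state = dict(initial)
    for ev in sorted(narrative):
        if ev.time < t:
            state = apply_event(state, ev)
    return state


def polar(narrative, agent, action, arg):
    """Polar question: did this event occur at all?"""
    hit = any((e.agent, e.action, e.arg) == (agent, action, arg) for e in narrative)
    return "Yes" if hit else "No"


def where(t, initial, narrative, agent):
    """Where question: the agent's angle at time t."""
    return state_at(t, initial, narrative).get(("angle", agent))


def when(initial, narrative, agent, angle, horizon):
    """When question: all time points up to `horizon` with the given angle."""
    return [t for t in range(horizon + 1)
            if state_at(t, initial, narrative).get(("angle", agent)) == angle]


def what_if(t, initial, narrative, hypothetical):
    """What-if question: state at t had `hypothetical` also occurred."""
    return state_at(t, initial, narrative + [hypothetical])


INITIAL = {("angle", "robot"): "0", ("holder", "pen"): "table"}
NARRATIVE = [Event(1, "robot", "turn_to", "180"), Event(2, "robot", "pick_up", "pen")]

print(polar(NARRATIVE, "human", "pick_up", "pen"))
print(where(3, INITIAL, NARRATIVE, "robot"))
print(when(INITIAL, NARRATIVE, "robot", "180", horizon=4))
print(what_if(3, INITIAL, NARRATIVE, Event(0, "human", "pick_up", "pen")))
```

In the last call the hypothetical pick-up at time 0 means the pen is no longer on the table when the robot tries to take it, so the alternative timeline ends with a different holder than the actual one.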

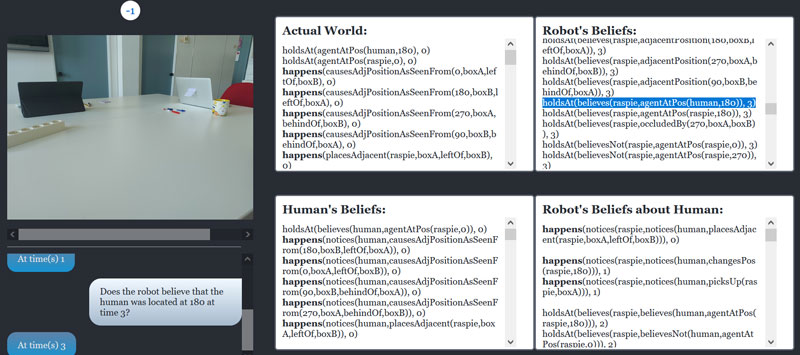

A distinctive feature of our chatbot is that, while it can answer typical questions based on what is taking place in the world, it can also answer questions about what individual agents observe or believe, both about the actual world and about other agents. This question type is called epistemic. Two examples of epistemic questions are “Did the human notice that the robot picked up the pen?” and “Does the human believe that the robot is located at angle 180?”. Figure 2 shows an example of our system processing a sample epistemic question. In an environment where more than one agent interacts with and modifies the world, such questions can be of real value, since the agents may have incomplete or erroneous beliefs about what the current state of the world is or what has occurred. As such, understanding their beliefs can help to better explain their behaviour.

Figure 2: An epistemic question answered by our system.

The layout of the GUI is as follows: the top-left pane shows Event Calculus-encoded knowledge about the chatbot’s actual world. The top-right pane shows the chatbot’s knowledge about agent “robot”. The bottom-left pane shows the same for agent “human”, and the bottom-right pane shows what “robot” believes about “human”, thus allowing us to ask questions about an agent’s knowledge of other agents, not just of the environment.
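The four perspectives shown in the GUI (actual world, “robot”, “human”, and “robot” about “human”) can be approximated in a short pure-Python sketch. It rests on a strong simplifying assumption that is not taken from the article: each event carries the set of agents who observed it, and an event is visible along a chain of agents only if every agent in the chain observed it. The names `Event`, `perspective`, `noticed` and `believes_holding` are hypothetical, and the real system derives beliefs with an epistemic Event Calculus axiomatisation rather than by filtering.

```python
from typing import FrozenSet, NamedTuple


class Event(NamedTuple):
    time: int
    agent: str
    action: str
    obj: str
    observers: FrozenSet[str]


def perspective(narrative, chain):
    """Events visible along a chain of agents.

    () is the actual world, ("human",) is what the human has seen, and
    ("robot", "human") is what the robot believes the human has seen.
    Assumption: visible only if every agent in the chain observed the event.
    """
    return [e for e in narrative if all(a in e.observers for a in chain)]


def noticed(narrative, observer, agent, action, obj):
    """Epistemic polar question: did `observer` notice this event?"""
    return any((e.agent, e.action, e.obj) == (agent, action, obj)
               for e in perspective(narrative, (observer,)))


def believes_holding(narrative, chain, agent, obj):
    """Does the perspective `chain` conclude that `agent` holds `obj`?"""
    holder = None
    for e in sorted(perspective(narrative, chain), key=lambda e: e.time):
        if e.obj != obj:
            continue
        if e.action == "pick_up":
            holder = e.agent
        elif e.action == "put_down" and holder == e.agent:
            holder = None
    return holder == agent


BOTH = frozenset({"robot", "human"})
NARRATIVE = [
    Event(1, "robot", "pick_up", "pen", BOTH),
    # The human looks away, so only the robot observes the put-down.
    Event(2, "robot", "put_down", "pen", frozenset({"robot"})),
]

print(noticed(NARRATIVE, "human", "robot", "pick_up", "pen"))
for chain in [(), ("robot",), ("human",), ("robot", "human")]:
    print(chain, believes_holding(NARRATIVE, chain, "robot", "pen"))
```

Here the human missed the put-down and therefore holds an outdated belief that the robot still has the pen, while the two-level chain `("robot", "human")` captures that the robot can anticipate this mistaken belief.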

The axiomatisation of a domain with an expressive formal theory, such as the Event Calculus, along with the ability to perform high-level reasoning on events that change the world or an agent’s perspective on the world, enables the chatbot to give replies that are based on intuitive, well-justified conclusions. Causal, temporal, epistemic and retrospective aspects are all highly relevant for building intuitive human–robot interaction systems. “Why” questions, which we are currently working on, will further enhance the explainability of our chatbot by exploiting the structured, interpretable chain of conclusions made by the reasoner. The challenge there is to offer explanations that are both sound and minimal, in terms of relevance to what the user is interested in understanding.

Overall, our chatbot architecture offers a high degree of modularity and has great potential for expansion and improvement. Currently, it supports nested epistemic questions up to two levels deep. Future revisions may add further levels of nesting, as well as support for more question categories. Adding new question categories may open the door to greater flexibility and more applications, allowing the architecture to be used in more areas than initially envisaged.

Links:

[L1] https://socola.ics.forth.gr/

[L2] https://potassco.org/clingo/

[L3] https://wit.ai/

References:

[1] R. Kowalski and M. Sergot, “A logic-based calculus of events,” New Generation Computing, vol. 4, no. 1, pp. 67–95, 1986. doi:10.1007/BF03037383. ISSN 1882-7055. S2CID 7584513.

[2] V. Lifschitz, “What is answer set programming?,” in Proc. 23rd National Conference on Artificial Intelligence, AAAI Press, 2008, vol. 3, pp. 1594–1597.

Please contact:

Christoforos Prasatzakis, ICS-FORTH, Greece

Theodore Patkos, ICS-FORTH, Greece