by Luigi Briguglio, Francesca Morpurgo and Carmela Occhipinti (CyberEthics Lab.)

How can clinicians be held responsible for basing their decisions on diagnoses generated by artificial intelligence and derived in a way that cannot be fully understood? How can patients rely on and accept decisions if they are based on “black boxes” of data and algorithms? In the context of the MES-CoBraD project, CyberEthics Lab. defines a model for governing and assessing “Ethical Artificial Intelligence” (ETHAI).

Innovative technologies and approaches have enabled the evolution of many sectors and increased the well-being of our society, reducing the time needed to produce solutions and make them available to the public, and improving quality of life. Healthcare services have been a core part of this process, achieving impressive milestones in less than a hundred years. The COVID-19 pandemic has shown how reliable scientific research can be, producing vaccines in a relatively short time and under difficult working conditions [1].

Successful clinical care requires that all people have access to good-quality health care and that they can comply with recommended treatments. However, the ability to respond to and support the health demands of citizens depends on both the health system of a country and the economic and health structure of its society (e.g. a steadily ageing population demands more attention).

This is reflected in the global trend of innovating health care by introducing digitised and distributed “neighbourhood healthcare centres” capable of offering citizens wider access to care. Delivering better health care means more efficient and effective diagnostic intervention, which in turn allows prevention and early detection of non-communicable diseases to be better tracked. Ensuring healthy lives and promoting well-being for all at all ages is set out as Goal 3 of the United Nations 2030 Agenda for Sustainable Development [2].

In the digital era, healthcare (eHealth) and prevention actions will be characterised by: (i) preventive health care based on pervasive remote monitoring through connected devices, that is, the Internet of Things (IoT), and predictive disease detection through computing capabilities, that is, Artificial Intelligence (AI); (ii) prevention actions, continuous contact with healthcare providers and smarter medication; (iii) remote and in-hospital assistance and surgery with dedicated robotics; (iv) asset and intervention management in hospitals and healthcare centres [3].

In this context, researchers in the Multidisciplinary Expert System for the Assessment & Management of Complex Brain Disorders (MES-CoBraD) project [L1] are working together to exploit the potential of data and AI to develop a common innovative protocol for the accurate diagnosis and personalised care of complex brain diseases, with a primary focus on improving the quality of life of patients, their caregivers, and society at large.

To this end, MES-CoBraD is developing an eHealth expert system that can be used during the diagnostic process to support clinicians’ decision making, analyse data and give access to a large knowledge base through a secure data lake, which holds all data gathered in the hospitals participating in the research.

Because multidisciplinary teams are involved, MES-CoBraD is not only addressing the technological aspects concerning the definition of algorithms and data, but is also considering how to embed ethical, legal and social dimensions into this Multidisciplinary Expert System (MES).

Starting from the definition of a common framework of ethics as applied to AI and to the AI-supported treatment of complex brain diseases, the concern here is twofold:

- Designing and developing a system that will behave “ethically” and that will not deliver biased results at scale or behave as a black box. This implies the need to understand and elicit requirements to be followed by the developers of the system, as well as to specify and implement the system in compliance with ethics principles (e.g. transparency and explainability).

- Helping the clinician, as the user of this system, to reach a decision that, based on the outcomes of the system, is ethically sound. In this context, the expert system may influence the prognosis of the clinician, impact the relationship between the clinician and the patient, and impact the accountability and civil liability of the clinician. In this case, an ethical decision model has to be developed, discussed with all the involved stakeholders, and adopted.

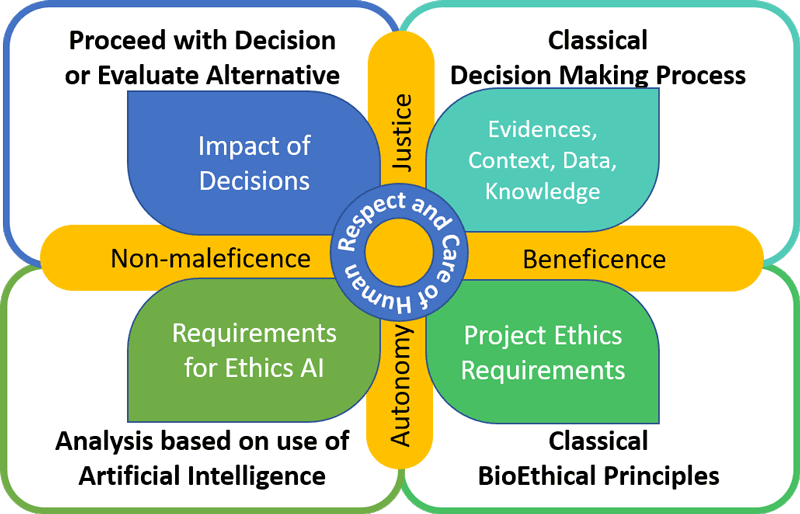

A set of ethical principles lays the foundation of the requirements and the ethical decision model, and therefore of the decisions made by users of the expert system. The usual principles of bioethics (i.e. beneficence, non-maleficence, justice and autonomy) are the most widely used when dealing with any such models. However, these leave out an important aspect of the latest approaches to health care, which draw on feminist theoretical reflection to underline the importance, especially in the context of health systems, of the principle of care, adopting a more “humanistic” stance.

For this reason, in the Ethical Artificial Intelligence (ETHAI) model that MES-CoBraD researchers are developing, care is one of the most relevant principles. It lies at the heart of the system together with the other four principles. Bringing medicine back to its foundations, even when it is so strongly enhanced by technology, requires respect and care for everything that is human (see Figure 1).

Figure 1: Graphical representation of the ETHAI model.

Beyond these principles, it is also important to consider the legal basis of regulations and standards that represent the governance layer for this disruptive technology. Indeed, following the “EU Ethics Guidelines for Trustworthy AI” (2018), the European Commission unveiled a proposal for a new Artificial Intelligence Act (AI Act) in April 2021. On 11 May 2023, the European Parliament adopted a draft negotiating mandate. This draft of the AI Act includes obligations for providers of AI foundation models, who would have to guarantee robust protection of fundamental rights, safety and the rule of law. Therefore, once the AI Act has entered into force, ethics and legal assessment frameworks, including methodologies and tools, will lay the foundation for any future AI development in the coming years, in order to assess and mitigate risks and comply with design, information and environmental requirements. Ethics and legal frameworks, including the ethical decision-making models to which ETHAI belongs, will be necessary to proceed with the CE marking, which is mandatory for placing any AI-based system on the EU market. At the same time, the standardisation committees ISO/IEC JTC 1/SC 42 “Artificial Intelligence” and CEN-CLC/JTC 21 focus on producing standards that address market needs (e.g. interoperability) and societal needs (e.g. ethics assessment), as well as underpinning EU legislation, policies, principles and values.

The ETHAI model defined in MES-CoBraD is now entering a refinement and enhancement process, based on an assessment that will be performed across four pilot use cases.

This work is part of a project that has received funding from the European Union's Horizon 2020 research and innovation programme under Grant Agreement No. 965422.

Links:

[L1] https://mes-cobrad.eu/

[L2] https://cyberethicslab.com

References:

[1] “History of flu (influenza): Outbreaks and vaccine timeline.” Mayo Foundation for Medical Education and Research (MFMER). https://www.mayoclinic.org/coronavirus-covid-19/history-disease-outbreaks-vaccine-timeline/flu (accessed 2023).

[2] “Health and population.” United Nations. https://sdgs.un.org/topics/health-and-population (accessed 2023).

[3] EPRS/STOA, “Privacy and security aspects of 5G technology,” 2022. Available at: https://doi.org/10.2861/255532

Please contact:

Luigi Briguglio, CyberEthics Lab., Italy