by Giacomo Iadarola, Fabio Martinelli (IIT-CNR) and Francesco Mercaldo (University of Molise and IIT-CNR)

Cybercriminals can use a device compromised by malware for a plethora of purposes, including the theft of confidential data, the use of the victim's computer to carry out further criminal acts, or the encryption of data to demand a ransom. Deep learning has recently been widely considered for malware detection. The main obstacle to the real-world adoption of these methods is their “black box” nature: the security analyst must trust the prediction without being able to understand why an application is detected as malicious. In this article we discuss a malware family detector that provides a mechanism for assessing the trustworthiness and explainability of its predictions. Real-world case studies are discussed to show the effectiveness of the proposed method.

In recent years, we have focused on cybercrimes perpetrated by malware that can compromise devices and IT infrastructures. To fight the cybercrime enabled by the spread of malware, researchers are developing new methodologies for malware detection, with a strong focus on artificial intelligence techniques. One of the biggest controversies in the adoption of artificial intelligence models concerns the lack of a framework for reasoning about their failures and the potential effects of those failures. Governments and industries are assigning critical tasks to artificial intelligence, but how can we ensure the safety and reliability of these models? In response to this need, the research community is moving toward interpretable models (the field of Explainable AI), which are able to explain their predictions in terms understandable to humans [1]. One of the main factors holding back the real-world adoption of these models against malware-based cybercrime is that their decisions cannot be interpreted. Without interpretation, their reliability cannot be certified, and such certification is essential for wide adoption.

We introduce a deep learning model for detecting malware families from malware represented as images, which exploits activation maps to provide model interpretability. We contextualise the proposed model on the Android platform, but the method itself is platform independent.
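The article does not detail how an application is turned into an image. A common approach in the literature, assumed here purely for illustration, reads the raw bytes of a file (for an Android app, for instance, the `classes.dex` file extracted from the APK) and lays them out row by row as pixel intensities. The helper `bytes_to_greyscale` and its default width of 256 are hypothetical choices, not the authors' settings; the sketch assumes NumPy.

```python
import math

import numpy as np


def bytes_to_greyscale(data: bytes, width: int = 256) -> np.ndarray:
    """Hypothetical helper: lay raw bytes out row by row as a greyscale image.

    Each byte (0-255) becomes one pixel intensity. The image has a fixed
    width; its height grows with the file size and the last row is
    zero-padded. The default width is an illustrative assumption.
    """
    if width <= 0:
        raise ValueError("width must be positive")
    buf = np.frombuffer(data, dtype=np.uint8)
    # At least one row, even for an empty input.
    height = max(1, math.ceil(buf.size / width))
    padded = np.zeros(height * width, dtype=np.uint8)
    padded[: buf.size] = buf
    return padded.reshape(height, width)
```

For example, `bytes_to_greyscale(Path("classes.dex").read_bytes())` would yield a 256-pixel-wide image whose height depends on the file size; in practice it would then be resized to the fixed input size expected by the CNN.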

The aim is to train a model, i.e., a Convolutional Neural Network (CNN), for malware family detection. To draw activation maps, we use the Grad-CAM algorithm [2], which generates a localisation map highlighting the regions of the image that are important for the prediction.
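Grad-CAM [2] weights each feature map A^k of a chosen convolutional layer by α_k, the spatial average of the gradient of the class score y^c with respect to that map, sums the weighted maps and applies a ReLU, i.e. ReLU(Σ_k α_k A^k). The sketch below computes that map with NumPy, assuming the activations and gradients have already been extracted from the trained CNN (for example through a framework's automatic differentiation, not shown). The function name `grad_cam` is hypothetical, and the final scaling to [0, 1] is a display convenience rather than part of the formula.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM localisation map for one convolutional layer (sketch).

    activations: feature maps A^k of the chosen conv layer, shape (K, H, W)
    gradients:   d y^c / d A^k for the target class c, same shape
    Returns an (H, W) map scaled to [0, 1] (all zeros if nothing is positive).
    """
    if activations.shape != gradients.shape or activations.ndim != 3:
        raise ValueError("expected two arrays of identical shape (K, H, W)")
    # Neuron-importance weights: global-average-pool the gradients per channel.
    alphas = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum of the K feature maps -> (H, W); the ReLU keeps only
    # regions that push the class score up.
    cam = np.maximum(np.tensordot(alphas, activations, axes=1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

For a network ending in global average pooling followed by a linear layer, the gradient of y^c at every position of A^k is simply the class weight w_k^c divided by H·W, and Grad-CAM reduces to the earlier Class Activation Mapping (CAM).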

This mechanism provides the security analyst with a way to understand why the model outputs a given prediction. This is fundamental for malware detection: malware samples belonging to the same family share parts of their code, so the corresponding areas of their images will be similar. For this reason, activation maps help to identify the area of the image that is symptomatic of the malicious behaviour exhibited by a family, thus providing trustworthiness.

We tested the model on a dataset composed of six malware families (Airpush, Dowgin, FakeInstaller, Fusob, Jisut and Mecor) [3], obtaining an accuracy of 0.98. To evaluate the trustworthiness of the classification, we exploited the activation maps. For each sample, we show the greyscale image, the activation map, and the picture obtained by overlapping the greyscale image and the activation map.
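The overlap picture can be produced by resizing the coarse activation map to the size of the greyscale image and alpha-blending the two. The sketch below assumes NumPy, uses nearest-neighbour resizing for simplicity (the Grad-CAM paper upsamples bilinearly), and takes a hypothetical blending weight `alpha`; it is not the authors' plotting code.

```python
import numpy as np


def upsample_nearest(cam: np.ndarray, shape: tuple[int, int]) -> np.ndarray:
    """Nearest-neighbour resize of a coarse (h, w) map to `shape` = (H, W)."""
    h, w = cam.shape
    H, W = shape
    rows = np.arange(H) * h // H  # source row for every target row
    cols = np.arange(W) * w // W  # source column for every target column
    return cam[rows[:, None], cols[None, :]]


def overlay(grey: np.ndarray, cam: np.ndarray, alpha: float = 0.4) -> np.ndarray:
    """Blend a uint8 greyscale image with an activation map in [0, 1].

    Returns a float array in [0, 1] with the same shape as `grey`.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    heat = upsample_nearest(cam, grey.shape)
    return (1.0 - alpha) * (grey.astype(np.float64) / 255.0) + alpha * heat
```

For display, the blended array (or the heatmap alone) would typically be rendered through a colour map, such as the blue-to-yellow scale visible in the figures.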

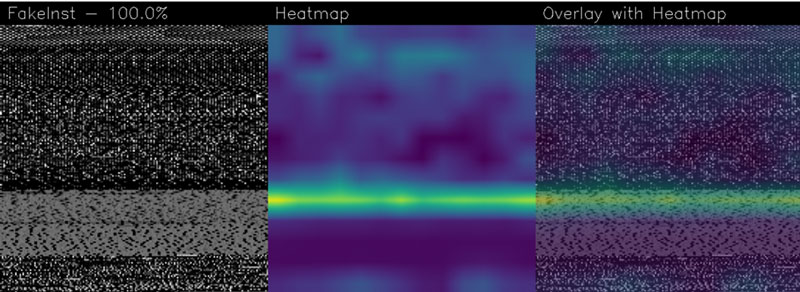

Figure 1 shows the activation map for a sample belonging to the FakeInstaller family, which is correctly detected.

Figure 1: Sample identified by 7ab97a3d710e1e089e24c0c27496ee76 hash.

The activation map exhibits an extensive dark blue area (regions of no interest to the model) and several green and yellow areas (regions symptomatic of activation).

The greyscale picture in the left part of Figure 1 shows a characteristic grey area in the middle-low part of the image. The security analyst can use the activation maps to visualise which parts of the image were the most important for the classification.
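To go from a highlighted region back to the application itself, the analyst needs to know which bytes that region corresponds to. Assuming the image was built by laying the file's bytes out row by row at a fixed width, and that the activation map covers the whole image proportionally, image row r covers bytes [r·width, (r+1)·width). The hypothetical helper `hot_byte_ranges` below uses that assumption to turn strongly activated rows into byte-offset ranges; the 0.5 threshold is an arbitrary illustrative value.

```python
import numpy as np


def hot_byte_ranges(cam: np.ndarray, image_shape: tuple[int, int],
                    n_bytes: int, threshold: float = 0.5) -> list[tuple[int, int]]:
    """Map strongly activated image rows back to half-open byte ranges.

    Assumes a row-by-row byte layout: image row r covers bytes
    [r * width, (r + 1) * width). Adjacent hot rows are merged.
    """
    height, width = image_shape
    h = cam.shape[0]
    # Peak activation of each coarse row, mapped onto every image row so that
    # a hotspot in only a few columns still marks its row as hot.
    row_score = cam.max(axis=1)[np.arange(height) * h // height]
    ranges: list[tuple[int, int]] = []
    for r in np.flatnonzero(row_score >= threshold):
        start = int(r) * width
        end = min(start + width, n_bytes)  # ignore zero padding past the file end
        if start >= end:
            continue
        if ranges and ranges[-1][1] == start:  # contiguous with previous range
            ranges[-1] = (ranges[-1][0], end)
        else:
            ranges.append((start, end))
    return ranges
```

The returned offsets could then be matched against the structure of the original file, for instance to locate the classes or methods stored in those bytes.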

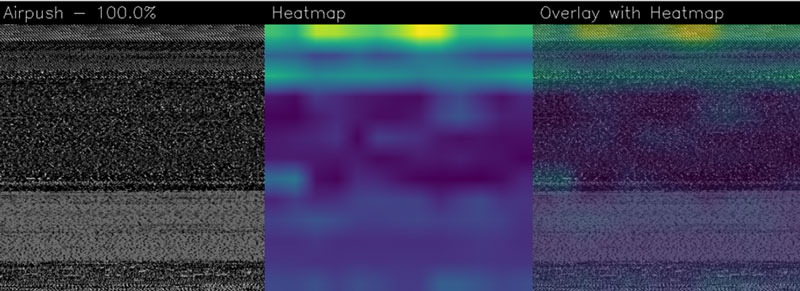

Figure 2 shows the activation map of a sample belonging to the Airpush family. Its greyscale image may look similar to those of the FakeInstaller family because of the grey area in the middle-low part of the image.

Figure 2: Sample identified by 7728411fbe86010a57fca9149fe8a69b hash.

Figure 2 shows that the model focused on a different area of the malware than in the FakeInstaller sample: instead of the grey middle-low area, the activation map highlights the top area of the image, which seems to be the discriminating area for the Airpush family.
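The visual comparison between the two families can be made quantitative by measuring how the activation mass is distributed vertically. The hypothetical helper below, assuming NumPy and an activation map such as the one produced by Grad-CAM, returns the share of total activation falling in each horizontal band (top, middle and bottom by default). Based on the two figures, one would expect a FakeInstaller-like map to concentrate its mass in the middle and lower bands and an Airpush-like map in the top band; these are illustrative expectations, not measured results.

```python
import numpy as np


def band_shares(cam: np.ndarray, n_bands: int = 3) -> np.ndarray:
    """Fraction of total activation in each horizontal band, top band first."""
    row_mass = cam.sum(axis=1)                  # activation per image row
    bands = np.array_split(row_mass, n_bands)   # contiguous groups of rows
    mass = np.array([band.sum() for band in bands])
    total = mass.sum()
    return mass / total if total > 0 else mass
```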

Artificial intelligence models are a powerful technology that will play a fundamental role in fighting cybercriminals. As the quantity of data to process keeps growing, artificial intelligence is becoming fundamental for protecting infrastructures and networks. Nevertheless, this technology suffers from bugs, inaccuracies and mistakes. We have proposed a malware detector that emphasises the importance of trustworthiness in deep learning, together with a technique through which the security analyst can understand whether a prediction can be considered reliable. Future research will investigate the source code highlighted by the activation maps.

This work has been partially supported by the EU E-CORRIDOR, EU SPARTA and EU CyberSANE projects, and by the RSE 2022 Cyber security and smart grids.

References:

[1] D. L. Parnas: “The real risks of artificial intelligence”, Communications of the ACM, vol. 60, no. 10, pp. 27–31, 2017.

[2] R. R. Selvaraju, et al.: “Grad-CAM: Visual explanations from deep networks via gradient-based localization”, in Proc. of the IEEE International Conference on Computer Vision (ICCV), pp. 618–626, 2017.

[3] G. Iadarola, et al.: “Evaluating deep learning classification reliability in Android malware family detection”, in Proc. of the 2020 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW), pp. 255–260, 2020.

Please contact:

Giacomo Iadarola, IIT-CNR, Pisa, Italy

Fabio Martinelli, IIT-CNR, Pisa, Italy

Francesco Mercaldo, University of Molise & IIT-CNR, Campobasso, Italy