by Serena Ivaldi (Inria)

Collaborative robots need to safely control their physical interaction with humans. However, to provide physical assistance to humans, robots also need to be able to predict human intent, future behaviour and movements. We are currently tackling these questions in our research within the European H2020 project AnDy [L1].

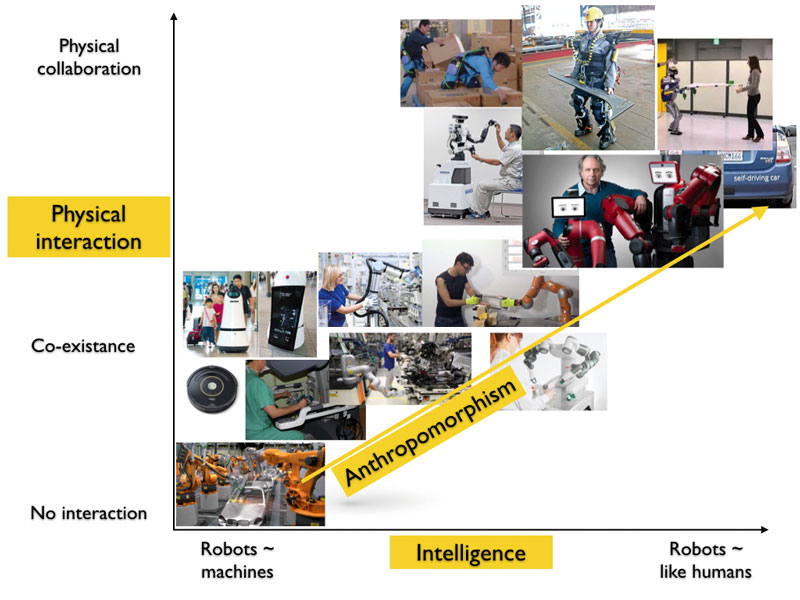

Collaborative robotics technologies are rapidly spreading in manufacturing and industry, led by two platforms: cobots and exoskeletons. The former are descendants of industrial manipulators, capable of safely interacting and “co-existing” (i.e., sharing the same workspace) with operators, while the latter are wearable robotic devices that assist operators in their motions. The introduction of these two technologies has changed the way operators may perceive interaction with robots at work: robots are no longer confined to their own areas; instead, they share space with humans, modify workstations, and influence gestures at work (see Figure 1).

The major concern when introducing these technologies was to ensure safety during physical interaction. Over recent decades, most research in cobotics has focused on collision avoidance, human-aware planning and re-planning of robot motions, contact control, and safe control of physical collaboration. This research has been funded by the European Commission in several projects, such as SAPHARI [L2] and CODYCO [L3], and has contributed to the formulation of ISO standards on safety for collaborative robots, such as ISO/TS 15066:2016 [L4].

Figure 1: The recent trend in collaborative robotics technologies in industry: from industrial robots working separately from humans, to cobots able to co-exist and safely interact with operators. More advanced forms of cobots include exoskeletons, wearable devices that provide physical assistance at the whole-body level, and more “anthropomorphic” collaborative robots that combine physical interaction with advanced collaborative skills typical of social interaction.

With the introduction of new collaborative technologies at work, however, it has become clear that collaboration cannot be reduced merely to controlling the physical interaction between human and robot. The transition from robots to cobots, motivated largely by economic factors (increased productivity and flexibility) and health factors (reduction of physical stress and musculo-skeletal diseases), raises several issues from psychological and cognitive perspectives. First, there is the problem of operators' acceptance of, and trust in, the new technologies. Second, there is the problem of achieving a more advanced form of interaction, realised by a multi-modal system that takes human cues, movements and intentions into account in the robot control loop, distinguishes intentional, work-related human gestures from non-intentional ones, makes appropriate decisions together with the human, and adapts to the human.

If we observe two humans collaborating, we quickly realise that their synchronised movements, almost like a dance, are the outcome of a complex mechanism that combines precise motor control, modelling and prediction of the partner, and anticipation of the partner’s actions and reactions. While this fluent exchange is straightforward for humans, thanks to our ability to “read” our partners, it is extremely challenging for robots.

Take, for example, two humans collaborating to move a big, bulky, heavy couch. How do the two partners synchronise to lift the couch at the same time without injuring their backs? Typically, they assume an ergonomically efficient posture, ensure a safe haptic interaction, then use a combination of verbal and non-verbal signals to synchronise their movement and carry the couch to the new location. While this collaborative action could in principle be performed by exchanging haptic cues alone, humans leverage other signals to make the partner aware of their status, intentions and upcoming actions. Visual feedback is used to estimate the partner’s current posture and effort, non-verbal cues such as directed gaze are used to communicate the intended direction of movement and the final position, and speech is used to provide high-level feedback and correct any mistakes.

In other words, collaboration undoubtedly needs good physical interaction, but it also needs to leverage social interaction: it is a complex bidirectional process that works efficiently if each partner has a good model of the other and is able to predict the other’s intentions, future movements and efforts. Such a capacity is a hallmark of the human central nervous system, which uses internal models both to plan accurate actions and to recognise those of the partner.

But how can these abilities be translated into a collaborative robotic system? This is one of the questions that we are currently addressing in our research, funded by the European H2020 project AnDy. AnDy involves several European research institutes (IIT in Italy, Inria in France, DLR in Germany, JSI in Slovenia) and companies (XSens Technologies, IMK automotive GmbH, Otto Bock Healthcare GmbH, AnyBody Technology). The main objective of the AnDy project is to create new hardware and software technologies that enable robots not only to estimate the motion of humans, but also to fully describe and predict the whole-body dynamics of the interaction between humans and robots. The final goal is to create anticipatory robot controllers that take into account the prediction of human dynamics during collaboration to provide appropriate physical assistance.

Three different collaborative platforms are studied in AnDy: industrial cobots, exoskeletons and humanoid robots. Together they allow researchers to study collaboration from different angles, as some platforms are more critical in terms of physical interaction (e.g., exoskeletons) and others in terms of cognitive interaction (e.g., cobots and humanoids).

The main objective of exoskeletons is to provide physical assistance and reduce the risk of work-related musculo-skeletal diseases. It is critical that an exoskeleton is safe, assistive when needed, and “transparent” when not. One of the challenges for an exoskeleton is detecting the current and future human activity, and the onset of activities that require assistance. While in the laboratory this can easily be detected with several sensors (e.g., EMG sensors, motion-tracking markers), it is more difficult to achieve in the field with a reduced set of sensors. Acceptance of this kind of technology also requires a systematic evaluation of the effects of the exoskeleton on the human body, in terms of movement, effort and ergonomics, but also in terms of perceived utility, trust towards the device and the cognitive effort of using it. In a recent paper [1], we listed the ethical issues related to the acceptance of this technology.

For a collaborative robot (a manipulator or a more complex articulated robot such as a humanoid), the problems are similar in terms of physical interaction and safety. The cobot needs to be able to interact safely with the human and provide assistance when needed. Typically, cobots provide strength and endurance (e.g., they can be used to lift heavy tools and end-effectors) that complement human dexterity, flexibility and cognitive abilities in solving complex tasks. In AnDy we are focusing on the type of assistance that can help improve the ergonomics of the human operator at work. To provide suitable assistance, the robot needs to perceive human posture and efforts, estimate the task currently performed by the operator, and predict future movements and efforts. Again, this is easily achieved in laboratory settings with RGB-D cameras, force plates and EMG sensors, but it is more challenging, if not impossible, in real working conditions such as manufacturing lines, with many occlusions and reduced external sensing. In AnDy, we exploited wearable sensors for postural estimation and activity recognition, an approach that also proved feasible in a real manufacturing line [2]. For the problem of predicting the future intended movement, we proposed formulating it as inference over a probabilistic skill model given early observations of the action. We initially leveraged haptic information, but soon developed a multi-modal approach to predicting human intention [3]. Inspired by the way humans communicate during collaboration, we noted that anticipatory directed gaze is used to signal the target location of goal-directed actions, while haptic information is used to start the cooperative movement and, where needed, to provide corrections. This information is being used as input to the robot controller, so that the planned robot motions take into account the prediction of human intent.
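The inference step described above can be illustrated with Probabilistic Movement Primitives (ProMPs), the skill model named in the title of [3]. The following is a minimal one-dimensional sketch under stated assumptions, not the AnDy implementation: it uses synthetic minimum-jerk demonstrations, assumes the phase (duration) of the new movement is already known, and uses hypothetical helper names (`rbf_basis`, `learn_promp`, `condition_promp`, `demo`). A Gaussian over basis-function weights is learned from demonstrations and then conditioned on the first samples of a new movement, which stand in for the early haptic observations. As an illustrative assumption only, a gaze-derived target estimate (`gaze_goal`) is fused by treating it as one extra, noisier observation at the end of the movement; [3] may combine the modalities differently.

```python
import numpy as np


def rbf_basis(phase, n_basis=10, bandwidth=0.02):
    """Normalised Gaussian basis functions at phase values in [0, 1].

    `bandwidth` is the variance of each Gaussian (an assumed value).
    Returns an array of shape (len(phase), n_basis).
    """
    centers = np.linspace(0.0, 1.0, n_basis)
    phi = np.exp(-(phase[:, None] - centers[None, :]) ** 2 / (2.0 * bandwidth))
    return phi / phi.sum(axis=1, keepdims=True)


def learn_promp(demos, n_basis=10, ridge=1e-6):
    """Fit one weight vector per demonstration, then a Gaussian N(mu_w, sigma_w) over weights."""
    weights = []
    for y in demos:
        phi = rbf_basis(np.linspace(0.0, 1.0, len(y)), n_basis)
        # Ridge-regularised least squares: (phi^T phi + ridge I) w = phi^T y
        weights.append(np.linalg.solve(phi.T @ phi + ridge * np.eye(n_basis), phi.T @ y))
    weights = np.array(weights)
    sigma_w = np.cov(weights, rowvar=False) + 1e-6 * np.eye(n_basis)
    return weights.mean(axis=0), sigma_w


def condition_promp(mu_w, sigma_w, phases, values, noise_var):
    """Gaussian conditioning of the weight prior on observations y = phi(phase) @ w + noise."""
    phases = np.asarray(phases, dtype=float)
    values = np.asarray(values, dtype=float)
    phi_o = rbf_basis(phases, len(mu_w))
    noise = np.diag(np.broadcast_to(np.asarray(noise_var, dtype=float), values.shape))
    s = noise + phi_o @ sigma_w @ phi_o.T          # predictive covariance of the observations
    gain = np.linalg.solve(s, phi_o @ sigma_w).T   # equals sigma_w @ phi_o.T @ inv(s)
    mu_post = mu_w + gain @ (values - phi_o @ mu_w)
    sigma_post = sigma_w - gain @ phi_o @ sigma_w
    return mu_post, sigma_post


def demo(true_goal=0.7, gaze_goal=0.72, n_obs=30, seed=0):
    """Predict the end point of a partially observed reaching movement (synthetic data)."""
    rng = np.random.default_rng(seed)
    s = np.linspace(0.0, 1.0, 100)
    profile = 10 * s**3 - 15 * s**4 + 6 * s**5    # minimum-jerk shape from 0 to 1
    demos = [g * profile + 0.005 * rng.standard_normal(s.size)
             for g in rng.uniform(0.2, 0.8, size=20)]
    mu_w, sigma_w = learn_promp(demos)
    phi_end = rbf_basis(np.array([1.0]), len(mu_w))[0]

    # Early trajectory only (standing in for the haptic channel): first n_obs samples.
    observed = true_goal * profile[:n_obs]
    mu_h, _ = condition_promp(mu_w, sigma_w, s[:n_obs], observed, 1e-4)

    # Multi-modal (assumed fusion): add the gaze-based target as a noisier observation at phase 1.
    phases = np.append(s[:n_obs], 1.0)
    values = np.append(observed, gaze_goal)
    noise = np.append(np.full(n_obs, 1e-4), 4e-4)
    mu_f, _ = condition_promp(mu_w, sigma_w, phases, values, noise)

    # Predicted end points: prior mean, early-trajectory posterior, fused posterior.
    return phi_end @ mu_w, phi_end @ mu_h, phi_end @ mu_f


if __name__ == "__main__":
    prior_end, haptic_end, fused_end = demo()
    print(f"prior: {prior_end:.3f}  early obs: {haptic_end:.3f}  early obs + gaze: {fused_end:.3f}")
```

Because conditioning is a closed-form Gaussian update, the predicted end point can be refreshed every time a new sample arrives. Adding the gaze observation can only shrink the posterior covariance, which matters most early in the movement, when the observed trajectory alone is still ambiguous.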

This research was performed with the humanoid robot iCub, an open-source platform for research in intelligent cognitive systems. iCub is equipped with many sensors, which make it valuable for conducting human-robot interaction studies. Humanoid platforms such as iCub may seem far from industrial applications; however, many collaborative robots are now being equipped with a “head” and sometimes two arms, which makes them increasingly anthropomorphic and very close to a humanoid (see Figure 1). Operators may therefore be driven to interact with them differently from the way they interact with cobots or manipulators: simply adding a head, with a face that displays the robot’s status or moves along with the human, may create the illusion of a more “intelligent” form of human-robot interaction that goes beyond physical assistance. Expectations may increase, both in terms of the complexity of the interaction and the capacity of the system to react properly to the human and communicate its status. When such interactions involve collaborative or decision-making tasks, we believe it is important to take a human-centred approach and make sure that operators trust the system, learn how to use it, provide feedback and, finally, evaluate the system. As roboticists, we often imagine that humans wish to interact with intelligent systems that are able to anticipate and adapt, but our recent experiments show that when humans see the robot as a cognitive and social agent, they tend to mistrust it [4]. Our take-home message is that we need to develop collaborative robotics technologies that are co-designed and validated by end-users; otherwise, we run the risk of developing robots that fail to gain acceptance and adoption.

Links:

[L1] www.andy-project.eu

[L2] www.saphari.eu/

[L3] www.codyco.eu

[L4] https://kwz.me/htK

References:

[1] P. Maurice, et al.: “Ethical and Social Considerations for the introduction of Human-Centered Technologies at Work”, IEEE ARSO, 2018.

[2] A. Malaisé, et al.: “Activity recognition with multiple wearable sensors for industrial applications”, in Proc. 11th Int. Conf. on Advances in Computer-Human Interactions (ACHI), 2018.

[3] O. Dermy, F. Charpillet, S. Ivaldi: “Multi-modal Intention Prediction with Probabilistic Movement Primitives”, in: F. Ficuciello, F. Ruggiero, A. Finzi (eds) “Human Friendly Robotics”, Springer Proc. in Advanced Robotics, vol. 7. Springer, 2019.

[4] I. Gaudiello, et al.: “Trust as indicator of robot functional and social acceptance. An experimental study on user conformation to the iCub’s answers”, Computers in Human Behavior, vol. 61, pp. 633-655, 2016.

Please contact:

Serena Ivaldi

Inria, France

+33 (0)354958475

https://members.loria.fr/SIvaldi/