by Amedeo Cesta, Gabriella Cortellessa, Andrea Orlandini and Alessandro Umbrico (ISTC-CNR)

Effective human-robot interaction in real-world environments requires robotic agents endowed with advanced cognitive features and more flexible behaviours than classical robot programming approaches allow. Artificial intelligence can play a key role in enabling suitable reasoning abilities and adaptable solutions. This article presents a research initiative that pursues a hybrid control approach by integrating semantic technologies with automated planning and execution techniques. The main objective is to allow a generic assistive robotic agent (for elderly people) to dynamically infer knowledge about the status of a user and the environment, and to provide personalised support accordingly.

Recent advances in robotic technologies are opening new opportunities for robotic applications. Robots are entering working and living environments, sharing spaces and tasks with humans. The co-presence of humans and robots in increasingly common situations poses new research challenges across different fields, paving the way for multidisciplinary research initiatives. On the one hand, robots interacting with humans in environments designed for people require higher levels of safety, reliability, robustness and flexibility. On the other hand, a robot must be able to interact with humans at different levels, i.e., behaving in a “human-compliant way” (social behaviours) and collaborating with humans to carry out tasks with shared goals.

Artificial intelligence (AI) techniques play an important role in such contexts, providing suitable methods to support tighter and more flexible interactions between robots and humans. In this very wide area, several research trends, including social robots, assistive robots and human-robot collaboration, focus on the co-presence and non-trivial interactions of robots and humans from different perspectives and with different objectives.

The Planning and Scheduling Technology (PST) Laboratory [L1] at the CNR Institute for Cognitive Science and Technologies (ISTC-CNR) has considerable know-how on this important research topic. The group has worked on several successful research projects that offered good opportunities to investigate innovative AI-based techniques for flexible and safe human-robot interaction. Two research projects warrant a mention: (i) GiraffPlus [1, L2] was a research project (2012-2014) aimed at developing innovative services for the long-term, continuous monitoring of senior people using sensor networks, intelligent software and a telepresence robot (the Giraff robot). PST developed novel techniques to deliver personalised healthcare services through the system, supporting seniors with different needs directly in their homes. (ii) FourByThree [2, L3] is a recently completed H2020 research project (2014-2017) whose aim was to develop novel software and hardware solutions (from low-level control to multi-modal interaction) for safe human-robot collaboration in manufacturing scenarios. In this project, the PST group developed and successfully applied a planning and execution framework called PLATINUm [3] for coordinating collaborative assembly processes between a lightweight robot and a human worker in a fenceless robotic cell.

Building on this experience, the PST group started a research initiative called KOaLa (Knowledge-based cOntinuous Loop) to enhance the capabilities and autonomy of an assistive robot such as the Giraff robot. In the GiraffPlus scenarios, a sensor network monitors the activities inside a senior’s house and provides a continuous flow of data about both environmental features and some physiological parameters of the person that carers would like to monitor. Such a rich set of data can be used to detect the activities a person is performing or the events occurring inside the house. KOaLa aims to use semantic technologies and the Web Ontology Language (OWL) to endow an assistive robot with the cognitive capabilities needed to reason on the available data. Semantic technologies allow an assistive robot to build an internal abstraction of the environment that can be dynamically analysed to understand what is happening inside the house and to make decisions accordingly.
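To make the idea of an internal abstraction more concrete, the following Python sketch turns raw sensor readings into subject-predicate-object triples and applies simple rules to recognise an activity and an event. It is a minimal illustration under stated assumptions, not KOaLa's implementation: the predicate names `madeBySensor`, `observedProperty` and `hasSimpleResult` are borrowed loosely from the W3C SOSA/SSN vocabulary, while everything else (`Reading`, `SUBCLASS_OF`, `THRESHOLDS`, `infer_events`, the room names and the numeric thresholds) is hypothetical. A real OWL-based system would rely on an ontology and a reasoner rather than hand-coded Python rules.

```python
from dataclasses import dataclass

# Hypothetical property taxonomy, loosely inspired by SSN/DUL.
# Class names are illustrative, not taken from the KOaLa ontology.
SUBCLASS_OF = {
    "Temperature": "EnvironmentalProperty",
    "Presence": "EnvironmentalProperty",
    "BloodPressure": "PhysiologicalParameter",
    "EnvironmentalProperty": "ObservableProperty",
    "PhysiologicalParameter": "ObservableProperty",
}

# Hypothetical alert thresholds for physiological parameters
THRESHOLDS = {"BloodPressure": 140.0}


@dataclass(frozen=True)
class Reading:
    sensor: str     # hypothetical sensor identifier
    prop: str       # class of the observed property
    location: str   # room where the sensor is installed
    value: float


def ancestors(cls: str) -> list:
    """Follow the subclass chain upwards (single inheritance only)."""
    chain = []
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        chain.append(cls)
    return chain


def abstract(readings) -> set:
    """Turn raw readings into (subject, predicate, object) triples."""
    kb = set()
    for i, r in enumerate(readings):
        obs = f"obs{i}"
        kb |= {
            (obs, "madeBySensor", r.sensor),
            (obs, "observedProperty", r.prop),
            (obs, "location", r.location),
            (obs, "hasSimpleResult", r.value),
        }
        # Materialise the property class and its superclasses as triples
        kb |= {(obs, "observesKindOf", c) for c in [r.prop, *ancestors(r.prop)]}
    return kb


def value_of(kb, subject, predicate):
    """Return the object of the (single) matching triple."""
    return next(o for s, p, o in kb if s == subject and p == predicate)


def infer_events(kb) -> set:
    """Apply two hypothetical rules to derive events from the abstraction."""
    def of_kind(cls):
        return [s for s, p, o in kb if p == "observesKindOf" and o == cls]

    events = set()
    # Rule 1: presence in a room whose temperature exceeds 40 -> Cooking
    for p in of_kind("Presence"):
        room = value_of(kb, p, "location")
        if value_of(kb, p, "hasSimpleResult") < 1:
            continue
        for t in of_kind("Temperature"):
            if (value_of(kb, t, "location") == room
                    and value_of(kb, t, "hasSimpleResult") > 40):
                events.add((room, "hostsActivity", "Cooking"))
    # Rule 2: any physiological parameter above its threshold -> alert event
    for obs in of_kind("PhysiologicalParameter"):
        prop = value_of(kb, obs, "observedProperty")
        if value_of(kb, obs, "hasSimpleResult") > THRESHOLDS.get(prop, float("inf")):
            events.add(("user", "hasEvent", f"High{prop}"))
    return events


readings = [
    Reading("kitchen_pir", "Presence", "kitchen", 1.0),
    Reading("stove_temp", "Temperature", "kitchen", 55.0),
    Reading("bp_cuff", "BloodPressure", "bedroom", 150.0),
]
print(sorted(infer_events(abstract(readings))))
# → [('kitchen', 'hostsActivity', 'Cooking'), ('user', 'hasEvent', 'HighBloodPressure')]
```

Materialising the superclasses as triples lets Rule 2 ask for any `PhysiologicalParameter` without enumerating its subclasses, a crude stand-in for the subsumption reasoning that an OWL reasoner provides over a proper ontology.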

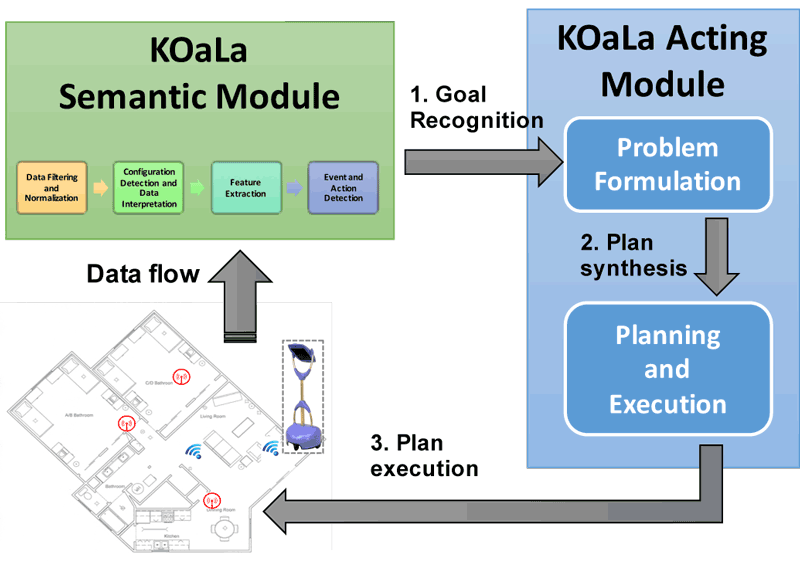

Figure 1: The KOaLa control approach.

Figure 1 depicts the KOaLa approach. It proposes a cognitive architecture capable of integrating two types of knowledge inside a unified hybrid control process: (i) knowledge about the environment and the events or activities that can be recognised; (ii) knowledge about the functional capabilities of a robot, which determine the actions the robot can perform inside the considered environment. The envisaged hybrid control approach integrates knowledge reasoning, automated planning and execution technologies to allow a robot to autonomously analyse the environment and proactively execute actions. Specifically, the semantic module is in charge of interpreting sensor data and processing the resulting information to infer knowledge about the environment. It leverages a dedicated ontology (the KOaLa ontology) that defines a clear semantics for data coming from the environment. The KOaLa ontology builds on the standard Semantic Sensor Network (SSN) ontology and the foundational DOLCE Ultra Light (DUL) ontology. The acting module is in charge of synthesising and executing the robot actions needed to achieve a desired caring objective. A goal triggering process connects the semantic module to the acting module. It runs as a background process that continuously analyses the knowledge about the environment in order to recognise relevant situations requiring the Giraff robot to proactively execute tasks (i.e., to pursue goals that respond to specific user needs).
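The goal triggering and acting steps can be sketched as follows. The Python code assumes a hypothetical table that maps recognised situations (as triples) to caring goals, and a hypothetical set of robot capabilities written as STRIPS-style actions with preconditions, add effects and delete effects; a breadth-first forward search then synthesises an action sequence that achieves the triggered goals. This planner is a deliberately simple stand-in, not the planning and execution technology used in KOaLa, and all names (`TRIGGERS`, `ACTIONS`, `trigger_goals`, `plan`, `execute`) are illustrative.

```python
from collections import deque

# Hypothetical table: recognised situation (triple) -> caring goals to achieve
TRIGGERS = {
    ("user", "hasEvent", "HighBloodPressure"): {"carer_notified", "user_reminded"},
    ("kitchen", "hostsActivity", "Cooking"): set(),  # normal activity, no goal
}

# Hypothetical robot capabilities as STRIPS-style actions:
# name -> (preconditions, add effects, delete effects)
ACTIONS = {
    "goto_user": (frozenset(), frozenset({"robot_near_user"}), frozenset({"robot_at_dock"})),
    "start_call": (frozenset({"robot_near_user"}), frozenset({"call_open"}), frozenset()),
    "notify_carer": (frozenset({"call_open"}), frozenset({"carer_notified"}), frozenset()),
    "give_reminder": (frozenset({"robot_near_user"}), frozenset({"user_reminded"}), frozenset()),
}


def trigger_goals(events):
    """Goal triggering: collect the goals required by every recognised situation."""
    goals = set()
    for event in events:
        goals |= TRIGGERS.get(event, set())
    return goals


def plan(state, goals):
    """Breadth-first forward search; returns a shortest action list or None."""
    start = frozenset(state)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        current, steps = frontier.popleft()
        if goals <= current:
            return steps
        for name, (pre, add, delete) in sorted(ACTIONS.items()):
            if pre <= current:
                nxt = (current - delete) | add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None


def execute(state, steps):
    """Stand-in for execution: check preconditions and apply effects in order."""
    current = frozenset(state)
    for name in steps:
        pre, add, delete = ACTIONS[name]
        if not pre <= current:
            raise RuntimeError(f"precondition of {name} not satisfied")
        current = (current - delete) | add
    return current


events = {("user", "hasEvent", "HighBloodPressure"), ("kitchen", "hostsActivity", "Cooking")}
goals = trigger_goals(events)
steps = plan({"robot_at_dock"}, goals)
print(steps)
print(goals <= execute({"robot_at_dock"}, steps))  # → True
```

In a deployment, `trigger_goals` would be re-evaluated by the background process whenever the semantic module derives new knowledge; here it is called once on a fixed set of events. A situation may also map to no goal at all, as with ordinary cooking activity in this sketch.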

The key point of KOaLa is the integration of heterogeneous AI techniques within a unified monitoring and control process. This tight integration provides a robot with the cognitive capabilities needed to generate knowledge from its sensing functions, reason on such knowledge to make decisions, and dynamically adapt its behaviour.

Links:

[L1] http://www.istc.cnr.it/it/group/pst

[L2] http://www.giraffplus.eu/

[L3] http://fourbythree.eu/

References:

[1] S. Coradeschi et al.: “GiraffPlus: Combining social interaction and long term monitoring for promoting independent living”, 6th International Conference on Human System Interactions (HSI), Sopot, 2013, pp. 578-585. doi: 10.1109/HSI.2013.6577883

[2] I. Maurtua et al.: “FourByThree: Imagine humans and robots working hand in hand”, IEEE 21st International Conference on Emerging Technologies and Factory Automation (ETFA), Berlin, 2016. doi: 10.1109/ETFA.2016.7733583

[3] A. Umbrico et al.: “PLATINUm: A New Framework for Planning and Acting”, in: F. Esposito et al. (eds) AI*IA 2017 Advances in Artificial Intelligence, Springer LNCS, vol. 10640, 2017.

Please contact:

Amedeo Cesta, ISTC-CNR, Italy