by Christophe Ponsard, Marie d’Udekem-Gevers (University of Namur) and Gaelle De Cupere (NAM-IP)

Today, digitalisation has nearly achieved global coverage, but it still falls short of ensuring fair and inclusive access for all citizens, regardless of origin, age, or gender. Here, we provide a historical perspective that highlights how major biases have emerged over time in computing, how they are being addressed, and which barriers remain to be overcome.

Digitalisation has transformed nearly every aspect of contemporary life, reshaping the way we communicate, learn, work, travel, and entertain ourselves. With near-global infrastructure, one would expect digital inclusiveness, i.e. fair and effective access to digital technologies for everyone, regardless of their origin, social background, age, or gender. Yet this promise remains only partially fulfilled because of structural and historical biases embedded in computing systems, which systematically and unfairly discriminate against certain individuals or groups in favour of others. In digital contexts, bias can be categorised according to its origin: pre-existing in society (e.g. cultural, racial, gender-related, or impairment-related), technologically induced (by algorithms, decision-making processes, or underlying datasets), or emerging from the evolving usage context (typically in user interfaces and interactions).

If unchecked, such biases can reinforce themselves through feedback loops: an AI model trained on a dataset specific to one culture (e.g. face recognition trained mostly on white faces) will carry that bias into its behaviour. It is thus important to look at pre-existing biases, to see when they were acknowledged and how they evolved over time, possibly in response to past measures. We adopt here a historical perspective provided by experts from our computer museum [L1]. On this basis, effective actions can be analysed to break free from those biases and achieve a more inclusive digital future. To illustrate our approach, let us analyse three cases of bias.

First, a strong pre-existing bias concerns ethnicity. It is evident from the early history of punch card technology: the Hollerith machine of 1890 recorded census information about possible Black ancestry up to the third generation. Starting in the late 1960s, credit scoring systems exhibited clear discrimination through racial weighting. Although this practice was banned in the US by an act in the 1970s, computerised credit files enabled more complex and opaque statistical scoring methods that could still infer such information indirectly. A recent study confirms that credit discrimination is still observed in many countries due to biased datasets or machine learning algorithms, affecting specific groups such as women, Black people, and Latinos [1].

A second pre-existing bias is gender bias, which has deep cultural and historical roots that affect technology and information systems. Social norms have often shaped the perception of who is “fit” for certain kinds of work, e.g. in science, technology, engineering and mathematics (STEM). Even when women excelled, their contributions were often overlooked or appropriated. This is known as the Matilda effect [2], and it has meant that recognition often came late, for example through belated honours or Wikipedia updates. In fact, in the early days of computers, women often did the coding, but this work was considered secondary until it gained interest as “software engineering”, a term coined by the pioneer Margaret Hamilton. Women’s involvement grew until the mid-1980s, when the microcomputer reinforced the male “geek” stereotype and reduced their presence as students and educators in those fields [L2]. Tackling the issue requires investment not only in early education, but also in wider societal and professional initiatives. From childhood, girls should be encouraged to explore STEM through mentorship, inclusive curricula, and visible role models.

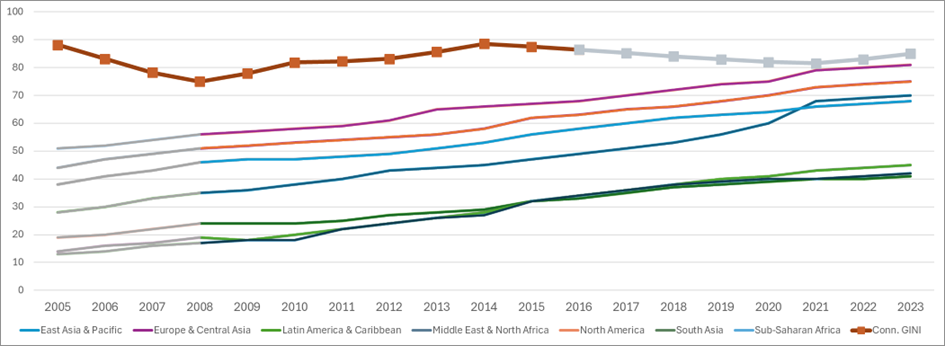

Third, access bias is technologically induced. It emerged with the rise of microcomputers from the 1970s onwards and follows geographic and socio-economic lines. Although microcomputers were originally motivated by social goals, the early machines were primarily accessible to urban and well-off Western populations, leaving rural and developing regions largely excluded. Consequently, design focused on Western culture and language, an emerging bias that further reduced accessibility. With the development of the Web in the 1990s, these disparities deepened: connectivity, infrastructure, and digital skills remained concentrated in cities and wealthier nations, while rural areas and much of the developing world lagged behind. Affordable mobile devices helped to bridge the access gap, but not fully, because of limitations in functionality, data costs, and required digital skills. The seven bottom curves of Figure 1, from the Digital Planet Report 2025 [L3], show a rise in global digital parity but persistent regional disparities. The top curve has a different scale and focuses solely on technology. It reveals short-term increases in disparities during the broadband rollout in the early 2000s and the rural high-speed mobile deployment after 2014 [3]. Even global technologies such as satellite constellations do not guarantee equal access, as they remain controlled by commercial corporations.

Figure 1: Evolution of digital parity from 2005 to 2023 (higher is better; data in grey are extrapolated).

The key takeaway is that technology itself is not the root problem; instead, underlying inequities must be addressed at social, economic, and policy levels, through measures such as education, support for entrepreneurship, and digital policies for universal access. Our museum is involved in several concrete local actions with the University of Namur. We are especially proud of our STEM workshops where boys and girls from primary and secondary school can discover the inner workings of a computer, learn about careers in computing without gender preconceptions, learn to spot media produced by generative AI, and stay secure online. This is complemented by an exhibition and guided tour highlighting women role models. All these activities are led by a team diverse in gender, age, and culture, and inclusive of people with disabilities.

Links:

[L1] https://www.nam-ip.be

[L2] https://cacm.acm.org/research/dynamics-of-gender-bias-in-computing

[L3] https://digitalplanet.tufts.edu/wp-content/uploads/2025/dei/Digital-Evolution-Index-2025.pdf

References:

[1] A. C. B. Garcia et al., “Algorithmic discrimination in the credit domain: What do we know about it?,” AI & Society, vol. 39, no. 4, 2023.

[2] M. W. Rossiter, “The Matthew/Matilda effect in science,” Social Studies of Science, vol. 23, no. 2, 1993.

[3] M. Hilbert, “The bad news is that the digital access divide is here to stay: Domestically installed bandwidths among 172 countries for 1986–2014,” Telecommunications Policy, vol. 40, no. 6, 2016.

Please contact:

Christophe Ponsard

NAM-IP, University of Namur, Belgium