by Akira Campbell (Inria), Thomas Kleinbauer (Saarland University), Marc Tommasi (Inria) and Emmanuel Vincent (Inria)

‘Cost-effective, Multilingual, Privacy-driven voice-enabled Services’ (COMPRISE) is a Horizon 2020 project that provides tools to facilitate the deployment of conversational AI while maintaining the European values of privacy, accountability and inclusiveness. A major aim of the project is to provide the means for app developers to not only add voice-based interaction to their apps but also to facilitate the improvement of the underlying AI models in various European dialects and languages while maintaining a high level of data privacy.

In the past 10 years, a shift has occurred in how average users interact with software and services. Rather than monitors, keyboards and mice, the public now often uses smaller interfaces such as smartphones, home appliances and smart speakers that include a voice interaction method. Once limited to writers, translators, pilots or people with physical disabilities, voice interaction is now used by the general public with little to no training. In many cases, the average consumer thinks that the ‘effort-reward’ ratio is better than that of traditional interfaces. This is thanks to hardware and communication infrastructure improvements, but also to improved Speech-to-Text (STT) and Natural Language Understanding (NLU) models that understand the user’s query better and, as a result, lead to better replies from the dialogue manager.

This improvement has a cost: the amount of annotated data needed to train STT and NLU models has grown, reaching 10,000 hours or more for STT. Data is required not only in one language, but preferably in each language and each dialect or accent. Furthermore, domain-specific expressions and task-specific language details need to be understood. To create and improve voice-based systems, it is essential to obtain a broad range of in-domain data and include all categories of the population to minimise the digital gap. This is typically achieved by storing all user queries, manually annotating some of them, and using them as training data.

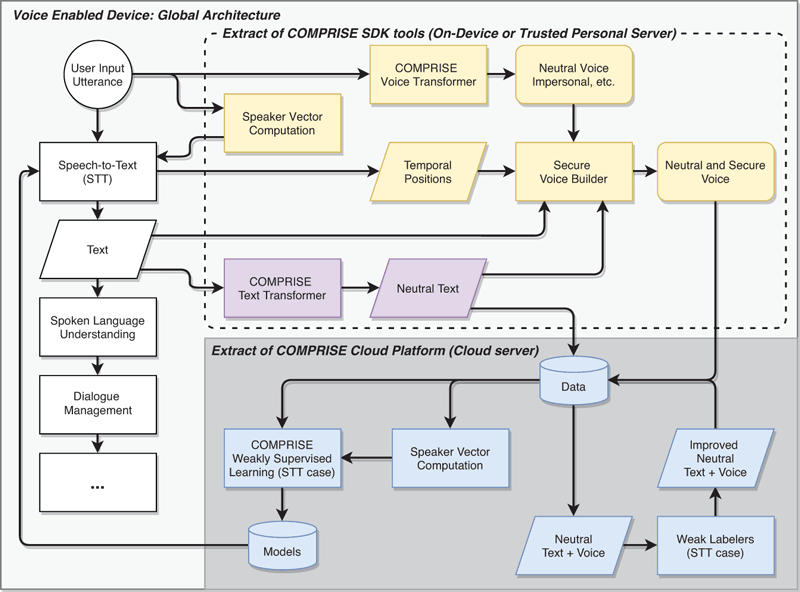

This requirement raises two major concerns: cost and privacy. Hiring annotators to label the collected data leads to huge costs, and storing user queries puts the privacy of users at risk. To address both concerns, the COMPRISE [L1] solution is designed to reduce cost and preserve privacy by design (Figure 1).

Figure 1: Overview of the global architecture. Privacy is ensured by running all computations on the user's device or a trusted personal server, with only the anonymised data uploaded to the COMPRISE Cloud Platform.

Privacy and utility must be finely balanced. There is no point in creating an anonymisation framework if the resulting data is unusable. Voice data not only needs to be transcribed and annotated; it must also retain its acoustic and linguistic characteristics so that it can be used to train STT and NLU models. Furthermore, voice interaction involves data that is not only biometric but also contains details that can be used to profile the user, such as gender, profession, religion, race, place of origin, sexual orientation, medical details, mental health, etc. These details should be kept for language model training but altered to guard user privacy, for example, by mapping the voice to another pseudo speaker to keep the speaker traits but disconnect them from the real identity of the speaker. Hence the creation of the COMPRISE Voice Transformer and the COMPRISE Text Transformer.

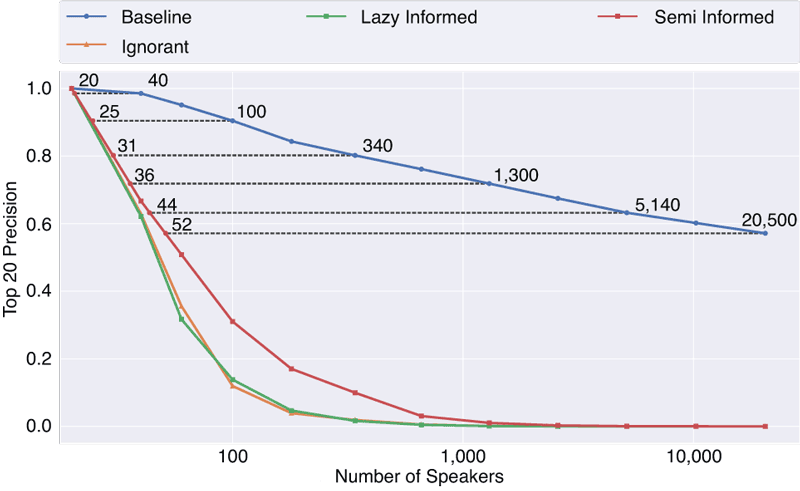

The Voice Transformer replaces the characteristics that make the user’s voice identifiable with those of a different, random speaker while keeping the original words and prosody [1]. It uses a method called x-vector based voice conversion [2], which preserves the diversity of speech and, when the transformed data is used to train STT models, provides results comparable to those obtained with the original non-transformed data. Importantly, it reduces the precision of user identification among a population of 100 users by approximately 60–80% when compared to non-transformed data. When the population size is increased to 1,000 user voices, the precision is reduced to almost zero (Figure 2).

Figure 2: Top-20 precision of speaker identification for different attackers as a function of the number of speakers in the population. The numbers of speakers needed before anonymisation (N on blue curve) and after anonymisation (n on red curve) to achieve an equivalent drop in precision are highlighted.
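To make the metric in Figure 2 concrete, here is a minimal sketch of how a top-k precision could be computed. It assumes that top-k precision means the fraction of test utterances whose true speaker appears among the attacker's k best-scoring candidates; the function name, the score format and the toy numbers are illustrative assumptions, not taken from the COMPRISE evaluation code.

```python
def top_k_precision(scores, true_ids, k=20):
    """Fraction of trials where the true speaker is among the k best candidates.

    scores   -- one dict per test utterance, mapping a candidate speaker id to
                the attacker's similarity score (higher means more similar)
    true_ids -- the real speaker id of each test utterance, in the same order
    """
    if not true_ids or len(scores) != len(true_ids):
        raise ValueError("scores and true_ids must be non-empty and aligned")
    hits = 0
    for candidates, true_id in zip(scores, true_ids):
        # Rank candidate speakers from most to least similar and keep the top k.
        ranked = sorted(candidates, key=candidates.get, reverse=True)[:k]
        hits += true_id in ranked
    return hits / len(true_ids)


# Toy example with three enrolled speakers and two test utterances.
toy_scores = [
    {"a": 0.9, "b": 0.1, "c": 0.3},  # true speaker "a" is ranked first
    {"a": 0.8, "b": 0.2, "c": 0.5},  # true speaker "b" is ranked last
]
print(top_k_precision(toy_scores, ["a", "b"], k=1))  # 0.5
```

With such a measure, a larger population gives the attacker more candidates to confuse with the true speaker, which is consistent with the drop in precision as the number of speakers grows.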

The Text Transformer replaces sensitive words, such as names, places and organisations, with benign alternatives to de-identify text [3]. It offers different replacement strategies, which users can select. For instance, the replacement could be an abstract symbol, akin to the blacking out of words seen in official documents. A more sophisticated strategy is to replace problematic content with randomly chosen words of the same type, say, a person's name with another person's name. For a number of NLU tasks, models trained on data privacy-enhanced by the Text Transformer have been shown to perform on par with models trained on untransformed data [3].
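The two replacement strategies described above can be sketched as follows. This is a simplified illustration, not the Text Transformer's actual implementation or API: it assumes the sensitive words have already been tagged with an entity type, and the function name, label names and replacement pools are hypothetical.

```python
import random

# Hypothetical replacement pools; a real system would use much larger lists.
REPLACEMENTS = {
    "PERSON": ["Alex Martin", "Sam Keller", "Robin Dubois"],
    "LOCATION": ["Lyon", "Graz", "Porto"],
    "ORGANISATION": ["Acme Corp", "Northwind Ltd"],
}


def transform(tokens, labels, strategy="placeholder", rng=None):
    """De-identify a token sequence, given one entity label per token.

    "O" marks a non-sensitive token. Consecutive tokens with the same
    non-"O" label are treated as a single entity (a simplification: two
    adjacent entities of the same type would be merged).
    """
    rng = rng or random.Random()
    output = []
    previous = "O"
    for token, label in zip(tokens, labels):
        if label == "O":
            output.append(token)
        elif label != previous:
            # First token of a sensitive span: emit one replacement for it.
            if strategy == "placeholder":
                output.append(f"<{label}>")
            elif strategy == "same_type":
                output.append(rng.choice(REPLACEMENTS[label]))
            else:
                raise ValueError(f"unknown strategy: {strategy}")
        # Remaining tokens of the same span are dropped.
        previous = label
    return " ".join(output)


tokens = ["Call", "Maria", "Schmidt", "in", "Berlin"]
labels = ["O", "PERSON", "PERSON", "O", "LOCATION"]
print(transform(tokens, labels))  # Call <PERSON> in <LOCATION>
print(transform(tokens, labels, strategy="same_type"))  # random names, varies per run
```

The placeholder strategy removes the most information, while the same-type strategy keeps the sentence natural-looking, which may matter when the text is later used as training data.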

Using these open-source tools, with privacy at their core, we are able to better align with the core European principles while providing a means to localise voice-enabled technology. On top of that, the COMPRISE Cloud Platform provides the means to annotate and manage the anonymised data collected and to train models from it. The COMPRISE Weakly Supervised STT and Weakly Supervised NLU tools help automate some of the annotation, and train STT or NLU models by learning the difference between the manual and automatic labels. This lowers both the cost and the potential risk of data breaches by reducing the amount of data that needs human intervention.

COMPRISE has contributed to the scientific community through scientific publications and by providing an open-source platform and a software development kit that can continue to be developed as a whole, and also as individual tools. Furthermore, the above-mentioned Voice Transformer and Text Transformer can be found within the European Language Grid (ELG) platform [L2]. We believe that the tools created provide a means to be accountable for the privacy of users of voice-enabled devices, and help prevent a new digital gap from arising around our voice, which is unique and natural to each of us.

Links:

[L1] https://www.compriseh2020.eu

[L2] https://live.european-language-grid.eu/

References:

[1] B. M. L. Srivastava, et al.: “Evaluating Voice Conversion-based Privacy Protection against Informed Attackers”, ICASSP 2020, IEEE Signal Processing Society, hal-02355115v2.

[2] B. M. L. Srivastava, et al.: “Design Choices for X-vector Based Speaker Anonymization”, INTERSPEECH 2020, ISCA, hal-02610447v2.

[3] D. Adelani, et al.: “Privacy guarantees for de-identifying text transformations”, INTERSPEECH 2020, ISCA, hal-02907939.

Please contact:

Emmanuel Vincent

Inria Nancy – Grand Est, France