by Dimitrios Kosmopoulos (University of Patras), Evanthia Papadopoulou (Archaeological Museum of Thessaloniki), Antonis Argyros (FORTH-ICS)

The SignGuide project has developed an interactive, mobile museum guide for deaf and hard-of-hearing visitors to promote their inclusion. Using AI methods, the system understands visitors’ questions posed in sign language and provides additional content, also in sign language. It has been deployed in the Archaeological Museum of Thessaloniki.

SignGuide: Making Museums Accessible for Deaf and Hard-of-Hearing Visitors

Museums are designed to educate, inspire, and preserve cultural heritage, yet they often remain inaccessible to deaf and hard-of-hearing (DHH) visitors. Traditional museum experiences rely heavily on text-based descriptions and audio guides, which pose challenges for those who communicate primarily in sign language rather than written language. Research has shown that many deaf individuals struggle with written content because sign language has a different grammatical structure from spoken languages, making direct translations inadequate for full comprehension [1]. As a result, DHH visitors often miss out on the depth of information available to hearing visitors, leading to an unequal experience.

The SignGuide project addresses these accessibility barriers by providing an interactive guidance system through a mobile application. Unlike conventional accessibility solutions that rely on captions or text summaries, SignGuide prioritizes sign language as the primary mode of communication, offering sign language videos and avatars to enhance engagement with museum exhibitions. This approach ensures that DHH visitors can explore exhibits autonomously, ask questions in sign language, and receive responses in their preferred communication mode.

A Real-World Use Case

Maria, a deaf visitor, enters the Archaeological Museum of Thessaloniki and notices a digital display announcing the availability of sign language guidance. She downloads the SignGuide app and is welcomed by a sign language introduction explaining how to use the app.

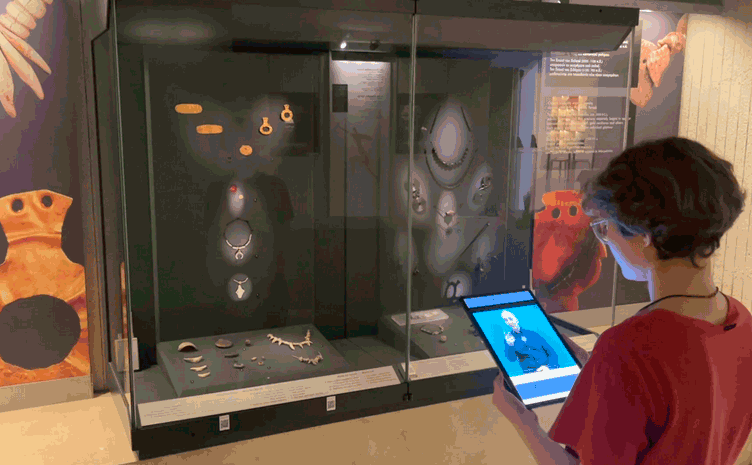

As she walks to the first exhibit, she notices a SignGuide logo on the display. She opens the app, points her camera at the exhibit, and within seconds, the app recognizes the artifact using augmented reality and displays a sign language video explaining its historical background [2].

Curious to learn more, Maria taps on the “Ask a Question” button. She selects a pre-recorded question in sign language asking about the exhibit’s cultural significance. The app instantly retrieves a video response in sign language, providing her with more detailed insights (see Figure 1). Later, Maria visits another exhibit and chooses to record her own question using the front camera. The system processes her query, finds a matching pre-recorded answer, and delivers it in sign language. For the first time, Maria feels truly engaged in a museum experience, enjoying an equal level of access to information as hearing visitors.

Figure 1: As soon as the app visually recognizes an exhibit, it can respond to user queries in sign language and retrieve related content in the same format.

The consortium

SignGuide was funded through the Research Create Innovate action in Greece (2020-2023), T2EDK-00982. The consortium comprises the University of Patras (coordinator; sign language understanding and sign language linguistics), the Foundation for Research and Technology - Hellas (human motion tracking, avatars), Bioassist SA (system integration) and the Archaeological Museum of Thessaloniki, which hosts the exhibition. The application is available on the Apple App Store and on Google Play [L1].

Interactive Q&A in Sign Language: One of the most innovative features of SignGuide is its interactive Q&A system, which allows visitors to ask questions in sign language and receive responses in the same format. Visitors can either:

- Select from a list of pre-recorded questions in sign language;

- Record their own question using the phone’s front-facing camera.

Once the question is recorded, the app processes it using AI-powered sign language recognition. The system tracks hand movements using a skeleton-tracking algorithm, extracts hand pose sequences and then visual summaries [3], and feeds them into a Transformer-based neural network. This deep learning model matches the recorded sign language query to a set of known questions. After confirming the correct translation, the app retrieves a pre-recorded video response or generates an answer using a sign language avatar. Currently, the system supports over 300 possible questions for the most popular exhibits in the Archaeological Museum of Thessaloniki.
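The matching step can be illustrated with a minimal sketch. It assumes that the recognition model outputs a fixed-length embedding for each recorded query and that matching is done by cosine similarity against the embeddings of the known questions, with a rejection threshold for unrecognized queries. These are illustrative assumptions, not the project's documented method; the names `KnownQuestion` and `match_question`, the threshold value, the toy vectors and the file paths are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class KnownQuestion:
    question_id: str
    embedding: Sequence[float]  # hypothetical encoder output for the reference recording
    answer_video: str           # hypothetical path to the pre-recorded answer


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def match_question(
    query_embedding: Sequence[float],
    known: Sequence[KnownQuestion],
    threshold: float = 0.8,  # assumed value; would need tuning on real data
) -> Optional[KnownQuestion]:
    """Return the most similar known question, or None if nothing is similar enough."""
    best: Optional[KnownQuestion] = None
    best_score = -1.0
    for question in known:
        score = cosine_similarity(query_embedding, question.embedding)
        if score > best_score:
            best, best_score = question, score
    return best if best is not None and best_score >= threshold else None


# Toy 3-dimensional embeddings, for illustration only.
KNOWN = [
    KnownQuestion("cultural_significance", (0.9, 0.1, 0.0), "answers/cultural_significance.mp4"),
    KnownQuestion("date_of_origin", (0.1, 0.9, 0.2), "answers/date_of_origin.mp4"),
]

match = match_question((0.8, 0.2, 0.1), KNOWN)
if match is not None:
    print(match.question_id, match.answer_video)
```

Returning `None` for low-similarity queries lets the app ask the visitor to sign the question again or to pick one from the pre-recorded list, instead of playing an unrelated answer.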

Impact and Reception: SignGuide was designed after careful consideration of the needs of the DHH community, which has received it warmly. Many schools for the Deaf have visited the Archaeological Museum of Thessaloniki specifically because of this accessibility feature. For many students, it was their first fully accessible museum experience, highlighting the importance of inclusive cultural engagement. By bridging the communication gap between museums and DHH visitors, SignGuide not only enhances accessibility but also promotes a deeper appreciation of cultural heritage among underserved communities.

Future Plans: We plan to significantly upgrade the provided services by: (a) leveraging large language models and avatars to dynamically synthesize the retrieved content in sign language, (b) keeping up with advances in video and sequential data processing, (c) providing information before as well as after the visit, including educational content and games, and (d) supporting more sign languages (currently, Greek Sign Language is supported).

Link:

[L1] https://www.signguide.gr

References:

[1] M. Nikolaraizi, I. Vekiri, and S. Easterbrooks, “Investigating deaf students’ use of visual multimedia resources in reading comprehension,” Am. Ann. Deaf, vol. 158, no. 4, pp. 363–377, 2013.

[2] D. Koulouris, et al., “Utilizing AR and hybrid cloud-edge platforms for improving accessibility in exhibition areas,” in Proc. IFIP Int. Conf. Artif. Intell. Appl. Innov., Springer, 2023, pp. 123–134.

[3] E. G. Sartinas, E. Z. Psarakis, and D. A. Kosmopoulos, “A 3D wrist motion-based sign language video summarization technique,” Pattern Recognit. Lett., vol. 195, pp. 45–53, 2025.

Please contact:

Dimitrios Kosmopoulos

University of Patras, Greece