by Dimitrios Koukopoulos (University of Patras, Greece), Christos A. Fidas (University of Patras, Greece), Marios Belk (Cognitive UX GmbH, Germany)

An innovative Mixed Reality application, partially funded by the EU Erasmus+ CREAMS project, combines immersive technologies and large language models to transform the way we experience and interact with virtual art exhibitions.

In recent years, the cultural heritage (CH) domain has shown growing interest in AI-driven tools that enrich user engagement and provide personalised user experiences. Museums, art galleries, and other cultural institutions increasingly seek to attract diverse audiences and to offer deeper learning opportunities through digital innovation. However, research into the use of Large Language Models (LLMs) in Mixed Reality (MR) cultural settings remains relatively underexplored.

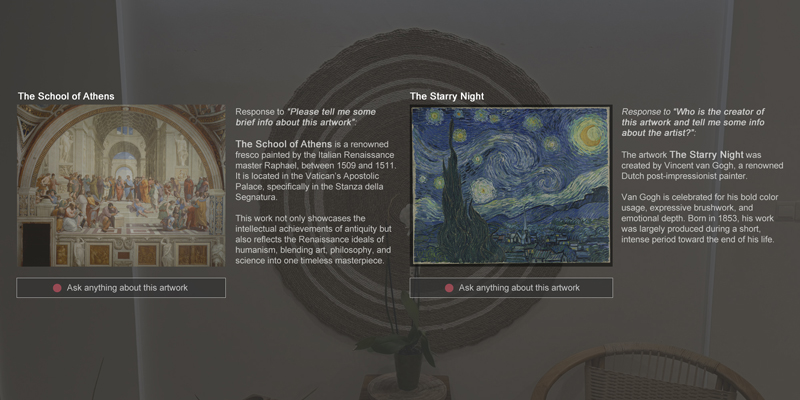

CulturAI addresses this gap with an MR application that displays holographic artworks and integrates a conversational cognitive assistant. Users can ask questions directly about an artwork’s creator, its historical context, or the stylistic details that make it unique. The cognitive assistant answers with LLM-generated information, combining immersive storytelling with interactive learning. Figure 1 shows how digital artworks are displayed alongside the assistant’s textual responses to user queries. This dialogue-based approach opens up new possibilities for visitor engagement, moving beyond simply viewing an exhibition to actively conversing with an AI-driven virtual guide.

To achieve this, CulturAI employs a client-server architecture. On the client side, the MR application, built for the Microsoft HoloLens, visualises digital artworks within virtual exhibitions and handles the user’s voice queries. For instance, the user simply gazes at a holographic painting and asks “Who was the artist behind this artwork?” or “Which historical event inspired this painting?” The user interface is designed to be intuitive and unobtrusive, so that users can focus on the artwork rather than on the technology and device controls. On the server side, a Python-based web service hosts the digital artwork content, processes the voice recordings, and uses an LLM to generate responses in near real time. These responses are transmitted back to the MR application and displayed as holographic text, providing context-aware insights into artists, historical periods, and stylistic nuances. As a result, visitors can learn about an artist’s background, cultural influences, or the story behind an artwork’s creation without interrupting their immersive experience.

Figure 1: CulturAI application displaying digital artworks along with textual responses provided by the cognitive assistant in response to user queries. Left image depicts “The School of Athens” by Raffaello Sanzio da Urbino (public domain via Wikimedia Commons); Right image depicts “The Starry Night” by Vincent van Gogh (public domain via Wikimedia Commons).
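To make the server-side flow described above more concrete, the following Python sketch shows one way a single request could be handled: the client sends the identifier of the artwork the user is gazing at together with the voice recording, the service transcribes the question, grounds it in the artwork’s metadata, and returns the LLM’s answer as JSON for display on the headset. This is a hypothetical illustration, not CulturAI’s actual implementation: the catalogue, `build_prompt`, `handle_query`, and the injected `transcribe` and `llm` callables are assumptions, and no specific speech-recognition or LLM service is implied.

```python
import json
from dataclasses import dataclass
from typing import Callable


# Hypothetical artwork record; CulturAI's real data model is not described.
@dataclass(frozen=True)
class Artwork:
    artwork_id: str
    title: str
    artist: str


# Hypothetical in-memory catalogue standing in for the hosted artwork content.
CATALOGUE = {
    "starry-night": Artwork("starry-night", "The Starry Night", "Vincent van Gogh"),
    "school-of-athens": Artwork(
        "school-of-athens", "The School of Athens", "Raffaello Sanzio da Urbino"
    ),
}


def build_prompt(artwork: Artwork, question: str) -> str:
    """Ground the visitor's question in the artwork currently being viewed."""
    return (
        "You are a museum guide. Answer concisely for display as holographic text.\n"
        f"Artwork: {artwork.title} by {artwork.artist}.\n"
        f"Visitor question: {question}"
    )


def handle_query(
    artwork_id: str,
    audio: bytes,
    transcribe: Callable[[bytes], str],  # assumed speech-to-text component
    llm: Callable[[str], str],  # assumed LLM text-generation function
) -> str:
    """Return a JSON payload for the MR client with the question and the answer."""
    artwork = CATALOGUE.get(artwork_id)
    if artwork is None:
        return json.dumps({"error": f"unknown artwork: {artwork_id}"})
    question = transcribe(audio)
    answer = llm(build_prompt(artwork, question))
    return json.dumps({"artwork_id": artwork_id, "question": question, "answer": answer})


if __name__ == "__main__":
    # Stubs in place of real speech recognition and LLM services.
    print(handle_query("starry-night", b"Who painted this?",
                       lambda audio: audio.decode("utf-8"),
                       lambda prompt: "Vincent van Gogh painted it in 1889."))
```

Passing the speech-to-text and LLM components in as plain callables keeps the sketch independent of any particular provider and makes it easy to exercise with stubs, as in the `__main__` block.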

A preliminary evaluation study was conducted with 39 volunteers, who interacted with virtual art exhibitions in an ecologically valid mixed reality setting. The study assessed user satisfaction, overall experience, and the perceived trustworthiness of an AI-driven virtual guide. Participants were divided into groups to compare a conventional MR tour (i.e., static text descriptions of artworks) with the interactive, voice-based LLM approach. The results provide initial evidence that integrating LLMs into MR applications has a positive effect on user experience and perceived trust. Many participants noted that the cognitive assistant’s responses deepened their engagement, as they could pose spontaneous follow-up questions and receive compelling, context-specific explanations.

This research is the outcome of a close collaboration between the University of Patras (Greece) and Cognitive UX GmbH (Germany), combining expertise in Mixed Reality development, AI, and user experience design. The research was partially funded by the Erasmus+ CREAMS project [L1] (Project ID: KA220-HED-E06518FA) under the call KA220-HED: Cooperation partnerships in higher education, through the Greek State Scholarships Foundation (IKY).

This research was published as a demo paper [1] at the 2024 ACM Conference on User Modeling, Adaptation and Personalization (ACM UMAP 2024), where it received the Best Demo Award. This recognition underscores the project’s innovation and its potential impact on interactive experiences in extended reality contexts.

Looking ahead, future work includes integrating more artwork collections and tailoring interactive features for museums and heritage sites worldwide, making CulturAI a more comprehensive platform. On the technical side, the project team aims to improve speech recognition accuracy and to explore advanced user modelling in order to deliver personalised and adaptive interactions. Another research direction is to investigate the system’s effectiveness in enhancing user engagement and retention over extended periods, which could inform museums about how best to structure digital tours for different demographics. The team also plans to refine the proposed LLM-driven approach to create dynamic narratives that adapt to individual user models, taking into account the unique characteristics, preferences, and interests of end users. For instance, a history enthusiast might receive more detailed political context, whereas an art student might be guided through stylistic analysis and brushwork techniques.
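The user-model-driven adaptation envisaged above could, for example, be approached by adding interest-specific instructions to the prompt before it is sent to the LLM. The Python sketch below is purely illustrative and assumes a simple interest-based user model: `UserModel`, `FOCUS_BY_INTEREST`, `personalise_prompt`, and the interest labels are hypothetical names, not part of the published system.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from user-model interests to narrative emphasis.
FOCUS_BY_INTEREST = {
    "history": "Emphasise the political and historical context of the work.",
    "art_practice": "Emphasise stylistic analysis, composition and brushwork techniques.",
}
DEFAULT_FOCUS = "Give a brief, accessible overview of the work and its creator."


# Hypothetical user model; CulturAI's actual user modelling is future work.
@dataclass
class UserModel:
    user_id: str
    interests: list[str] = field(default_factory=list)
    detail_level: str = "short"  # assumed values: "short" or "detailed"


def personalise_prompt(base_prompt: str, user: UserModel) -> str:
    """Append interest-specific instructions to a base prompt for the LLM."""
    focus = [FOCUS_BY_INTEREST[i] for i in user.interests if i in FOCUS_BY_INTEREST]
    if not focus:
        focus = [DEFAULT_FOCUS]  # fall back when no known interest matches
    length = (
        "Answer in two or three sentences."
        if user.detail_level == "short"
        else "A detailed answer is welcome."
    )
    return "\n".join([base_prompt, *focus, length])


if __name__ == "__main__":
    question = "Visitor question: Which historical event inspired this painting?"
    print(personalise_prompt(question, UserModel("visitor-1", ["history"])))
    print(personalise_prompt(question, UserModel("visitor-2", ["art_practice"], "detailed")))
```

Keeping the personalisation as a separate prompt-building step means the same LLM call can serve every visitor, with only the instructions varying per user model.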

By bridging Mixed Reality displays, real-time LLM-driven dialogue, and cultural storytelling, CulturAI demonstrates how technological innovation can expand access to the arts and transform learning experiences in museum and heritage contexts.

Links:

[L1] https://creams-project.eu

Reference:

[1] N. Constantinides, et al., “CulturAI: Exploring Mixed Reality Art Exhibitions with Large Language Models for Personalized Immersive Experiences”, in Adjunct Proc. of the 32nd ACM Conf. on User Modeling, Adaptation and Personalization (UMAP Adjunct ’24), ACM, pp. 102–105, 2024. https://doi.org/10.1145/3631700.3664874

Please contact:

Dimitrios Koukopoulos

University of Patras, Greece

Christos A. Fidas

University of Patras, Greece