by János Hollósi, Rudolf Krecht, and Áron Ballagi (Széchenyi István University)

Deploying traffic cones can be made both smarter and safer. This article presents an autonomous robot platform that combines RTK-GNSS positioning, AI-based machine vision, and a robotic manipulator to automate the placement and retrieval of traffic cones. Designed for efficiency, safety, and centimeter-level accuracy, the platform takes over a task that is both monotonous and dangerous for human workers.

Autonomous systems have repeatedly proven effective at tasks that are dirty, dull, and dangerous, across many sectors. The deployment of temporary road signs and other traffic management devices embodies all three characteristics. Setting up traffic cones is a repetitive chore, often carried out in challenging weather and dangerously close to high-speed traffic. Moreover, certain specialized activities, such as setting up racetracks or vehicle testing tracks, require traffic cones to be placed with centimeter-level accuracy.

To address both the monotony of traffic cone deployment and collection and its precision requirements, the Vehicle Industry Research Center [L1] and the Autonomous and Intelligent Robotics Laboratory [L2] of Széchenyi István University have developed an autonomous mobile robot platform designed specifically for this application. The platform combines a skid-steer robot base with an integrated robotic manipulator. Positioning relies on a Real-Time Kinematic Global Navigation Satellite System (GNSS-RTK). For collection, traffic cones are detected using a combination of cameras and a custom AI-based machine vision solution. The platform is also equipped with a safety laser scanner for obstacle detection.
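On a real platform, a safety laser scanner is a certified device that evaluates its protective fields internally, so the following is only an illustration of the underlying idea, not of the robot's safety function. This minimal sketch converts a planar scan into points and checks whether any point falls inside a rectangular protective field directly ahead of the scanner. The parameter names `angle_min` and `angle_increment` follow the ROS `sensor_msgs/LaserScan` convention; the field dimensions and function names are hypothetical.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment):
    """Convert a planar laser scan (polar) to (x, y) points in the scanner frame.

    x points forward, y to the left; invalid readings (inf/nan) are skipped.
    """
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r):
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


def protective_field_violated(points, field_length=1.5, field_half_width=0.6):
    """Return True if any point lies in a rectangle directly ahead of the scanner.

    field_length and field_half_width (metres) are hypothetical example values.
    """
    return any(0.0 < x <= field_length and abs(y) <= field_half_width
               for x, y in points)


# Usage: three beams at -30, 0 and +30 degrees; the last reading is invalid
ranges = [2.0, 1.2, float("inf")]
pts = scan_to_points(ranges, angle_min=-math.pi / 6, angle_increment=math.pi / 6)
print(protective_field_violated(pts))  # True: the middle beam hits at 1.2 m
```

A rectangular field is used here because it matches the footprint of a vehicle driving straight ahead; certified scanners typically switch between several predefined fields depending on speed and direction.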

The platform is operated through a custom web-based user interface. Users mark the exact cone positions on a digital map, and the robot then places the cones at the corresponding GNSS coordinates. Cone collection is automated in a similar way, using both GNSS data and input from the machine vision system, which streamlines the process and improves safety and efficiency on the road.
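To drive to positions marked on a map, the GNSS targets first have to be expressed in a local metric frame. The sketch below assumes an equirectangular (local flat-earth) approximation around a reference point, which is adequate over the few hundred metres of a test track, and a simple greedy nearest-neighbour ordering of the placement points. Both choices, and the function names, are hypothetical illustrations rather than the platform's actual implementation.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS-84 equatorial radius (m)


def to_local_en(lat, lon, ref_lat, ref_lon):
    """Approximate east/north offsets in metres of (lat, lon) from a reference.

    Equirectangular (local flat-earth) approximation; only suitable for
    short distances, such as within a test track.
    """
    d_lat = math.radians(lat - ref_lat)
    d_lon = math.radians(lon - ref_lon)
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(ref_lat))
    north = EARTH_RADIUS_M * d_lat
    return east, north


def order_targets(start_en, targets_en):
    """Greedy nearest-neighbour ordering of cone placement targets (hypothetical)."""
    remaining = list(targets_en)
    route, current = [], start_en
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    return route


# Arbitrary example: reference point and three cone positions marked on a map
ref = (47.0, 17.0)
cones = [(47.0002, 17.0), (47.0001, 17.0), (47.0001, 17.0001)]
targets = [to_local_en(lat, lon, *ref) for lat, lon in cones]
for east, north in order_targets((0.0, 0.0), targets):
    print(f"{east:6.2f} m E, {north:6.2f} m N")
```

Greedy ordering is not optimal in general, but for cones laid out along a line or a track boundary it usually yields a sensible driving sequence at negligible cost.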

Manipulating traffic cones precisely at GNSS-specified locations requires a robust, heavy-duty mobile robot platform (Figure 1). The platform must carry approximately 30 traffic cones, weighing around 150 kilograms in total, together with all the equipment needed for obstacle avoidance and for the automated placement and retrieval of the cones. Because load capacity and stability are paramount, and controllable low-speed maneuvering is also required, we chose to build a four-wheel-drive, skid-steer robot platform with air-inflated rubber tires. The platform is powered by onboard batteries [1].
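A skid-steer base is commanded much like a differential drive: a desired forward speed and yaw rate are mapped to left and right wheel speeds, with both wheels on one side turning at the same speed. The sketch below uses the idealized no-slip model; real skid-steer vehicles slip laterally when turning, which is why an effective track width larger than the geometric one is often used in practice. The track width, wheel radius, and speed limit are hypothetical values, not the platform's specifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SkidSteerParams:
    track_width_m: float = 0.7          # hypothetical effective track width
    wheel_radius_m: float = 0.2         # hypothetical wheel radius
    max_wheel_speed_rad_s: float = 5.0  # hypothetical motor limit


def wheel_speeds(v, omega, p=SkidSteerParams()):
    """Map body velocity (v in m/s, omega in rad/s) to left/right wheel speeds (rad/s).

    Idealized no-slip model. If either side exceeds the limit, both sides are
    scaled down by the same factor so the commanded turn radius is preserved.
    """
    v_left = v - omega * p.track_width_m / 2.0
    v_right = v + omega * p.track_width_m / 2.0
    w_left = v_left / p.wheel_radius_m
    w_right = v_right / p.wheel_radius_m
    peak = max(abs(w_left), abs(w_right))
    if peak > p.max_wheel_speed_rad_s:
        scale = p.max_wheel_speed_rad_s / peak
        w_left, w_right = w_left * scale, w_right * scale
    return w_left, w_right


print(wheel_speeds(0.5, 0.0))  # (2.5, 2.5): straight ahead at 0.5 m/s
print(wheel_speeds(2.0, 1.0))  # saturated: right side capped, ratio kept
```

Scaling both sides together, rather than clipping each independently, keeps the robot on the intended arc, which matters when following a path at low speed near placed cones.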

Figure 1: Our robot platform under development.

The platform's onboard systems are organized into six functional groups: power management, propulsion, manipulation, machine vision, low-level control, and high-level control. The low-level control system is modular and receives its inputs from a high-level computing system consisting of two computers running ROS 2 (Robot Operating System 2). One computer is dedicated to machine vision tasks, while the other acts as the central controller, integrating all systems of the robot platform. Traffic cones are handled by a collaborative robotic arm fitted with a custom electric gripper. A collaborative arm was chosen for two reasons: the platform may later be used for other tasks, which calls for a multipurpose manipulator, and it must operate safely near human workers, where collaborative safety functions offer the safest solution for mobile applications.
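How the central controller sequences a collection task is not detailed here, so the following is purely a hypothetical illustration of that orchestration role: a small state machine for collecting one cone. The states, event names, and transitions are assumptions; in a ROS 2 system each event would typically arrive as a message or action result from the corresponding subsystem.

```python
from enum import Enum, auto


class State(Enum):
    NAVIGATE = auto()  # drive to the GNSS position of the next cone
    SCAN = auto()      # arm-mounted camera searches for the cone top
    GRASP = auto()     # arm aligns above the cone and closes the gripper
    STOW = auto()      # cone is placed in the on-board storage
    DONE = auto()
    STOPPED = auto()   # obstacle reported; waits for an operator (hypothetical)


# (state, event) -> next state; event names are hypothetical
TRANSITIONS = {
    (State.NAVIGATE, "arrived"): State.SCAN,
    (State.SCAN, "cone_detected"): State.GRASP,
    (State.SCAN, "cone_not_found"): State.NAVIGATE,
    (State.GRASP, "grasp_ok"): State.STOW,
    (State.GRASP, "grasp_failed"): State.SCAN,
    (State.STOW, "stowed"): State.DONE,
}


def step(state, event):
    """Return the next state; an obstacle stops the task from any state."""
    if event == "obstacle":
        return State.STOPPED
    # Events that are not valid in the current state leave it unchanged
    return TRANSITIONS.get((state, event), state)


def run(events, state=State.NAVIGATE):
    """Feed a sequence of events and return the list of visited states."""
    history = [state]
    for event in events:
        state = step(state, event)
        history.append(state)
    return history


events = ["arrived", "cone_detected", "grasp_failed",
          "cone_detected", "grasp_ok", "stowed"]
print([s.name for s in run(events)])
# ['NAVIGATE', 'SCAN', 'GRASP', 'SCAN', 'GRASP', 'STOW', 'DONE']
```

Keeping the transitions in a table makes the allowed sequence easy to inspect, and a failed grasp simply returns to scanning instead of aborting the whole task.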

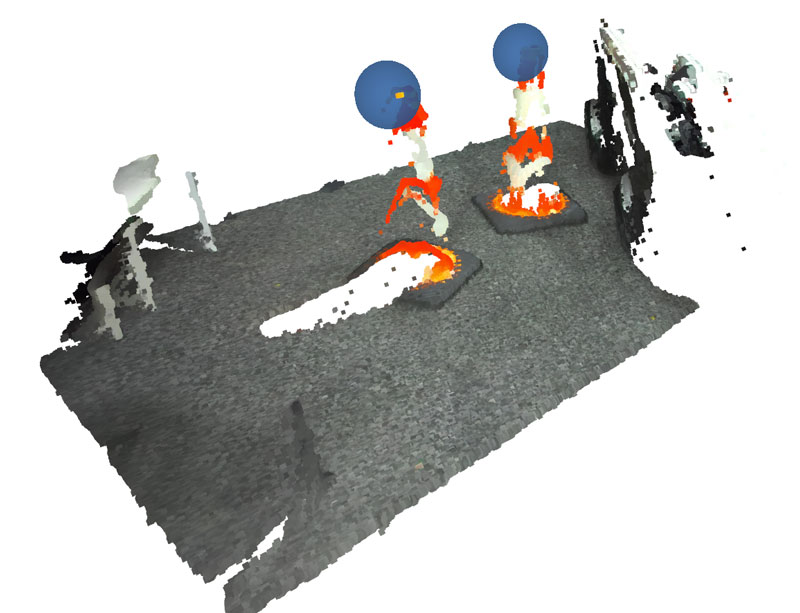

A key element of our mobile robot platform is its AI-based machine vision system. This module extracts information about the robot's surroundings by analyzing data from various sensors, such as cameras and lidars (Figure 2). It combines custom-developed neural networks with established image processing and machine vision methods. These software modules detect objects, segment the environment, and estimate the distance and spatial position of obstacles in front of the robot from the sensor data. Their output serves as input to other components of the robot, for instance the route planning algorithms or the control software of the robot arm mounted on the platform.

Figure 2: Visualization of the top of the detected cones in the space seen by the stereo camera.
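Locating a detected cone top in the space seen by a stereo camera rests on triangulation: for a rectified stereo pair, depth is Z = fx * B / d, where fx is the focal length in pixels, B the baseline, and d the horizontal disparity; X and Y then follow from the pinhole model. A minimal sketch, assuming rectified images and known intrinsics; the numeric parameters below are hypothetical example values, not the calibration of the camera used on the robot.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StereoIntrinsics:
    fx: float          # focal length in pixels (x)
    fy: float          # focal length in pixels (y)
    cx: float          # principal point (pixels)
    cy: float
    baseline_m: float  # distance between the two camera centres


def triangulate(u, v, disparity_px, cam):
    """Back-project a pixel of the rectified left image to (X, Y, Z) in metres.

    Camera frame: X right, Y down, Z forward along the optical axis.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    z = cam.fx * cam.baseline_m / disparity_px
    x = (u - cam.cx) * z / cam.fx
    y = (v - cam.cy) * z / cam.fy
    return x, y, z


# Hypothetical intrinsics for a 1280x720 image
cam = StereoIntrinsics(fx=700.0, fy=700.0, cx=640.0, cy=360.0, baseline_m=0.12)
# Centre of a detected cone top at pixel (780, 400) with 84 px disparity
print(triangulate(780.0, 400.0, 84.0, cam))
```

Because Z is inversely proportional to d, a fixed disparity error produces a depth error that grows roughly with Z squared, which favors observing cones from close range.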

Our current development focuses on enabling the robot to recognize roadside traffic cones for automated collection (Figure 3). To this end, we have developed an artificial neural network optimized for our target hardware, a Connect Tech Rudi-AGX Embedded System with an NVIDIA® Jetson AGX Xavier™. Our considerable prior experience in developing systems of this kind enabled us to create an effective solution to this problem.

Figure 3: The robot arm, equipped with a stereo camera, scans the robot platform’s environment and positions itself to collect the cone.

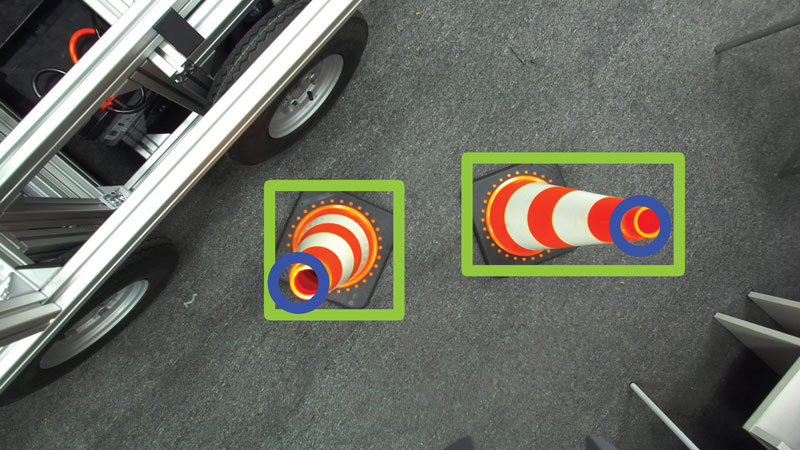

During cone collection, the robot arm is positioned above the cones, so that the camera mounted on the arm provides a top view of them (Figure 4). For the neural network to recognize cones from this viewpoint, we had to build a custom dataset, as no such dataset is currently available. Our dataset contains diverse top-view images of traffic cones in varying environmental conditions, each individually labelled for training AI models. Our neural network was trained on this dataset and is thus able to detect the cones with high efficiency [2].
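With a labelled dataset such as the one described above, detection quality is commonly measured by matching predicted boxes to labelled boxes using an intersection-over-union (IoU) threshold and counting precision and recall. The sketch below shows that standard procedure with greedy, score-ordered matching; the 0.5 threshold and the (x_min, y_min, x_max, y_max) box format are assumptions, and the toy boxes are made up for illustration, not taken from the authors' dataset.

```python
def iou(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, threshold=0.5):
    """Greedy one-to-one matching of predictions (box, score) to labelled boxes.

    Predictions are processed in descending score order; each labelled box
    can be matched at most once.
    """
    unmatched = list(ground_truth)
    tp = 0
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best = max(unmatched, key=lambda g: iou(box, g), default=None)
        if best is not None and iou(box, best) >= threshold:
            unmatched.remove(best)
            tp += 1
    fp = len(predictions) - tp
    fn = len(ground_truth) - tp
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truth else 0.0
    return precision, recall


# Toy example: two labelled cone tops, three predictions (one spurious)
labels = [(10, 10, 50, 50), (100, 100, 140, 140)]
preds = [((12, 12, 50, 52), 0.9), ((101, 99, 139, 141), 0.8),
         ((200, 200, 230, 230), 0.3)]
print(precision_recall(preds, labels))  # (0.6666666666666666, 1.0)
```

Processing predictions in score order ensures that a confident detection claims a labelled cone before a weaker duplicate can, so duplicates are counted as false positives.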

Figure 4: The cones and their tops detected by our AI model.

The research was supported by the European Union within the framework of the National Laboratory for Artificial Intelligence (RRF-2.3.1-21-2022-00004).

Links:

[L1] https://jkk-web.sze.hu/?lang=en

[L2] https://szolgaltatas.sze.hu/en_GB/autonomous-and-intelligent-robotics-laboratory

References:

[1] R. Krecht and Á. Ballagi, “Machine Design of a Robot Platform for Traffic Cone Handling” [“Tesztpálya kiszolgálására alkalmas autonóm robotplatform gépészeti tervezése”], in Conference Proceedings of Mobility and Environment – Future-Shaping Automotive Research [“Mobilitás és környezet – Jövőformáló Járműipari Kutatások”], 2022.

[2] J. Hollósi, N. Markó, and Á. Ballagi, “Position and Orientation Determination of a Traffic Cone Based on Visual Perception” [“Forgalomtechnikai terelő bója vizuális észlelésen alapuló pozíció és orientáció meghatározása”], in Conference Proceedings of Digital Automotive Research at Széchenyi István University [“Digitális Járműipari Kutatások a Széchenyi István Egyetemen – Konferenciakiadvány”], 2021.

Please contact:

Áron Ballagi

Széchenyi István University, Hungary