by Enrico Barbierato and Alice Gatti (Catholic University of the Sacred Heart)

Cultural heritage represents societies' collective memory and identity, encompassing both tangible and intangible assets passed down through generations. As technology advances, Artificial Intelligence (AI) emerges as a powerful tool for preserving, analysing, and making heritage accessible to a wider audience. However, the environmental cost of AI, particularly the high energy demands of large-scale models, raises concerns about sustainability.

The project "Impatti scientifici ed etici delle applicazioni basate sull'Intelligenza Artificiale" (Scientific and Ethical Impacts of AI-Based Applications; linea di intervento D3.2), developed internally across several departments of the Catholic University of the Sacred Heart in Brescia, aimed to analyse the rapid development of information technology and its transformative impact on society, particularly in finance, healthcare, and industry. Initiated in 2019, the project spanned 24 months. It focussed on the role of AI, especially deep learning, in extracting knowledge from large datasets, while addressing interpretability and ethical implications. AI models were evaluated not only for their accuracy but also for their ethical implications. One line of investigation focussed on large language models such as ChatGPT, Google's Gemini, and Meta's LLaMA, which have demonstrated significant success but whose carbon footprint is projected to be unsustainable in the long term.

The increasing energy consumption and environmental impact of AI models have led to a distinction between "Red AI" and "Green AI," which was extensively analysed during the project. Red AI refers to resource-intensive AI models that require enormous computational power, leading to high energy consumption and carbon emissions. In contrast, Green AI aims to optimise efficiency and reduce the environmental footprint by using smaller datasets, more efficient training techniques, or sustainable energy sources.

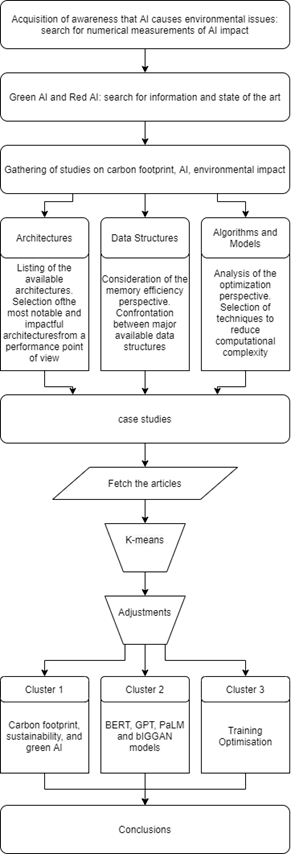

Figure 1 depicts the structure of a part of the project where we delved into a comprehensive, scientific survey [1] of the literature on Red and Green AI, categorising existing research into three clusters: the first study quantifies the environmental cost of Red AI and proposes Green AI as a solution; the second focuses on the carbon footprint of large deep learning models, and the third introduces methods to mitigate the computational cost of AI models. Our research highlights the trade-off between model performance and sustainability, questioning whether AI models can maintain high accuracy while reducing energy consumption. A major concern is that deep learning models, such as GPT and BERT, require massive computational resources, often leading to diminishing returns—where increased complexity does not always translate into significantly better performance. Our study also explores the hardware and algorithmic factors that contribute to Red AI, such as computer architectures, inefficient data structures, and costly training methods. We review various techniques that improve efficiency, including pruning, quantization, and knowledge distillation, which help reduce model size and energy use while preserving accuracy. Additionally, the article presents case studies related to Natural Language Processing (NLP) models, such as BERT, GPT-3, and Google's Bard, emphasising their energy-intensive training requirements.

Figure. 1: Motivation of the study.
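Among the efficiency techniques reviewed in the survey, magnitude pruning is perhaps the simplest to illustrate. The following is a minimal sketch, not the method of any specific paper: the function name `magnitude_prune`, the `sparsity` parameter, and the toy weight values are illustrative assumptions. It zeroes the weights with the smallest absolute values, on the premise that they contribute least to the output.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude.

    Hypothetical helper for illustration; real frameworks prune tensors
    layer by layer and usually fine-tune the model afterwards.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices ordered by absolute value, smallest first
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:k])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


# Toy weight vector (assumed values, not taken from any real model)
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

Zeroed weights only save energy when the storage format or hardware can skip them, which is one reason pruning is typically combined with sparse representations or structured removal of whole units.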

We argue that similar considerations apply to AI used for cultural heritage. Generative AI models such as ChatGPT can analyse, generate, and curate content related to historical artifacts, manuscripts, and artworks. One application is the digital restoration of ancient texts and paintings, where AI-powered image processing algorithms reconstruct damaged portions of manuscripts or art, restoring faded texts and pigments with remarkable accuracy. Moreover, AI-driven NLP models enable the translation and interpretation of ancient scripts, making historical documents accessible to a broader audience. Generative AI further extends these capabilities by reconstructing lost or fragmented cultural artifacts, generating historically accurate depictions of ancient cities, or simulating the voices of long-lost languages.

Digital exhibitions allow global audiences to explore artifacts without physical constraints, fostering accessibility and inclusivity. The British Museum, for instance, offers a virtual tour of its extensive collection, leveraging AI to provide personalised recommendations based on user preferences. The Hereford Map [L1], an ancient cartographic masterpiece, serves as an example of how AI enhances historical cartography. By digitising and analysing such maps, AI models can reconstruct historical geographical landscapes, provide interactive overlays comparing past and present terrains, and even predict the likely locations of lost historical sites based on textual references and spatial analysis. Another important application is the automation of metadata extraction in archival projects such as the Vatican’s digitisation efforts, where deep learning techniques analyse vast collections of historical letters and religious manuscripts, enabling scholars to explore and contextualise these texts with unprecedented efficiency [L2].

The computational cost of training large AI models for cultural heritage applications is a critical consideration. Training a large language model such as GPT-4 reportedly required approximately 25,000 Nvidia A100 GPUs for 90 to 100 days. This scale of operation translates to an estimated 50 gigawatt-hours (GWh) of energy consumption, underscoring the resources such models require. The environmental impact of such training is substantial, as it relies on high-powered computing clusters that often operate continuously for weeks. Compared with AI models in domains such as healthcare or finance, cultural heritage AI models may exhibit a distinctive computational profile: they combine NLP, generative image synthesis, and 3D modelling, and therefore require a broader set of computations across multiple modalities. Consequently, the training complexity of AI applied to cultural heritage is likely to be higher than for traditional NLP tasks but lower than for high-dimensional reinforcement learning models.
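The relationship between the GPU count, the training duration, and the energy figure quoted above can be checked with a back-of-the-envelope calculation. The sketch below rests on assumptions not stated in the text: 400 W per GPU (the rated board power of the A100 SXM variant, used here as a stand-in for actual draw), the midpoint of 95 days, and a helper function `training_energy_gwh` introduced purely for illustration.

```python
def training_energy_gwh(num_gpus, gpu_power_watts, days, overhead_factor=1.0):
    """Estimate energy in GWh as devices x power x time x overhead."""
    hours = days * 24
    watt_hours = num_gpus * gpu_power_watts * hours * overhead_factor
    return watt_hours / 1e9  # 1 GWh = 1e9 Wh


NUM_GPUS = 25_000    # figure quoted in the text
GPU_POWER_W = 400    # assumption: rated board power of an A100 SXM GPU
DAYS = 95            # assumption: midpoint of the 90 to 100 day range

# Energy drawn by the GPUs alone, ignoring everything else in the data centre
gpu_only = training_energy_gwh(NUM_GPUS, GPU_POWER_W, DAYS)
print(f"GPU-only estimate: {gpu_only:.1f} GWh")  # 22.8 GWh

# Overhead factor that would reconcile this with the quoted 50 GWh
implied_overhead = 50 / gpu_only
print(f"Implied overhead factor: {implied_overhead:.2f}")
```

Under these assumptions the GPUs alone account for about 22.8 GWh, so the 50 GWh estimate would correspond to an overhead factor of roughly 2.2 for host servers, networking, cooling, and similar infrastructure. That split is an inference from the arithmetic, not a figure given in the source.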

Despite these energy demands, AI for cultural heritage can be optimised according to the principles of Green AI to ensure sustainable implementation. Techniques such as transfer learning, where pre-trained models are fine-tuned on smaller datasets, can significantly reduce computational requirements. Additionally, leveraging low-energy AI approaches, such as neuromorphic computing and quantised models, could further enhance efficiency. While AI models for cultural heritage do require extensive training, they can be optimised to minimise their carbon footprint while maximising their societal and educational impact.
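To make the idea of a quantised model concrete, the following minimal sketch applies symmetric 8-bit quantisation to a toy weight vector. It is one common scheme among several and is not drawn from the project itself; the function names `quantize_int8` and `dequantize` and the weight values are illustrative assumptions.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantisation: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # size of one quantisation step
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the integer codes."""
    return [v * scale for v in q]


# Toy weight vector (assumed values, not taken from any real model)
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half a quantisation step
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error <= scale / 2 + 1e-12)
```

Each 8-bit code occupies one byte instead of the four bytes of a 32-bit float, so the weights take a quarter of the memory, at the price of a small and bounded rounding error.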

Links:

[L1] https://www.themappamundi.co.uk/

[L2] https://blogs.loc.gov/law/2020/10/collections-and-digitization-projects-of-the-vatican-apostolic-library/

Reference:

[1] E. Barbierato and A. Gatti, "Toward Green AI: A methodological survey of the scientific literature," IEEE Access, vol. 12, pp. 23989-24013, Jan. 31, 2024.

Please contact:

Enrico Barbierato,

Catholic University of the Sacred Heart, Italy