by Peter Kieseberg, Simon Tjoa (St. Pölten UAS) and Andreas Holzinger (University of Natural Resources and Life Sciences Vienna)

Artificial intelligence (AI), and especially machine learning, offers a plethora of novel and interesting applications and will permeate our daily lives. This also means that many developers will use AI without a deeper knowledge of the security caveats that might arise. In this article, we present a practical guide for the procurement of secure AI.

Data-driven applications are becoming increasingly important and are permeating more and more parts of our daily lives. In the coming years, AI-based systems will become ubiquitous and will be used in everyday applications [1]. However, in addition to all the opportunities they create, these systems pose several security-related problems, particularly a lack of transparency, including the so-called explainability problem. In critical infrastructures in particular, this problem, which is caused by emergence, can create major security gaps.

The AI Act (“Proposal for a Regulation laying down harmonized rules on Artificial Intelligence”) [2] therefore embodies the future strategy of the European Union with respect to securing intelligent systems, both in terms of the use of AI in a wide range of applications and with a special focus on applications in critical domains. However, for many popular algorithms, it is not clear how the transparency and security requirements called for in the AI Act can even be guaranteed. Furthermore, defining the boundaries of what exactly constitutes AI is non-trivial. The current draft version of the AI Act uses a very broad definition, which in itself causes several problems, as most software would fall under it. Still, even for methods that indisputably belong to the area of AI, several issues arise, as testing them for security vulnerabilities is much more complex than for “classical” algorithms, even if all important parts like training data, evaluation data, algorithms and models are available [3].

However, such full access to training data, algorithms and models will often not be available in the future: pre-trained models, model training as a service, and complete API-driven black box solutions will be the means of choice for many developers to use low-threshold AI, sometimes even unknowingly, without realising that AI is inside. In this context, not only IT security becomes a problem, but also prejudices embedded (often erroneously) in the data, the so-called “bias”. Therefore, these topics are also becoming increasingly important in procurement, especially since many suppliers currently offer AI systems for critical areas without incorporating security considerations into their products.

Using an exploratory scenario analysis of the important driving factors in the development, and especially the use, of AI-based systems, we identified a set of open research questions regarding security and security testing. These questions are not limited to current technologies and applications, but include concerns arising from foreseeable developments. As development in the AI sector is currently very fast-paced, these issues need to be taken into consideration for ongoing procurements. In addition, we examined classical approaches in the field of security testing, especially penetration testing, and the strategies and techniques used, such as fuzz testing. This examination revealed a set of very distinctive issues that arise when these approaches are applied to many state-of-the-art algorithms, especially from the field of reinforcement learning, and that require additional considerations.

Based on these gaps and possible mitigation strategies, we developed a procurement guide for secure AI. The aim of this guide is purely to triage the products on offer: the guide can decide neither whether a system is safe, nor whether the use of AI is even the right strategy for a problem. It does, however, provide a set of questions that make it possible to determine (i) whether a product is based on fundamental security considerations, (ii) how essential issues such as control over data and models, patching, etc. are handled, and (iii) whether a suitable contact person is available to answer such questions in a meaningful and correct manner. In this way, the procurement process in the initial selection phase, but also in a later evaluation phase, can be made much more streamlined and efficient, and unsuitable systems and/or contact persons can be eliminated from the selection process at an early stage.

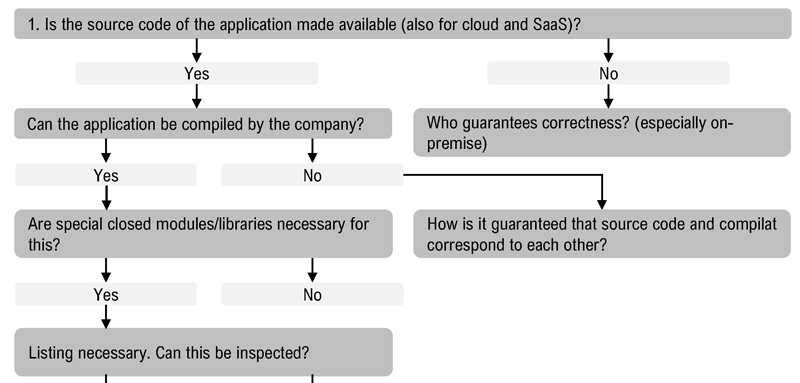

The guide is structured into so-called “aspects”, each of which focuses on a specific field that may or may not be relevant for a given application and is related to a set of indicators. These aspects include issues like privacy, audit and control, or control over source code. For each aspect, a set of questions with sub-questions is provided that covers important security and control-related topics and can be used during an interview or when designing a tender. Figure 1 shows part of such a question block focusing on source code availability and/or reproducibility of the compiled program. Other aspects focus especially on control over data and models. The aspects contain a small amount of redundancy for better and simpler use, without introducing large overheads in the preparation phase for an interview.

Figure 1: Example questions regarding source code availability.
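
To make this structure more tangible, the following Python sketch models aspects, indicators, questions and sub-questions as plain data classes and selects the aspects relevant to a given application. It is only an illustration under stated assumptions: the class and function names, the indicator labels and the question texts are all hypothetical and are not taken from the guide, and the rule that an aspect is relevant as soon as it shares one indicator with the application is our simplification.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Question:
    """One question of an aspect, with optional sub-questions."""
    text: str
    sub_questions: list[str] = field(default_factory=list)


@dataclass
class Aspect:
    """A thematic block of the guide, tied to a set of indicators."""
    name: str
    indicators: set[str]
    questions: list[Question] = field(default_factory=list)


def relevant_aspects(aspects: list[Aspect], application: set[str]) -> list[Aspect]:
    """Keep aspects sharing at least one indicator with the application.

    Assumption: a single shared indicator is enough for relevance.
    """
    return [a for a in aspects if a.indicators & application]


def interview_checklist(aspects: list[Aspect]) -> list[str]:
    """Flatten the selected aspects into numbered lines for an interview."""
    lines: list[str] = []
    for aspect in aspects:
        lines.append(aspect.name)
        for i, question in enumerate(aspect.questions, start=1):
            lines.append(f"  {i}. {question.text}")
            for j, sub in enumerate(question.sub_questions):
                lines.append(f"     {i}{chr(ord('a') + j)}. {sub}")
    return lines


# Hypothetical content: aspect names follow the article, but the indicator
# labels and question texts are invented placeholders, not quotes from the guide.
GUIDE = [
    Aspect(
        name="Control over source code",
        indicators={"vendor_supplies_binaries"},
        questions=[
            Question(
                "Is the source code available to the customer?",
                ["Can the delivered program be rebuilt from that source?"],
            ),
        ],
    ),
    Aspect(
        name="Privacy",
        indicators={"personal_data"},
        questions=[Question("Which personal data does the system process?")],
    ),
]

if __name__ == "__main__":
    selected = relevant_aspects(GUIDE, {"vendor_supplies_binaries"})
    print("\n".join(interview_checklist(selected)))
```

Keeping the questions as data rather than as free text would make it straightforward to reuse the same catalogue for both an interview sheet and a tender document, although the guide itself does not prescribe any particular representation.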

The guide is available for download free of charge and without financial interest [L1]. We are currently working on a new version that incorporates a lot of additional input that has become important due to the increasing capabilities of chatbots and other new applications, as well as regulatory developments. As the AI Act is still in draft form, we expect several changes to the sections on regulation in the future.

Link:

[L1] www.secureai.info

References:

[1] P. Kieseberg, et al., “Security considerations for the procurement and acquisition of artificial intelligence (AI) systems”, in 2022 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), pp. 1–7, IEEE, 2022.

[2] European Commission, “Laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain union legislative acts”, Eur Comm, 106, pp. 1–108, 2021.

[3] A. Holzinger, et al., “Digital transformation for sustainable development goals (SDGs) - a security, safety and privacy perspective on AI”, in International Cross-Domain Conference for Machine Learning and Knowledge Extraction, pp. 1–20, Springer, 2021.

Please contact:

Peter Kieseberg, St. Pölten University of Applied Sciences, Austria