by Joan Baixauli, Mickael Stefas and Roderick McCall (Luxembourg Institute of Science and Technology)

Is it time to stop thinking about augmented reality (AR) or virtual reality (VR) in isolation? Should we explore how to connect different realities to allow seamless real-time experiences for work and pleasure?

User experiences in the metaverse are often viewed as taking place in one environment at a time, for example virtual or augmented reality, both of which form part of the reality-virtuality continuum [1]. Increasingly, however, users may want to inhabit and interact across different realities, perhaps even at the same time. For example, in a game one user may interact in an immersive VR world together with a player at the real location using AR. During this experience they may be able to solve problems, place objects and play together to complete the game. Similar techniques may also be relevant to urban planning, where a remote team using VR can co-design aspects of the urban space in real time with users at the scene using AR. Connecting different realities therefore offers potentially rich experiences for end users in both entertainment and work.

Such experiences also make it possible to explore, at a more abstract level, how people can inhabit multiple realities at the same time and share experiences between them. In the longer term, for example, one question is how to bring an object from reality through to AR and then share it with others in VR. There are also interesting questions about how the nature of physical and social presence may change. At the time of writing there is growing interest in connecting different realities, with a workshop being planned by others at ISMAR 2023 [L1].

Luxemverse

The Luxemverse prototype was developed to explore how we can support real-time collaboration between virtual and AR environments. The objective is for two users to learn more about how certain design decisions affect environmental factors in an urban space. One user wears a VR head-mounted display (HMD), which provides a 3D overview of an urban location, while the other user is at the real location using AR. Both users can place and move objects, for example certain types of trees or street lamps, in real time and see how the environment changes. The AR user is also represented by an avatar in the 3D virtual world.

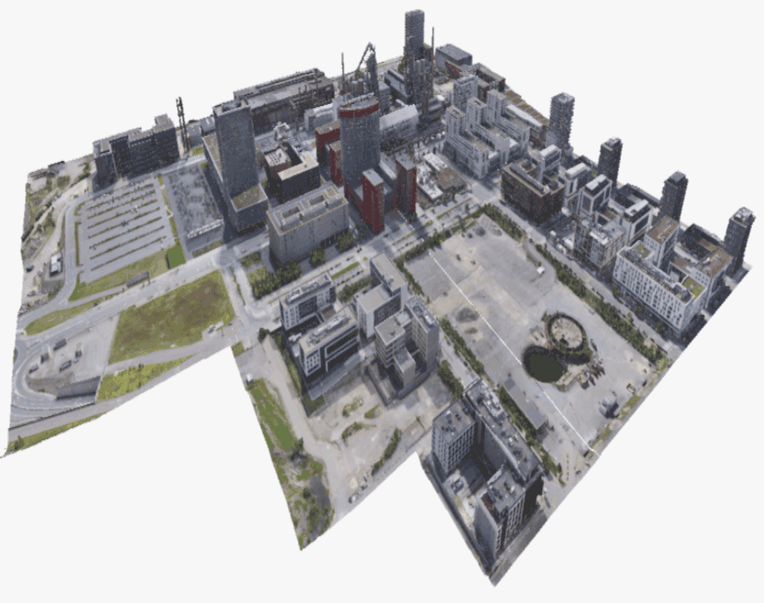

The starting point for Luxemverse is a 3D model (see Figure 1), captured by a drone, of Belval, a location in the south of Luxembourg. This is a relatively new area of formerly industrial land which has been reclaimed and transformed. Belval now has apartments, a shopping mall, research centres and a university. Although environmental improvements are built into Belval, there is strong interest in remaining at the forefront of environmental practices. Luxemverse is an early prototype designed to improve decision-making and awareness of environmental issues, in particular through relatively low-cost approaches.

Figure 1: Belval 3D model.

System Architecture and Implementation

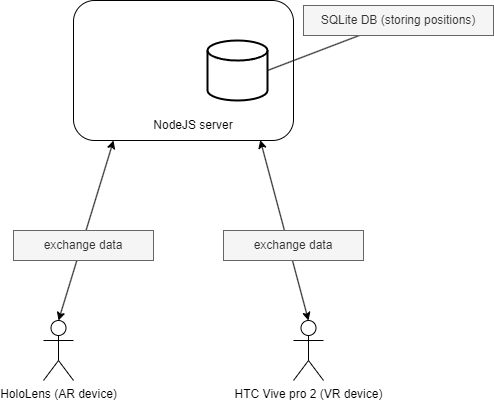

The architecture of Luxemverse is shown in Figure 2. A Node.js server is deployed as middleware and broadcasts messages between the AR and VR systems. These messages contain objects and their locations, shared interactions, and users’ positions. The VR application is built using Unity and the HTC Vive SDK [L2]. Users interact with the 3D Belval model using HTC Vive controllers. Supported interactions include adding and removing scene elements, which are selected from a menu. An information panel shows the user the impact of their design decisions (e.g. the objects they have added or removed) on the environment.

Figure 2: Architecture diagram.
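The relay role of the middleware can be illustrated with a minimal in-memory sketch. The article does not specify the wire format or the server code, so the message shapes (`SceneMessage`), the `RelayHub` class and the client identifiers below are all hypothetical, and the `send` callback merely stands in for a network socket write.

```typescript
// Hypothetical message shapes: the article does not specify the wire format.
type WorldPosition = { x: number; y: number; z: number };

type SceneMessage =
  | {
      kind: "object";
      action: "add" | "move" | "remove";
      objectId: string;
      objectType: string;
      position: WorldPosition;
    }
  | { kind: "userPose"; userId: string; position: WorldPosition };

// A connected application (AR or VR). `send` stands in for a socket write.
type RelayClient = { id: string; send: (payload: string) => void };

class RelayHub {
  private clients: RelayClient[] = [];

  join(client: RelayClient): void {
    this.clients.push(client);
  }

  leave(clientId: string): void {
    this.clients = this.clients.filter((c) => c.id !== clientId);
  }

  // Forward a message to every connected client except the sender.
  // Returns the number of clients that received it.
  broadcast(senderId: string, message: SceneMessage): number {
    const payload = JSON.stringify(message);
    let delivered = 0;
    for (const client of this.clients) {
      if (client.id !== senderId) {
        client.send(payload);
        delivered++;
      }
    }
    return delivered;
  }
}

// Usage: the VR user places a tree and the AR client receives the update.
const hub = new RelayHub();
const arInbox: string[] = [];
const vrInbox: string[] = [];
hub.join({ id: "ar-headset", send: (p) => { arInbox.push(p); } });
hub.join({ id: "vr-headset", send: (p) => { vrInbox.push(p); } });

const delivered = hub.broadcast("vr-headset", {
  kind: "object",
  action: "add",
  objectId: "tree-1",
  objectType: "tree",
  position: { x: 12, y: 0, z: 5 },
});
```

Excluding the sender from the broadcast is a design choice made for this sketch: it avoids echoing an update back to the application that has already applied it locally.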

The AR application is also developed in Unity and uses MRTK [L3]. Users can interact with 3D objects displayed in the HMD, for example by moving or dropping them or by displaying information about them. Each object's location in the real world corresponds to its location in the 3D virtual reality model.

Object and user locations are implemented using Azure anchors. AR anchors are placed at specific positions in the real world, and equivalent anchors are placed at the corresponding positions in the VR model. Every object or user position in both the AR and VR scenes is then expressed relative to the anchor positions.
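The idea of positioning objects relative to matching anchors can be sketched as a pair of coordinate conversions. This is an illustration under simplifying assumptions, not the authors' implementation: it does not call any Azure API, each anchor pose is reduced to a position plus a rotation about the vertical axis (a real system would typically use a full 3D rotation), and all names (`AnchorPose`, `toAnchorLocal`, `fromAnchorLocal`, `mapBetweenScenes`) and coordinates are hypothetical.

```typescript
type Vec3 = { x: number; y: number; z: number };

// Simplified anchor pose: a position plus a yaw angle (radians) about the
// vertical y axis. This is an assumption made to keep the sketch short.
type AnchorPose = { position: Vec3; yaw: number };

// Rotate a vector about the y axis by the given angle.
function rotateY(v: Vec3, angle: number): Vec3 {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  return { x: v.x * c + v.z * s, y: v.y, z: -v.x * s + v.z * c };
}

// Express a scene position in the anchor's local frame.
function toAnchorLocal(point: Vec3, anchor: AnchorPose): Vec3 {
  const offset = {
    x: point.x - anchor.position.x,
    y: point.y - anchor.position.y,
    z: point.z - anchor.position.z,
  };
  return rotateY(offset, -anchor.yaw);
}

// Convert an anchor-local position back into scene coordinates.
function fromAnchorLocal(local: Vec3, anchor: AnchorPose): Vec3 {
  const rotated = rotateY(local, anchor.yaw);
  return {
    x: rotated.x + anchor.position.x,
    y: rotated.y + anchor.position.y,
    z: rotated.z + anchor.position.z,
  };
}

// Map a position from one scene to the other via a pair of matching anchors.
function mapBetweenScenes(point: Vec3, from: AnchorPose, to: AnchorPose): Vec3 {
  return fromAnchorLocal(toAnchorLocal(point, from), to);
}

// Usage: a tree placed in the VR model is mapped to the on-site AR scene.
const modelAnchor: AnchorPose = { position: { x: 10, y: 0, z: 5 }, yaw: 0 };
const siteAnchor: AnchorPose = { position: { x: 2, y: 0, z: -3 }, yaw: Math.PI / 2 };
const treeInModel: Vec3 = { x: 12, y: 0, z: 5 };
const treeOnSite = mapBetweenScenes(treeInModel, modelAnchor, siteAnchor);
// treeOnSite is approximately { x: 2, y: 0, z: -5 }
```

Because only the anchor-relative offset is shared, neither application needs to know the other's global coordinate system; swapping the `from` and `to` anchors maps positions in the opposite direction.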

Example

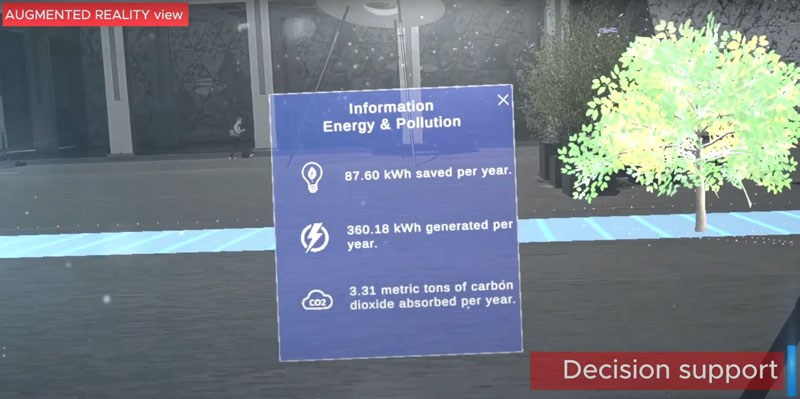

In Figure 3, we see the VR user placing a virtual object, in this case a tree. The AR user can see the same object onsite (see Figure 4). Both users can see information about the impact of such changes (see Figure 5). Finally, the AR user waves goodbye to the VR user (see Figure 6) and the VR user sees a 3D character reproducing the movements (see Figure 7).

Figure 3: User can place virtual object in VR.

Figure 4: User can see the same object in AR.

Figure 5: Both users can see information about the impact of their changes directly.

Figure 6: AR user waving to user in VR.

Figure 7: 3D character representing the AR user in VR mode, reproducing their movements.

Future Work

The Luxemverse prototype explored interaction across realities, and development is ongoing. Planned improvements include the avatar and the nature of real-time collaboration and interaction between users across realities, with the aim of enhancing presence, engagement and involvement. This work will allow us to undertake more extensive user studies and to explore the potential within entertainment and other sectors.

Luxemverse was funded by the Luxembourg Institute of Science and Technology. We would like to thank Mael Cornil and others who assisted.

Links:

[L1] https://www.cross-realities.org

[L2] https://developer.vive.com/eu/support/sdk/

[L3] https://github.com/MixedRealityToolkit/MixedRealityToolkit-Unity

Reference:

[1] P. Milgram et al., “Augmented reality: A class of displays on the reality-virtuality continuum”, Telemanipulator and Telepresence Technologies, vol. 2351, SPIE, 1995.

Please contact:

Roderick McCall, Luxembourg Institute of Science and Technology

Joan Baixauli, Luxembourg Institute of Science and Technology

Mickael Stefas, Luxembourg Institute of Science and Technology