by Philipp Brauner, Alexander Hick, Ralf Philipsen and Martina Ziefle (RWTH Aachen University, Germany)

With the advent of ChatGPT, LaMDA, and other large language models, we wanted to find out what the public expects from artificial intelligence (AI). For a wide range of topics, we measured how likely people consider each development and how they evaluate it, and where these two judgements are (im)balanced. The resulting criticality map can show researchers and policymakers which areas need particular action.

As AI becomes prevalent in our personal and professional lives through automated decision support, voice assistants, ambient assisted living and large language models (e.g. ChatGPT and LaMDA), understanding public perception of AI is crucial to ensure that the development and deployment of AI and AI-based technologies are well aligned with our norms and values.

In a recent study published in Frontiers in Computer Science [1], we surveyed 122 people aged 18 to 69 years to measure and map the social acceptance of AI technology. We asked laypeople to assess various AI-related statements in order to identify areas that need action.

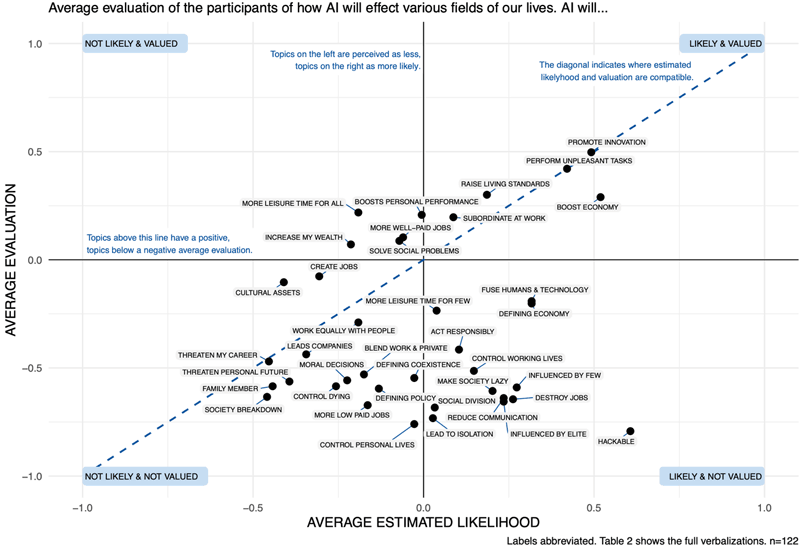

The resulting graph is shown in Figure 1. It can be read as a criticality map with four sections. The upper left contains positive but unlikely statements, while the upper right shows positive and likely statements. Negative but likely statements are in the lower right, and negative and unlikely statements are in the lower left. Points on the diagonal show consistent perceptions, while points off the diagonal show divergent expectations and evaluations. Three sets of points are worth examining closely. Firstly, those in the bottom half of the graph, as they are perceived negatively by participants, and future research should address these concerns. Secondly, those in the upper left quadrant, as they are considered positive but unlikely, revealing where AI implementation falls short of participants' desires. Finally, items with significant gaps between likelihood and assessment (off the diagonal) are likely to cause more uncertainty in the population.

Examples of likely and positive statements are “promote innovation” and “do unpleasant activities”, while unlikely and negative statements include “occupy leading positions in working life” and “threaten my professional future.” Examples of unlikely and positive statements are “create cultural assets” and “lead to more leisure time for everyone”, while probable and negative statements are “be hackable” and “be influenced by a few”.

Figure 1: Criticality map showing average evaluation and average estimated likelihood of how AI will affect various areas of our lives.
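The reading rules above can be expressed as a short classification step. The following is a minimal sketch, not the procedure used in [1]: it assumes that each participant's likelihood and evaluation ratings have been rescaled to a symmetric range from -1 to +1 with 0 as the neutral midpoint (the actual scales are described in the paper). The names `StatementSummary`, `summarise`, `flags` and the cut-off `gap_threshold` are hypothetical, and the example ratings are invented for illustration; they are not data from the study.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class StatementSummary:
    """Averaged ratings for one AI-related statement (hypothetical data model)."""
    label: str
    likelihood: float  # mean estimated likelihood, assumed rescaled to -1 (unlikely) .. +1 (likely)
    evaluation: float  # mean evaluation, assumed rescaled to -1 (negative) .. +1 (positive)

    @property
    def quadrant(self) -> str:
        # x-axis = likelihood, y-axis = evaluation; 0 is the neutral midpoint.
        valence = "positive" if self.evaluation >= 0 else "negative"
        expectation = "likely" if self.likelihood >= 0 else "unlikely"
        return f"{valence} and {expectation}"

    @property
    def gap(self) -> float:
        # Signed vertical offset from the diagonal (evaluation == likelihood).
        # > 0: valued more than it is expected; < 0: expected more than it is valued.
        return self.evaluation - self.likelihood


def summarise(label: str, ratings: list[tuple[float, float]]) -> StatementSummary:
    """Average per-participant (likelihood, evaluation) pairs for one statement."""
    return StatementSummary(
        label=label,
        likelihood=mean(r[0] for r in ratings),
        evaluation=mean(r[1] for r in ratings),
    )


def flags(s: StatementSummary, gap_threshold: float = 0.5) -> list[str]:
    """Return the reasons (if any) why a statement deserves a closer look.

    gap_threshold is a hypothetical cut-off, not a value taken from the study.
    """
    reasons = []
    if s.evaluation < 0:  # lower half of the map
        reasons.append("perceived negatively")
    if s.evaluation >= 0 and s.likelihood < 0:  # upper left quadrant
        reasons.append("desired but not expected")
    if abs(s.gap) >= gap_threshold:  # far off the diagonal
        reasons.append("large gap between expectation and evaluation")
    return reasons


# Illustrative ratings only; these are NOT data from the study.
items = [
    summarise("statement A", [(0.8, 0.6), (0.6, 0.8)]),
    summarise("statement B", [(-0.6, 0.7), (-0.4, 0.5)]),
    summarise("statement C", [(0.7, -0.5), (0.5, -0.7)]),
]
for s in items:
    print(f"{s.label}: {s.quadrant}, gap={s.gap:+.2f}, flags={flags(s)}")
```

The sketch uses the signed vertical offset `evaluation - likelihood` rather than the perpendicular distance to the diagonal; the two differ only by a constant factor of √2 in magnitude, so either works for ranking items by how far expectation and evaluation diverge.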

The results show that participants had mixed feelings towards AI: they evaluated some aspects positively and others negatively, and their likelihood estimates varied across topics. This indicates a nuanced rather than a binary view of the impact of AI. Notably, for several topics expectation and evaluation diverged, and these gaps are important to consider for the social acceptance of AI.

We conclude that this visual map helps to identify critical topics with an increased need for either research or governance. Further, we argue that integrating the social sciences early in the research, development and deployment of AI-based technologies could improve these systems, as it would facilitate the early identification of potential barriers to acceptance and, consequently, their mitigation. Overall, this contributes to responsible research and innovation and helps ensure that the resulting systems are more useful and better aligned with our norms and values.

References:

[1] P. Brauner, A. Hick, R. Philipsen, and M. Ziefle, “What does the public think about artificial intelligence? – A criticality map to understand bias in the public perception of AI”, Frontiers in Computer Science, vol. 5, 2023. [Online]. Available: https://www.frontiersin.org/articles/10.3389/fcomp.2023.1113903/full

Please contact:

Philipp Brauner, Human-Computer Interaction Center, RWTH Aachen University, Germany