by Mihály Héder (ELKH SZTAKI and Budapest University of Technology and Economics)

This paper reports on a novel curriculum, the Human-Centered AI Masters Programme (HCAIM), which is being launched this year in four countries. It incorporates the humanities, social sciences and law into Artificial Intelligence education to an unprecedented extent and depth.

The coming wave of ethics recommendations and regulation for technology will present an unprecedented intellectual challenge to software and systems development teams worldwide, and particularly in Europe, where consumer protection and the social control of technology are especially stringent. Meeting this challenge will require surmounting the cultural, and sometimes even cognitive, barriers between engineering and the humanities.

The challenge comes in the form of interdisciplinary language and content in normative documents, and in the resulting need for professionals who can read and interpret such texts. For instance, the IEEE Ethically Aligned Design document opens with a presentation of the Aristotelian concept of eudaimonia, and the draft IEEE P7000 standard series draws on several categories from moral theory, such as deontological and consequentialist ethics. The upcoming AI regulation of the European Union relies heavily on the concepts of transparency and trustworthiness, an epistemic concept and a virtue respectively; the EU High-Level Expert Group’s Ethics Guidelines for Trustworthy AI borrow a page from contemporary social science and replace the corporate profit motive with the pursuit of a wellbeing metric. The list continues with concepts such as power asymmetry, social fairness and bias [1] in OECD, UNESCO and other documents, with the discussion sometimes going as far as the metaphysics of machines [2]. Such topics were previously unthinkable in the subculture that engineers create for themselves, which is based on mathematics, physics and a strong sense of down-to-earth systems thinking.

The normative documents of engineering activities have never before been so interwoven with philosophical and political language. Yet all of these elements are the result of public consultations, the self-regulation of professional societies and the influence of elected representatives. In other words, we can take these documents as expressions of society’s need for the social control of technology [3]. If so, this need may reflect the discovery of the hidden power of engineers, especially those who develop software (AI included). The special property of software is that it is a machine that can be manufactured at almost zero marginal cost once the design is finalised [3, p. 127]; in fact, the process is not even called manufacturing, just copying.

As a result, software is prone to creating technological lock-in situations, because the incentives to reuse pre-existing software instead of writing new code are extremely high, provided the licensing and modularity are also right (a great example is the dominance of the Linux kernel). Software-as-a-Service (SaaS) enhances this effect further by minimising the marginal cost of serving one more request through economies of scale. Our contemporary software tools for productivity, collaboration, AI and even science reflect this: unless there are prohibiting factors (e.g., the software needs to run on-board), we tend to use SaaS. The tsunami of ethics recommendations and regulation is the reaction to this extreme lock-in potential.

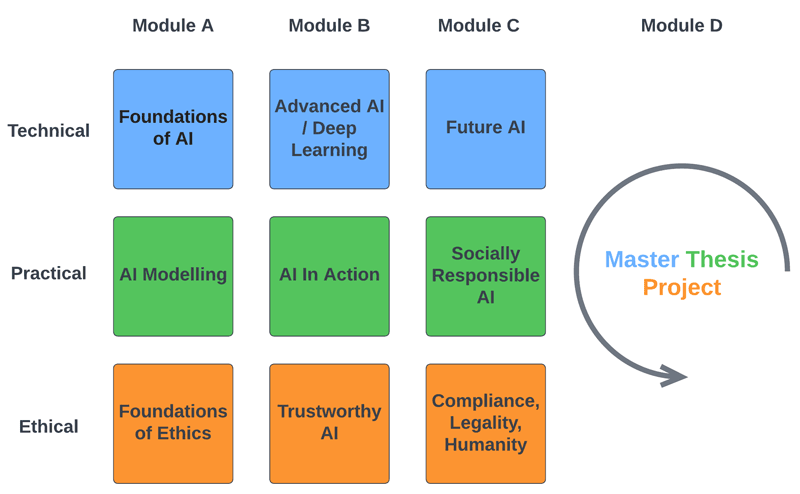

To tackle these challenges, the software and systems engineer of the 21st century needs deep knowledge of the humanities and social sciences. Efforts must be undertaken to properly educate these engineers, but this first requires a common language and understanding among the educators. The Human-Centered AI Masters [L1] is such an effort. The curriculum was created by an interdisciplinary team involving four universities and five companies, and is taught (in alphabetical order) in Budapest, Dublin, Napoli and Utrecht starting in 2022. While the curriculum is AI-specific, its structure is not far from what ethics education for any kind of software needs. Unlike other engineering curricula, it includes a significant amount (around 25%) of outright humanities, broadly construed to include legal, economic and social aspects.

Moreover, the more technology-specific subjects and all case studies are also informed by, and make use of, the foundations of ethics, social sciences and law. Developing the curriculum required ground-breaking work on incorporating topics such as social responsibility, transparency and explainability, and value-based design, and on bringing social fairness into these re-thought technical subjects.

Figure 1: Overview of the HCAIM programme: after interdisciplinary studies, the students write a synthesising master’s thesis.

Work done in engineering education for the previous wave of regulation, mostly concerning privacy and data governance, is also incorporated. As a result, students can learn about privacy-preserving machine learning, algorithmic justice and the prevention of bias, while forward-looking topics such as the singularity, robot rights movements and human-machine biology are also discussed.

The Human-Centered AI Masters programme was co-financed by the Connecting Europe Facility of the European Union under Grant №CEF-TC-2020-1 Digital Skills 2020-EU-IA-0068.

Link:

[L1] https://humancentered-ai.eu/

References:

[1] M. Héder, “A criticism of AI ethics guidelines”, in Információs Társadalom XX, no. 4 (2020): 57–73. https://dx.doi.org/10.22503/inftars.XX.2020.4.5

[2] M. Héder, “The epistemic opacity of autonomous systems and the ethical consequences”, in AI & Society (2020). https://doi.org/10.1007/s00146-020-01024-9

[3] M. Héder, “AI and the resurrection of Technological Determinism”, in Információs Társadalom XXI, no. 2 (2021): 119–130. https://dx.doi.org/10.22503/inftars.XXI.2021.2.8

Please contact:

Mihály Héder, ELKH SZTAKI and Budapest University of Technology and Economics