by Sónia Teixeira, José Rodrigues (Faculty of Engineering of the University of Porto and INESC TEC), Bruno Veloso (Portucalense University and INESC TEC) and João Gama (Faculty of Economics of the University of Porto and INESC TEC)

This Portuguese project compares how humans and computing algorithms classify AI risks and vulnerabilities.

The use of Artificial Intelligence (AI) technologies is increasing, whether at home, in public spaces, in social organisations or in services. The growing adoption of these systems, particularly data-driven decision models, has drawn attention to the risks arising from their use, from which ethical problems may emerge. However, despite extensive research, there is still no consensus on the definition and nature of these risks/vulnerabilities. Categorising the vulnerability types of such systems would therefore facilitate their ethical design.

In this project, we compare two approaches to classifying AI risks/vulnerabilities: classification by humans and classification by a machine. This comparison highlights the similarities and differences between the two approaches. The main goal of this work is to understand which types of AI risks/vulnerabilities are ethical and which are technological, and to identify the differences between human and machine classification. This initial step may provide insights for future analyses of bias and other challenges in software development, as well as of the human aspects that should be considered when designing AI-based systems.

Based on published journal articles that mention the risks, vulnerabilities and challenges of AI, we define technological risks as those focused on technical issues, and ethical risks as those that arise in the outcomes, focusing on non-technical issues.

Our approach [1] has three stages. The first stage consisted of a literature review and a selection of articles by three experts from different areas. The second stage involved a survey in which humans classified the risk concepts identified in the first stage. Finally, the third stage involved machine classification, using an algorithm recognised in the literature as a good baseline for text classification. The input to the algorithm was the set of papers selected in the literature review, from which the risk concepts used in the second stage had been extracted. Once we had both the human and the machine classifications, we analysed them using i) descriptive data analysis, ii) Multiple Factor Analysis (MFA) and iii) clustering.
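The article does not name the baseline algorithm used in the third stage. Purely as an illustration, the sketch below uses multinomial Naive Bayes with Laplace smoothing, a classifier commonly used as a text-classification baseline, to label short texts as "ethical" or "technological". The class name `NaiveBayesTextClassifier`, the tokenizer and the training snippets are hypothetical; they are not the authors' code or data.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Deliberately simple tokenizer: lowercase, keep runs of letters/digits.
    return re.findall(r"[a-z0-9]+", text.lower())


class NaiveBayesTextClassifier:
    """Multinomial Naive Bayes with additive (Laplace) smoothing.

    Hypothetical illustrative baseline; not the classifier used in the study.
    """

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha  # smoothing constant added to every word count

    def fit(self, docs: list[str], labels: list[str]) -> "NaiveBayesTextClassifier":
        self.classes = sorted(set(labels))
        self.class_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        # Vocabulary = every token seen in any training document.
        self.vocab = set().union(*self.word_counts.values())
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_scores(self, doc: str) -> dict[str, float]:
        # log P(class) + sum over tokens of log P(token | class)
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        scores = {}
        for c in self.classes:
            score = math.log(self.class_counts[c] / n_docs)
            for tok in tokenize(doc):
                if tok not in self.vocab:
                    continue  # tokens never seen in training carry no evidence
                numer = self.word_counts[c][tok] + self.alpha
                denom = self.total_words[c] + self.alpha * v
                score += math.log(numer / denom)
            scores[c] = score
        return scores

    def predict(self, doc: str) -> str:
        # Return the class with the highest log-posterior score.
        scores = self.log_scores(doc)
        return max(scores, key=scores.get)


# Hypothetical training snippets standing in for text from the selected papers.
train_docs = [
    "model accuracy and data quality issues in training pipelines",
    "robustness of the algorithm to adversarial noise and reliability of predictions",
    "security vulnerabilities in software and system reliability",
    "moral responsibility and accountability for decisions affecting people",
    "liability and rights of citizens harmed by automated decisions",
    "power imbalance and the dilution of human rights in society",
]
train_labels = ["technological"] * 3 + ["ethical"] * 3

clf = NaiveBayesTextClassifier().fit(train_docs, train_labels)
print(clf.predict("data quality and accuracy of the model"))
print(clf.predict("accountability and responsibility for automated decisions"))
```

Working in log space avoids numerical underflow when many small word probabilities are multiplied, and the smoothing constant keeps a word that is unseen for one class from zeroing out that class entirely.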

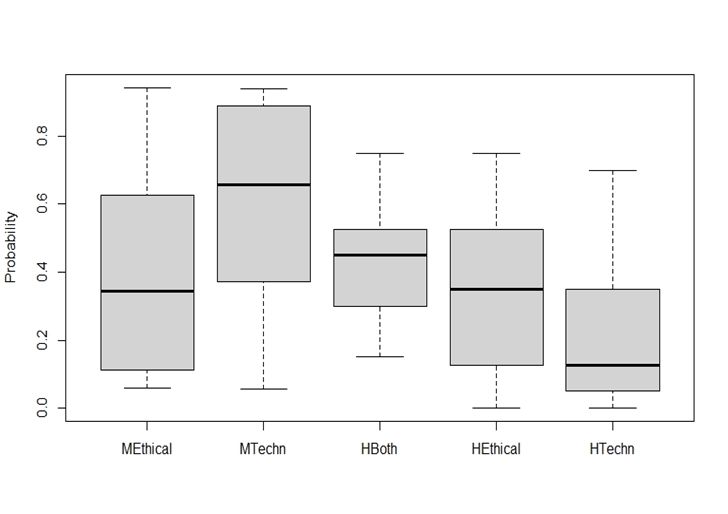

We observe that the distributions of machine and human classifications (Figure 1) appear to differ when concepts and papers are classified as technological. However, when concepts and papers are classified as ethical, the medians of the human and machine classifications are very close. The analysis yields three clusters. Cluster one comprises ten risks/vulnerabilities (bias, interpretability, protection, explainability, semantic, opacity, completeness, accuracy, data quality and reliability), and for six of them humans and machines did not reach a consensus. Cluster two consists of eight risk concepts on which humans and machines agreed, classifying them as technological; these include extinction, transparency, fairness, manipulation, safety and security. Finally, cluster three contains risk concepts classified essentially as ethical: moral, power, diluting rights, responsibility, systemic, liability, accountability and data protection. Six of these risks were rated as ethical by both humans and machines. Humans classify the vulnerability concepts at a more abstract level, whereas the machine classifies them at a more contextual level. That is, even though the two approaches work at different levels of detail, their classifications of vulnerabilities agree in most cases.

Figure 1: Distribution of the machine (M) and human (H) classifications.
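The article does not state which clustering algorithm produced the three clusters. The sketch below swaps in plain k-means with k = 3, applied to a hypothetical two-dimensional profile per concept: the share of human respondents who rated it ethical and the share of machine classifications that did so. The concept names and values are invented placeholders, not results from the study; the sketch only shows how concepts on which humans and machines agree (both shares low, or both high) separate from those on which they disagree.

```python
import math

# Hypothetical profiles: (share rated ethical by humans, share rated ethical by machine).
profiles = {
    "concept_A": (0.10, 0.15),  # both lean technological
    "concept_B": (0.20, 0.10),
    "concept_C": (0.85, 0.90),  # both lean ethical
    "concept_D": (0.90, 0.80),
    "concept_E": (0.75, 0.20),  # humans lean ethical, machine technological
    "concept_F": (0.70, 0.25),
}


def kmeans(points, k, n_iter=100):
    # Deterministic farthest-point initialisation: start from the first point,
    # then repeatedly add the point farthest from all chosen centroids.
    centroids = [points[0]]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(far)
    assign = []
    for _ in range(n_iter):
        # Assignment step: each point goes to its nearest centroid.
        assign = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: each centroid moves to the mean of its members.
        new = []
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:
                new.append(tuple(sum(xs) / len(members) for xs in zip(*members)))
            else:
                new.append(centroids[j])  # keep an empty cluster's centroid in place
        if new == centroids:
            break  # converged: centroids no longer move
        centroids = new
    return assign, centroids


def describe(centroid, threshold=0.5):
    # Interpret a cluster centre as agreement or disagreement between H and M.
    human, machine = centroid
    if human >= threshold and machine >= threshold:
        return "agree: ethical"
    if human < threshold and machine < threshold:
        return "agree: technological"
    return "disagree"


names = list(profiles)
assignment, centroids = kmeans(list(profiles.values()), k=3)
for j, c in enumerate(centroids):
    members = [n for n, a in zip(names, assignment) if a == j]
    print(describe(c), members)
```

The farthest-point start keeps the toy run reproducible; with real survey data a randomised initialisation with several restarts, or the clustering method actually used in [1], would be more appropriate.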

In future work, we intend to deepen our research to better understand the sensitivity of the human classification, the machine model and the resulting bias.

This project is part of a PhD thesis in Engineering and Public Policy, started in 2019 at the Faculty of Engineering of the University of Porto and hosted by the Laboratory of Artificial Intelligence and Decision Support at INESC TEC. The research reported in this work was partially supported by the European Commission-funded project “Humane AI: Toward AI Systems That Augment and Empower Humans by Understanding Us, our Society and the World Around Us” (grant #820437). This support is gratefully acknowledged.

Reference:

[1] S. Teixeira et al., “Ethical and Technological AI Risks Classification: A Human vs Machine Approach”, in ECML PKDD 2022 Workshops.

Please contact:

Sónia Teixeira, INESC TEC, Portugal