by Tamás Cserteg, Anh Tuan Hoang, Krisztián Kis, János Csempesz (SZTAKI) and Zsolt János Viharos (SZTAKI and John von Neumann University)

The combination of cognitive AI and robotics has already had a significant impact on the way we live. Newly developed solutions not only cover industrial applications but are also spreading widely in social robotics. To bring cognitive AI and robotics closer to people who are not exposed to these technologies on a day-to-day basis, a portrait-drawing demonstrator called Piktor-O-Bot has been developed at SZTAKI. Over the past year and a half, it has drawn portraits of more than a thousand people, which shows that there is genuine public interest in cognitive robotic applications.

As cognitive AI and robotics technology continues to advance and becomes more affordable, cognitive robotics applications and use-cases are likely to spread even further. It is therefore important to help people who rarely encounter robots and AI applications gain a better understanding of these technologies, so that they can welcome new generations of robotic applications.

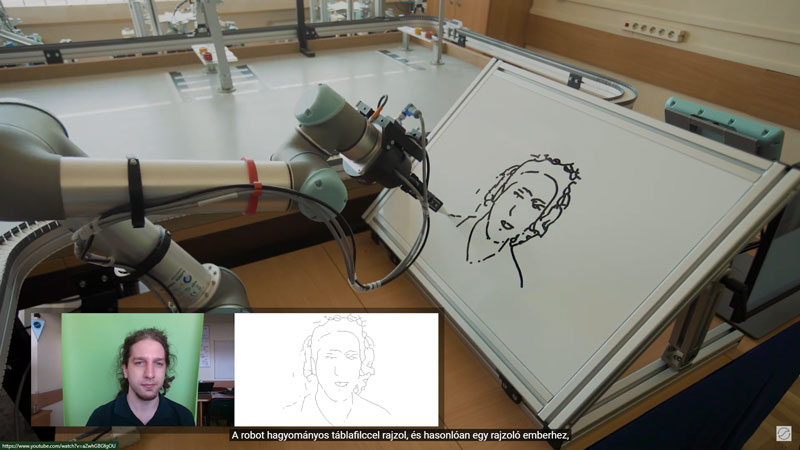

The Piktor-O-Bot portrait-drawing demonstrator developed at SZTAKI provides a unique and entertaining experience for visitors while demonstrating state-of-the-art AI and robotic technologies [L1]. It combines machine-learning-based image processing to generate the image to be drawn, optimal path planning for quick drawing, and force-feedback robot control for precise execution. A camera takes a picture of the visitor, and our specialised image-processing algorithm then reproduces the person's facial features as a set of lines (Figure 1). Because the pen has to travel between lines without drawing, the total drawing time depends on the order in which the lines are drawn. This order is determined by a sequence-planning algorithm based on a VRP (vehicle routing problem) solver. The drawing itself takes place on a calibrated board, with a simple brush pen and no additional instruments. The cobot draws each line individually while maintaining a constant contact force between the pen and the board. To draw as many portraits as possible, even on busy days at a fair, the whole process is designed to be quick: an average portrait takes about two minutes.
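The article does not describe the VRP formulation. The following is therefore only a minimal sketch of the underlying idea: ordering the strokes to reduce pen-up travel. It uses a greedy nearest-neighbour heuristic, swapped in here for the VRP solver, and lets each stroke be drawn in either direction. The stroke representation (polylines of `(x, y)` points, starting from a pen position at the origin) and the function names `order_strokes` and `pen_up_distance` are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Stroke = List[Point]  # hypothetical representation: polyline of (x, y) points


def _dist(a: Point, b: Point) -> float:
    """Euclidean distance between two points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def order_strokes(strokes: List[Stroke], start: Point = (0.0, 0.0)) -> List[Stroke]:
    """Greedy nearest-neighbour ordering of strokes to reduce pen-up travel.

    Both endpoints of every remaining stroke are considered; a stroke that is
    best entered from its end is returned reversed.
    """
    remaining = list(range(len(strokes)))
    pen = start
    ordered: List[Stroke] = []
    while remaining:
        best_idx, best_rev, best_d = remaining[0], False, math.inf
        for i in remaining:
            d_fwd = _dist(pen, strokes[i][0])   # enter at the first point
            d_rev = _dist(pen, strokes[i][-1])  # enter at the last point
            if d_fwd < best_d:
                best_idx, best_rev, best_d = i, False, d_fwd
            if d_rev < best_d:
                best_idx, best_rev, best_d = i, True, d_rev
        remaining.remove(best_idx)
        chosen = strokes[best_idx][::-1] if best_rev else strokes[best_idx]
        ordered.append(chosen)
        pen = chosen[-1]  # the pen is lifted at the end of the drawn stroke
    return ordered


def pen_up_distance(strokes: List[Stroke], start: Point = (0.0, 0.0)) -> float:
    """Total distance travelled with the pen lifted, for a given stroke order."""
    total, pen = 0.0, start
    for s in strokes:
        total += _dist(pen, s[0])
        pen = s[-1]
    return total


if __name__ == "__main__":
    strokes = [[(10.0, 0.0), (11.0, 0.0)], [(1.0, 0.0), (2.0, 0.0)], [(5.0, 0.0), (3.0, 0.0)]]
    ordered = order_strokes(strokes)
    print(pen_up_distance(strokes), "->", pen_up_distance(ordered))  # 23.0 -> 7.0
```

A proper VRP or TSP solver, possibly with local-search refinement, would generally find better orders than this greedy pass. The greedy version is shown only because it is short and easy to verify by hand.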

Figure 1: From the real face, through the vector graphic, to the final drawing produced by the Piktor-O-Bot demonstrator of SZTAKI.
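The article does not describe how the cobot's force control is implemented. To illustrate what "maintaining a constant force" means, here is a toy one-dimensional simulation under loudly stated assumptions. The pen-board contact is modelled as a linear spring that only pushes. Each cycle, the pen's commanded depth along the board normal is corrected in proportion to the force error, so the depth integrates the error over time. The stiffness, gain, target force and the function name `simulate_constant_force` are all hypothetical.

```python
from typing import List


def simulate_constant_force(target_n: float, stiffness_n_per_mm: float,
                            gain_mm_per_n: float = 0.1, steps: int = 200,
                            z0_mm: float = 0.0) -> List[float]:
    """Toy force loop: adjust pen depth until the simulated force reaches target.

    Hypothetical model: force = stiffness * penetration when the pen presses on
    the board, and zero while it is above the board (z <= 0).
    Returns the simulated force reading at each control cycle.
    """
    z = z0_mm  # pen penetration along the board normal in mm (positive = pressing)
    history: List[float] = []
    for _ in range(steps):
        force = stiffness_n_per_mm * max(0.0, z)  # simulated force-sensor reading
        error = target_n - force
        z += gain_mm_per_n * error  # depth command accumulates the force error
        history.append(force)
    return history


if __name__ == "__main__":
    forces = simulate_constant_force(target_n=5.0, stiffness_n_per_mm=2.0)
    print(round(forces[-1], 6))  # settles at the 5.0 N target
```

In the contact region of this linear toy model, the error shrinks by a factor of `1 - gain * stiffness` per cycle, so the loop converges when `0 < gain * stiffness < 2`. A real brush pen is softer and less linear, which is one reason force feedback is preferable to a fixed, calibrated depth.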

The demonstrator has attracted substantial attention since the beginning of its development [L2]. It has been featured at many exhibitions, including conferences and fairs across Hungary and even the 2nd Stuttgart Science Festival in Germany [L3], reflecting the public's strong interest in cognitive robotics and in gaining insight into the fields of artificial intelligence and robotics (Figure 2).

Figure 2: Strong public interest in cognitive robotics at various public fairs and events.

The portrait-drawing process flow of Piktor-O-Bot is considerably more complex than it may appear at first sight. It consists of seven image-processing steps (the step numbers correspond to the numbering in Figure 3). Two of these steps are performed by the same artificial neural network model, so they share an index.

- Face detection: This is the first step of the portrait-drawing process and involves both recognising that a face is present and localising it. For this purpose, RTNet [1] was used, a pre-trained, convolution-based, multi-level, pyramidal neural network that can detect human faces in an image in real time with high accuracy.

- Background removal: A pre-trained neural network called MODNet [2] removes objects that are irrelevant to the portrait from the image, leaving only the person's face and body.

- Contour highlighting: DexiNed [3], a state-of-the-art convolution-based neural network, was used to highlight facial contours efficiently.

- Face segmentation: Segmenting the different parts of the face (e.g., eyes, mouth and nose) is also a difficult task, which was again solved with RTNet.

- Iris detection: The eyes are a particularly sensitive part of a portrait, so iris and pupil detection were integrated into the process to produce a more lifelike drawing.

- Edge thinning: The Zhang-Suen thinning method was used to reduce the highlighted edges to lines one pixel wide.

- Vectorisation: Finally, the AutoTrace program converts the resulting raster contour images into a set of mathematically describable lines and curves.
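Of the steps above, edge thinning is a classic, fully specified algorithm, so it can be illustrated compactly. The following is a minimal pure-Python sketch of Zhang-Suen thinning on a binary image stored as a list of lists (1 = edge pixel, 0 = background). The function name and data representation are assumptions, since the article does not describe the project's own implementation. A pixel is removed in a sub-iteration if it has 2 to 6 foreground neighbours and exactly one 0-to-1 transition around its neighbourhood, and if the sub-iteration's two neighbour products are zero.

```python
from typing import List

Image = List[List[int]]  # hypothetical representation: 1 = edge pixel, 0 = background


def zhang_suen_thin(img: Image) -> Image:
    """Thin a binary image with the Zhang-Suen algorithm; the input is not modified."""
    rows, cols = len(img), len(img[0])
    # Padded working copy: a one-pixel background border gives every pixel 8 neighbours.
    g = ([[0] * (cols + 2)]
         + [[0] + list(row) + [0] for row in img]
         + [[0] * (cols + 2)])

    def neighbours(r: int, c: int) -> List[int]:
        # P2..P9: clockwise, starting from the pixel directly above P1.
        return [g[r - 1][c], g[r - 1][c + 1], g[r][c + 1], g[r + 1][c + 1],
                g[r + 1][c], g[r + 1][c - 1], g[r][c - 1], g[r - 1][c - 1]]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):  # the two sub-iterations
            to_delete = []
            for r in range(1, rows + 1):
                for c in range(1, cols + 1):
                    if g[r][c] != 1:
                        continue
                    p = neighbours(r, c)
                    p2, _, p4, _, p6, _, p8, _ = p
                    b = sum(p)  # B(P1): number of foreground neighbours
                    # A(P1): number of 0 -> 1 transitions in P2, P3, ..., P9, P2
                    a = sum(1 for i in range(8) if p[i] == 0 and p[(i + 1) % 8] == 1)
                    if step == 0:
                        cond = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        cond = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and cond:
                        to_delete.append((r, c))
            # Deletions are applied only after the whole sub-iteration has been scanned.
            for r, c in to_delete:
                g[r][c] = 0
            if to_delete:
                changed = True
    return [row[1:-1] for row in g[1:-1]]
```

For example, a solid 3 × 5 block of edge pixels is reduced to a short horizontal segment along its middle row. Libraries such as OpenCV's `ximgproc` contrib module provide optimised implementations of the same method.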

Links:

[L1] Robotic Face Drawing: https://www.youtube.com/watch?v=8ULIP_5nEJ0

[L2] Merry Christmas with the robot | SZTAKI: https://www.youtube.com/watch?v=NhOibxWIpH0

[L3] 2nd Stuttgart Science Festival in Germany: https://www.facebook.com/watch/?v=562239958892035

References:

[1] Y. Lin, et al., “RoI Tanh-polar transformer network for face parsing in the wild,” Image and Vision Computing, 2021.

[2] Z. Ke, et al., “MODNet: Real-Time Trimap-Free Portrait Matting via Objective Decomposition,” in Proc. AAAI Conference on Artificial Intelligence, 2022.

[3] X. Soria, E. Riba, A. Sappa, “Dense Extreme Inception Network: Towards a Robust CNN Model for Edge Detection,” in Proc. IEEE Winter Conference on Applications of Computer Vision (WACV), 2020.

Please contact:

Tamás Cserteg, SZTAKI, Hungary


Figure 3: The portrait drawing process flow of Piktor-O-Bot consists of seven image-processing steps; two of them apply the same model.