by Konstantinos Papoutsakis (FORTH-ICS), Maria Pateraki (National Technical University of Athens & FORTH-ICS)

Visual monitoring of human behaviour during work activities is a key ingredient for task progress monitoring and fluency in human-robot collaboration, as well as for supporting worker safety in industrial environments. As part of the FELICE project, the proposed framework employs low-cost, fixed sensors in a realistic manufacturing environment to observe work postures and actions in real time during car-door assembly. The aim is to estimate workers' states and potential ergonomic risks so that the system can initiate robot collaboration with a specific worker, delegate tasks to the robot and ease the physical burden, while also contributing to the worker's well-being.

The recent surge of interest in the manufacturing sector in technological advances related to collaborative robots is directly linked to fast-growing demands for greater production efficiency and flexibility in assembly processes and for lower operating costs. Beyond enhancing competitiveness, the introduction of collaborative robots (cobots) in the workplace can also have a positive impact on the human workforce and on safety. Delegating ergonomically stressful and repetitive tasks to robots, or recruiting cobots to aid workers during such strenuous tasks through tool handovers or other collaborative actions, eases the physical burden on humans and contributes to their occupational health (e.g., by helping to avoid muscle strains and injuries). The FELICE project was launched in 2021 [L1] to address one of the greatest challenges in robotics, namely the coordinated interaction and combination of human and robot skills, with agile production as its application priority area. It envisages adaptive workspaces and a cognitive robot collaborating with workers on assembly lines, uniting multidisciplinary research in collaborative robotics, AI, computer vision, IoT, machine learning, data analytics, cyber-physical systems, process optimisation and ergonomics. Its overarching goal is to deliver a modular platform that integrates an array of autonomous and cognitive technologies to increase the agility and productivity of a manual assembly production system, ensure safety, and improve the physical and mental well-being of workers.

As part of the FELICE system, a vision-based framework is introduced for real-time monitoring of 3D human motion and classification of ergonomic work postures during assembly tasks [1]. The main contributions of this work are: i) realising visual perception and cognitive capabilities that allow the system to assess the ergonomic risk of physical strain for workers, towards the prevention of work-related musculoskeletal disorders (WMSD); ii) advancing human-robot collaboration by enabling the cobot to operate safely and to intervene promptly during assembly actions that pose a high ergonomic risk to humans, sharing and reallocating tasks between human and robot in an efficient and flexible manner; and iii) providing potential input on real-time worker motion, actions and physical state to a digital twin of the manufacturing process.

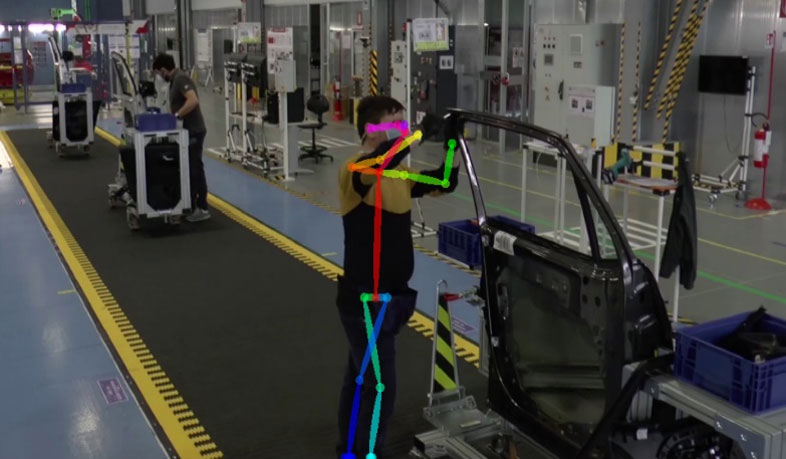

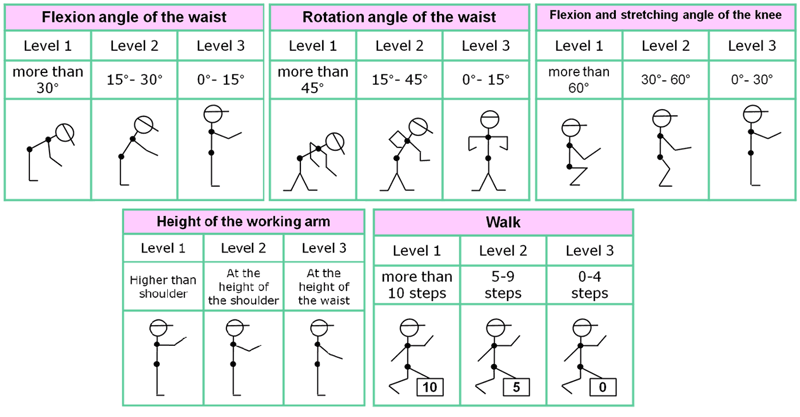

Our pilot case study addresses the car manufacturing industry in a real workplace located at the CRF-SPW Research & Innovation department of the Stellantis group in Melfi, Italy. We focus on workers who work in shifts, each assigned to a single workstation of a simulated car-door assembly line (Figure 1), where they repeatedly execute a set of sequential actions referred to as a task cycle (total duration 4 to 5 minutes). In this context, we aim to estimate skeleton-based 3D body motion in the presence of severe, long-term occlusions in order to automatically classify five classes of ergonomically unsafe work postures during assembly actions (Figure 2). The posture classes are selected according to the postural grid of the MURI risk analysis method, a generic and widely used tool for evaluating efficiency and screening physical ergonomics at workstations in different production contexts, according to the World Class Manufacturing (WCM) strategy. Each class features three levels of postural deviation, associated with a risk of physical strain on specific body parts that ranges from high ("Level 1") to low ("Level 3"). Level 1 and Level 2 postural deviations are known to lead to increased physical discomfort and stress in the context of particular work activities and serve as risk indicators for WMSD [2].
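To make the level semantics concrete, the following minimal sketch encodes a posture label as a class id plus a MURI risk level, flags Level 1 and Level 2 as WMSD risk indicators as stated above, and summarises how often each class was observed at a risk-indicating level. The five classes are only named in Figure 2, so the sketch uses hypothetical integer ids 0 to 4; `PostureObservation` and `risk_share_per_class` are illustrative names, not part of the authors' method, which learns the classification from 3D skeleton sequences.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical encoding: the five MURI posture classes are plain ids 0..4.
NUM_CLASSES = 5
# Level 1 (high risk) and Level 2 deviations serve as WMSD risk indicators.
RISK_LEVELS = (1, 2)


@dataclass(frozen=True)
class PostureObservation:
    posture_class: int  # hypothetical id of a MURI posture class, 0..4
    level: int          # 1 = high risk, 2 = intermediate, 3 = low risk

    def __post_init__(self):
        if not 0 <= self.posture_class < NUM_CLASSES:
            raise ValueError("posture_class out of range")
        if self.level not in (1, 2, 3):
            raise ValueError("level must be 1, 2 or 3")

    @property
    def is_risk_indicator(self) -> bool:
        return self.level in RISK_LEVELS


def risk_share_per_class(observations):
    """Fraction of each observed class's samples that are at Level 1 or 2."""
    totals = Counter(o.posture_class for o in observations)
    risky = Counter(o.posture_class for o in observations if o.is_risk_indicator)
    return {c: risky[c] / totals[c] for c in sorted(totals)}
```

For example, observations `(0, 1)`, `(0, 3)` and `(2, 2)` yield `{0: 0.5, 2: 1.0}`: half of the class-0 samples and all class-2 samples fall into a risk-indicating level.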

Figure 1: The car-door production line at the CRF workplace, which comprises three assembly workstations. A line worker is assigned to each workstation. The human body pose of a worker is estimated and overlaid (colour-coded skeletal body model). Image courtesy of Stellantis – Centro Ricerche FIAT (CRF) / SPW Research & Innovation department.

Figure 2: Five classes of ergonomic body postures during work tasks according to the MURI risk analysis approach. Image courtesy of Stellantis – Centro Ricerche FIAT (CRF) / SPW Research & Innovation department.

For each workstation, visual information (RGB image sequences) is acquired using two cameras installed in the actual workplace. The 2D body pose is estimated per frame using the popular OpenPose method [2]. Subsequently, the MocapNet2 method [3], which is based on ensembles of deep neural networks, is employed to efficiently regress a view-invariant, skeleton-based 3D pose. This non-invasive solution for estimating 3D human motion removes the need to install special, expensive equipment such as a motion-capture system with wearable suits or reflective markers. The 3D skeletal data sequence is forwarded to a Spatio-Temporal Graph Convolutional Network (ST-GCN) model, which learns discriminative representations of the spatio-temporal dependencies between the human joints (Figure 3). In each frame, the ST-GCN model represents the human skeletal joints as the nodes of a graph whose intra-frame edges follow the body's skeletal connections, whereas inter-frame edges connect the same joint across consecutive frames to model the temporal dynamics of human motion. The soft Dynamic Time Warping approach (softDTW) is used to estimate the minimal-cost temporal alignment between sequences, serving both as a differentiable loss function and as a classification metric.
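The spatio-temporal graph described above can be illustrated with a minimal NumPy sketch of one simplified ST-GCN block: a spatial graph convolution over the skeleton's intra-frame edges, followed by a temporal convolution that realises the inter-frame edges linking each joint to itself in neighbouring frames. The 5-joint skeleton, the weights and the function names are hypothetical; the real 3D skeleton has many more joints, and full ST-GCN layers additionally use partitioned adjacency matrices, learnable edge importance, batch normalisation and residual connections, which are omitted here.

```python
import numpy as np

# Hypothetical toy skeleton: 0 pelvis, 1 neck, 2 head, 3 left hand, 4 right hand.
BONES = [(0, 1), (1, 2), (1, 3), (1, 4)]
NUM_JOINTS = 5


def normalized_adjacency(bones, num_joints):
    """Intra-frame edges (bones plus self-loops), symmetrically normalised."""
    a = np.eye(num_joints)
    for i, j in bones:
        a[i, j] = a[j, i] = 1.0
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return d_inv_sqrt[:, None] * a * d_inv_sqrt[None, :]


def st_gcn_block(x, a_norm, w_spatial, w_temporal):
    """One simplified (single-partition) ST-GCN block.

    x:          (T, V, C_in) features of V joints over T frames
    a_norm:     (V, V) normalised spatial adjacency
    w_spatial:  (C_in, C_out) spatial graph-convolution weights
    w_temporal: (K,) odd-length temporal kernel shared by all joints/channels
    """
    k = len(w_temporal)
    if k % 2 == 0:
        raise ValueError("temporal kernel length must be odd")
    # Spatial step: each joint aggregates its skeletal neighbours in the same frame.
    h = np.einsum("uv,tvc->tuc", a_norm, x) @ w_spatial
    # Temporal step: the same joint is linked across consecutive frames,
    # implemented as a 1D convolution along time with 'same' zero padding.
    pad = k // 2
    h_pad = np.pad(h, ((pad, pad), (0, 0), (0, 0)))
    out = np.zeros_like(h)
    for t in range(h.shape[0]):
        out[t] = np.tensordot(w_temporal, h_pad[t:t + k], axes=(0, 0))
    return np.maximum(out, 0.0)  # ReLU non-linearity
```

Feeding 30 frames of 3D joint coordinates, i.e. an input of shape `(30, NUM_JOINTS, 3)`, with `w_spatial` of shape `(3, 16)` produces features of shape `(30, NUM_JOINTS, 16)`; stacking such blocks and pooling over joints and time yields a sequence-level representation.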

![Figure 3: An overview of the proposed approach for vision-based classification of work postures given 3D skeleton-based body pose sequences captured during manufacturing activities [1].](/images/stories/EN132/papoutsakis3.png)

Figure 3: An overview of the proposed approach for vision-based classification of work postures given 3D skeleton-based body pose sequences captured during manufacturing activities [1].
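The softDTW alignment that the approach uses as both loss and classification metric can be sketched with the standard soft-DTW forward recursion, in which the hard minimum of classic DTW is replaced by a smoothed minimum controlled by a parameter `gamma`. The nearest-prototype classifier below, built on the soft-DTW divergence (which subtracts the self-alignment terms so that identical sequences score close to zero), is a hypothetical illustration: the article does not specify which sequences or embeddings are compared, and the gradients needed to train with soft-DTW as a loss would come from an automatic-differentiation framework, which is not shown.

```python
import numpy as np


def _softmin(a, b, c, gamma):
    """Smoothed minimum -gamma * log(sum(exp(-v / gamma))), stable with inf inputs."""
    z = -np.array([a, b, c]) / gamma
    zmax = z.max()
    if np.isneginf(zmax):
        return np.inf
    return -gamma * (zmax + np.log(np.exp(z - zmax).sum()))


def soft_dtw(x, y, gamma=1.0):
    """Soft-DTW value between sequences x (n, d) and y (m, d), squared Euclidean cost."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n, m = len(x), len(y)
    cost = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)
    r = np.full((n + 1, m + 1), np.inf)
    r[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            r[i, j] = cost[i - 1, j - 1] + _softmin(
                r[i - 1, j - 1], r[i - 1, j], r[i, j - 1], gamma)
    return r[n, m]


def soft_dtw_divergence(x, y, gamma=1.0):
    """Soft-DTW with self-alignment terms removed."""
    return soft_dtw(x, y, gamma) - 0.5 * (soft_dtw(x, x, gamma) + soft_dtw(y, y, gamma))


def classify(query, prototypes, gamma=1.0):
    """Hypothetical nearest-prototype rule: label with the smallest divergence."""
    return min(prototypes,
               key=lambda label: soft_dtw_divergence(query, prototypes[label], gamma))
```

With prototypes `up = [[0], [1], [2], [3]]` and `down = [[3], [2], [1], [0]]`, the slower query `[[0], [0], [1], [2], [3]]` is assigned to `"up"`, because soft-DTW tolerates the repeated first frame that a frame-by-frame comparison would penalise. As `gamma` approaches 0, the value approaches classic DTW.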

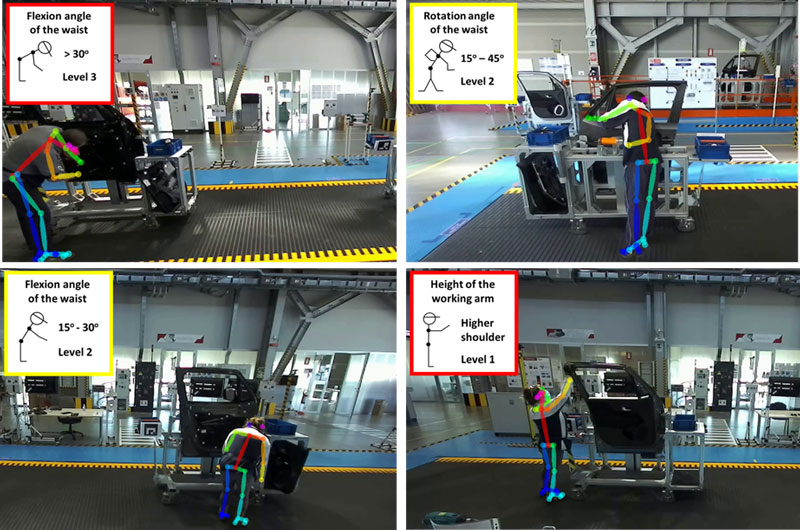

Figure 4: Snapshots of car-door assembly activities captured in a real manufacturing environment. The estimated 3D human body poses (overlaid as a colour-coded skeletal body model) and the classification results for working postures associated with an ergonomic risk of increased physical strain (text and sketch overlaid at the top left) are shown.

A video dataset of assembly activities was compiled [L2] to support the implementation and evaluation of the proposed method. Experts in manufacturing and ergonomics provided annotations of assembly actions (310 instances) and of work postures performed by two different workers during 12 task-cycle executions. The performance of the proposed deep-learning-based classifier was evaluated using per-posture-class F1 scores on trimmed videos (Figure 4). The mean performance score, 68% of postures correctly classified, is satisfactory given the challenging conditions for obtaining high-quality estimates of 3D human motion in a real-world manufacturing environment. Future work includes the development of a novel methodology for the joint recognition of assembly actions and unsafe work postures, and the deployment of the FELICE system in the real workplace for evaluation with trained line workers.
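Per-class F1 scores, and an unweighted (macro) mean over classes, can be computed from parallel lists of annotated and predicted posture labels as in the minimal sketch below. Averaging by macro mean is an assumption, since the article does not state how the per-class scores are combined, and the labels in the usage example are hypothetical. scikit-learn's `f1_score` with `average=None` or `average="macro"` computes the same quantities.

```python
from collections import Counter


def per_class_f1(y_true, y_pred):
    """F1 = 2TP / (2TP + FP + FN) for every label seen in y_true or y_pred."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[t] += 1  # true label t was missed
    scores = {}
    for c in labels:
        denom = 2 * tp[c] + fp[c] + fn[c]
        scores[c] = 2 * tp[c] / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)
```

For annotations `["A", "A", "B", "C"]` and predictions `["A", "B", "B", "C"]`, classes A and B each score 2/3 and class C scores 1.0, so the macro mean is 7/9. A macro mean weights rare posture classes equally with frequent ones, which matters when some unsafe postures occur only briefly within a task cycle.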

The authors would like to acknowledge the consortium partner Stellantis – Centro Ricerche FIAT (CRF) / SPW Research & Innovation department in Melfi, Italy, for their valuable feedback on the design, implementation, visual-data acquisition and annotation involved in this study. This work has received funding from the European Union's Horizon 2020 research and innovation programme under grant agreement No. 101017151 (FELICE).

Links:

[L1] https://www.felice-project.eu/

[L2] https://zenodo.org/record/7043787

References:

[1] K. Papoutsakis et al., “Detection of Physical Strain and Fatigue in Industrial Environments Using Visual and Non-Visual Low-Cost Sensors”, in MDPI Technologies Journal, 2022. https://doi.org/10.3390/technologies10020042

[2] Z. Cao et al., “OpenPose: Real-time Multi-Person 2D Pose Estimation Using Part Affinity Fields”, in IEEE Transactions on PAMI, 2021. https://doi.org/10.48550/arXiv.1611.08050

[3] A. Qammaz and A.A. Argyros, “Occlusion-tolerant and personalized 3D human pose estimation in RGB images”, in IEEE ICPR 2020. https://doi.org/10.1109/ICPR48806.2021.9411956

Please contact:

Konstantinos Papoutsakis, ICS-FORTH