by Christos Anagnostopoulos (ISI, ATHENA R.C), Gerasimos Arvanitis (University of Patras), Nikos Piperigkos (ISI, ATHENA R.C), Aris S. Lalos (ISI, ATHENA R.C) and Konstantinos Moustakas (University of Patras)

Robot behaviour affects worker safety, health and comfort. Enhancing operator physical monitoring reduces the risk of accidents and musculoskeletal disorders. Within CPSoSAware, we propose a multi-stereo camera system and novel distributed fusion approaches that are executed on accelerated AI platforms, continuously monitoring the posture of the operators and assisting them to avoid uncomfortable and unsafe postures, based on their anthropometric characteristics and a real-time risk assessment standard methodology.

In manufacturing, the work of the robots is usually performed as a sequence of actions that are already known and pre-defined (it is not safe yet for the robots to improvise). A parameter that can affect the robot's movement is a characteristic of the operator's body (i.e., height). This parameter does not change the trajectory of the robot’s action but can be used to select the best adjustment of the robot's position and configuration in order to optimise the ergonomics assessment of the operator. The adjustment happens only one time at the beginning of the robot's operation since, for security reasons, it is not suitable to have a dynamic adjustment.

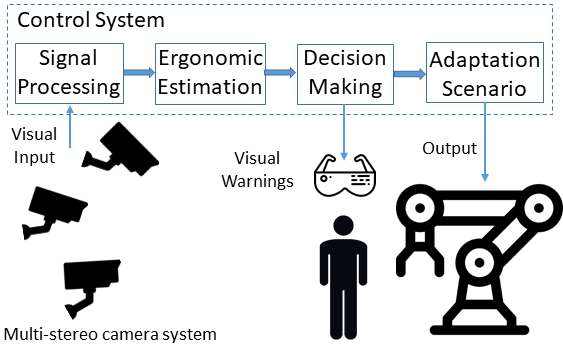

In the proposed architecture (Figure 1), three stereo cameras are utilised in order to cover the most visible area of the working space, so as to increase the possibility to have a good capture of the operator pose, with the most suitable direction, at least from one of the cameras, while the operator is moving in different directions and positions. Each stereo camera is used to extract the 3D pose landmarks of the operator for the height estimation and to calculate the current anthropometric state (based on the RULA score [L1]), in real-time. Each RGB camera of the stereo set is used to monitor the human's actions. A pose estimation algorithm is running to extract the posture 2D landmarks that are used, then for the 3D landmark, estimation is based on a Direct Linear Transform (DTL) triangulation approach [1], [L3].

Figure 1: Proposed concept architecture.

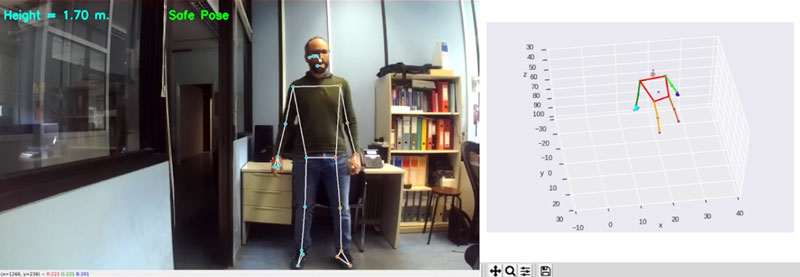

Based on the ergonomic information, a decision-making module finds the right set of actions in order to minimise strain and ergonomic risk factors. Finally, the robot control receives the control information that the wanted set of actions is carried out. A total of three different classes are used based on the operator’s height, leading to three adaptable robot responses (adaptation scenarios) to allow the human to work in an optimal ergonomic state. The algorithmic framework identifies the operator’s class, based on their height, and the corresponding selected scenario configures the cooperating robot's movement parameters to adapt to the environment in a way that is ergonomically most comfortable for the interacting user. We assume the existence of three different classes based on the operator’s height. More specifically, class 1 consists of operators with a height < 175 cm, in class 2 the operator’s height is between 175 and 185 cm, and finally in class 3 the operators are taller than 185 cm. Additionally, a real-time joint angle estimation is performed to estimate the RULA score. Based on this value, the appropriate warning messages are sent to the operators, informing them if their working pose is ergonomically correct or not (Figure 2). Simulations in a virtual environment were also used for further ergonomic analysis in order to optimise the workstation (Figure 3), so as to obtain a trajectory of the robot, in a real environment, as much as possible adapted to the human and additionally to be used as a validation framework since the ground truth information of the 3D landmarks is known.

Figure 2: (a) Height estimation and safe pose identification, (b) Warning for wrong pose.

Figure 3: Examples of the simulator in different configurations (height of the operator), for ergonomic analysis in a safe virtual environment.

For the reliable height estimation of the operator, we have to select the best possible landmarks configuration from the involved cameras. Due to varying camera viewpoints and operator’s movement, it is expected that landmarks, detected from different cameras, will not be quite the same, and nor will their accuracy. To this end, it is required to design a fusion approach that couples the multicamera system in order to provide as output the final group of anthropometric landmarks of the operator. For this task, we create an undirected graph, consisting of cameras and landmarks as nodes and cameras along with detected landmarks as edges. In order to take advantage of this graph topology formulation, we apply the Graph Laplacian Processing technique [3] using as anchor points the average landmark from each camera configuration, for re-estimating landmarks’ positions in an optimal manner.

For the seamless integration of the components and the algorithms described above, we chose the Robotic Operating System (ROS) as our middleware. ROS is an open-source framework that contains a set of tools and libraries for developing distributed applications. Programs run on isolated nodes that can communicate using a publish-subscribe model. We have developed one ROS node for every camera used in the experiment. Each node captures a frame of the camera with a frequency of 10 Hz, extracts and processes the landmarks, and finally publishes them to a relevant ROS topic. The decentralised approach of ROS enables us to connect the cameras to different physical machines, like the Jetson TX2 embedded device. A different node subscribes to the topics published by the cameras and extracts an optimal landmark set that will be used subsequently for the calculation of the posture of the operator.

CPSoSaware is an H2020-funded program, which targets shaping the future of Smart Mobility and Smart Manufacturing by developing autonomic, secure, cognitive cyber-physical system of systems that will support operators and users using artificial intelligence, dynamic reconfiguration and augmented reality technologies. The CPSoSaware solution aims to create self-aware cyber-physical systems of systems (CPSoSs) that detect and respond to physical and cyber environment changes, helping to avoid information overload, and reduce their management complexity by the human administrators in the automotive and manufacturing domains.

Link:

[L1] https://cpsosaware.eu/

[L2] https://ergo-plus.com/rula-assessment-tool-guide/

[L3] https://en.wikipedia.org/wiki/Direct_linear_transformation

Reference:

[1] N. Piperigkos, et al., “Cooperative Multi-Modal Localization in Connected and Autonomous Vehicles”, 2020 IEEE 3rd Connected and Automated Vehicles Symposium (CAVS), 2020, pp. 1-5. https://doi.org/10.1109/CAVS51000.2020.9334558

Please contact:

Aris S. Lalos, Industrial Systems Institute, ATHENA Research Center, Greece