by Paulo Carvalho (CGIE – Centre de gestion informatique de l’éducation - Luxembourg), Patrik Hitzelberger (LIST – Luxembourg Institute of Science and Technology – Luxembourg)

Tabular structures (e.g. Excel, CSV) are often used to represent and store information. Unfortunately, user error can result in the valuable and diverse data stored in such structures being lost or overwritten. This can lead to major problems, depending on how and why the data is intended to be re-used. We propose a visual solution to help users analyse and detect problems in tabular data.

The year 2020 has been difficult worldwide. Many sectors globally – from education and health through to national economies – have been feeling the impacts of the COVID-19 pandemic. Countries are collecting daily data to measure the number of infected people, the affected regions, and other information. This information is precious: it can help us understand the phenomenon and maybe to predict its evolution.

The challenge of understanding and exploiting this data is real. The data has huge potential to help governments to minimise the effects of the pandemic. However, all its value can disappear if we lack efficient systems to assure its quality and to permit its reusability.

Data of this type is often stored in a tabular file: a way to organise information using rows and columns. The data is stored in cells – a cell being the intersection of a row with a column. Each cell may contain a value of a certain type and often, all the cells present in a column are or should be of the same type. Having a tool to detect disparities in data types in a column may be useful.

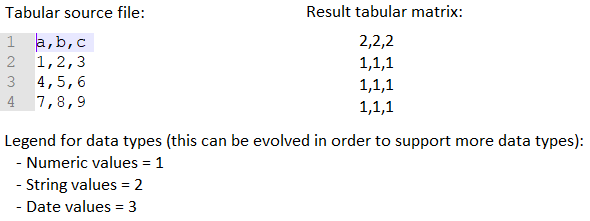

We have been developing a visual solution to help users detect problems in their tabular data. The first step in our approach is to help the user understand the type of data (e.g. numeric, date, string) that is present in a given cell. To do this, each cell of the tabular file is checked. From this analysis, a tabular matrix, the same size of the source file, is build. Each cell of this new tabular matrix has a data type code that depends on its related cell value in the source file (Figure 1).

Figure 1: From analysed tabular source file to tabular data type matrix.

Now that the data type matrix exists, three more steps are applied. These steps are necessary because the efficiency of visual solutions increases along the area to be analysed decreases [1]:

1. Group de rows of the matrix that share the same structure (i.e. rows with the same data type in the same position). The result’s name is “PiledRowsMatrix” (Figure 2 - Step 1).

2. Group the columns of the “PiledRowsMatrix” that share the same structure (i.e. columns with the same data type in the same position). The result’s name is “PiledColumnsMatrix” (Figure 2 - Step 2).

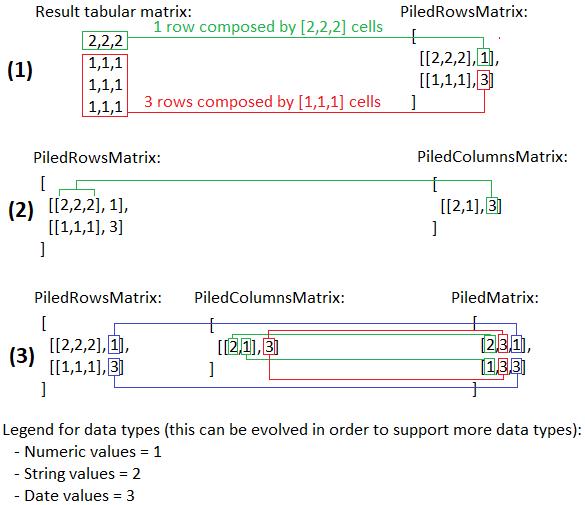

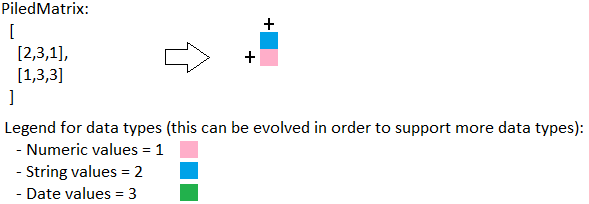

3. From both the “PiledRowsMatrix” and the “PiledColumnsMatrix”, build the “PiledMatrix”. This matrix is a reduced structure of the data types contained in the analysed tabular file. This can be an important tool to detect potential error – e.g. a cell containing the code for a string value when the user is expecting to view the code type for a numeric one (Figure 2 - Step 3).

Figure 2: Flow from the data type structure to the “PiledRows” structure.

What is the point of the PiledMatrix? From a tabular structure of 12 cells comprising three columns and four rows (a data type matrix), a tabular structure with the same information with only one column and two rows (two cells) was obtained (Figure 3).

Figure 3: From the PiledMatrix we can obtain the original structure.

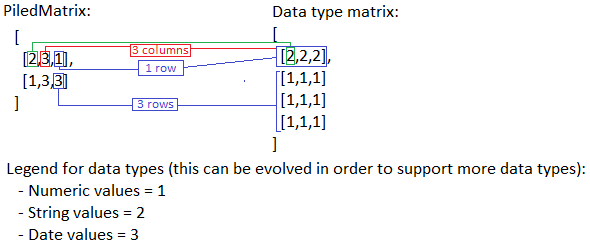

The user needs to be able to view the PiledMatrix in order to understand and analyse the data in an effective way. Colours are essential in the field of visualisation [2]. Each PiledMatrix cell is set to a colour, based on its data type, to efficiently distinguish and identify values. A coloured cells matrix is shown instead of a matrix with numbers (Figure 4).

Figure 4: PiledMatrix – The result matrix: Grouping the PiledRowsMatrix with the PiledColumnsMatrix.

The “plus” is used to expand grouped rows/columns to see the values of each cell. When a row or column is expanded, a “minus” signal appears, to reverse the operation.

Conclusion

This work, which started in 2014, encompasses two major fields: data analysis and data quality/data cleansing. This paper presents the first part of the solution to understand and reuse tabular data, which we do not have many information about its structure and quality. In this time of COVID-19, governments manage huge volumes of data each day, and data quality check tools are vital. The example described here involves a short tabular file with only a few rows and columns, but the potential of such solution is bigger when applied to large files. The gain of space to analyse could be enormous – and this could lead to more effective data analysis. This approach also has a drawback: it works if the data source has a regular structure, i.e., a structure with many rows and/or columns with the same data type in the same index; however, it will not work with irregular structures.

Beyond what we’ve outlined here, we are also working on providing other operations: correct errors, complete missing values and delete rows/columns manipulating the PiledMatrix.

The large amount of information about the pandemic that is being amassed is valuable: it can be used to better understand and manage the disease (and others into the future). This is only possible, however, if the data files are managed correctly – ensuring that high quality and reusable data are available to be analysed using techniques such as machine learning, algorithms and simulation scenarios. This is where our solution can make a difference.

I would like to express my deep gratitude for the help in this work to Patrik Hitzelberger and Prof. Gilles Venturini.

References:

[1] T. von Landesberger, A. Kujper, T. Schreck, et al.: “Visual analysis of large graphs: state‐of‐the‐art and future research challenges”, in: Computer graphics forum. Oxford, UK : Blackwell Publishing Ltd, 2011. p. 1719-1749.

[2] L.E. Holmquist, T. Skog: “Informative art: information visualization in everyday environments”, in Proc. of the 1st int. conference on computer graphics and interactive techniques in Australasia and South East Asia, 2003, p. 229-235.

Please contact:

Paulo Carvalho, CGIE – Centre de gestion informatique de l’éducation - Luxembourg