by Péter Bauer, Antal Hiba, Bálint Daróczy, Márk Melczer, Bálint Vanek (MTA SZTAKI)

The Institute for Computer Science and Control (SZTAKI) of the Hungarian Academy of Sciences (MTA) has been conducting active research in the field of aircraft sense and avoid for six years. The sense (see) and avoid capability is crucial for integrating unmanned aerial vehicles (UAVs) into the national airspace. SZTAKI focuses on developing a monocular camera-based onboard system that processes image data in real time and initiates collision avoidance if required. The capabilities of the system are continuously tested in real flight using small UAV testbeds.

Sense and avoid (S&A) capability is a crucial ability for future unmanned aerial vehicles (UAVs). It is vital for integrating civilian and governmental UAVs into the common airspace. At the highest level of integration, Airborne Sense and Avoid (ABSAA) systems are required to guarantee airspace safety. In this field, the most critical problem is non-cooperative S&A, for which complicated multi-sensor systems are usually developed. However, for small UAVs the size, weight and power consumption of the onboard S&A system should be minimal. Monocular vision-based solutions can be cost- and weight-effective and are therefore especially well suited to small UAVs. These systems essentially measure the position (bearing) and size of the intruder aircraft (A/C) in the camera image, without any information on range or intruder size. This scale ambiguity makes it complicated to decide whether a mid-air collision (MAC) or near mid-air collision (NMAC) is possible. However, [1] points out that the relative distance of an intruder from the own A/C when it crosses the camera focal plane, called the closest point of approach (CPA), characterizes the possibility of collision well.
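To make the scale ambiguity concrete, the minimal sketch below projects an intruder onto an idealized pinhole camera. The function name `project` and the flat-segment intruder model are illustrative assumptions, not part of the SZTAKI system. A 1 m intruder at 50 m and a 10 m intruder at 500 m produce the same bearing and the same angular size, so a single camera cannot tell them apart.

```python
import math


def project(bearing_rad: float, distance_m: float, size_m: float) -> tuple[float, float]:
    """Bearing and angular size of an intruder seen by an ideal pinhole camera.

    Simplified (hypothetical) model: the intruder is a segment of length size_m
    perpendicular to the line of sight at range distance_m.
    """
    angular_size_rad = 2.0 * math.atan(size_m / (2.0 * distance_m))
    # The bearing does not depend on range at all.
    return bearing_rad, angular_size_rad


# A 1 m intruder at 50 m and a 10 m intruder at 500 m, on the same bearing.
small_near = project(math.radians(5.0), 50.0, 1.0)
large_far = project(math.radians(5.0), 500.0, 10.0)

same_image = all(math.isclose(a, b) for a, b in zip(small_near, large_far))
print(same_image)  # True: range and size cannot be separated from one image
```

Only the ratio of size to range is observable, which is why the decision has to rely on relative quantities.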

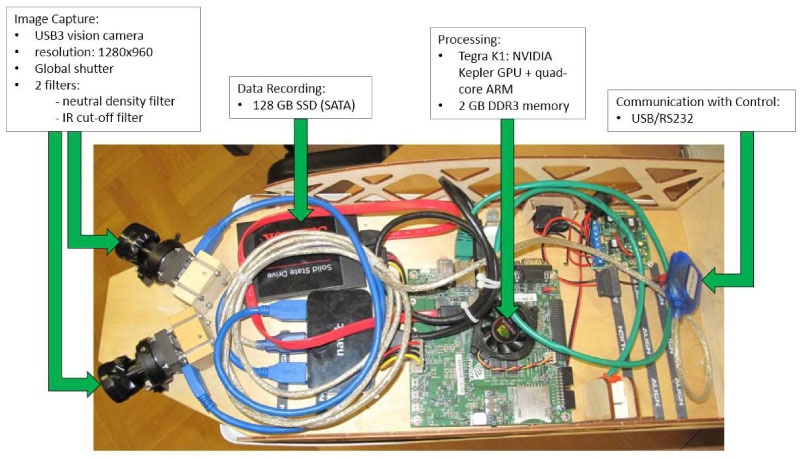

The starting point of our research was to develop a relatively small onboard camera system that performs all calculations onboard and in real time to decide about the possibility of collision. The decision can be sent to the autopilot of the aircraft to initiate an evasive maneuver if required. The developed system, equipped with two HD cameras and a Tegra TK1 GPU board, is shown in Figure 1.

Figure 1: The onboard vision system.

After constructing the hardware system, the goal of the theoretical developments was to estimate the time to closest point of approach (TTCPA, the time remaining until the aircraft reach the closest point), the closest point of approach (CPA), and the direction of the intruder aircraft at CPA from a series of measured parameters of the intruder image in the camera, such as size and position. Here, CPA is understood as the ratio of the smallest distance between the intruder and the own aircraft to the characteristic size of the intruder. As the absolute distance cannot be estimated from monocular images because of the scale ambiguity of perspective projection, this relative ratio should be used to decide about the possibility of collision. In practice, the relative CPA is sufficient for this decision, because one can avoid any intruder coming closer than a given multiple of its characteristic size. The details of the theoretical developments can be found in [2].
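The quantities being estimated can be illustrated with the standard constant-velocity CPA geometry. The sketch below is not the image-based estimation method of [2]; it assumes the relative position and velocity are already known (the helpers `ttcpa_and_cpa` and `relative_cpa` are hypothetical) and shows why the relative CPA is the natural quantity: scaling the whole encounter by a factor k, which a monocular camera cannot detect, leaves both TTCPA and the relative CPA unchanged.

```python
import math

Vec3 = tuple[float, float, float]


def ttcpa_and_cpa(rel_pos: Vec3, rel_vel: Vec3) -> tuple[float, float]:
    """Time to CPA and miss distance, assuming constant relative velocity.

    rel_pos, rel_vel: intruder position and velocity relative to the own aircraft.
    A negative time means the closest point has already been passed.
    """
    vv = sum(v * v for v in rel_vel)
    if vv == 0.0:
        raise ValueError("zero relative velocity: CPA is undefined")
    # Minimize |p + v t|^2 over t: t* = -(p . v) / |v|^2
    t_cpa = -sum(p * v for p, v in zip(rel_pos, rel_vel)) / vv
    closest = [p + v * t_cpa for p, v in zip(rel_pos, rel_vel)]
    return t_cpa, math.sqrt(sum(c * c for c in closest))


def relative_cpa(rel_pos: Vec3, rel_vel: Vec3, intruder_size: float) -> tuple[float, float]:
    """TTCPA and CPA expressed in multiples of the intruder's characteristic size."""
    t_cpa, miss = ttcpa_and_cpa(rel_pos, rel_vel)
    return t_cpa, miss / intruder_size


# Encounter with a 1 m intruder, and the same encounter scaled up 10 times.
base = relative_cpa((200.0, 15.0, 0.0), (-20.0, 0.0, 0.0), 1.0)
scaled = relative_cpa((2000.0, 150.0, 0.0), (-200.0, 0.0, 0.0), 10.0)
print(base, scaled)  # both: TTCPA 10 s, relative CPA 15
```

Because positions and velocities scale together, time is unaffected and the miss distance scales exactly with the intruder size, so their ratio survives the ambiguity.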

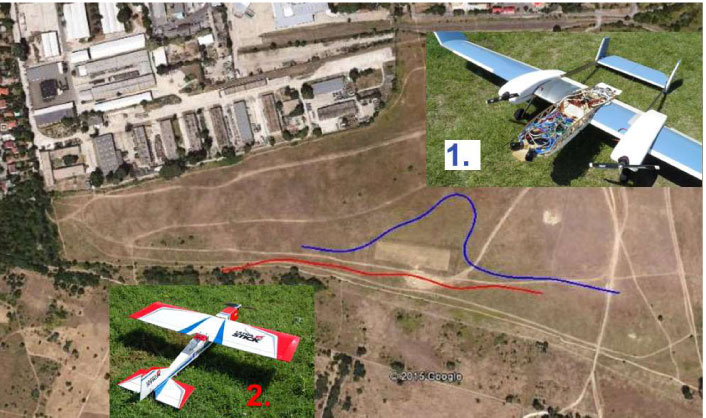

After the theoretical developments and hardware-in-the-loop test runs, real flight demonstrations were conducted in a critical UAV-to-UAV scenario, in which the intruder (aircraft 2 in Figure 2) is a small aircraft with a wingspan of about 1 m. This makes detection and identification extremely difficult and reduces the time available to make a decision. So far, the real flight tests have used collision encounters with parallel aircraft trajectories; in this case there is always a well-defined point at which the aircraft are closest to each other. Several real flight tests have been conducted with a separation of 15 m or 30 m between the trajectories. The 15 m case is the NMAC, where avoidance is required (shown by the blue trajectory in Figure 2); the 30 m case is the clear case. Decision thresholds for TTCPA and CPA are set to distinguish NMAC and clear situations. About 25 NMAC and 25 clear flight runs have been carried out. Currently, with a homogeneous sky background, the system achieves an avoidance success rate of 80-100% in the NMAC case and a false alarm rate of 40-50% in the clear case. A false alarm means an unnecessary avoidance maneuver when the intruder is far away; this relatively high rate reflects that the tuning focused on safety. Ground-based and onboard video recordings of the flight tests can be seen on our YouTube channel [L1].
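With an intruder wingspan of about 1 m, the 15 m and 30 m track separations correspond to relative CPAs of roughly 15 and 30 intruder sizes. The sketch below shows how TTCPA and CPA thresholds could separate the two cases and how the success and false alarm rates are counted. The threshold values `TTCPA_MAX_S` and `REL_CPA_MIN` and the assumed TTCPA of 5 s are hypothetical placeholders, since the tuned values are not given here.

```python
# Hypothetical thresholds; the tuned values used in the flight tests are not stated.
TTCPA_MAX_S = 10.0  # only react to encounters whose CPA is at most this far ahead
REL_CPA_MIN = 20.0  # avoid intruders passing closer than 20 characteristic sizes


def should_avoid(ttcpa_s: float, rel_cpa: float) -> bool:
    """Declare an NMAC when the CPA is ahead, imminent and too close."""
    return 0.0 <= ttcpa_s <= TTCPA_MAX_S and rel_cpa < REL_CPA_MIN


def rates(nmac_avoided: list[bool], clear_avoided: list[bool]) -> tuple[float, float]:
    """Avoidance success rate on NMAC runs and false alarm rate on clear runs."""
    success = sum(nmac_avoided) / len(nmac_avoided)
    false_alarm = sum(clear_avoided) / len(clear_avoided)
    return success, false_alarm


WINGSPAN_M = 1.0  # approximate intruder wingspan from the flight tests
for separation_m in (15.0, 30.0):
    rel = separation_m / WINGSPAN_M  # ideal relative CPA for parallel tracks
    print(separation_m, rel, should_avoid(5.0, rel))  # assumed TTCPA of 5 s
# 15.0 15.0 True
# 30.0 30.0 False
```

With noisy image-based estimates, moving `REL_CPA_MIN` toward the clear case makes missed NMACs less likely at the cost of more false alarms, which is the safety-focused trade-off described above.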

Figure 2: Aircraft photos and trajectories plotted over the airfield.

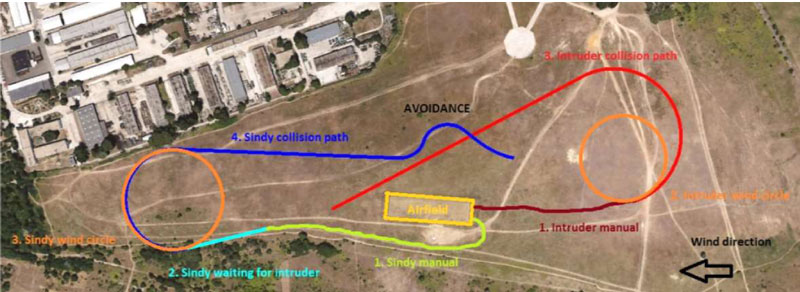

The case of non-parallel trajectories requires a dedicated trajectory design algorithm that guides the aircraft along the required trajectories so that they meet at the same point at the same time. This task poses two challenges. First, the starting points of the aircraft are uncertain, as they take off under manual control and the autopilot is engaged only later. Second, the wind must be measured and the designed tracks corrected accordingly. So far, successful hardware-in-the-loop (HIL) simulations have been carried out, producing collision tracks both with and without wind. Video recordings of these experiments are available on our YouTube channel [L1]. Figure 3 plots illustrative non-parallel trajectories, all based on HIL simulation, showing the manual take-off, the wind-measuring circles, the designed trajectories and the avoidance maneuver.
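The two challenges can be illustrated with textbook kinematics. The sketch below assumes (i) the wind is estimated by averaging GPS ground velocity over one full constant-airspeed circle, where the air-relative velocity cancels out, and (ii) straight tracks to a common meeting point, with the wind triangle giving the achievable ground speed. The function names, the circle-averaging method, the start points and all numbers are illustrative assumptions, not the SZTAKI trajectory design algorithm.

```python
import math

Vec = tuple[float, float]


def estimate_wind(ground_velocities: list[Vec]) -> Vec:
    """Average GPS ground velocity over exactly one constant-airspeed circle.

    Assumes samples are evenly spaced in heading (constant turn rate, fixed
    sample rate, one full turn), so the air-relative velocity averages to zero
    and only the wind vector remains.
    """
    n = len(ground_velocities)
    return (sum(v[0] for v in ground_velocities) / n,
            sum(v[1] for v in ground_velocities) / n)


def ground_speed_along_track(track_rad: float, airspeed: float, wind: Vec) -> float:
    """Wind triangle: ground speed achievable while holding a given track."""
    ux, uy = math.cos(track_rad), math.sin(track_rad)
    w_along = wind[0] * ux + wind[1] * uy
    w_cross = -wind[0] * uy + wind[1] * ux
    if abs(w_cross) >= airspeed:
        raise ValueError("crosswind too strong to hold this track")
    # The crab angle cancels the crosswind; the rest of the airspeed acts along track.
    speed = math.sqrt(airspeed ** 2 - w_cross ** 2) + w_along
    if speed <= 0.0:
        raise ValueError("headwind too strong to make progress along this track")
    return speed


def time_to_point(start: Vec, target: Vec, airspeed: float, wind: Vec) -> float:
    """Flight time along a straight track from start to target."""
    dx, dy = target[0] - start[0], target[1] - start[1]
    gs = ground_speed_along_track(math.atan2(dy, dx), airspeed, wind)
    return math.hypot(dx, dy) / gs


# Simulated wind-measuring circle: 20 m/s airspeed, true wind (3, -2) m/s.
AIRSPEED = 20.0
headings = [2.0 * math.pi * k / 36 for k in range(36)]
samples = [(AIRSPEED * math.cos(h) + 3.0, AIRSPEED * math.sin(h) - 2.0) for h in headings]
wind = estimate_wind(samples)

# Hypothetical autopilot start points and a common meeting point (metres).
meet = (1000.0, 0.0)
t_a = time_to_point((0.0, 0.0), meet, AIRSPEED, wind)
t_b = time_to_point((500.0, -800.0), meet, AIRSPEED, wind)
# The aircraft with the shorter flight time must delay its start by the difference.
print(wind, t_a, t_b, abs(t_a - t_b))
```

Because the start points are only known once each autopilot is engaged, a scheme like this has to be re-evaluated at that moment, which is also why the aircraft need to exchange their starting points.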

Figure 3: Non-parallel collision trajectory design.

The future goal is to test the non-parallel collision trajectories in real flight. This first requires implementing safe and reliable communication between the aircraft, as they have to exchange data (for example, the autopilot starting points and trajectory parameters).

Link:

[L1] https://www.youtube.com/channel/UCQMpnOuOMCiodDKQw8_hf5A

References:

[1] S. D. B: “Reactive Image-based Collision Avoidance System for Unmanned Aircraft Systems”, Master’s thesis, Australian Research Centre for Aerospace Automation, May 2011.

[2] P. Bauer, A. Hiba, J. Bokor: “Monocular Image-based Intruder Direction Estimation at Closest Point of Approach”, in Proc. of ICUAS’17, Miami, FL, USA, June 2017.

Please contact:

Péter Bauer, Senior Research Fellow, Project Coordinator

MTA SZTAKI,