by Josiane Zerubia (Inria), Sebastiano B. Serpico and Gabriele Moser (University of Genoa)

In a joint project between Inria and the University of Genoa, we have designed novel multiresolution image processing approaches that exploit multiple satellite image sources to classify areas that have suffered the devastating impacts of earthquakes or floods.

Natural disasters are among the most critical disruptive factors in modern societies. Most countries are exposed to one or more natural disaster risks, the most frequent and consequential being earthquakes and floods. Hence, civil protection agencies invest substantial public funds in the creation and maintenance of satellite imaging missions that enable timely identification, assessment and appropriate responses to minimise the consequences of these events. Computer vision algorithms extract information about the Earth’s surface from this imagery and help to assess the ground effects and damage caused by natural disasters, which in turn shortens response times. Before an event, remote sensing allows elements and areas at risk within a monitored area to be identified and their vulnerability evaluated. After an event, this prior information can be combined with the results of multi-temporal remote sensing image analysis and any further ancillary information available to estimate damage.

Efficient fusion of heterogeneous Earth observation data is crucial to developing accurate situation assessments. One typical scenario is the availability of multiple optical images of the same area (from the same or different satellite missions) that are characterised by different spatial resolutions and spectral representations. These are usually made available to governmental agencies shortly after a natural disaster. In addition to these classical optical images, radar imagery acquired with synthetic aperture radar technology, which ensures high spatial resolution, may be available [1]. Radar imagery has rapidly grown in popularity and accessibility in the last decade owing to its inherent advantages, which include robustness to weather and illumination conditions.

We use multiple satellite image sources to classify the areas that have suffered devastating impacts of earthquakes or floods. We have designed several image processing approaches that target classification applications on multi-resolution, multi-sensor, and multi-temporal imagery. The core ingredient of these approaches is the use of hierarchical Markov models, which originate from Markov random fields formulated on a hierarchical tree structure [2]. They allow several key concepts to be incorporated: local dependencies, robustness to resolution, and Markovian causality with respect to scale.
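As a point of reference, causality with respect to scale is commonly expressed in the quad-tree literature (e.g., [2]) through a factorisation of the following kind. The notation is introduced here for illustration only and is an assumption, not the project's own: $x_s$ is the class label at tree site $s$, $s^-$ is the parent of $s$ at the next coarser scale, $r$ is the root, $y_s$ is the observation at site $s$, and $S_{\mathrm{obs}}$ is the set of sites at which an observation is available.

$$
p(x, y) = p(x_r) \prod_{s \neq r} p(x_s \mid x_{s^-}) \prod_{s \in S_{\mathrm{obs}}} p(y_s \mid x_s)
$$

In this basic form, each label depends only on its parent, so information flows from coarse to fine scales along the tree. Models that add further dependencies, such as spatial neighbourhoods within a scale, extend this factorisation.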

A major benefit of such techniques is that they allow seamless fusion of multiple image sources into a single classification process. In particular, when two (or more) input images are acquired at distinct spatial resolutions, a quad-tree topology allows these images to be integrated at different levels of the tree, with wavelet transforms applied where needed so that the data fit the quad-tree structure. Once the hierarchical tree has been populated with the input data, classification is performed with iterative Bayesian algorithms in accordance with the hierarchical Markov model. From a methodological viewpoint, the proposed approaches perform supervised Bayesian classification on a quad-tree topology and combine class-conditional statistical modelling for pixel-wise information extraction with hidden Markov modelling for contextual information extraction [3]. Multi-sensor data can be incorporated into this framework through advanced multivariate probabilistic approaches, such as copula functions, or by defining case-specific topologies composed of multiple trees, each associated with the data from a different input sensor and related to the others by suitable conditional probabilistic models.
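To make the idea of Bayesian inference on a quad-tree more concrete, the following is a minimal sketch of a generic two-pass (bottom-up, then top-down) sum-product computation of posterior class probabilities. It is not the algorithm of [3]: it assumes a single parent-to-child transition matrix shared across scales, strictly positive probabilities, and per-pixel class likelihoods that have already been computed at each level. The function name `quadtree_marginals` and all parameter names are hypothetical.

```python
import numpy as np

def quadtree_marginals(liks, trans, prior):
    """Posterior class marginals at every node of a quad-tree (illustrative sketch).

    liks  : list of arrays, coarsest level first; level l has shape (H_l, W_l, K)
            with H_{l+1} = 2 * H_l and W_{l+1} = 2 * W_l. Entry [i, j, k] is the
            likelihood of the data at that pixel given class k (use ones for a
            level without data).
    trans : (K, K) array, trans[p, c] = P(child class c | parent class p),
            assumed strictly positive.
    prior : (K,) array, class prior at the coarsest level.
    """
    n = len(liks)
    beta = [None] * n  # bottom-up beliefs (data in the subtree below each node)
    msg = [None] * n   # msg[l]: message sent by each level-l node to its parent

    # Bottom-up pass: leaves first, then merge the four children of each node.
    beta[-1] = liks[-1] / liks[-1].sum(-1, keepdims=True)
    for l in range(n - 2, -1, -1):
        # Message to parent: sum over child classes c of trans[p, c] * beta_c(c).
        msg[l + 1] = beta[l + 1] @ trans.T
        h, w, k = liks[l].shape
        # Multiply the messages of the 2x2 block of children under each parent.
        children = msg[l + 1].reshape(h, 2, w, 2, k).prod(axis=(1, 3))
        b = liks[l] * children
        beta[l] = b / b.sum(-1, keepdims=True)

    # Top-down pass: propagate posteriors from the coarsest to the finest level.
    post = [None] * n
    p = prior * beta[0]
    post[0] = p / p.sum(-1, keepdims=True)
    for l in range(1, n):
        # Copy each parent posterior onto its four children.
        up = np.repeat(np.repeat(post[l - 1], 2, axis=0), 2, axis=1)
        # Remove the child's own contribution before sending information down.
        cavity = up / msg[l]
        p = beta[l] * (cavity @ trans)
        post[l] = p / p.sum(-1, keepdims=True)
    return post

# Usage with synthetic likelihoods: a 2x2 coarse image and a 4x4 fine image.
rng = np.random.default_rng(42)
n_classes = 3
liks = [rng.random((2, 2, n_classes)) + 0.1, rng.random((4, 4, n_classes)) + 0.1]
trans = np.full((n_classes, n_classes), 0.1) + 0.7 * np.eye(n_classes)  # rows sum to 1
prior = np.full(n_classes, 1.0 / n_classes)
posteriors = quadtree_marginals(liks, trans, prior)
fine_map = posteriors[-1].argmax(axis=-1)  # class label per fine-resolution pixel
```

In this sketch, images of different resolutions simply populate different levels of the tree, and the label map at the finest level is obtained by taking the most probable class at each pixel. In practice the likelihoods would come from class-conditional statistical models fitted to training data.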

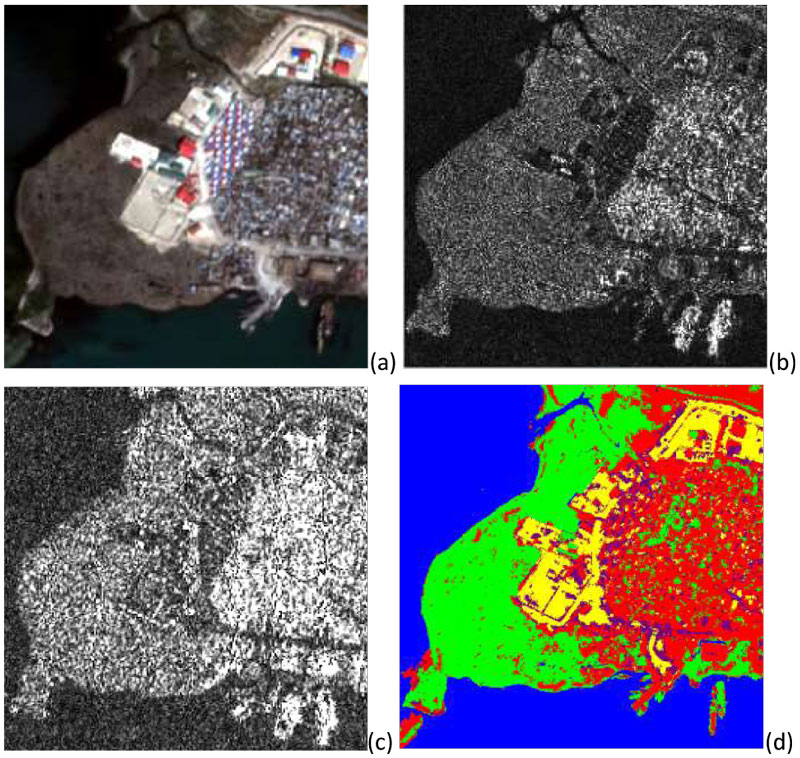

Figure 1: Port-au-Prince, Haiti, example of multi-resolution and multi-sensor fusion of remote sensing imagery: (a) Pléiades optical image at 0.5 m resolution (Pléiades, © CNES distribution Airbus DS, 2011); (b) COSMO-SkyMed radar image at 1 m pixel spacing (© ASI, 2011); (c) RADARSAT-2 radar image at 1.56 m pixel spacing (© CSA, 2011); and (d) multi-sensor and multi-resolution land cover classification result amongst the urban (red), water (blue), vegetation (green), bare soil (yellow), and containers (pink) classes.

Figure 1 shows an example from Port-au-Prince, Haiti, which was affected by a major earthquake in 2010. Here, optical imagery from the Pléiades mission of the French Space Agency (CNES; Figure 1(a)) is exploited jointly with multi-frequency radar images from the COSMO-SkyMed (X-band; Figure 1(b)) and RADARSAT-2 (C-band; Figure 1(c)) missions of the Italian and Canadian Space Agencies (ASI and CSA), respectively. Discriminating the considered land cover classes is especially challenging because of the very high spatial resolutions involved and the similar spectral responses of some of the classes (e.g., ‘urban’ and ‘containers’). Nevertheless, the developed approaches discriminate the classes quite accurately when all three input sources are used (Figure 1(d)), with substantial improvements over the results obtained from the individual input images or from subsets of the available sources. These results indicate that advanced image modelling approaches can benefit from complex multi-sensor and multi-resolution data to optimise classification results, and further confirm that these approaches are mature enough not only for laboratory experiments but also for real-world scenarios associated with disaster events.

This research has been conducted together with a PhD student co-tutored in cooperation between the University of Genoa and the Université Côte d’Azur, Inria (Dr Ihsen Hedhli) and a post-doc from the University of Genoa in collaboration with Inria (Dr Vladimir Krylov), and has been partly funded by CNES and Inria. Further research on these topics is expected to be conducted within the Idex UCA_Jedi (Academy 3) of the Université Côte d’Azur in collaboration with the University of Genoa.

References:

[1] V. Krylov, G. Moser, S. B. Serpico, J. Zerubia: “On the method of logarithmic cumulants for parametric probability density function estimation”, IEEE Trans. Image Process., 22(10):3791-3806, 2013.

[2] J.-M. Laferté, P. Pérez, F. Heitz: “Discrete Markov image modeling and inference on the quadtree”, IEEE Trans. Image Process., 9(3):390-404, 2000.

[3] I. Hedhli, G. Moser, J. Zerubia, S. B. Serpico: “A new cascade model for the hierarchical joint classification of multitemporal and multiresolution remote sensing data”, IEEE Trans. Geosci. Remote Sensing, 54(11):6333-6348, 2016.

Please contact:

Josiane Zerubia

Université Côte d’Azur, Inria, France

Gabriele Moser

University of Genoa, Italy