by Valérie Maquil, Lou Schwartz and Adrien Coppens (Luxembourg Institute of Science and Technology)

As part of the ReSurf project running at the Luxembourg Institute of Science and Technology, we are working on the next generation of remote collaboration systems using wall-sized displays. The project aims to study the collaborative behaviour of team members interacting with such displays to solve decision-making problems. Building on our observations, we will develop and assess visual indicators that better support awareness of collaborators’ activities when moving to a mixed-presence scenario involving two distant wall-sized displays.

The 21st century is facing highly complex societal and intellectual challenges that can only be solved when professionals with distinct abilities and resources join forces and collaborate. Interactive wall-sized displays offer substantial benefits for information visualisation and visual data analysis. Besides allowing for a better presentation of large amounts of data, they support collocated collaboration, as multiple users can access and view content at the same time and easily follow each other’s actions. Such an “up-to-the-moment understanding of another person’s interaction with a shared space” is called workspace awareness [1].

However, in many situations (e.g. for health reasons or because of geographical barriers), face-to-face collaboration is not feasible and needs to be replaced by remote collaboration. Conventional tools used to support such collaboration strongly limit non-verbal awareness information, leading to communication difficulties and additional effort to stay engaged. This lack of awareness is particularly relevant in the context of decision-making at interactive wall displays, where collaborators naturally make use of a large number of body movements and hand gestures such as pointing [2].

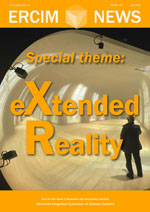

Figure 1: A snapshot of the scenario developed as part of the ReSurf project to study and improve collaborative decision-making using wall-sized displays.

To better mediate awareness information and facilitate communication, previous work suggests adding visual cues to the common workspace or the live video stream. While such visual cues have been proposed for smaller workspaces like tabletops, they have not been investigated in the context of remote collaboration across two or more wall displays. This gap is the motivation for the ReSurf project [L1], supported by the FNR.

To study, enhance and evaluate such cues in our context, we developed a decision-making scenario (snapshot shown in Figure 1) involving different types of data on a wall-sized display. In this scenario, four participants, each with a distinct role, collaboratively solve a stock management problem during a crisis. The problem is divided into four subtasks: i) estimating the stock needs, ii) selecting the type of equipment for which fixing the shortage is most urgent, iii) selecting an offer from different suppliers that may fulfil that need, and iv) selecting a delivery method. For each subtask, participants are provided with different types of data that they need to manipulate in order to find an appropriate solution. These include slider values, graph visualisations, tables, maps and news articles. We further constrain the participants through private information that each of them receives based on their assigned role (e.g. “as the head of the intensive care unit, I need the missing item delivered within eight days”).

Our first step was to run a collocated user study in which participants went through that scenario while interacting in the same room, using the same wall-sized display. This allowed us to analyse their behaviour and identify the gestures they naturally and frequently relied on.

We then studied the main issues observed during remote collaboration with a simple (baseline) setup involving only an audio-video link between the two sites, similar to what can nowadays be expected from videoconferencing systems. We organised a focus group in which participants went through the decision-making scenario with this baseline setup, so that they could identify issues resulting from relying solely on the audio-video link. Participants then discussed the issues they had noticed and envisioned cues that could address them.

We organised their ideas for awareness cues into three categories: environmental, action and attention cues.

- Environmental awareness cues relate to all elements that can give information about the environment of the distant group, such as the configuration of their wall-sized display and which participants are present in the distant space.

- Action awareness cues concern the information that explains an action, such as the nature of the action and the artefacts it affects, but also who performed it and from/to which location.

- Attention awareness cues give information about the attention of the participants, i.e. where they are looking.
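
As a purely illustrative aid, the three categories above could be represented as a small data model. The following is a minimal sketch and not the ReSurf implementation: the names `CueCategory`, `AwarenessCue` and `cues_for`, as well as all field names and the normalised coordinate convention, are assumptions introduced here for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class CueCategory(Enum):
    """The three categories of awareness cues described in the text."""
    ENVIRONMENTAL = "environmental"  # information about the distant environment
    ACTION = "action"                # what was done, on which artefact, by whom
    ATTENTION = "attention"          # where a participant is looking


@dataclass(frozen=True)
class AwarenessCue:
    """Hypothetical record for one cue sent between two wall-display sites."""
    category: CueCategory
    site: str                                        # originating site (assumed label)
    participant: Optional[str] = None                # who the cue is about, if anyone
    description: str = ""                            # e.g. the nature of an action
    artefact: Optional[str] = None                   # affected artefact, if any
    location: Optional[Tuple[float, float]] = None   # assumed normalised wall coordinates


def cues_for(cues: List[AwarenessCue], category: CueCategory) -> List[AwarenessCue]:
    """Return only the cues belonging to the given category."""
    return [cue for cue in cues if cue.category is category]
```

Under these assumptions, an action cue would carry the participant, the affected artefact and a location, whereas an attention cue would mainly carry a participant and a gaze location on the wall.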

The next step in the project will be to design, implement and evaluate some of these workspace awareness cues. We will run further user studies with different groups to understand how they use such cues and whether, and how, the cues benefit their collaboration (e.g. in terms of correctness, efficiency or workload).

This project will ultimately generate empirical knowledge on the optimal design of awareness support for remotely connected wall-sized displays. Moreover, it will contribute to the next generation of remote decision-making tools, in which people can collaborate smoothly and enjoy an experience that is as close as possible to a collocated situation, with an appropriate balance between displaying more information and avoiding distractions.

Links:

[L1] https://www.list.lu/en/informatics/project/resurf/

References:

[1] C. Gutwin, S.A. Greenberg, “Descriptive framework of workspace awareness for real-time groupware,” Computer Supported Cooperative Work (CSCW), vol. 11, pp. 411–446, 2002. https://doi.org/10.1023/A:1021271517844

[2] V. Maquil, et al., “Establishing awareness through pointing gestures during collaborative decision-making in a wall-display environment,” in Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, pp. 1–7, 2023.

Please contact:

Valérie Maquil, Luxembourg Institute of Science and Technology, Luxembourg