by Marco Di Benedetto, Giulio Federico and Giuseppe Amato (CNR-ISTI)

The power of Generative AI is employed for the detailed reconstruction of large environments, overcoming the short range and sparsity of common acquisition techniques with spatio-temporal 3D diffusion models.

In the rapidly evolving landscape of Extended Reality (XR), the Social and hUman ceNtered (SUN) XR project [L1] emerges as a pioneering endeavour to redefine intuitive 3D data acquisition solutions, promising digitally immersive experiences with unprecedented realism. Spearheaded by a collaborative effort involving esteemed institutions across Europe, SUN aims to set new benchmarks in the creation of physically convincing digital 3D models while facilitating seamless integration with real-world objects.

The SUN XR project boasts a consortium of leading research institutions including the Italian National Research Council (CNR), University of Amsterdam, ETH Zurich, University College London, and the University of Rome Tor Vergata. Taking place across various locations in Europe, this ambitious initiative represents a concerted effort to address the pressing need for advanced 3D data acquisition techniques in the realm of XR.

At its core, the research endeavours of SUN XR are fuelled by a quest to bridge the gap between virtual and physical realities, ushering in a new era of immersive experiences that blur the lines between the two realms. The project commenced its ground-breaking work with a comprehensive vision to revolutionise XR applications through cutting-edge advancements in 3D data acquisition.

Central to the mission of the SUN XR project is the development of intuitive solutions that enable the acquisition of 3D models with unparalleled fidelity and realism. By incorporating crucial physical properties information and devising automated mechanisms for seamless integration with real-world objects, SUN seeks to empower creators with powerful tools to unleash their creativity in XR content creation.

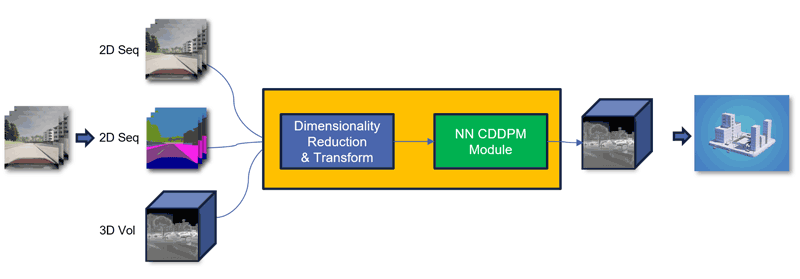

The techniques employed within the SUN XR project represent a convergence of state-of-the-art methodologies from the computer vision, machine learning, and sensor fusion domains. In this context, the Artificial Intelligence for Media and Humanities (AIMH) laboratory of CNR-ISTI [L2] proposes a 3D diffusion technique [1] that will integrate into an XR session a 3D reconstruction of a real environment, going beyond the limitations of traditional depth cameras and lidars. By harnessing the temporal information encoded in sequences of environmental images and leveraging spatial cues extracted from initial sparse reconstructions (e.g. a 3D point cloud of features extracted during a SLAM run), this approach aims to overcome the short acquisition range of depth cameras while mitigating the sparsity inherent in long-range lidar data. Fusing temporal and spatial information will lead to the definition of a modular 3D denoising diffusion probabilistic model, able to generate missing parts of the scene by hallucinating data previously learned from similar scenarios (see Figure 1).

Figure 1: Data pipeline of our spatio-temporal diffusion architecture. Inputs represent an ordered sequence of colour images of the environment, along with a sparse reconstruction of photogrammetric data. Our module will fuse incoming data and hallucinate a 3D representation of the environment with a fine-tuned 3D diffusion generation pipeline.
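To make the notion of a denoising diffusion probabilistic model more concrete, the following minimal sketch shows only the forward (noising) process of [1] applied to a voxel volume. It is an illustration under stated assumptions, not the SUN XR architecture: the volume size, the random placeholder data and the function names are hypothetical, and the linear noise schedule is the one reported in [1]. A trained network would learn to reverse this process, and the conditioning on images and sparse points described above is not shown.

```python
import numpy as np

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule as reported in [1] (assumed here, not the project's choice)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_diffuse(x0, t, alpha_bar, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(alpha_bar_t) * x_0, (1 - alpha_bar_t) * I)."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise

betas = linear_beta_schedule()
alpha_bar = np.cumprod(1.0 - betas)  # cumulative product of (1 - beta_s) up to step t

rng = np.random.default_rng(0)
# Hypothetical 16^3 volume standing in for a 3D scene representation.
x0 = rng.uniform(-1.0, 1.0, size=(16, 16, 16))

x_early, noise_early = forward_diffuse(x0, 10, alpha_bar, rng)   # barely perturbed
x_late, noise_late = forward_diffuse(x0, 999, alpha_bar, rng)    # close to pure noise
```

At small `t` the sample stays close to the original volume, while at the last step almost all of the original signal has been replaced by Gaussian noise. Generation runs the learned reverse process starting from such noise.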

To this end, we used a third-party driving simulation engine designed to replicate real-world scenarios with high fidelity. The engine is capable of generating 3D colour images that capture the nuances of light, texture, and perspective, giving virtual environments a convincing sense of realism. These images served as the foundation upon which our 3D diffusion model would be trained, providing the rich visual data essential for understanding the intricacies of the virtual world.

Complementing the visual data were Signed Distance Field (SDF) volumes [2], a powerful representation of spatial information that formed the backbone of our 3D mesh generation process. By encoding the distance from each point in space to the nearest surface, these volumes facilitated the creation of detailed, geometrically accurate mesh models, ensuring that our simulations faithfully captured the physical attributes of the environments they sought to emulate. The final dataset will soon be made available to the general public.
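As a small illustration of what an SDF volume stores, the sketch below samples the signed distance to a sphere on a regular voxel grid. The sphere, the grid resolution, the function names and the sign convention (negative inside, positive outside) are assumptions made for this example and do not describe the project's dataset.

```python
import numpy as np

def sphere_sdf(points, center, radius):
    """Signed distance to a sphere: negative inside, zero on the surface, positive outside."""
    return np.linalg.norm(points - center, axis=-1) - radius

def sample_sdf_volume(resolution=32, extent=1.0, radius=0.5):
    """Evaluate the SDF on a resolution^3 grid spanning [-extent, extent]^3."""
    axis = np.linspace(-extent, extent, resolution)
    grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1)
    return sphere_sdf(grid, np.zeros(3), radius)

volume = sample_sdf_volume()
# Fraction of voxels lying inside the surface (negative distance).
inside_fraction = float(np.mean(volume < 0.0))
```

The surface is the zero level set of the volume, so a mesh can be recovered by extracting that isosurface, for instance with a marching cubes implementation.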

The innovative technique presented heralds a transformative leap in the realm of XR experiences, promising to revolutionise the way we interact with virtual environments. At its core lies the capability to reconstruct the surrounding environment in near real time, leveraging a fusion of cutting-edge technologies to capture intricate details and seamlessly hallucinate missing elements. The end result is a virtual structure of high fidelity and completeness, meticulously crafted to mirror its real-world counterpart with as much accuracy as possible.

Looking ahead, the future activities of the SUN XR project are poised to build upon its early successes, with a roadmap that encompasses further refinement of 3D data acquisition techniques, extensive validation through real-world deployment scenarios, and strategic collaborations with industry stakeholders to drive technology transfer and commercialisation.

In conclusion, the SUN XR project stands at the forefront of innovation in 3D data acquisition for XR, seeking to redefine the boundaries of digital immersion and pave the way for a future where virtual experiences rival, and perhaps even surpass, their physical counterparts. With a multidisciplinary approach, unwavering commitment, and collaborative spirit, SUN XR aims to shape the XR landscape for years to come.

Links:

[L1] https://www.sun-xr-project.eu/

[L2] https://aimh.isti.cnr.it

References:

[1] J. Ho, A. Jain, and P. Abbeel, “Denoising diffusion probabilistic models,” arXiv, 2020.

[2] J. Shim, C. Kang, and K. Joo, “Diffusion-based signed distance fields for 3D shape generation,” in Proc. of CVPR, 2023.

Please contact:

Marco Di Benedetto, CNR-ISTI, Italy