by Simone Gallo, Sara Maenza, Andrea Mattioli, and Fabio Paternò (CNR–ISTI)

We present an approach to supporting human control in green smart homes. It is based on a set of tools that allow end users to create automations through innovative interaction modalities, execute them, and receive explanations of the resulting behaviours and of their impact on user goals, in particular goals connected to sustainability principles.

The increasing availability of sensors, connected objects and intelligent services has made it possible to obtain smart homes able to support automations involving the dynamic composition of objects, services and devices. Since each household has specific needs and preferences that can also vary over time, it is crucial to empower its users to directly control such automations, even when people have no programming experience.

Automations can support goals aligned with circular economy principles, like energy saving and waste recycling. We have designed and prototyped various tools that can be deployed in real-home environments. These tools include meta-design tools for creating and controlling home automations, innovative control methods using conversational agents and augmented reality, and tools for simulations and "what if" analyses to evaluate potential impacts on aspects relevant to the circular economy.

We have also developed AI-based techniques that provide recommendations to users, indicating elements judged appropriate in specific situations to complete the automations being created.

Specific techniques have also been developed to highlight potential errors detected while users specify rules or issues existing in their current rule sets (e.g. conflicts between rules): in particular, clear and understandable explanations are provided to users, describing the problem and why a specific modification would be needed to resolve it.

The Approach

Smart homes feature connected objects and sensors coordinated through automations. Users typically configure these automations using trigger-action programming (TAP), which follows an "if/when...then" structure. Despite this simple structure, users often find it challenging to understand and manage the behaviour of these environments, particularly when multiple automations are active simultaneously.
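To make the "if/when...then" structure concrete, the following is a minimal sketch of how a TAP rule could be represented. The class and field names (`Trigger`, `Action`, `Rule`, `describe`) and the example values are illustrative assumptions, not ExplainTAP's actual data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trigger:
    variable: str   # hypothetical context variable, e.g. "living_room.temperature"
    operator: str   # comparison symbol such as "<", ">" or "=="
    value: float


@dataclass(frozen=True)
class Action:
    target: str     # hypothetical object name, e.g. "living_room.heater"
    state: str      # state the object is set to, e.g. "on"


@dataclass(frozen=True)
class Rule:
    name: str
    trigger: Trigger
    action: Action

    def describe(self) -> str:
        """Render the rule in the familiar WHEN ... THEN ... form."""
        t, a = self.trigger, self.action
        return f"WHEN {t.variable} {t.operator} {t.value} THEN set {a.target} to {a.state}"


heating = Rule(
    "heating",
    Trigger("living_room.temperature", "<", 19.0),
    Action("living_room.heater", "on"),
)
print(heating.describe())
```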

We propose a novel solution, ExplainTAP, whose objective is to support users in detecting and understanding possible problems among TAP rules, such as conflicts and unexpected direct and indirect rule activations. ExplainTAP provides users with a tool to simulate and analyse the context in which rules could activate, and with explanations that make the behaviour of the smart space more transparent [2]. It also considers the user's possible long-term goals, identifies inconsistencies between automations and these goals, and proposes improvements to the automations. The tool is integrated into a larger platform that includes a digital twin allowing users to view and monitor the home's energy consumption in real time [1].

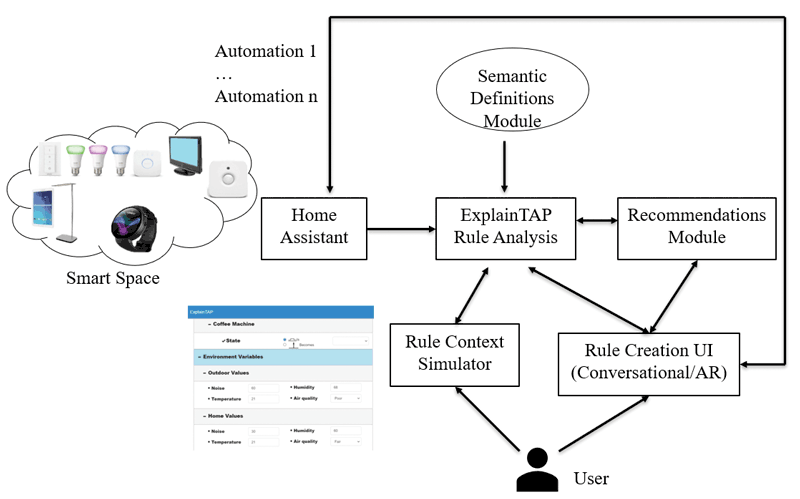

ExplainTAP is connected with Home Assistant (see Figure 1), which is the most widely used open-source tool for managing automations, involving a rich and active community of amateurs [3]. In this way, ExplainTAP can receive real-time information on the state of the environment and of the automations currently active in it.

Figure 1: The proposed platform.
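As an illustration of how state information could be read from Home Assistant, the sketch below uses Home Assistant's REST API (`GET /api/states` with a long-lived access token sent as a bearer token). The article does not say which integration mechanism ExplainTAP uses, so this is only an assumption; the helper names, the base URL and the token are hypothetical.

```python
import json
import urllib.request


def build_states_request(base_url: str, token: str) -> urllib.request.Request:
    """Prepare a GET request for the /api/states endpoint."""
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/states",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def states_to_context(states: list[dict]) -> dict[str, str]:
    """Flatten the list of state objects into an entity_id -> state mapping."""
    return {s["entity_id"]: s["state"] for s in states}


def fetch_context(base_url: str, token: str) -> dict[str, str]:
    """Fetch the current states from a running Home Assistant instance."""
    request = build_states_request(base_url, token)
    with urllib.request.urlopen(request, timeout=10) as response:
        return states_to_context(json.load(response))


# Offline example with a payload shaped like the API response
# (entity names and values are made up for illustration).
sample = [
    {"entity_id": "sensor.living_room_temperature", "state": "17.5", "attributes": {}},
    {"entity_id": "switch.heater", "state": "off", "attributes": {}},
]
context = states_to_context(sample)
```

Keeping the parsing step separate from the network call makes it possible to feed the same analysis code with either live data or values entered by the user for a simulation.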

The ExplainTAP Rule Analysis backend exposes features to make the automated environment more transparent to the user:

- Rule Context Simulator: It builds a logical organisation of the contextual aspects of the spaces where the platform is deployed (i.e. which objects are in which room, the services they expose, and the values of environmental variables such as temperature, humidity or air quality). Then, it checks which triggers are satisfied by the specified context data (detected by sensors or entered by the user).

- Direct/indirect Rule Chain Detection: Starting from a rule’s activation, it checks whether the changes produced by its actions lead to new activations. A second function uses the semantic definitions module to check whether the activation of a rule may indirectly cause another rule to activate. For instance, a rule that automates air circulation in the house may unexpectedly activate another rule that starts the heating when the temperature drops below a threshold, leading to energy waste.

- Why/Why Not Analysis: It records which triggers are or are not satisfied in the given context, together with the specific pieces of context that cause each activation or non-activation, and generates a corresponding textual explanation.

- Conflict Analysis: It detects rules whose actions operate on the same object, or on objects linked by a containment relation, checks whether the values set by those actions are incompatible, and identifies periods when both rules can be active at the same time. When such a conflict is found, it notifies the user.

- Goal-Based Rule Analysis: It inspects the automations and detects whether they can conflict with the user-selected goal. It also provides suggestions for modifying the automations according to these goals. For instance, if the energy-saving goal is selected, ExplainTAP can suggest an alternative action for an automation with the same effect but with less impact on energy consumption.
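The first analyses in the list above can be sketched in a few lines: evaluating triggers against a simulated context, producing a why/why-not explanation, and flagging conflicting rules. This is a simplified illustration under stated assumptions, not the ExplainTAP implementation: rules have a single numeric trigger, a conflict is reduced to "both rules fire in the same context and set the same object to different states", and containment relations and time periods are ignored. All names and values are hypothetical.

```python
import operator
from dataclasses import dataclass

OPS = {"<": operator.lt, ">": operator.gt, "==": operator.eq}


@dataclass(frozen=True)
class Rule:
    name: str
    variable: str     # trigger: context variable being watched
    op: str           # trigger: key of OPS
    threshold: float  # trigger: value compared against
    target: str       # action: object acted upon
    state: str        # action: state the object is set to


def explain(rule: Rule, context: dict[str, float]) -> tuple[bool, str]:
    """Return whether the rule fires in this context, with a textual reason."""
    if rule.variable not in context:
        return False, f"'{rule.name}' does not fire: no value for {rule.variable}."
    actual = context[rule.variable]
    fires = OPS[rule.op](actual, rule.threshold)
    verdict = "fires" if fires else "does not fire"
    relation = "is" if fires else "is not"
    return fires, (
        f"'{rule.name}' {verdict}: {rule.variable} = {actual} "
        f"{relation} {rule.op} {rule.threshold}."
    )


def conflicts(rules: list[Rule], context: dict[str, float]) -> list[tuple[str, str]]:
    """Pairs of rules that fire together and set one object to different states."""
    active = [r for r in rules if explain(r, context)[0]]
    return [
        (a.name, b.name)
        for i, a in enumerate(active)
        for b in active[i + 1:]
        if a.target == b.target and a.state != b.state
    ]


rules = [
    Rule("heat", "temperature", "<", 19.0, "thermostat", "heat"),
    Rule("air", "co2", ">", 1000.0, "thermostat", "off"),
    Rule("cool", "temperature", ">", 26.0, "thermostat", "cool"),
]
context = {"temperature": 17.5, "co2": 1200.0}  # simulated values

for rule in rules:
    print(explain(rule, context)[1])
print(conflicts(rules, context))
```

In this simulated context the "heat" and "air" rules both fire and disagree on the thermostat state, so they are reported as a conflicting pair, while "cool" is explained as not firing.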

The Goal-Based Rule Analysis relies on the semantic definitions module, the current indoor and outdoor environmental values retrieved from Home Assistant (which can be modified by the user to simulate specific situations), and user preferences.
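A minimal sketch of such a goal-based suggestion follows, assuming a single "energy_saving" goal and a small catalogue of actions annotated with their environmental effect and a typical power draw. The catalogue entries, wattages, function names and the applicability check are invented for illustration and are not taken from ExplainTAP.

```python
from dataclasses import dataclass
from typing import Optional

Action = tuple[str, str]  # (object, state)


@dataclass(frozen=True)
class ActionInfo:
    effect: str          # environmental effect, e.g. "lower_temperature"
    energy_watts: float  # hypothetical typical power draw


# Hypothetical catalogue; real values would come from the semantic definitions.
CATALOG: dict[Action, ActionInfo] = {
    ("air_conditioner", "on"): ActionInfo("lower_temperature", 1200.0),
    ("ceiling_fan", "on"): ActionInfo("lower_temperature", 60.0),
    ("window", "open"): ActionInfo("lower_temperature", 0.0),
}


def applicable(action: Action, context: dict[str, float]) -> bool:
    """Opening a window only cools the room if it is cooler outside."""
    if action == ("window", "open"):
        return context["outdoor_temperature"] < context["indoor_temperature"]
    return True


def suggest_alternative(
    action: Action, goal: str, context: dict[str, float]
) -> Optional[Action]:
    """Propose the least energy-hungry action with the same effect, if any."""
    if goal != "energy_saving" or action not in CATALOG:
        return None
    current = CATALOG[action]
    candidates = [
        (info.energy_watts, alt)
        for alt, info in CATALOG.items()
        if alt != action
        and info.effect == current.effect
        and info.energy_watts < current.energy_watts
        and applicable(alt, context)
    ]
    return min(candidates)[1] if candidates else None


cool_evening = {"indoor_temperature": 27.0, "outdoor_temperature": 22.0}
hot_afternoon = {"indoor_temperature": 27.0, "outdoor_temperature": 31.0}
print(suggest_alternative(("air_conditioner", "on"), "energy_saving", cool_evening))
print(suggest_alternative(("air_conditioner", "on"), "energy_saving", hot_afternoon))
```

Because the indoor and outdoor values are plain inputs, the same function can be run on live readings or on values edited by the user to simulate a specific situation.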

The semantic definitions module contains information about the objects commonly found in smart home installations that can act on the environment. For each object, this information includes: which triggers it can activate, with a description of the reason; a list of goals on which a specific state of the object can have a positive or negative effect, including the reasons; and the list of variables that it can increase or decrease when a specific state of the object is active, together with the confidence level of each prediction.
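The sketch below shows one way such definitions could drive indirect rule chain detection, using the air circulation and heating example mentioned earlier. The structure of `SEMANTICS`, the confidence values and the 0.5 cut-off are assumptions made for illustration, and only the variable-effect part of the definitions is modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariableEffect:
    variable: str      # environmental variable affected
    direction: str     # "increase" or "decrease"
    confidence: float  # confidence in the prediction, between 0 and 1


# Hypothetical semantic definitions, keyed by (object, state).
SEMANTICS: dict[tuple[str, str], list[VariableEffect]] = {
    ("window", "open"): [
        VariableEffect("temperature", "decrease", 0.8),
        VariableEffect("air_quality", "increase", 0.9),
    ],
    ("heater", "on"): [VariableEffect("temperature", "increase", 0.95)],
}


@dataclass(frozen=True)
class Rule:
    name: str
    variable: str     # trigger variable
    op: str           # "<" or ">"
    threshold: float
    target: str       # object acted upon
    state: str        # state it is set to


def may_trigger(first: Rule, second: Rule, min_confidence: float = 0.5) -> bool:
    """True if first's action pushes second's trigger variable towards firing."""
    wanted = "decrease" if second.op == "<" else "increase"
    effects = SEMANTICS.get((first.target, first.state), [])
    return any(
        e.variable == second.variable
        and e.direction == wanted
        and e.confidence >= min_confidence
        for e in effects
    )


def indirect_chains(rules: list[Rule]) -> list[tuple[str, str]]:
    """All ordered pairs (a, b) where activating a may indirectly activate b."""
    return [
        (a.name, b.name)
        for a in rules
        for b in rules
        if a is not b and may_trigger(a, b)
    ]


ventilation = Rule("ventilation", "co2", ">", 1000.0, "window", "open")
heating = Rule("heating", "temperature", "<", 19.0, "heater", "on")
print(indirect_chains([ventilation, heating]))
```

Here opening the window is predicted to lower the temperature, which moves the heating rule's trigger towards its threshold, so the pair is reported as a potential indirect activation.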

The recommendations module aims to make the creation of automations easier through suggestions that take into account the users’ preferences, the automations they have already made, and the partial rule they are currently entering. This module is also connected with the Rule Analysis backend. In this way, recommendations that conflict with user goals are discarded, and the user is warned when a recommendation may conflict with automations that have already been created.
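The filtering step described here could look like the following sketch. How candidate suggestions are generated and scored is outside its scope; the `Suggestion` type, the `GOAL_VIOLATIONS` table and the warning text are hypothetical, and a conflict is simplified to "an existing automation sets the same object to a different state".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Suggestion:
    target: str   # object the suggested action operates on
    state: str    # state it would set
    score: float  # hypothetical relevance score from the recommender


# Hypothetical table of actions considered harmful for each goal.
GOAL_VIOLATIONS: dict[str, set[tuple[str, str]]] = {
    "energy_saving": {("heater", "on"), ("air_conditioner", "on")},
}


def filter_recommendations(
    suggestions: list[Suggestion],
    goals: list[str],
    existing_actions: list[tuple[str, str]],
) -> list[tuple[Suggestion, Optional[str]]]:
    """Drop goal-violating suggestions; attach a warning to conflicting ones."""
    results = []
    for s in sorted(suggestions, key=lambda s: s.score, reverse=True):
        if any((s.target, s.state) in GOAL_VIOLATIONS.get(g, set()) for g in goals):
            continue  # discarded: conflicts with a selected goal
        clash = [st for (tg, st) in existing_actions if tg == s.target and st != s.state]
        warning = (
            f"may conflict with an existing automation that sets {s.target} to {clash[0]}"
            if clash
            else None
        )
        results.append((s, warning))
    return results


suggestions = [
    Suggestion("heater", "on", 0.9),
    Suggestion("window", "open", 0.7),
    Suggestion("blinds", "closed", 0.5),
]
for suggestion, warning in filter_recommendations(
    suggestions, ["energy_saving"], [("window", "closed")]
):
    print(suggestion.target, suggestion.state, "-", warning or "no warning")
```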

Links:

[L1]: https://hiis.isti.cnr.it/lab/home

[L2]: https://hiis.isti.cnr.it/eud4gsh/index.html

References:

[1] S. Gallo, et al., “An architecture for green smart homes controlled by end users,” in Proc. of ACM Advanced Visual Interfaces (AVI ’24). ACM, 2024.

[2] S. Maenza, A. Mattioli, F. Paternò, “An approach to explainable automations in daily environments,” in: T. Ahram, et al., (eds), Intelligent Human Systems Integration (IHSI 2024): Integrating People and Intelligent Systems, AHFE (2024) Int. Conf., AHFE Open Access, vol. 119. AHFE International, USA, 2024.

[3] B. R. Barricelli, et al., “What people think about green smart homes,” in Proc. of the 8th Int. Workshop on Cultures of Participation in the Digital Age (CoPDA 2024), 2024.

Please contact:

Fabio Paternò, CNR-ISTI, Italy