by László Tizedes, András Máté Szabó, and Anita Keszler (HUN-REN SZTAKI)

Automated road defect detection is a key component in the development and maintenance of smart cities, leading to safer, more efficient and more sustainable urban environments. An artificial intelligence-based system capable of detecting potholes, speed bumps, manhole covers and other road hazards was developed in a cooperation between the start-up D3 Seeron and HUN-REN SZTAKI, with support from ESA Spark Funding. The proposed system combines the low computational demands of vision-based object detection with the precise distance measurement of LiDAR technology.

As smart transportation technology evolves, the need for greater integration of digital devices in vehicles has increased, particularly in automated environmental sensing and vehicle control. In a smart city, integrating automated road defect detection systems is essential to enhance the quality of transportation by providing real-time feedback on road conditions. This feedback allows vehicles to adjust their routes and driving behaviours dynamically, ensuring safer and more reliable travel.

Moreover, automated road anomaly detection contributes significantly to the overall infrastructure management of a smart city. By continuously monitoring and reporting the state of the roads, these systems provide valuable data to traffic control centres that can be used to reduce traffic congestion and to plan cost-efficient road maintenance. In this way, automated road defect detection not only supports safer driving but also enhances the efficiency and sustainability of urban transportation networks.

The Project

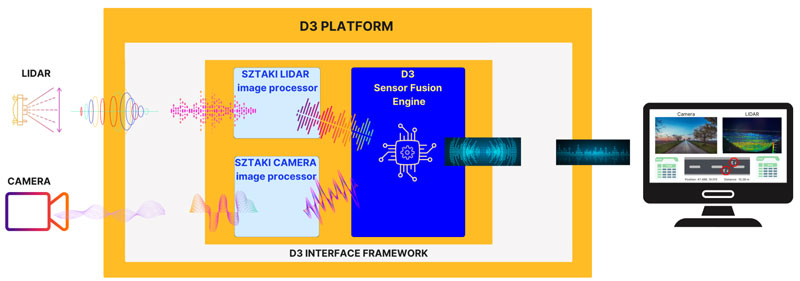

We aimed to develop a system that uses the vehicle's environmental sensor data (camera and LiDAR) to provide the driver or the self-driving vehicle's control system with information about the presence of obstacles on the road surface (see Figure 1). The automation of vehicle control heavily depends on the vehicle's ability to sense its surroundings, which relies on processing data from various sensors, each with its own unique characteristics. By leveraging these diverse sensor inputs, the developed system aims to deliver comprehensive and reliable environmental awareness, enhancing both the safety and efficiency of autonomous vehicle operations.

Figure 1: Our platform contains a Sensor Fusion Engine and a Framework. It processes information from the sensors and provides feedback to the driver/control system of the vehicle. If necessary, the information can also be forwarded to the traffic control centre.

Unlike the majority of previous solutions, which focus on road quality assessment, our method does not rely on post-processing of recorded data. The output of our system is therefore useful not only for reporting issues to traffic centres: it can also immediately provide vital information to the control system of a self-driving vehicle.

The six-month-long project [L1] ended in 2024 and was a joint effort of D3 Seeron and the Institute for Computer Science and Control (HUN-REN SZTAKI) [L2]. Over the past decades, D3 Seeron has developed applications addressing challenges that range from large-scale data processing and image processing to the application of artificial intelligence. The mission of HUN-REN SZTAKI includes pursuing focused, basic and applied research in areas defined by mainstream world trends and by domestic requirements and challenges.

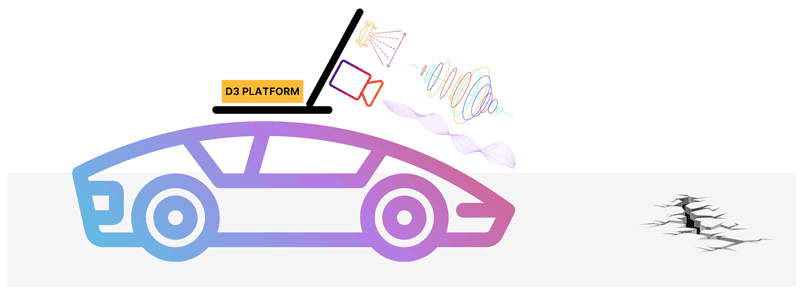

The developed D3 platform contains a Sensor Fusion Engine and a Framework, providing a robust and flexible solution. HUN-REN SZTAKI contributed to the project with the image processing and space-technology-based LiDAR point cloud processing algorithms. These algorithms combine results from a previous ESA-funded research project (DUSIREF [L3]) with technology developed with the support of the Hungarian National Lab for Autonomous Systems.

The detection system with the D3 platform is illustrated in Figure 2. It uses imagery captured by an RGB camera to detect road surface anomalies. To modify the speed or the trajectory of the vehicle, the control system requires an accurate location estimate for each object detected on the road. The distance is measured using LiDAR technology, and the object's position is determined by fusing the RGB camera and LiDAR data. The proposed setup thus leverages the low computational demands of vision-based object detection methods and integrates them with the precise distance measurement capabilities of LiDAR technology. Given the critical need for rapid data processing in controlling an autonomous vehicle, our system utilises the Robot Operating System (ROS), and the algorithms run locally on an embedded NVIDIA Jetson AGX Xavier device. The data provided by the method can be used not only on board, however: it can also be stored in the cloud for further smart maintenance planning.

Figure 2: The aim of the project was to develop a system that uses the vehicle's environmental sensor data (camera and LiDAR) to provide the self-driving vehicle's control system with information about the presence of obstacles on the road surface.
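To illustrate the idea of camera-LiDAR fusion described above, the following is a minimal sketch and not the project's actual implementation. It assumes a pinhole camera model, that the LiDAR points have already been transformed into the camera frame by an extrinsic calibration, and that the detector returns an axis-aligned bounding box in pixels. The function names, the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the sample values are all hypothetical.

```python
from statistics import median


def project_point(point, fx, fy, cx, cy):
    """Project a 3D point (camera frame: x right, y down, z forward) to pixels.

    Returns None for points behind the camera.
    """
    x, y, z = point
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def estimate_distance(points_cam, box, fx, fy, cx, cy):
    """Median forward distance (metres) of the LiDAR points that project
    inside the detected bounding box, or None if no point falls inside it.

    box is (u_min, v_min, u_max, v_max) in pixels.
    """
    u_min, v_min, u_max, v_max = box
    depths = []
    for p in points_cam:
        uv = project_point(p, fx, fy, cx, cy)
        if uv is None:
            continue
        u, v = uv
        if u_min <= u <= u_max and v_min <= v <= v_max:
            depths.append(p[2])
    return median(depths) if depths else None


# Hypothetical intrinsics and a handful of made-up LiDAR returns.
FX = FY = 1000.0
CX, CY = 640.0, 360.0
points = [
    (0.0, 1.0, 10.0),   # on the detected object
    (0.2, 1.0, 10.4),   # on the detected object
    (-0.2, 1.1, 9.8),   # on the detected object
    (3.0, 1.0, 10.0),   # projects outside the bounding box
    (0.0, 1.0, -5.0),   # behind the camera, ignored
]
detection_box = (600.0, 440.0, 680.0, 480.0)

distance = estimate_distance(points, detection_box, FX, FY, CX, CY)
print(distance)
```

The median is used here because it is less sensitive than the mean to stray points from the background that happen to project inside the box; a real system might use a different statistic or a clustering step.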

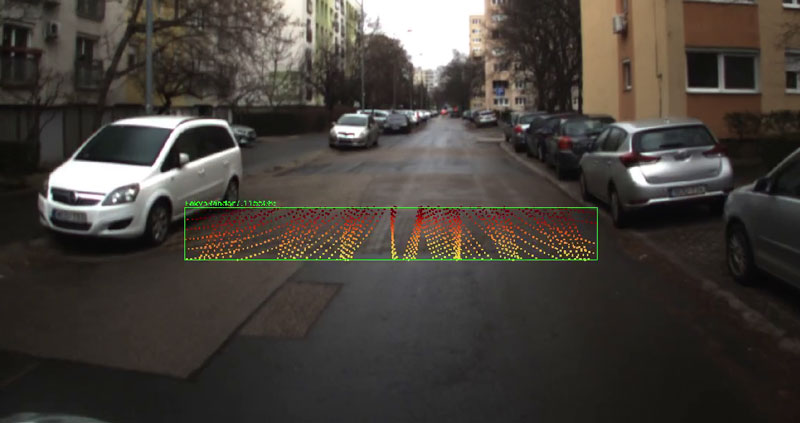

The road hazards are identified by a deep learning algorithm based on a convolutional neural network that processes images from the camera. The neural network was trained on a combination of publicly available datasets and our own recordings. The dataset included the following road hazard classes: pothole, road drainage, manhole cover, bicycle mark, and speed bump. Potholes and speed bumps are of particular interest because they require the vehicle to slow down to prevent accidents. The other classes nevertheless play a crucial role in the detection system: including them in the training data enhances the overall recognition accuracy for the more critical classes. To make the identification process more robust, a tracking algorithm was also developed that follows the detected hazard through multiple frames. A detected speed bump is shown in Figure 3.

Figure 3: Sample output (detected speed bump) of the system: the merging of the camera’s image and the LiDAR’s point cloud. The camera provides the input for the deep learning-based object detection algorithm, while the LiDAR provides the location information of the detected objects.
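The article does not specify how the tracking algorithm works, so the following is only a generic sketch of one common approach: greedy matching of bounding boxes between consecutive frames by intersection over union (IoU), with a hazard reported only after it has been seen in several consecutive frames. The class name `HazardTracker`, the thresholds and the sample boxes are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


class HazardTracker:
    """Greedy IoU tracker: a track is confirmed after min_hits consecutive frames."""

    def __init__(self, iou_threshold=0.3, min_hits=3):
        self.iou_threshold = iou_threshold
        self.min_hits = min_hits
        self.tracks = []   # each track: {"id", "label", "box", "hits"}
        self.next_id = 0

    def update(self, detections):
        """detections: list of (label, box). Returns the confirmed tracks."""
        unmatched = list(self.tracks)
        updated = []
        for label, box in detections:
            best, best_iou = None, self.iou_threshold
            for track in unmatched:
                if track["label"] != label:
                    continue
                score = iou(track["box"], box)
                if score >= best_iou:
                    best, best_iou = track, score
            if best is not None:
                unmatched.remove(best)
                best["box"] = box
                best["hits"] += 1
                updated.append(best)
            else:
                updated.append({"id": self.next_id, "label": label,
                                "box": box, "hits": 1})
                self.next_id += 1
        # Tracks with no matching detection in this frame are dropped.
        self.tracks = updated
        return [t for t in self.tracks if t["hits"] >= self.min_hits]


# Three made-up frames: a speed bump seen in every frame and a
# single-frame "pothole" detection that never gets confirmed.
tracker = HazardTracker()
frames = [
    [("speed_bump", (100, 200, 300, 260))],
    [("speed_bump", (105, 205, 305, 265)), ("pothole", (500, 300, 540, 330))],
    [("speed_bump", (110, 210, 310, 270))],
]
history = [[t["label"] for t in tracker.update(frame)] for frame in frames]
print(history)
```

Requiring several consecutive hits suppresses one-off false detections, at the cost of a short delay before a hazard is reported; the right trade-off depends on frame rate and vehicle speed.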

Two LiDARs with distinct scanning patterns were tested. The Ouster LiDAR has a more mainstream circular pattern, while the Livox AVIA LiDAR has a non-repetitive rosette scanning pattern. The objective was to determine whether the scanning pattern significantly impacts the results or whether the method is sufficiently general to function with both. Our findings indicated that the system works effectively with both scanning technologies.

Conclusion

With the development of driver support systems and self-driving vehicles, the amount of information integrated into them is bound to expand. Users will expect not only autonomous navigation along predefined routes but also a higher level of comfort. The road anomaly data we collect can contribute to a more comfortable travelling experience and can also aid road maintenance teams in planning future repairs. Thanks to its low resource and hardware requirements, the developed tool can be integrated into existing driver support or self-driving systems.

Links:

[L1] https://sztaki.hun-ren.hu/en/innovation/projects/road-defect-detection

[L2] https://sztaki.hun-ren.hu/en/science/departments/mplab

[L3] https://sztaki.hun-ren.hu/tudomany/projektek/dusiref

References:

[1] J. Kocić, N. Jovićić, and V. Drndarević, “Sensors and sensor fusion in autonomous vehicles,” 26th Telecommunications Forum (TELFOR), 2018, pp. 420–425.

[2] A. Börcs, B. Nagy, and C. Benedek, “Instant Object Detection in Lidar Point Clouds,” IEEE Geoscience and Remote Sensing Letters, vol. 14, no. 7, pp. 992–996, 2017. https://www.doi.org/10.1109/LGRS.2017.2674799

[3] L. K. Suong and K. Jangwoo, “Detection of potholes using a deep convolutional neural network,” Journal of Universal Computer Science, vol. 24, no. 9, pp. 1244–1257, 2018.

Please contact:

Anita Keszler, HUN-REN SZTAKI, Hungary