by Frank Klefenz

The human auditory system processes highly complex audio signals and extracts meaningful information such as speech and music. Conventional speech processors for cochlear implants use mathematically based information-coding strategies. In a new approach being investigated by researchers at the Fraunhofer Institute for Digital Media Technology (IDMT), the human auditory system is modelled digitally, as close to its physiology as possible. This leads to a better understanding of the neural representation of sounds and of their subsequent processing.

A cochlear implant is controlled by its dedicated speech processor, which triggers electric stimuli according to a speech-coding strategy. To make this speech coding easier and more natural, research effort has recently gone into the development of analogue silicon cochleas. Several analogue very-large-scale integration (VLSI) circuits have been evaluated as speech processors for cochlear implants. Micro-engineered approaches also exist that implement the cochlea as a hydromechanical system on a physical substrate; even the signal-transducing sensor units, the inner hair cells, have been micro-engineered on a physical substrate.

Fraunhofer IDMT has taken a slightly different approach: the partial differential equations that mathematically describe the cochlea are solved numerically on a digital computer. The cochlea model is coupled to the sensor (inner hair cell) model. The structure and function of the outer ear, the middle ear, the cochlea, the inner hair cells, the spiral ganglion cells and higher cognitive maps for vowel recognition and sound-source localization are modelled and physiologically parameterized, taking psychoacoustic phenomena into account.
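To give a feel for what solving the cochlea's partial differential equations involves, here is a minimal sketch of a classic passive, one-dimensional long-wave box model, solved in the frequency domain by finite differences. It is not the IDMT model: the geometry, the exponential stiffness profile, the damping ratio and the function name `bm_velocity_profile` are illustrative assumptions. The pressure difference P(x) between the two scalae obeys P'' = (2ρs/H)·P/Z(x), where Z(x) = ms + r(x) + k(x)/s is the basilar membrane impedance per unit area; the stapes drives the base, and the helicotrema is treated as a pressure short circuit at the apex.

```python
import numpy as np


def bm_velocity_profile(freq_hz, n=1000, length=0.035, height=1e-3, rho=1000.0,
                        mass=0.05, k0=1e9, alpha=300.0, zeta=0.25, u_stapes=1.0):
    """Steady-state basilar-membrane velocity magnitude along a 1-D long-wave cochlea.

    All parameters are illustrative SI values (assumptions, not physiological fits):
    length/height in m, rho in kg/m^3, mass in kg/m^2, stiffness k0 in N/m^3.
    """
    x = np.linspace(0.0, length, n)
    dx = x[1] - x[0]
    s = 2j * np.pi * freq_hz                 # Laplace variable at this frequency
    k = k0 * np.exp(-alpha * x)              # stiffness falls from base to apex
    r = 2.0 * zeta * np.sqrt(k * mass)       # damping with a fixed damping ratio
    z = mass * s + r + k / s                 # BM impedance per unit area
    y = 1.0 / z                              # BM admittance: velocity V = Y * P
    q = 2.0 * rho * s * y / height           # long-wave equation: P'' = q * P

    a = np.zeros((n, n), dtype=complex)
    b = np.zeros(n, dtype=complex)
    idx = np.arange(1, n - 1)
    a[idx, idx - 1] = 1.0 / dx**2            # central second difference
    a[idx, idx] = -2.0 / dx**2 - q[idx]
    a[idx, idx + 1] = 1.0 / dx**2
    # Base: stapes velocity sets P'(0) = -2*rho*s*u_stapes (ghost-point form)
    g = -2.0 * rho * s * u_stapes
    a[0, 0] = -2.0 / dx**2 - q[0]
    a[0, 1] = 2.0 / dx**2
    b[0] = 2.0 * g / dx
    # Apex: the helicotrema connects the two scalae, so P(L) = 0
    a[-1, -1] = 1.0
    p = np.linalg.solve(a, b)
    return x, np.abs(y * p)


if __name__ == "__main__":
    for f in (500.0, 1000.0, 4000.0):
        x, v = bm_velocity_profile(f)
        print(f"{f:6.0f} Hz peaks at {1e3 * x[np.argmax(v)]:.1f} mm from the base")
```

Running it should show the place of maximum vibration moving towards the base as frequency rises, which is the tonotopic behaviour a full cochlea model has to reproduce. A dense solver keeps the sketch short; a banded solver would be the natural choice for finer grids.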

The audio signal transduction process is very complex. The audio signal, consisting of sound-pressure fluctuations in the air, is converted into movements of the tympanic membrane. The membrane is mechanically coupled to a chain of tiny bones in the middle ear (the hammer, the anvil and the stirrup), which set the fluid-filled cochlea vibrating. The inner hair cells sense the fluid velocity along the basilar membrane and convert it into the release of neurotransmitter vesicles. The neurotransmitter diffuses across the synaptic cleft to the adjoining spiral ganglion cells and binds to the receptor sites of the cells' ion channels. The triggering of an electric postsynaptic potential in the spiral ganglion cell is modelled according to the Hodgkin-Huxley rate-kinetic equations. The auditory system model therefore directly produces spatio-temporal stimulation patterns for the electrodes of the cochlear implant.
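The last step of this chain can be illustrated with the textbook Hodgkin-Huxley equations. The sketch below uses the classic squid-axon parameters (resting potential near -65 mV) and a forward-Euler integrator. The input current `i_inj` is a hypothetical stand-in for the synaptic drive produced by released neurotransmitter, and none of the values are the spiral ganglion parameters used at IDMT.

```python
import math


def _vtrap(x, y):
    """x / (1 - exp(-x / y)), using its Taylor expansion near x = 0 to avoid 0/0."""
    if abs(x / y) < 1e-6:
        return y * (1.0 + x / (2.0 * y))
    return x / (1.0 - math.exp(-x / y))


def hh_rates(v):
    """Classic Hodgkin-Huxley gate rates (1/ms) at membrane potential v (mV)."""
    a_m = 0.1 * _vtrap(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _vtrap(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n


def simulate_hh(i_inj, t_max=50.0, dt=0.01):
    """Forward-Euler integration; i_inj(t) is the input current in uA/cm^2, t in ms."""
    c_m = 1.0                                  # membrane capacitance, uF/cm^2
    g_na, g_k, g_l = 120.0, 36.0, 0.3          # maximal conductances, mS/cm^2
    e_na, e_k, e_l = 50.0, -77.0, -54.387      # reversal potentials, mV
    v = -65.0
    a_m, b_m, a_h, b_h, a_n, b_n = hh_rates(v)
    # Start the gating variables at their steady-state values for the resting potential
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    trace = []
    for step in range(int(round(t_max / dt))):
        t = step * dt
        a_m, b_m, a_h, b_h, a_n, b_n = hh_rates(v)
        i_ion = (g_na * m**3 * h * (v - e_na)
                 + g_k * n**4 * (v - e_k)
                 + g_l * (v - e_l))
        v += dt * (i_inj(t) - i_ion) / c_m
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        trace.append(v)
    return trace


def count_spikes(trace, threshold=0.0):
    """Count upward crossings of `threshold` (mV)."""
    return sum(1 for prev, cur in zip(trace, trace[1:]) if prev < threshold <= cur)


if __name__ == "__main__":
    # Hypothetical synaptic drive: a 40 ms current step of 10 uA/cm^2
    drive = lambda t: 10.0 if 5.0 <= t < 45.0 else 0.0
    print(count_spikes(simulate_hh(drive)), "spikes")
```

Without input the membrane stays at rest; a sufficiently strong sustained drive produces a regular spike train, which is the kind of output a model-based strategy could map onto electrode stimuli.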

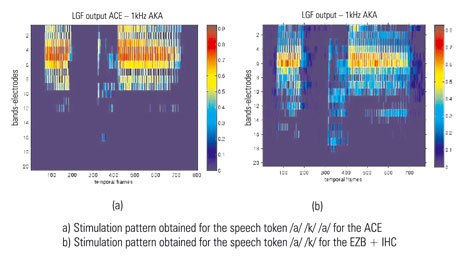

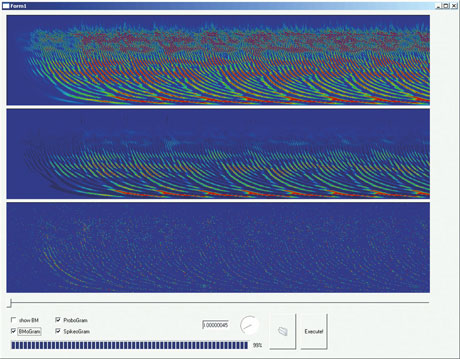

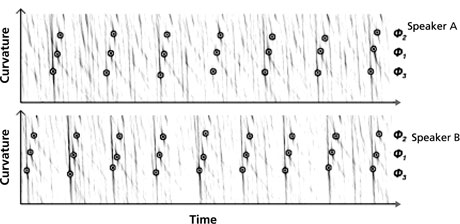

Figure 1 shows the stimulation patterns of the conventional Advanced Combination Encoder (ACE) strategy on the left and those of the new strategy on the right. The latter differ significantly from the patterns of existing speech-coding strategies such as ACE and Spectral Peak (SPEAK). In Figure 2, the upper panel shows the basilar membrane movement for the vowel /a/, the middle panel shows the neurotransmitter vesicle release probability, and the lower panel shows the vesicles actually released. The new strategy reproduces the characteristic delays with which signals propagate from the base of the cochlea to the helicotrema at its apex; these delays are not reflected in the other strategies. In this neural representation, vowels are coded as bundles of spike trains whose delay trajectories have a hyperbolic shape. We detect these delay trajectories using a Hubel-Wiesel-type computational map. In addition, the vesicle release representation of the vowel /a/ undergoes a mathematical transformation that compensates for differences between individual voices. Figure 3 shows the transformed result of the vowel /a/ for two different speakers: the three-point structure is very regular and very similar for both speakers.
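The article does not describe how the Hubel-Wiesel-type map works internally. As a rough illustration of detecting a hyperbolic delay trajectory, the sketch below runs a brute-force, Hough-style vote: every candidate curve t(f) = t0 + a/f (a hypothetical delay law in the channel centre frequency f) collects one vote for each spike lying within a tolerance of it, and the best-supported curve wins. The curve family, the parameter grids and the function name `detect_delay_curve` are assumptions made for this example.

```python
import numpy as np


def detect_delay_curve(channels, times, freqs, t0_grid, a_grid, tol):
    """Return (t0, a, votes) for the curve t = t0 + a / f supported by the most spikes.

    channels: integer channel index of each spike
    times:    spike times in seconds (same length as channels)
    freqs:    centre frequency in Hz of every channel (hypothetical filter bank)
    """
    channels = np.asarray(channels)
    times = np.asarray(times, dtype=float)
    t0_grid = np.asarray(t0_grid, dtype=float)
    a_grid = np.asarray(a_grid, dtype=float)
    inv_f = 1.0 / np.asarray(freqs, dtype=float)[channels]   # 1/f for each spike
    # Predicted spike time for every (t0, a, spike) combination: shape (T0, A, S)
    predicted = t0_grid[:, None, None] + a_grid[None, :, None] * inv_f[None, None, :]
    # Each spike votes for every candidate curve passing within `tol` of it
    votes = np.count_nonzero(np.abs(times[None, None, :] - predicted) <= tol, axis=2)
    i, j = np.unravel_index(np.argmax(votes), votes.shape)
    return float(t0_grid[i]), float(a_grid[j]), int(votes[i, j])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    freqs = np.geomspace(200.0, 8000.0, 32)        # 32 hypothetical channels
    # One spike per channel on a synthetic trajectory, plus 20 background spikes
    chans = np.concatenate([np.arange(32), rng.integers(0, 32, size=20)])
    times = np.concatenate([0.010 + 2.0 / freqs, rng.uniform(0.0, 0.05, size=20)])
    print(detect_delay_curve(chans, times, freqs,
                             np.linspace(0.0, 0.02, 41), np.linspace(0.5, 4.0, 15),
                             tol=2e-4))
```

A computational map would evaluate all candidate curves in parallel rather than in a NumPy broadcast, but the voting principle is the same: a trajectory is recognized by how many spikes line up along it.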

This research continues with the aim of refining the system and enabling it to run in real time. The digital system can then be used without prestored stimuli, and field tests with several patients will follow. The computer model will be parameterized so that the system can be fine-tuned and adapted to each patient's needs. The system's performance will be monitored by an automatic speech recognizer that delivers a quality measure of speech intelligibility.

These tests, which are being carried out at the Hearing Research Center in Hannover, Germany, serve to evaluate the system and to compare its speech intelligibility with that achieved by existing speech processors.

Link:

http://www.idmt.fraunhofer.de

Please contact:

Frank Klefenz

Fraunhofer Institute for Digital Media Technology IDMT, Germany

Tel: +49 3677 467 216

E-mail: klz@idmt.fraunhofer.de
