by Cor van Kruijsdijk (Shell Global Solutions International bv)

Shell uses digital twin applications to improve operation, maintenance and safety, for example in chemical plants and on offshore platforms.

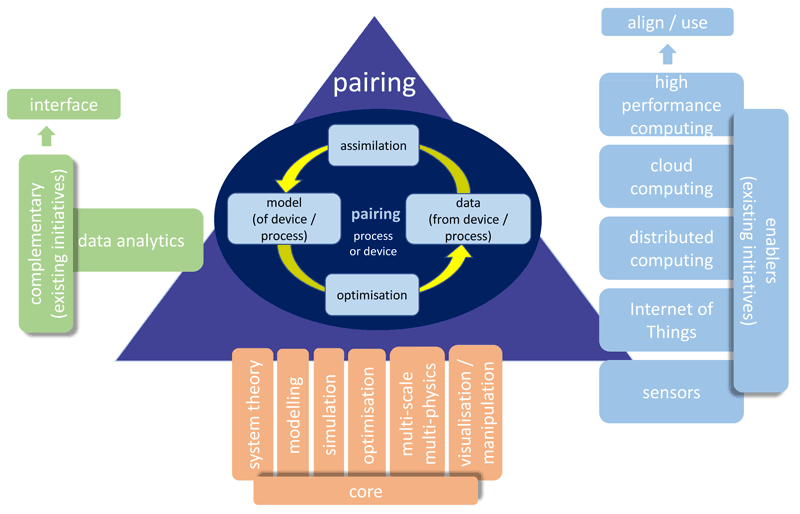

In the early days of space exploration, NASA introduced the concept of “pairing” to support the operation, maintenance and repair of devices that are not in close proximity. The underlying ideas led to a white paper by Grieves [1], in which he introduced the concept of a “digital twin”. A broad definition can be found in Wikipedia: “Digital twin refers to a digital replica of physical assets (physical twin), processes and systems that can be used for various purposes”. A more technical depiction of the fundamental landscape is shown in Figure 1. In recent years, digital twin applications have emerged in many areas, earning the concept a place in Gartner’s Top 10 Strategic Technology Trends for 2017.

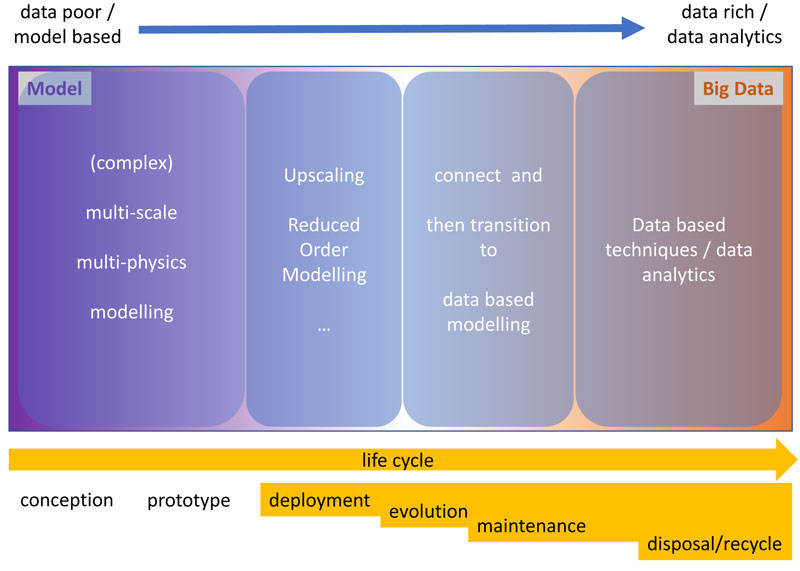

In Shell, digital twins are deployed, for example, for (parts of) chemical plants and offshore platforms as well as smaller assets. They add value in improved operation, maintenance and safety. Moreover, in a drive to further digitalisation many more applications will come online in the near future. Most, if not all, of these will be targeting operating assets and will be data rich. Consequently, data-analytics has a prominent role to play. Digital twin applications earlier in the life cycle (conception, prototype) are scarce. Although many of the core-flow experiments in support of enhanced oil recovery are matched to computer models, these only use part of the digital twin paradigm.

Figure 1: Digital Twin landscape.

The need for digital twins in R&D

The energy transition is an existential challenge for Shell, as it affects our two main pillars: fossil fuels and chemicals [L1]. In addition, it is taking place at an increasingly rapid pace. Significant technological breakthroughs are required to stay within the bounds of the Paris Agreement [L2]. As we explore the solutions of the future, we need to learn fast and innovate at a higher pace than has traditionally been the case in our industry. Adopting a digital twin approach will get more value out of our experiments, faster. Our insight into the physical problem is enhanced by exploring the digital solution in places where no data exists. Moreover, it allows us to (digitally) pre-explore the solution space. We can then preferentially target the most interesting parts of the solution space, thereby reducing the number of experiments. We can even explore parts of the solution space that are difficult (or even impossible), dangerous or very expensive to target with experiments.

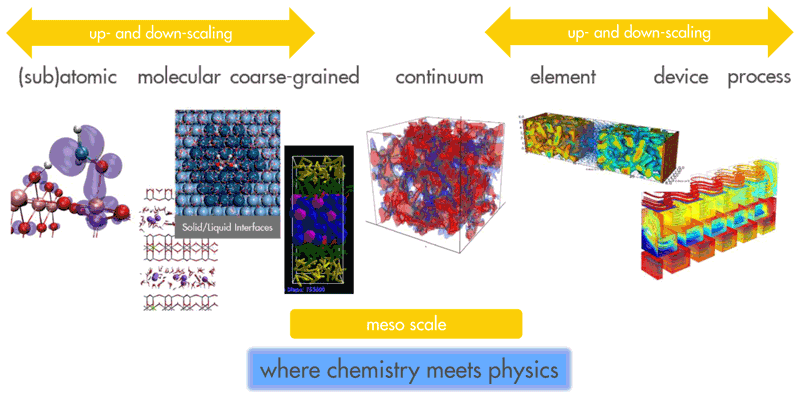

However, during the conception or exploration phase of a new technology, we usually deal with a much larger solution space that also has a much lower data density. Fortunately, the requirement for real-time results that applies in the operational phase can usually be relaxed in the conception phase. Inevitably, we need to rely more on equation-based models than later in the life cycle. Our problems, for example in electrochemistry, are often inherently multi-scale. Moreover, the transport processes that couple the small scale (e.g. catalysis) to the engineering scale have multiple drivers. Particularly at the meso-scale (“where chemistry meets physics”), it is not unusual to encounter more than 10 relevant gradients. Usually, the relative importance of these drivers (gradients) changes across several spatial (and temporal) scales. Meso-scale models typically require large domains (10^7 to 10^10 voxels) and yield stiff equations. Although meso-scale modelling is an active field of research, current work is often limited to medium-sized domains and incomplete physical descriptions. To achieve the goals set out above, we need to push the current boundaries.
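To illustrate what “stiff” means in practice, here is a minimal sketch that is not taken from the article: a single linear decay equation dy/dt = -λy with a hypothetical fast rate λ = 1000 (standing in for, say, a fast surface reaction), integrated with a time step sized for slow, engineering-scale dynamics. The explicit Euler method is only stable for Δt < 2/λ, whereas implicit Euler remains stable for any step size. The function names and parameter values are illustrative assumptions.

```python
def explicit_euler(lam: float, y0: float, dt: float, n_steps: int) -> float:
    """Explicit Euler for dy/dt = -lam * y: y_{n+1} = y_n + dt * f(y_n)."""
    y = y0
    for _ in range(n_steps):
        y = y + dt * (-lam * y)  # amplification factor (1 - lam*dt) per step
    return y


def implicit_euler(lam: float, y0: float, dt: float, n_steps: int) -> float:
    """Implicit Euler for dy/dt = -lam * y: y_{n+1} = y_n + dt * f(y_{n+1})."""
    y = y0
    for _ in range(n_steps):
        y = y / (1.0 + dt * lam)  # linear case solved exactly; factor 1/(1 + lam*dt)
    return y


if __name__ == "__main__":
    lam = 1000.0  # hypothetical fast rate constant
    dt = 0.01     # hypothetical step chosen for the slow dynamics (> 2/lam)
    n = 100       # integrate to t = 1.0
    print("explicit:", explicit_euler(lam, 1.0, dt, n))
    print("implicit:", implicit_euler(lam, 1.0, dt, n))
```

With Δt = 0.01 the explicit update multiplies y by 1 − λΔt = −9 at every step and diverges, while the implicit update divides by 1 + λΔt = 11 and decays like the true solution. This is why stiff systems are usually handled with implicit or semi-implicit solvers, at the price of solving a (possibly large) linear or nonlinear system at each step.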

An Open Source Ecosystem

Both the urgency of the problem domains and the complexity of the required multi-scale, multi-physics models necessitate large-scale collaboration. Effective solutions rely on algorithms that scale well on modern and future hardware. Moreover, an effective “digital twin” will be built on system-theoretical foundations and will allow easy manipulation, insightful visualisation, extensive data assimilation and large-scale optimisation: a truly multi-disciplinary challenge (see Figure 1). This collaboration relies on a versatile, fit-for-purpose, open source ecosystem. For all parties (academia, software vendors, start-ups, industry) to participate, the ecosystem must support sustainable “business models”, or other drivers, for every actor. Hence the ecosystem needs to provide optimal access, transparency and modularity (largely open), but also accommodate proprietary modules and even IP protection where required, for example in the case of cross-industry participation. EU-MATHS-IN [L3] is working with an extensive group of companies to put this topic squarely on the agenda of the next EU framework programme, Horizon Europe.

Links:

[L1] https://kwz.me/htI

[L2] https://kwz.me/htJ

[L3] https://kwz.me/htM

Reference:

[1] M. Grieves: “Digital Twin: Manufacturing Excellence through Virtual Factory Replication”, white paper, 2014, http://innovate.fit.edu/plm/documents/doc_mgr/912/1411.0_Digital_Twin_White_Paper_Dr_Grieves.pdf

Please contact:

Cor van Kruijsdijk

Shell Global Solutions International bv, The Netherlands