by Stephen O’Sullivan and Turlough Downes

Many of the stars in our universe form inside vast clouds of magnetized gas known as plasma. The complexity of these clouds is such that astrophysicists wishing to run simulations could comfortably use hundreds of thousands of processors on the most powerful supercomputers. In the past, a serious obstacle to capitalizing on such computational power has been that the methods available for solving the necessary equations were poorly suited to implementation on massively parallel supercomputers.

Background: The Physics of Multifluid Gases

Usually, to study how a plasma behaves when a magnetic field is present, astrophysicists assume a single fluid with the field firmly anchored into it: if the plasma moves then so does the field, and vice versa. This picture makes some intuitive sense since charged particles try to travel along magnetic field lines – this is the principle used in older cathode-ray tube TV sets to direct the electrons onto the phosphor inside the screen.

The single-fluid picture, however, is only approximate. Most real plasmas consist of different families of particles, each of a different size and charge. These families will behave differently when interacting with the same magnetic field and with the rest of the gas. Indeed, many particles in astrophysical plasmas have no charge and so feel no direct effect of the magnetic field at all!

So, in many environments the physics is far richer than the simple single-fluid approximation: electrons, ions, neutral particles and even electrically charged dust grains can move differently. The plasma can no longer be said to act as a single coherent fluid with a tied-in magnetic field. As a consequence, the field may spread and twist in complex ways in response to the differing flows of the different families of particles. Under these circumstances, it becomes necessary to adopt a true multifluid picture of the system.

It is the need to adhere to the multifluid model that has, in the past, made simulations of star-forming gas clouds on large-scale supercomputers prohibitively difficult.

The HYDRA Project

Since 2004, Dr Stephen O’Sullivan and Dr Turlough Downes have been advancing a wholly new approach to the simulation of astrophysical gas clouds on parallel supercomputers. HYDRA, the principal code under development during that time, has evolved from a bleeding-edge one-dimensional single-processor prototype to a three-dimensional production-class code with successful runs completed on almost 300,000 cores of the Jülich supercomputer JUGENE.

The essence of the numerical approach employed by HYDRA is that, while the physics of multifluid interactions is complex, the resulting effects on fluctuations in the magnetic field can be classified into just two types: dissipation, which smooths the field; and dispersion, which separates fluctuations at different scales. Crucially for HYDRA, by appropriate treatment of the governing equations, these effects may be considered individually. The numerical challenges of integrating the equations are then found to be more manageable: a previously obscure technique known as Super Time Stepping has been adapted from the literature to treat dissipation while a completely novel scheme called the Hall Diffusion Scheme has been developed to deal with dispersion.
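To give a flavour of how Super Time Stepping treats dissipation, the sketch below applies the standard formulation from the literature (Alexiades, Amiez and Gremaud, 1996) to a simple one-dimensional diffusion equation on a periodic grid. It is an illustration only and is not taken from HYDRA: the scalar diffusion equation stands in for the multifluid equations, and the function names, the number of substeps `n_sub` and the damping parameter `nu` are all choices made for this example.

```python
import math


def sts_substeps(dt_expl, n_sub, nu):
    """Substep sizes tau_j making up one superstep (illustrative helper)."""
    return [
        dt_expl
        / ((nu - 1.0) * math.cos((2 * j - 1) * math.pi / (2 * n_sub)) + 1.0 + nu)
        for j in range(1, n_sub + 1)
    ]


def sts_superstep(dt_expl, n_sub, nu):
    """Closed-form superstep length; equals the sum of the substeps."""
    s = math.sqrt(nu)
    a = (1.0 + s) ** (2 * n_sub)
    b = (1.0 - s) ** (2 * n_sub)
    return dt_expl * n_sub / (2.0 * s) * (a - b) / (a + b)


def diffuse_explicit(u, d_coef, dx, tau):
    """One forward-Euler step of u_t = D u_xx on a periodic grid."""
    n = len(u)
    return [
        u[i] + tau * d_coef * (u[i - 1] - 2.0 * u[i] + u[(i + 1) % n]) / dx**2
        for i in range(n)
    ]


def sts_advance(u, d_coef, dx, n_sub, nu):
    """Advance u by one superstep; returns the new state and the time covered."""
    # Stability limit of the plain explicit scheme for 1D diffusion.
    dt_expl = dx**2 / (2.0 * d_coef)
    # Individual substeps may exceed dt_expl; stability is only
    # required (and obtained) at the end of the whole superstep.
    for tau in sts_substeps(dt_expl, n_sub, nu):
        u = diffuse_explicit(u, d_coef, dx, tau)
    return u, sts_superstep(dt_expl, n_sub, nu)
```

With `n_sub = 5` and `nu = 0.05`, for example, one superstep covers roughly eleven times the ordinary explicit stability limit at the cost of five explicit substeps. Each substep uses only nearest-neighbour data, which is what makes explicit schemes of this kind attractive on massively parallel machines.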

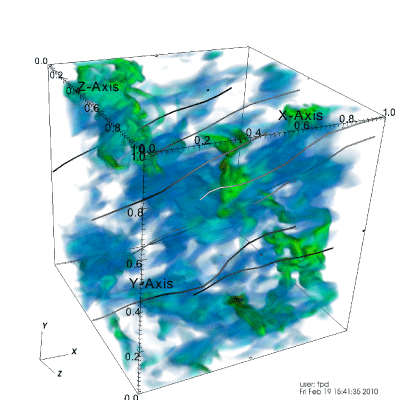

Figure 1 shows a snapshot from a recent simulation which includes the full multifluid physics of turbulence in star-forming clouds. The simulation is carried out in a cube made up of 512³ computational zones. Such a simulation is very computationally demanding and can take up to twelve days to run using 4096 cores.

Figure 1: Snapshot from a simulation of decaying turbulence in a star-forming gas cloud.
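The scale of the Figure 1 run can be made concrete with a little arithmetic. The short calculation below uses only the figures quoted in the text (a cube 512 zones on a side, 4096 cores, up to twelve days); the even split of zones across cores is an assumption made purely for illustration and says nothing about how HYDRA actually decomposes its grid.

```python
zones_per_side = 512
cores = 4096
max_days = 12

# Total number of computational zones in the cube.
total_zones = zones_per_side**3        # 134,217,728

# Upper bound on the cost of one run, in core-hours.
core_hours = cores * max_days * 24     # 1,179,648

# Zones per core, ASSUMING a perfectly even split (illustrative only).
zones_per_core = total_zones // cores  # 32,768
```

In other words, a single run of this kind can consume more than a million core-hours.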

Until recently, most of the simulations run under this project were carried out for idealized scenarios in which initially turbulent flows were simply allowed to relax, with nothing to stir the gas up. Current efforts are focused on investigating the consequences of randomly mixing the flow. Under such circumstances, the kinetic energy added via mixing may ultimately increase the strength of the magnetic fields. This so-called dynamo effect may have a profound influence on the evolution of star-forming clouds in nature. In addition, the mixing of the cloud can prevent or, perhaps counter-intuitively, actually enhance the prospect of stars like our own Sun forming.

At present, the principal institutions involved in this project are the Dublin Institute of Technology (School of Mathematical Sciences), Dublin City University (School of Mathematical Sciences & National Centre for Plasma Science and Technology) and the Dublin Institute for Advanced Studies (School of Cosmic Physics). Recent support has been provided by Science Foundation Ireland, under the Research Frontiers Programme, and the Partnership for Advanced Computing in Europe (PRACE). Support from the Higher Education Authority under Cycle 3 of the Programme for Research in Third-Level Institutions (PRTLI 3) was important during the early development phase of the code.

Central to the progress that has been made in this project are state-of-the-art Irish computational facilities, including the supercomputing ‘capability’ facilities of the Irish Centre for High-End Computing (ICHEC). Part of the code development was also carried out using facilities at the Argonne National Laboratory, USA.

Links:

http://www.dit.ie

http://www.dcu.ie

http://www.ncpst.ie

http://www.dias.ie

http://www.ichec.ie

http://www.prace-project.eu

http://www.cosmogrid.ie

http://www.sfi.ie

http://www.hea.ie

http://www.anl.gov

Please contact:

Stephen O’Sullivan

School of Mathematical Sciences, Dublin Institute of Technology, Ireland

Tel: +353 1 402 4823

E-mail:

Turlough Downes

School of Mathematical Sciences, Dublin City University, Ireland

Tel: +353 1 700 5270

E-mail: